Google Cloud: High Performance Storage Innovations for AI Workloads

Several innovations to improve accelerator utilization with high-performance storage

This is a Press Release edited by StorageNewsletter.com on April 15, 2025 at 2:20 pm

By Sameet Agarwal, VP and GM, storage, and Asad Khan, Sr director PM, storage, Google Cloud

The high-performance storage stack in AI Hypercomputer incorporates learnings from geographic regions, zones, and GPU/TPU architectures, to create an agile, economical, integrated storage architecture.

Recently, we’ve made several innovations to improve accelerator utilization with high-performance storage, helping you optimize costs and resources and accelerate your AI workloads:

- Rapid Storage: A new Cloud Storage zonal bucket that provides industry-leading <1ms random read and write latency, 20x faster data access, 6TB/s of throughput, and 5x lower latency for random reads and writes compared to other leading hyperscalers.

- Anywhere Cache: A new, strongly consistent cache that works with existing regional buckets to cache data within a selected zone. Anywhere Cache reduces latency by up to 70% and delivers up to 2.5TB/s of throughput, accelerating AI workloads and maximizing goodput by keeping data close to your GPUs or TPUs.

- Google Cloud Managed Lustre: A new high-performance, fully managed parallel file system built on the DDN EXAScaler Lustre file system. This zonal storage solution provides PB scale at under 1ms latency, millions of IOPS, and TB/s of throughput for AI workloads.

- Storage Intelligence: The industry’s first offering for generating storage insights specific to your environment by querying object metadata at scale and using the power of LLMs. Storage Intelligence not only provides insights into vast data estates, it also provides the ability to take actions, e.g., using ‘bucket relocation’ to non-disruptively co-locate data with accelerators.

Rapid Storage enables AI workloads with sub-millisecond latency

To train, checkpoint, and serve AI models at peak efficiency, you need to keep your GPUs or TPUs saturated with data to minimize wasted compute (as measured by goodput). But traditional object storage suffers from a critical limitation: latency. Using Google’s Colossus cluster-level file system, we are delivering a new approach that colocates storage and AI accelerators in a new zonal bucket. By ‘sitting on’ Colossus, Rapid Storage avoids the typical regional storage latency that comes from having accelerators reside in one zone and data reside in another.

Unlike regional Cloud Storage buckets, a Rapid Storage zonal bucket concentrates data within the same zone that your GPUs and TPUs run in, helping to achieve sub-millisecond read/write latencies and high throughput. In fact, Rapid Storage delivers 5x lower latency for random reads and writes compared to other leading hyperscalers. Combined with throughput of up to 6TB/s per bucket and up to 20 million queries per second (QPS), you can now use Rapid Storage to train AI models with new levels of performance.

And because performance shouldn’t come at the cost of complexity, you can mount a Rapid Storage bucket as a file system leveraging Cloud Storage FUSE. This lets common AI frameworks such as TensorFlow and PyTorch access object storage without having to modify any code.
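As a rough illustration of what reading through a file system mount looks like from training code, the sketch below uses only standard Python file I/O. It assumes the bucket has already been mounted with Cloud Storage FUSE; the mount path, the `TRAINING_DATA_DIR` variable, the `.bin` suffix, and the helper names are all hypothetical and not part of any Google Cloud API.

```python
import os
from pathlib import Path

# Hypothetical mount point (assumption): the bucket is expected to be mounted
# here already with Cloud Storage FUSE. Nothing below is specific to FUSE.
MOUNT_POINT = Path(os.environ.get("TRAINING_DATA_DIR", "/mnt/training-data"))


def list_shards(root: Path, suffix: str = ".bin") -> list[Path]:
    """Return all data files under root with the given suffix, in sorted order."""
    return sorted(p for p in root.rglob(f"*{suffix}") if p.is_file())


def read_shard(path: Path, chunk_size: int = 1 << 20):
    """Yield the contents of one file in chunks using ordinary file I/O."""
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def total_bytes(root: Path) -> int:
    """Read every shard under root and return the number of bytes read."""
    return sum(len(chunk) for shard in list_shards(root) for chunk in read_shard(shard))
```

Because the helpers only see a directory path, the same code runs against a local disk during development and against the mounted bucket in training; calling `total_bytes(MOUNT_POINT)` would read every shard under the mount.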

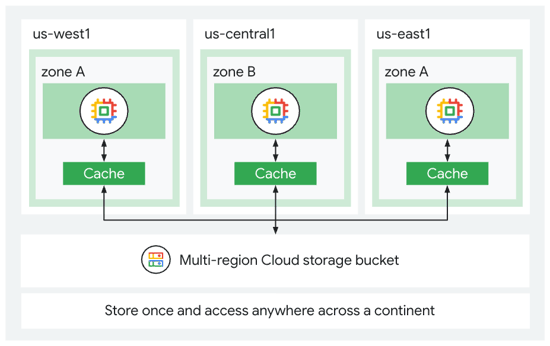

Anywhere Cache puts data in your preferred zone

Anywhere Cache is a strongly consistent zonal read cache that works with existing storage buckets (Regional, Multi-regional, or Dual-Region) and intelligently caches data within your selected zone. As a result, Anywhere Cache improves read latency by up to 70%. By dynamically caching data in the desired zone, close to your GPUs or TPUs, it delivers throughput of up to 2.5TB/s, which keeps multi-epoch training times low. Should conditions change, e.g., there’s a shift in accelerator availability, Anywhere Cache ensures your data accompanies the AI accelerators. You can enable Anywhere Cache in other regions and other zones with a single click, with no changes to your bucket or application. Moreover, it eliminates egress fees for cached data; among existing Anywhere Cache customers with multi-region buckets, 70% have seen cost benefits.

Anthropic leverages Anywhere Cache to improve the resilience of their cloud workload by co-locating data with TPUs in a single zone and providing dynamically scalable read throughput up to 6TB/s. They also use Storage Intelligence to gain deep insight into their 85+ billion objects, allowing them to optimize their storage infrastructure.

Google Cloud Managed Lustre accelerates HPC and AI workloads

AI workloads often access many small files with random I/O while needing the sub-millisecond latency of a parallel file system. The new Google Cloud Managed Lustre is a fully managed parallel file system service that provides full POSIX support and persistent zonal storage that scales from terabytes to petabytes. As a persistent parallel file system, Managed Lustre lets you confidently store your training, checkpoint, and serving data, while delivering high throughput, sub-millisecond latency, and millions of IOPS across multiple jobs, all while maximizing goodput. With its full-duplex network utilization, Managed Lustre can fully saturate VMs at 20GB/s and can deliver up to 1TB/s in aggregate throughput, while support for the Cloud Storage bulk import/export API makes it easy to move datasets to and from Cloud Storage. Managed Lustre is built in collaboration with DDN and based on EXAScaler.
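To put the two throughput figures in relation, here is a back-of-the-envelope sketch. It assumes decimal units (1TB = 1,000GB) and ideal, perfectly parallel reads, so the results are lower bounds rather than measured numbers; the function names and the 2TB checkpoint size are hypothetical.

```python
import math

PER_VM_GBPS = 20        # GB/s a single VM can be saturated at (from the text)
AGGREGATE_GBPS = 1_000  # 1 TB/s aggregate, assuming 1 TB = 1,000 GB


def vms_to_saturate(aggregate_gbps: float, per_vm_gbps: float) -> int:
    """Smallest number of fully saturated VMs that reaches the aggregate limit."""
    return math.ceil(aggregate_gbps / per_vm_gbps)


def read_time_seconds(size_gb: float, vm_count: int,
                      per_vm_gbps: float = PER_VM_GBPS,
                      aggregate_gbps: float = AGGREGATE_GBPS) -> float:
    """Ideal time to read size_gb spread evenly across vm_count VMs."""
    # Effective rate is capped by whichever limit is hit first.
    effective_gbps = min(vm_count * per_vm_gbps, aggregate_gbps)
    return size_gb / effective_gbps


print(vms_to_saturate(AGGREGATE_GBPS, PER_VM_GBPS))  # 50
print(read_time_seconds(2_000, vm_count=8))          # 12.5
print(read_time_seconds(2_000, vm_count=100))        # 2.0
```

Under these assumptions, 50 VMs reading at 20GB/s each reach the 1TB/s aggregate figure, and adding VMs beyond that point does not shorten the ideal read time.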

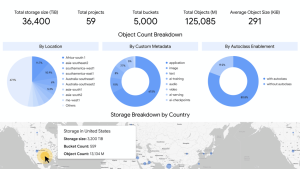

Analyze and act on data with Storage Intelligence

Your AI models can only be as good as the data you train them on. Today, we announced Storage Intelligence, a new service that can help you find the right set of data for AI training by querying the metadata across all of your buckets, improving your AI cost-optimization efforts. Storage Intelligence queries object metadata at scale using the power of LLMs, helping to generate storage insights specific to an environment. The first such service from a cloud hyperscaler, Storage Intelligence lets you analyze the metadata of millions or even billions of objects across the buckets and projects in your organization. With the insights from this analysis, you can make informed decisions about eliminating duplicate objects, identifying objects that can be deleted or tiered to a lower storage class through Object Lifecycle Management or Autoclass, or identifying objects that violate your company’s security policies, to name a few.
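To make the duplicate-object use case concrete, the sketch below groups metadata records by size and content hash. It is an illustration only and not the Storage Intelligence API: the `ObjectMeta` record, its fields, and both helper functions are hypothetical, and the source does not describe how the service itself detects duplicates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectMeta:
    """Hypothetical metadata record for one object (assumed fields)."""
    bucket: str
    name: str
    size: int  # bytes
    md5: str   # content hash taken from the object's metadata


def find_duplicates(objects):
    """Group objects sharing the same (size, md5); keep groups with 2+ members."""
    groups = defaultdict(list)
    for obj in objects:
        groups[(obj.size, obj.md5)].append(obj)
    return {key: objs for key, objs in groups.items() if len(objs) > 1}


def reclaimable_bytes(duplicates) -> int:
    """Bytes freed if only one copy in each duplicate group were kept."""
    return sum(size * (len(objs) - 1) for (size, _), objs in duplicates.items())
```

Working from metadata alone means no object contents are read, which is what makes this kind of analysis feasible over billions of objects.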


Google Cloud Storage’s Autoclass and Storage Intelligence features have helped Spotify understand and optimize its storage costs. In 2024, Spotify took advantage of these features to reduce its storage spend by 37%.

High performance storage for your AI workloads

We built our high-performance storage solutions, Rapid Storage, Anywhere Cache, and Managed Lustre, to deliver availability, high throughput, low latency, and durable architectures. Storage Intelligence adds to that, providing valuable, actionable insights into your storage estate.

Resources:

To learn more about these innovations, see these breakout sessions:

What’s new with Google Cloud’s Storage (BRK2-025)

AI Hypercomputer: Mastering your Storage Infrastructure (BRK2-020)
