Recap of 60th IT Press Tour in Silicon Valley, CA

With 9 companies: Aquila Clouds, CloudFabrix, Crystal DBA, Hammerspace, iXsystems, Komprise, MLCommons, Panzura and Volumez

By Philippe Nicolas | March 10, 2025 at 2:02 pm. Philippe Nicolas is the initiator, conceptor, and co-organizer of the event, which was first launched in 2009.

The 60th edition of The IT Press Tour was held in Silicon Valley, CA, at the end of January. The event provided an excellent platform for the press group and participating organizations to engage in meaningful discussions on a wide range of topics, including IT infrastructure, cloud computing, networking, security, data management and storage, big data, analytics, and the pervasive role of AI across these domains. Nine organizations participated in the tour, listed here in alphabetical order: Aquila Clouds, CloudFabrix, Crystal DBA, Hammerspace, Komprise, MLCommons, Panzura, TrueNAS and Volumez.

Aquila Clouds

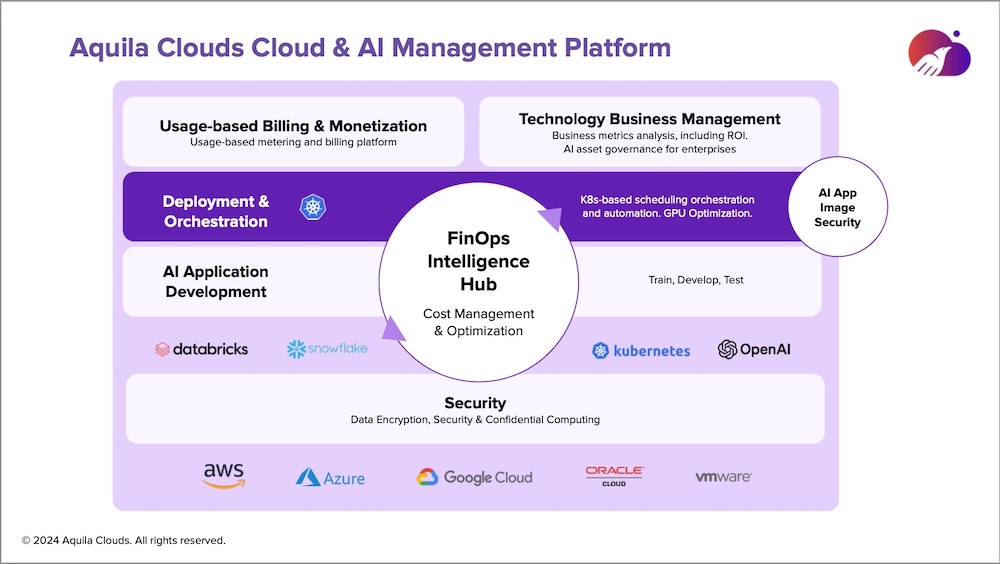

Founded in 2020 by Suchit Kaura, CEO, and Desmond Chan, CPO, Aquila Clouds develops a multi-cloud financial management platform for enterprises and MSPs. Cloud adoption continues to skyrocket while, at the same time, some workloads are being repatriated and others deployed in a hybrid way. It is therefore critical to understand precisely the cost profile of all applications, online services, and their dependencies, wherever these stack components run.

In detail, this means reporting and acting on:

- Wasted cloud spend, very often from idle or oversubscribed resources,

- The need for visibility, with granular information on cloud costs and usage patterns,

- Cloud cost profile and accuracy, replacing manual cost management with a process that can be fully automated,

- And harmonized governance across clouds, with limits in place and tracking of budget overruns.

The Aquila Clouds solution covers BillOps and FinOps for multiple clouds such as AWS, Azure, GCP and Oracle, as well as VMware on-premises environments, via the continuous monitoring, continuous optimization (CMCO) approach.

The team offers a set of AI agents that collect information about workloads, applications and infrastructure, feed the engine, and keep this feedback loop running to converge to the truth.

The trend is set and confirmed by several players and by end users: usage-based pricing and billing seem to be the way to align cost with real usage. Users have clearly put pressure on vendors, and a recent study mentioned that 60% of SaaS companies have adopted usage-based pricing models. Another survey ranks cloud cost as the #1 concern, ahead of security at #2.

Aquila Clouds provides a SaaS platform running in the cloud, with collectors that provide deep observability into cloud usage, performance, cost, data and resource utilization. The service delivers a near real-time view of all metrics. The integration of AI/ML helps optimize and right-size resource consumption, eliminate waste and plan scheduling. Reports serve as a single source of truth that can be shared across teams to better allocate budgets.

The engineering team is also working on a new product called Cloud Advisory that can capture your cloud footprint and project future consumption across various deployments, such as cloud vs. VMware.

CloudFabrix, now Fabrix.AI, Inc.

CloudFabrix made a big push during our session with a change of company name meant to accelerate its market penetration thanks to AI. CloudFabrix officially became Fabrix.AI, and it appears that AI is bigger than the cloud as the company dropped that part of its name. It reminds us of what we saw 20 years ago, when all companies promoted their .com extension in a very dynamic internet bubble.

IT environments have become more and more complex, with plenty of coupled solutions from various vendors. This is once again the industry creating its own complexity and putting pressure on end users and enterprises. It is also the result of the digitalization of the economy, nothing new here but probably a new degree of ubiquity. Everything moves fast, and this is even more true with AI present at every stage of the enterprise, with users wishing to implement AI within many of their processes to boost productivity and therefore results. But it turns out that complexity and acceleration of change pull in different directions. To address this and continue to adapt, observability is key and automation is mandatory, but AI now becomes the enabler of a new quality of service for the IT infrastructure to sustain high change rates.

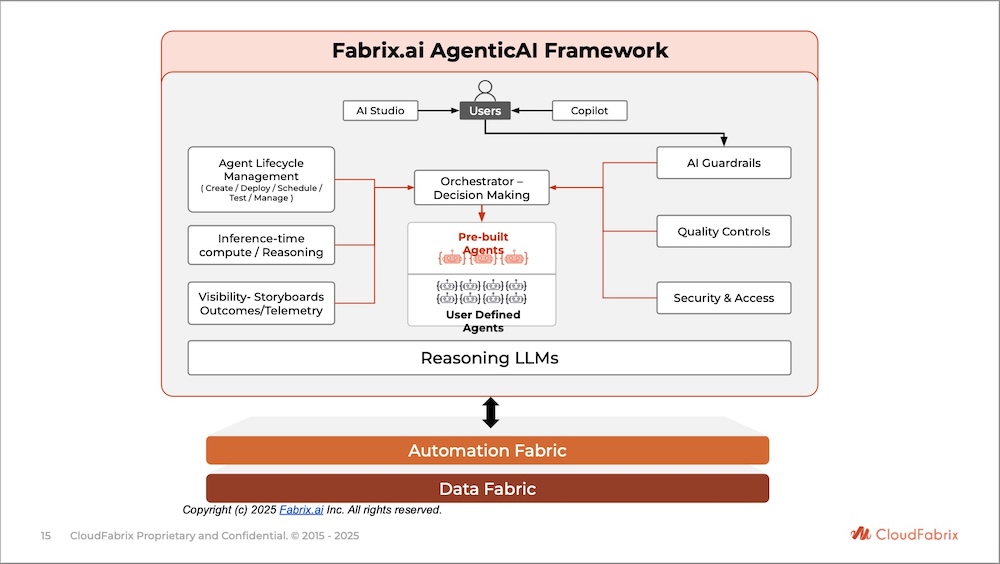

CloudFabrix has addressed this for several years with its AIOps platform, thanks to deep telemetry services and machine learning integration. Now under the Fabrix.AI umbrella, the company enters a new era, integrating AI agents to offer new levels of automation and autonomy. Various studies show that ROI for GenAI is high versus traditional AI services. Fabrix.AI adds Agentic AI capabilities to its Robotic Data Automation Fabric within its platform approach. This platform model unifies all data sources, leveraging telemetry and observability pipelines, optimizing the data collected to feed LLMs, and coupling partner data services like Splunk, Elastic… among others.

At the heart of the platform resides an AI Fabric relying on AI agents for better automation and results from inference. It orchestrates both the Data Fabric and the Automation Fabric. The engineering team has picked Nvidia NIM coupled with various models from Meta, IBM or OpenAI. Beyond these LLMs, Fabrix.AI works with key partners such as AWS, Cisco, Splunk, IBM, Nvidia, HPE, Evolutio and MinIO.

This year the company will add what it calls Storyboards, Copilot and some AI-driven telco service assurance.

Clearly, the team wishes to be at the forefront of the domain and we won’t be surprised to see some M&A discussions in the coming few quarters.

Crystal DBA

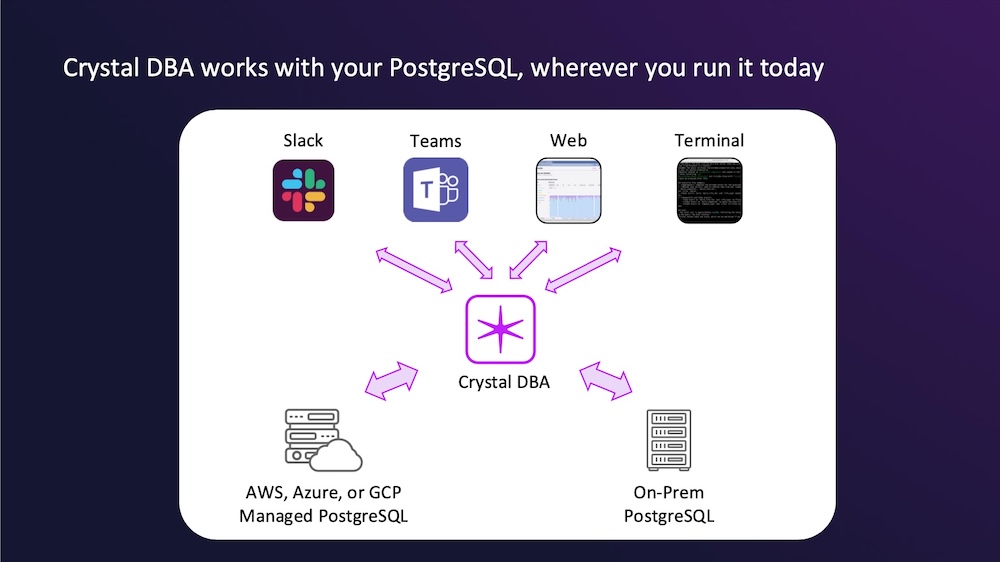

Launched in 2022 by Johann Schleier-Smith, Crystal DBA aims to improve the administration of PostgreSQL databases. The idea is to assist DBAs with specific AI-oriented services in order to make operational gains.

PostgreSQL appears to be a good pick, as this open source database, along with MySQL and a few others, is very widely adopted and deployed. On the AI side, different flavors exist today: chatbot, copilot, agent and now teammate, illustrated by this solution.

DBAs have a mission to maintain a high quality of service across reliability, security, performance and scalability. Some of these aspects are real challenges, and help from AI-powered agents is a real plus. Among classic DBA tasks, Crystal DBA is aligned with DBaaS around seamless DB installation, patching and upgrades, parameter tuning, index optimization, scalability and availability planning, and of course data protection with backup and disaster recovery.

Deployed to capture commands via natural language interaction, Crystal DBA is integrated with Slack, Teams, Web API and CLI, and coupled on the back end with the PostgreSQL engine wherever the database is deployed. And as Crystal DBA never sleeps, its knowledge of the database environment grows with time and usage.

Based on the role given to users, the solution provides advanced capabilities to developers and DevOps engineers to accelerate application development and delivery and ultimately shorten the development cycle. A demo is available online to test this very interesting approach.

Hammerspace, Inc.

Hammerspace is moving fast, with several key announcements during the last few months and key recruitments at the same time, surfing on the coming AI tsunami. Surprisingly, Jeff Giannetti, former CRO at WEKA, joined Hammerspace in the same position to continue to promote parallel file storage, this time based on pNFS.

In 2024, the firm made progress in several domains, multiplying revenue by 10 with a Net Revenue Retention greater than 330% and 75% more employees. At the same time, having been very US-centric since its inception, the company has started international sales with customers in Germany, India and the Middle East. Its new Tier0 model attracted some users, and the first opportunity came less than 90 days after the announcement.

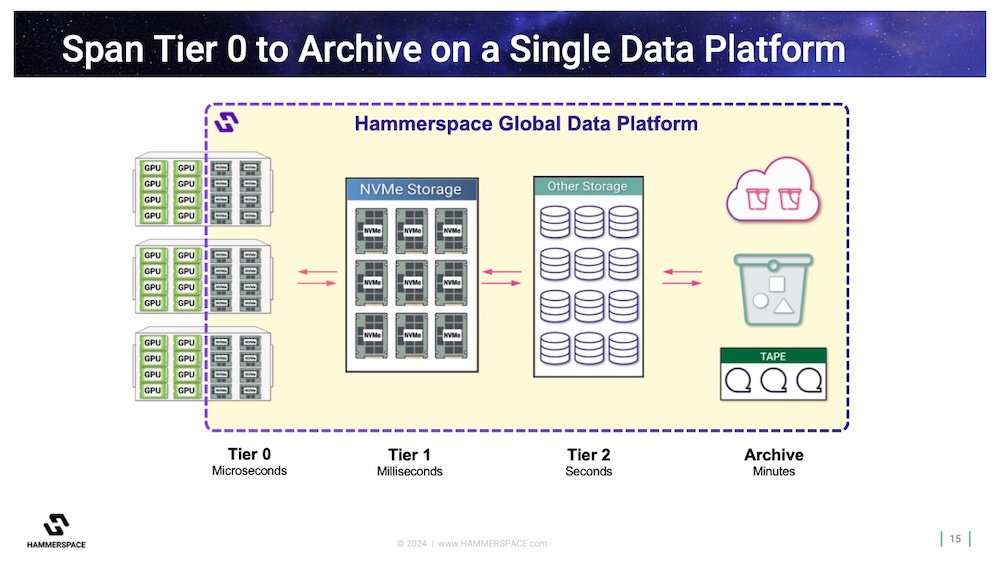

Hammerspace is known for 3 solutions: Global Data Platform aka GDP, Hyperscale NAS and Tier0.

GDP, launched a few years ago as Global Data Environment, not the best name, introduced global file services and namespace plus associated unstructured data management capabilities. It is one of the products that embody real data orchestration, a dimension above data management.

Hyperscale NAS, introduced almost one year ago, is an architecture that promotes pNFS as a foundation for high performance NAS. pNFS, a standard NFS-based parallel file system, is an asymmetric distributed file system with a set of metadata servers coupled with a back-end of data servers exposing native NFS protocols. Invented 15 years ago with support for multiple internal protocols but not really promoted by NAS vendors, it has attracted lots of interest on the market. We see other players such as Red Hat, NetApp or Dell accelerating on NFS 4.2 with this pNFS iteration.

Tier0 is the latest development. It addresses the lack of integration of the embedded NVMe SSDs delivered with GPU servers. In other words, how is it possible to leverage this storage space for computing in HPC or AI domains? Hammerspace solved that thanks to the development of the latest NFS client release and the LOCALIO feature, which lets a server and a client on the same machine bypass the RPC and XDR layers. This storage entity can therefore be orchestrated by GDP and used by HPC and AI applications.

Hammerspace appears in various analyst reports and rankings, especially in the Coldago Map 2024 for High Performance File Storage, Cloud File Storage and Unstructured Data Management.

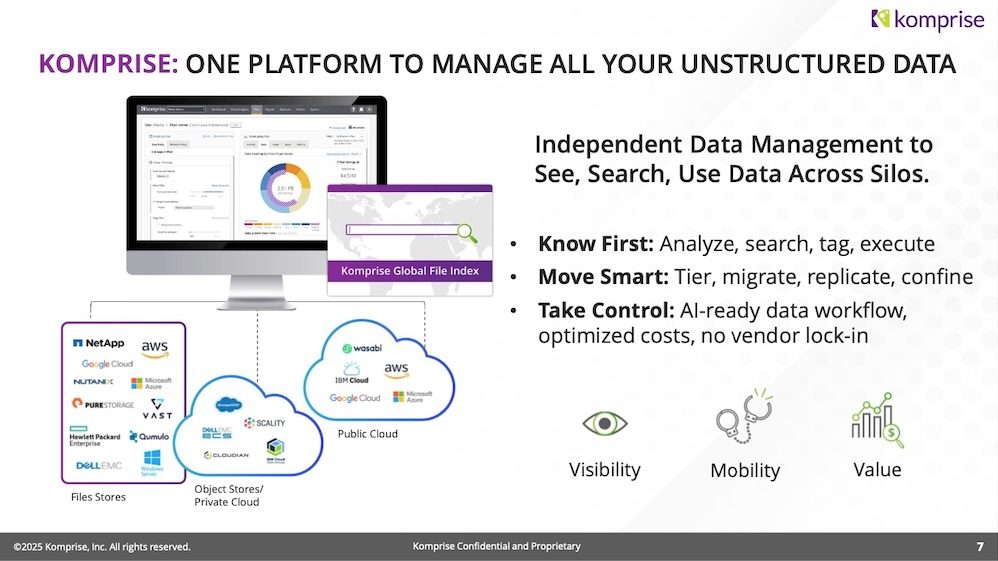

Komprise, Inc.

Recognized as a reference in unstructured data management, Komprise continues to penetrate the market at 40% growth year-over-year with its open platform to better understand the environment. Very open in terms of support for file servers, NAS, object stores and public cloud, the solution delivers several key functions in a fast-growing AI context.

For our audience, the product leverages a scalable Elasticsearch back-end grid architecture to catalog all data attributes and metadata. It moves inactive data to secondary storage to reduce cost, without any stub technique, as it stays out of the data path. The platform is very rich, with file tiering, migration, replication and of course analytics, and has more recently integrated some protection mechanisms against data absorption by AI engines.

AI represents an opportunity for everyone but also a risk for corporations, as it needs data and seeks to collect as much of it as possible to deliver better results, recommendations or, more generally, output content. It is therefore critical for enterprises to prevent AI from accessing some data, and to select which data is submitted to these engines.

Beyond the classic Komprise data platform, the company has developed the Smart Data Workflow Manager to facilitate this data selection, with exclusions and routing to AI. This service delivers 2 key benefits: faster results, as the data submitted is reduced to the right perimeter, and removal of sensitive data.

The new product iteration is able to search content across a wide variety of sources and scan for specific regular expressions as well as patterns linked to regulations. As soon as critical data is found, meaning data matching users' rules, the product tags it and excludes it from being submitted to and swallowed by AI engines. This data governance action prevents sensitive data from escaping corporate control, which is a real risk and danger. It invites users to consider some local AI within the company perimeter. The demo, showing the integration of the Komprise platform with Azure and OpenAI, was spectacular, leveraging both worlds: local data selection and curation, and external processing.
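The tag-and-exclude step described above can be illustrated with a minimal Python sketch. It is not Komprise's implementation: the rule names, the regular expressions and the `select_for_ai` helper are all hypothetical and only show the general idea of matching content against user rules before anything is routed to an AI engine.

```python
import re

# Hypothetical user rules: each name maps to an illustrative pattern.
RULES = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def tag_document(text):
    """Return the sorted names of all rules that match the text."""
    return sorted(name for name, pattern in RULES.items() if pattern.search(text))


def select_for_ai(documents):
    """Split documents into those allowed to go to AI and those excluded.

    `documents` maps a document name to its text content.
    Returns (allowed_names, {excluded_name: [matching_rule_names]}).
    """
    allowed, excluded = [], {}
    for name, text in documents.items():
        tags = tag_document(text)
        if tags:
            excluded[name] = tags  # tagged as sensitive, never submitted
        else:
            allowed.append(name)
    return allowed, excluded


if __name__ == "__main__":
    docs = {
        "roadmap.txt": "Quarterly roadmap and milestones",
        "hr.txt": "Employee SSN 123-45-6789",
        "contacts.txt": "Reach bob@example.com for details",
    }
    allowed, excluded = select_for_ai(docs)
    print("allowed:", allowed)
    print("excluded:", excluded)
```

Only the documents in `allowed` would be forwarded in this sketch; the `excluded` mapping keeps the tags so that the exclusion can be audited later.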

Named a leader for several years, Komprise confirms once again its position in the latest Coldago Research Map 2024 for Unstructured Data Management.

MLCommons

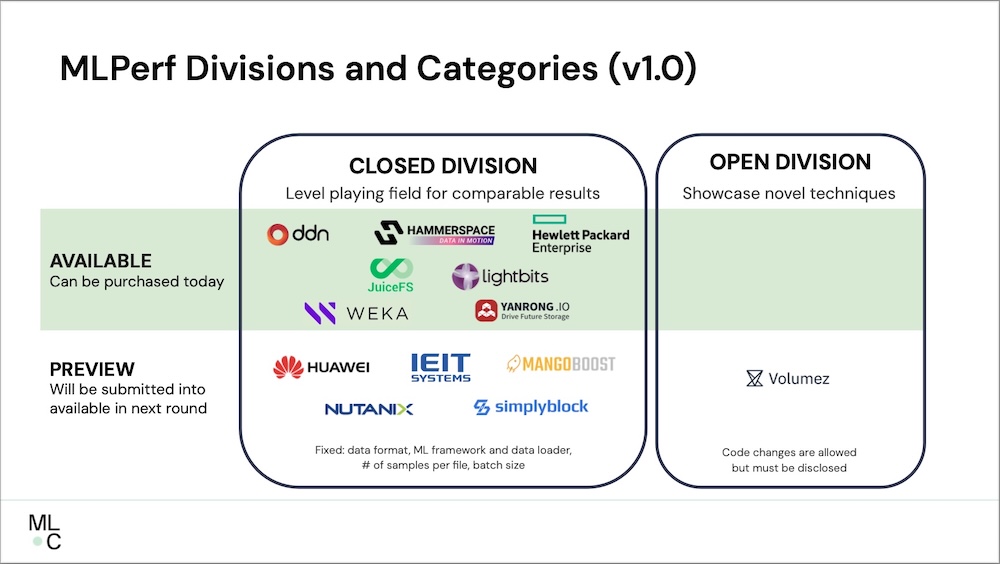

MLCommons is a consortium with 125+ members and affiliates dedicated to improving the accuracy, safety, speed and efficiency of AI, a fantastic opportunity for everyone. So far the organization has produced 56,000+ MLPerf results.

Benchmarks are useful to compare solutions, with 2 main approaches. The first aims to beat records, with configurations that may be unaffordable for companies. The second aims to deliver the best results in specific users' configurations and environments. The latter is the most interesting, as it is aligned with real use cases.

Big data has a serious positive impact with machine learning and big models but also requires new computing power. MLCommons promotes what they call the Fundamental Theorem of Machine Learning: Data + Model + Compute equals Innovation. Unlike some other benchmarks, ML generates stress for several aspects of IT: processors, architecture, algorithms, software and of course data at scale.

MLPerf, the benchmark suite operated by the organization, covers multiple domains such as training, inference in different sub-areas like datacenter, edge, mobile or IoT, storage, automotive or client.

On the storage side, MLPerf Storage was built to understand bottlenecks when datasets can't fit in memory, to help practitioners keep GPU utilization above certain thresholds, and to tune ML workloads with deep measurements. Some parameters are important, such as the framework used, the network connected to the storage unit, the storage hardware or software flavor and how it is exposed, the type of data and training, and the cache system, as it is fundamental that datasets are larger than the host node cache. In other words, it is not the memory that is tested.

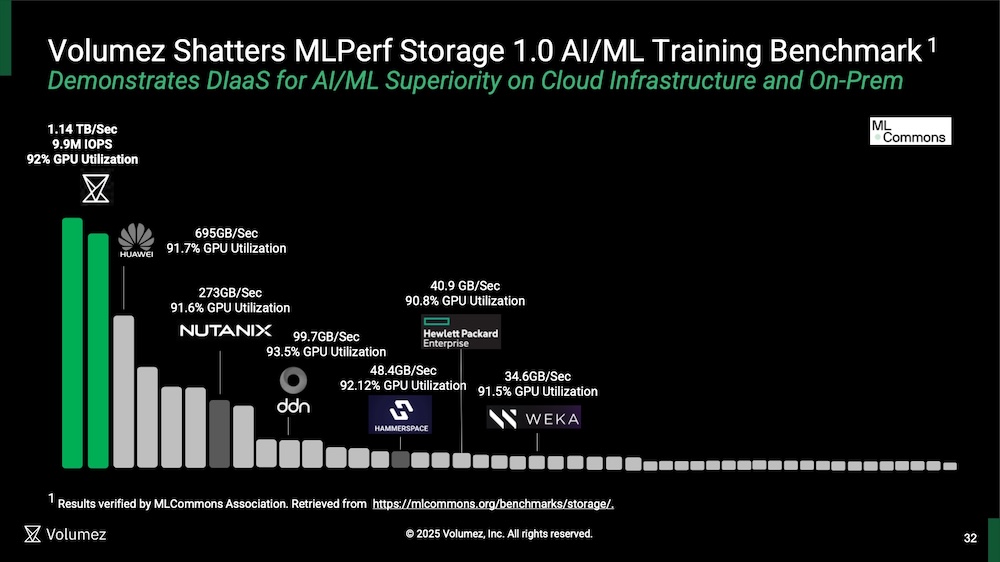

The version 1.0 campaign was released a few months ago with 13 submitting organizations producing 100+ results across 3 workloads. These 13 vendors are: DDN, Hammerspace, HPE, Huawei, IEIT Systems, Juicedata, Lightbits Labs, MangoBoost, Nutanix, Simplyblock, Volumez, WEKA and YanRong Technology. The first impression could be that many of them offer very different solutions, but the benchmark guarantees normalization of the test. More details are given here.

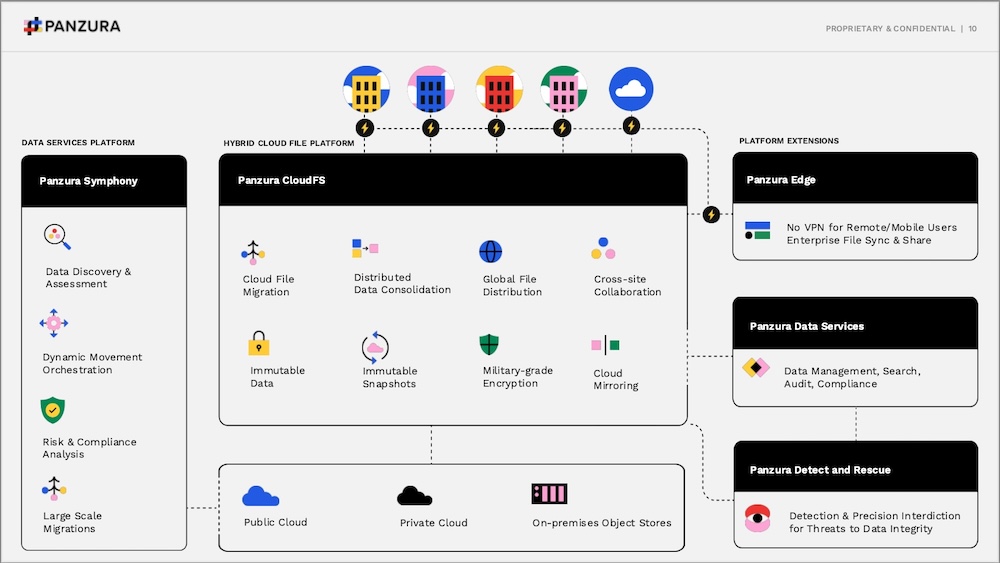

Panzura

Recognized as a pioneer in global file services, Panzura has had multiple lives since the inception of the company in 2008. The current era started in 2020 with a new majority investor that initiated a refresh of the entity with a new management team. Today Panzura promotes a hybrid cloud file and data services platform thanks to the acquisition in 2024 of Moonwalk Universal, a small data management software vendor based in Australia, recognized for its expertise for 20+ years.

The core of the portfolio is CloudFS, now in release 8.5, exposing file-based data at the edge via NAS protocols and a specific mode leveraging a central immutable data repository instantiated on private or public object storage. All metadata is present locally at the edge serving entities, which are connected to other peers to maintain consistency via immutable snapshots and global file locking. Every 60 seconds, data deltas from snapshots are shipped by edge devices, meaning that the global RPO is a maximum of 60 seconds. Other data services are provided, such as data reduction, encryption, global search and audit, plus ransomware detection and alerting. For light users on mobile, Panzura Edge exists without the need to install any VPN.

The latest release introduced several key core features. The first is regional store, which supports multiple central data repositories with synchronization across them. Instead of mirroring controlled by the edge access point, different local servers now rely on their "own" regional back-end, delivering better access times. The second is Instant Node, which facilitates a hardware refresh or the repair of a failed node. Among other capabilities, we notice storage tier support for Azure, already available for AWS.

Panzura has been recognized by Coldago Research as being among the few leaders in the Map 2024 for Cloud File Storage.

Beyond this, the company has jumped into unstructured data management with Symphony as the continuation of Moonwalk. It delivers data discovery and assessment with full collection and reporting, evaluates risk and compliance alignment with the integration of GRAU Data MetadataHub and IBM Storage Fusion, and obviously provides data movement functions to optimize data residence and placement across tiers or sites.

On the business side, 86% of the deals come from resellers. MSPs are also key for adoption, as are cloud providers' marketplaces and even direct sales. The product direction is oriented towards autonomous data infrastructure for hybrid and multi-cloud environments.

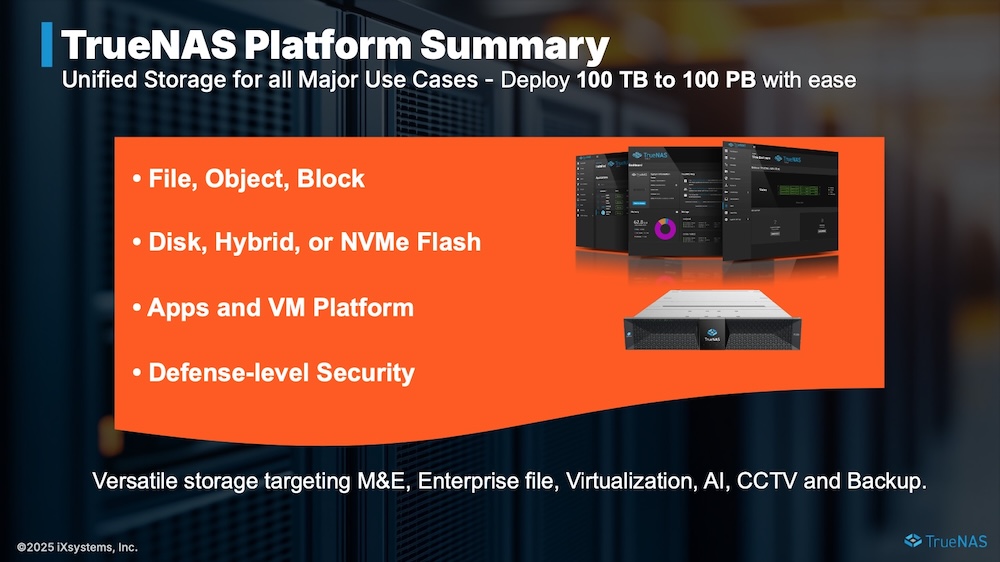

TrueNAS, also known as iXsystems, Inc.

Famous for its TrueNAS open enterprise storage operating software, downloaded millions of times, iXsystems insisted on its new corporate message, wishing to be seen as TrueNAS to keep things consistent and aligned. The company is historically a compute and storage server manufacturer and vendor, and has sold its server business to Amaara Networks. So now, iXsystems sells only storage products. 2024 was a strong business year with increases in several domains: capacity deployed, TrueNAS Enterprise Appliances adoption and deals larger than $1 million.

The second key announcement is the harmonization of the software line with 2 flavors: TrueNAS Enterprise and TrueNAS Community Edition. The first is obviously richer, with enterprise support, and the second can be seen as an open source SDS with community support. To summarize, TrueNAS exposes multiple access modes, with file and block protocols plus an object API, going beyond what the product name suggests. Internally, data and storage are managed by services built on top of OpenZFS.

The engineering team developed TrueCloud Backup powered by Storj, a decentralized online storage service, serving as a target for backup images.

The appliance line is very wide with F-, M-, H-, R- and Mini Series, all aligned to different usages and various hardware profiles – full NVMe or hybrid, to support performance, capacity, density, power, cost oriented goals or branch office.

In 2025, the team will accelerate on containers and Kubernetes, fast copy and deduplication, cloud backup, large scale deployments and trimode NVMe with HA. Some of these elements will be available or enhanced with the Fangtooth release, TrueNAS 25.04, plus RAIDZ expansion, SMB block cloning for Veeam, iSCSI XCOPY block cloning for VMware, iSCSI and NFS over RDMA, LXCs and improved VMs…

At the same time, a new 2U appliance, the H30, has been announced with 2 HA controllers, 20 cores, 256GB, 8GB/s, FC up to 32Gb and Ethernet up to 100GbE. It offers 720TB with only NVMe SSDs or 300TB with only HDDs. A new F-Series should be unveiled soon with new density and performance dimensions.

Like a few other companies such as WEKA and Resilio, TrueNAS is used at the Vegas Sphere to support the 10k video resolution environment.

Volumez

Volumez, a young storage software infrastructure player, is accelerating on AI storage optimization with its Data Infrastructure as a Service (DIaaS) approach. Unsurprisingly, AI puts real pressure on the IT and storage infrastructure, especially in the training phase. A cloud-aware approach seems to be an attractive choice for its flexibility and consumption model, with ROI in mind of course, offering self-service AI infrastructure for high performance and a potentially compact footprint.

DIaaS introduces a way to create, control and manage NVMe-based storage volumes with a fully Linux-native data path, without any dedicated storage controller, and with a comprehensive control plane running in the cloud as a SaaS service. Only a config agent has to be installed on hosts. It is linked to the outside engine to receive config profiles, and then drives standard local Linux tools to build storage volumes from individual NVMe partitions with mdadm, lvm or similar OS commands. The engine profiles and catalogs all available NVMe devices with all their characteristics to allow a fine choice of devices for future usage. These configuration profiles are sent on demand to deployed agents to dynamically compose volumes across servers. Then disk file systems are created on these volumes and assigned to jobs and workloads; these file system volumes could even be exposed via NFS. DIaaS today supports AWS, Azure, GCP and OCI, and 2 configs are referenced: hyperconverged with local SSDs, and flex with SSDs outside of the GPU cluster.
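To make the idea of an agent turning a configuration profile into standard Linux commands more concrete, here is a minimal Python sketch. It is an assumption-laden illustration, not the Volumez agent: the profile format, the `plan_volume` helper and the device names are hypothetical, and mdadm, LVM and mkfs.xfs are used only as examples of such standard tools. The commands are built and printed, never executed.

```python
import shlex


def plan_volume(profile):
    """Translate a hypothetical config profile into Linux commands.

    Returns a list of argv lists; nothing is executed here.
    """
    devices = profile["devices"]
    md = profile["md_device"]
    vg = profile["volume_group"]
    lv = profile["logical_volume"]
    return [
        # Assemble the NVMe partitions into one software RAID device.
        ["mdadm", "--create", md,
         f"--level={profile['raid_level']}",
         f"--raid-devices={len(devices)}", *devices],
        # Layer LVM on top: physical volume, volume group, logical volume.
        ["pvcreate", md],
        ["vgcreate", vg, md],
        ["lvcreate", "-l", "100%FREE", "-n", lv, vg],
        # Create a disk file system on the resulting volume.
        ["mkfs.xfs", f"/dev/{vg}/{lv}"],
    ]


if __name__ == "__main__":
    # Hypothetical profile, as it might be received from a control plane.
    profile = {
        "devices": ["/dev/nvme0n1p1", "/dev/nvme1n1p1"],
        "md_device": "/dev/md0",
        "raid_level": 0,
        "volume_group": "vg_ai",
        "logical_volume": "lv_train",
    }
    for cmd in plan_volume(profile):
        print(shlex.join(cmd))
```

Keeping the plan as data rather than running it directly makes it easy to review or log what an agent would do on a host before anything touches the devices.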

With DIaaS in place, it can serve several use cases, but AI/ML training appears to be a good candidate. It addresses both performance and cost, is very simple to work with thanks to a very limited learning curve, and is available horizontally across multiple cloud providers.

The team participated in the MLPerf 1.0 benchmark campaign, among the 13 players, with the following setup: 137 application nodes connected to 128 media nodes with 60TB NVMe SSD per node. Published results show 9.5M IOPS, 1.10TB/s throughput and 92% GPU utilization with 411 simulated GPUs; the GPU utilization threshold is important for the benchmark validation. Volumez demonstrated interesting metrics like the performance/density ratio, pure performance and automation level, and pushed the data pipeline yield.
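As a quick back-of-the-envelope reading of these figures, the short Python sketch below derives a few per-node averages. It assumes decimal units (1TB = 1,000GB), an even spread of load across nodes, and that the 60TB figure applies to each media node; none of these assumptions is stated in the published results.

```python
# Figures quoted in the text above.
APP_NODES = 137
MEDIA_NODES = 128
CAPACITY_PER_MEDIA_NODE_TB = 60  # assumption: 60TB is per media node
TOTAL_IOPS = 9.5e6
TOTAL_THROUGHPUT_TB_S = 1.10
SIMULATED_GPUS = 411


def per_node_summary():
    """Derive simple averages, assuming an even spread across nodes."""
    return {
        "gpus_per_app_node": SIMULATED_GPUS / APP_NODES,
        "throughput_per_media_node_gb_s":
            TOTAL_THROUGHPUT_TB_S * 1000 / MEDIA_NODES,
        "iops_per_media_node": TOTAL_IOPS / MEDIA_NODES,
        "raw_capacity_tb": MEDIA_NODES * CAPACITY_PER_MEDIA_NODE_TB,
    }


if __name__ == "__main__":
    for name, value in per_node_summary().items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions, each application node simulates 3 GPUs and each media node sustains on average roughly 8.6GB/s and about 74,000 IOPS, for 7,680TB of raw capacity overall.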

Volumez has been named a Coldago Research Gem 2025.
