Industry Predictions 2025

An incredible year in perspective - 50+ vendors share predictions

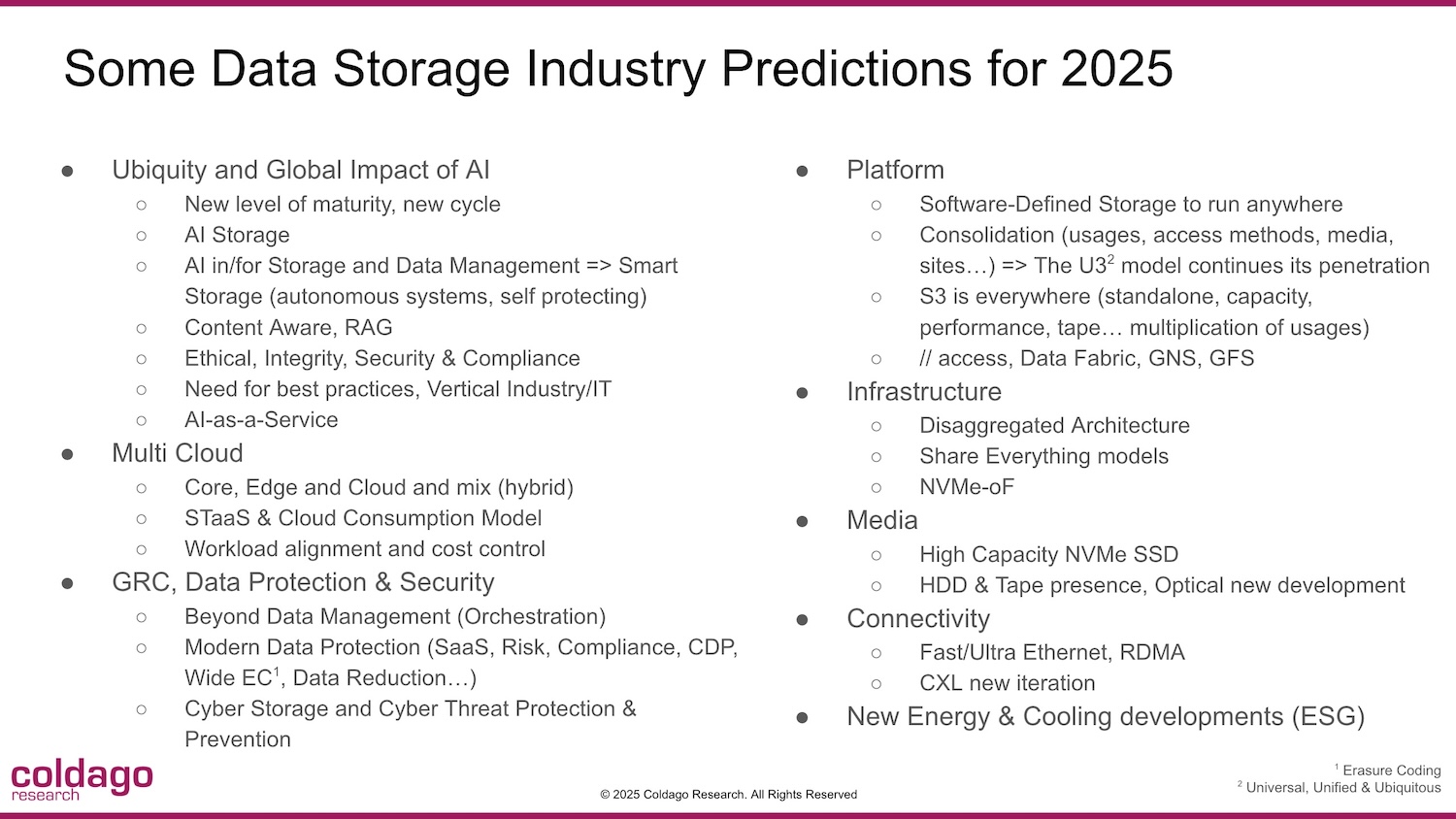

By Philippe Nicolas | January 20, 2025 at 2:02 pm

We collected more than 50 inputs from industry vendors that give, in their context, some market directions. Before presenting them, we summarize many of these inputs in a slide. The coming year 2025 will surely be another amazing one for our industry thanks to the rapid innovation of the sector.

- As organizations look to optimize their storage strategies, the rise of storage virtualization is making it easier to interconnect various data storage technologies. Businesses can maximize their existing investments and avoid vendor lock-in by leveraging a data fabric—an architecture that unifies cloud, disk, tape, and flash storage into a single, logical namespace.

- This trend towards virtualization allows for a more flexible approach to data management, enabling businesses to mix and match technologies to meet specific needs. For example, high-performance workloads can run on flash storage while archival data is moved to tape.

- The ability to integrate various storage solutions seamlessly will be a key enabler for organizations aiming to improve efficiency, reduce costs, and scale their operations.

- Managing Extreme Data Volumes Becomes a Top Priority

As data volumes grow exponentially, businesses will face significant challenges in scaling their storage infrastructures. High-performance and scalable solutions, particularly for unstructured data, will be critical to ensure efficiency and cost control.

- AI Revolutionizes Unstructured Data Storage

Artificial Intelligence will play a key role in optimizing unstructured data storage. Through intelligent data classification, deduplication, and tiering, AI will automate data placement across storage tiers, reducing costs while improving accessibility and performance.

- Proactive Data Governance for Very Large Datasets

Companies will adopt proactive governance strategies powered by AI to manage data lifecycles, compliance, and redundancy at scale. These approaches will ensure that vast amounts of data remain secure, accessible, and aligned with evolving business priorities.

- The rise of high-resolution imaging and AI applications!

Exploding file sizes of images and videos, for example for diagnostics or AI-based decision-making, will accelerate data storage demand. Unprecedented amounts of audit data to document AI decisions must be preserved to mitigate potential liability. This will fuel the need to scale data storage technologies faster, which will become increasingly difficult as those technologies mature, leading to a looming shortage of data storage supply.

- Permanent file formats in focus!

With emerging solutions for true permanent data storage (WORF: write once, read forever), the debate over “permanent file formats” will intensify. The digital age is simply too young and volatile to have brought forth solutions for real long-term data storage, and digital processing of information was not designed to keep this information for longer periods of time in the first place.

- Accessible cold data on the rise!

Applying AI requires data to be accessible. Thus, blobs of cold data frequently need to be warmed up quickly and cooled down equally quickly. The “hot-warm-cold” storage stack will change significantly: an Active Archive will be required to move data across the storage tiers on request to create insight using AI. Thus, cold data must remain readily accessible in storage.

- The Rise of the “Observability Engineer”

- Convergence of Roles: With the unification of AIOps, Observability, and Security, a new breed of “Observability Engineer” will emerge. These professionals will possess a holistic understanding of IT systems and be proficient in leveraging AI and data-driven insights to ensure system health, performance, and security.

- Skill Shift: Traditional subject matter experts will need to adapt and acquire skills in data analysis, AI/ML, and automation to remain relevant in this evolving landscape.

- Data Fabric Becomes Mission-Critical

- Central Nervous System: Data Fabric will evolve into the central nervous system of IT operations, unifying data from disparate sources and providing a single source of truth for all operational insights.

- Increased Investment: Organizations will significantly invest in Data Fabric technologies to break down data silos, improve data quality, and enable real-time analytics and automation.

- The Rise of “Explainable AI” in Observability

- Trust and Transparency: As AI plays a larger role in operational decision-making, the need for explainable AI will become paramount. Organizations will demand transparency into how AI models arrive at their conclusions to build trust and ensure accountability.

- Debugging and Improvement: Explainable AI will facilitate debugging and improvement of AI models, leading to more accurate and reliable predictions and recommendations.

- Security and Observability Converge Further

- Unified Platforms: Security and observability will be increasingly integrated into unified platforms, enabling a holistic view of system health, performance, and security posture.

- AI-Powered Threat Detection: AI and ML will be used to detect and respond to security threats in real-time, leveraging insights from both security and observability data.

- Sustainability Takes Center Stage

- Green IT: Observability tools will be used to monitor and optimize energy consumption in IT infrastructure, contributing to sustainability goals and reducing environmental impact.

- Carbon Footprint Tracking: Organizations will leverage observability data to track and report on their carbon footprint, supporting their ESG (Environmental, Social, and Governance) initiatives.

These predictions paint a picture of a future where observability is no longer a niche domain but a core function of IT operations, driven by AI, automation, and a unified approach to data management. Organizations that embrace these trends will be well-positioned to navigate the complexities of the digital landscape and deliver exceptional user experiences.

- AI Workloads Reshape Storage Architectures

As we enter 2025, the rapid evolution of AI will significantly impact data storage, management, and infrastructure. Traditional block and file storage systems will struggle to meet the exponential growth of AI workloads, which require massive parallel data access and low latency across vast datasets. This will drive the adoption of distributed object storage with enhanced parallel access and sophisticated metadata management.

Geo-distributed storage systems will integrate compute capabilities to enable data preprocessing closer to where data resides, blurring the lines between storage and compute. Advanced metadata tagging and indexing will be critical to accelerate search, track data lineage and ensure reproducibility of AI training results.

- Acceleration of AI-Driven Storage Management

AI-driven storage management will reach a critical inflection point, with organizations demanding solutions that can optimize data placement, predict capacity needs, and manage data lifecycle based on AI analytics. The distinction between active and archive storage will blur as AI systems require rapid access to historical data.

- Hybrid Cloud Storage Evolution with Edge Computing Focus

Hybrid cloud storage architectures will evolve to support edge computing and 5G networks, with S3-compatible object storage systems needed to enable seamless data mobility between edge locations, on-premises data centers, and multiple cloud providers. Storage vendors will further develop geo-distribution capabilities to offer enhanced support for edge use cases while maintaining enterprise-grade reliability and security.

- Cyber resilience continues to increase in importance

In the next year we’ll see organizations continue their pursuit of cyber resilience, as more companies understand their real objective isn’t just preventing a breach but minimizing the business impact a breach would cause. As attackers get access to the same technology that companies have, and the effectiveness of these attacks continues to increase, investing in breach defense isn’t enough. Organizations are forced to execute a balanced cyber security strategy prioritizing investments that minimize the impact if breached, like hardening business operations or increasing an organization’s ability to recover core business processes quickly at scale.

- Accountability for cyber resilience becomes the responsibility of CIO organizations

The increased focus on cyber resilience requires operational integration with the IT teams. In 2025 organizations will question the separation of IT and Cyber Security disciplines and there will be a deliberate move to have cyber security controls be an attribute of IT services. This will reduce the friction between InfoSec and IT and ensure the security of IT assets is in alignment with the priorities of the business. This transition will lead to significant changes in the CISO role where accountability for the day-to-day work required to build and maintain cyber resiliency transitions to the CIO.

- Quantum computing overtakes AI as the number 1 cyber security concern

Many of the fears expressed after the arrival of ChatGPT and the presence of AI in everyday life, such as autonomous systems threatening human engagement and AI’s potential to brute force data encryption, have not materialized. This is not meant to downplay the impact that we’ve seen with the emergence of AI; it is meant to illustrate the significantly greater impact we are going to see from the advent of quantum computing. In 2025 organizations need to at least develop a plan to migrate to encryption that isn’t vulnerable to quantum computing attacks.
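A first step in such a migration plan is usually an inventory of where quantum-vulnerable public-key algorithms are in use. The sketch below is only an illustration of that idea: the inventory format, the system names, and the function name are hypothetical, and the algorithm list covers only common public-key schemes based on factoring or discrete logarithms, which Shor's algorithm targets.

```python
# Public-key algorithms whose security rests on factoring or discrete logs,
# which Shor's algorithm would break on a sufficiently large quantum computer.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA"}


def needs_migration(inventory: dict[str, str]) -> list[str]:
    """Return the (sorted) systems whose algorithm is in the vulnerable set.

    `inventory` maps a hypothetical system name to the algorithm it uses.
    """
    return sorted(
        system
        for system, algorithm in inventory.items()
        if algorithm.upper() in QUANTUM_VULNERABLE
    )


# Hypothetical inventory, for illustration only.
inventory = {"vpn": "RSA", "backup": "AES-256", "signing": "ecdsa"}
flagged = needs_migration(inventory)
```

A real inventory would also need key sizes, protocol versions, and data retention periods, since data that must stay confidential for many years is the most exposed.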

- The Year of Data Classification

Data Classification is the CORE to efficient, secure and compliant DSPM and AI

- GRC solutions are MUST haves versus nice to haves!

Whether driven by NYDFS, HITRUST or GDPR, companies must demonstrate that they are implementing GRC standards and defensible processes.

- Unstructured data bloat will no longer be accepted

Budgets will mandate the remediation of ROT (Redundant, Obsolete and Trivial) data.
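As a rough illustration of the "redundant" part of ROT remediation, the sketch below groups files by a SHA-256 content hash so that byte-identical copies can be reviewed. The function names and the choice to scan a single directory tree are assumptions made for this example, not a description of any vendor's product.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_redundant_files(root: Path) -> dict[str, list[Path]]:
    """Map content digest -> paths, keeping only digests seen more than once."""
    groups: dict[str, list[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups[file_digest(path)].append(path)
    return {digest: paths for digest, paths in groups.items() if len(paths) > 1}
```

Identifying obsolete and trivial data typically needs more context (last access, ownership, business relevance), which a content hash alone cannot provide.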

- Multi-Cloud Adoption Will Be the Global Standard

By 2028, multi-cloud adoption will be the standard for enterprises worldwide, driven by tightening regulatory frameworks, evolving privacy mandates, and the growing need for data sovereignty. According to IDC, nearly 90% of Asia/Pacific enterprises already deploy significant workloads across multiple public clouds, a trend mirrored globally as organizations seek to reduce vendor lock-in, ensure data availability, and meet complex compliance demands. As multi-cloud environments mature, businesses will prioritize unified management, optimized workload placement, and seamless data mobility across cloud platforms.

- Cyberstorage Will Become a Core Security Standard

By 2028, built-in cyberstorage capabilities will be a standard feature in all enterprise storage products, up from just 10% in 2023, according to Gartner. Advanced technologies like AI-powered ransomware protection and integrated honeypot decoys at the storage layer will reshape how businesses defend against cyber threats. These features will enable real-time threat detection, automated attack containment, and enhanced resilience, transforming enterprise storage from passive data repositories into active components of comprehensive cybersecurity strategies.

- Sustainability Will Drive Compliance-Centered IT Strategy

As environmental regulations tighten and data storage needs surge, businesses will be compelled to adopt sustainable practices such as energy-efficient data centers, carbon-neutral cloud deployments, and eco-friendly storage solutions. Enterprises balancing sustainability with legislative mandates will gain a competitive edge, turning compliance from a cost center into a driver of long-term value creation.

- Cyberstorage as a Vital Defense Against Modern Threats

Cyberstorage will emerge as a key component of IT security, integrating features like immutability, real-time malware detection, and seamless backup integration to protect data across core, edge, and cloud environments. With rising ransomware threats and stringent compliance requirements, storage solutions with tamperproof capabilities will become essential, helping organizations meet regulatory demands while safeguarding operations.

- Shifting Virtualization Strategies for Greater Flexibility

Broadcom’s acquisition of VMware will continue to prompt organizations to rethink virtualization strategies, prioritizing cost-efficiency and vendor independence. Diversified approaches, including alternatives like Hyper-V and KVM-based solutions such as Proxmox VE, will gain traction as enterprises seek agility. Storage vendors will develop platforms that integrate seamlessly across SAN, HCI, and dHCI deployments, ensuring freedom of choice without compromising performance or compatibility.

- AI Transforming Enterprise Storage and Operations

AI will drive demand for storage capable of supporting high-performance workloads with low latency, scalability, and real-time data processing. Simultaneously, organizations will embed AI into storage systems to automate management, enhance data placement, and optimize performance. This dual role of AI will enable proactive, self-optimizing infrastructures, positioning enterprises to meet today’s demands and the future challenges of AI-driven innovation.

- The amount of unstructured data stored in both public cloud and private cloud environments will continue to grow. The impact of unstructured data management solutions that give customers the ability to manage data no matter where it is located will increase as the data in multiple environments accumulates. It’s no longer realistic to ignore the fact that, in most organizations, data lives in a hybrid environment and global data management is required.

- The adoption of Unstructured Data Management solutions will continue to increase in 2025 as customers wrestle with cost increases due to endless storage expansion, higher consumption of cloud storage, new workload adoption, increasing regulations, and ever-growing data protection requirements.

- Backup data will be stored in multiple locations: on-premises data centers, outsourced data centers, and the public cloud.

- There will be a continued push to improve data integrity to ensure that data can be restored when required. Third-party certifications and government regulations that attest to data integrity and security, such as the Common Criteria, DoDIN APL, NIS 2, DORA, etc., will continue to grow in popularity.

- Business continuity will take center stage in 2 major areas:

- Ability to survive or quickly recover from cyber-attacks, including a ransomware attack. These attacks will increase in 2025.

- Ability to recover from a site disaster, whether man-made such as fire or natural such as earthquake, tornado, hurricane, flood, etc. There are major wildfires in the news on a weekly basis and natural disasters are happening more frequently and with greater impact and destruction due to climate change. You will see more and more requirements for a tertiary hop to a third disaster recovery site in order to ensure multiple copies of data across multiple locations to keep the business running in the event of a site disaster.

- AI/ML Data Deluge Generates Need for Active Archiving

AI/ML will have a profound impact on the need for all types of storage. AI and ML applications work with and produce vast amounts of data and organizations will want to maintain access to data sets for longer periods of time to support a continuous cycle of data ingest, analytics and inference. When AI/ML data sets get cool or cold, a dramatic increase in readily accessible, but cost-effective and energy-efficient storage capacity in the form of active archives will be needed to support this model. An active archive solution can leverage the unique performance and economic benefits of SSDs, HDDs, tape, or the cloud (public or private) in a single virtualized storage pool.

- Large Scale Tier 2 Data Centers Increase Tape Adoption

Taking a cue from the largest and most successful cloud service providers, an increasing number of large scale “tier 2” data center operations including secondary cloud service providers, will follow the leaders and deploy on-premises tape systems. This will likely take the form of standard S3 compatible object storage on modern tape systems to facilitate true hybrid infrastructure, getting the most out of on-prem plus cloud. Having an instance of cold storage on-prem reduces cost, latency and risk while maintaining flexibility and compatibility with the big cloud service providers.

- Video Surveillance Market Will Benefit from LTO Tape

The storage of video surveillance content is reaching a crisis as data intensive, high-definition cameras proliferate. But many users can’t afford high-res storage. Solutions will continue to emerge in 2025 that combine high speed HDD storage with efficient LTO tape storage to create a 2-tier strategy that balances access speed, cost, and long-term retention of quality footage. In the “active tier”, high-speed hard disk storage manages the most recent video content. In the “active archive tier”, high capacity LTO tape technology can be leveraged for the long-term retention of hi-res video content, providing a cost-effective, energy-efficient, and reliable solution. This ensures access to older video footage while relieving expensive and energy-intensive high-speed storage in the active tier.

- Embedded Metadata: The Key to Data Quality and Speed

The explosive growth of unstructured data from machine-generated sources like microscopes, genomic sequencers, sensors, and cameras will hit a tipping point in 2025. Embedded metadata will be essential for AI workflows, automating the extraction and provisioning of critical content and context directly to applications. This “meta” data fabric will span entire data ecosystems, dramatically improving the speed, accuracy, and relevance of research and discovery, while reducing errors and data preparation bottlenecks.

- Transforming Storage Management with Content-Aware Orchestration

Embedded metadata will redefine traditional storage systems by enabling content-aware orchestration. Instead of relying on metrics like file age, storage will be dynamically optimized based on the actual content and contextual value of each file. Organizations will achieve significant cost savings through intelligent tiering, maximizing the use of low-cost storage while maintaining rapid access to high-value data and insights.

- Accelerating AI-Driven Innovation Across Industries

By leveraging metadata-driven insights, organizations will gain real-time access to critical information without retrieving full files. This innovation will streamline workflows in AI systems such as Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs). Faster, more accurate insights will enhance applications in healthcare, scientific research, and analytics, reducing computational overhead and fueling competitive advantage.
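The contrast between age-based tiering and the content-aware orchestration described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `FileRecord` fields, the tag names, the tier labels, and the 90-day threshold are all hypothetical and are not taken from any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class FileRecord:
    name: str
    age_days: int
    tags: set[str] = field(default_factory=set)  # hypothetical embedded metadata


# Hypothetical tags that mark a file as high-value regardless of its age.
HIGH_VALUE_TAGS = {"active-study", "training-set"}


def tier_by_age(rec: FileRecord, cold_after_days: int = 90) -> str:
    """Traditional policy: only file age decides placement."""
    return "cold" if rec.age_days > cold_after_days else "hot"


def tier_by_content(rec: FileRecord, cold_after_days: int = 90) -> str:
    """Content-aware policy: metadata tags can override file age."""
    if rec.tags & HIGH_VALUE_TAGS:
        return "hot"
    return tier_by_age(rec, cold_after_days)


# A 400-day-old scan still tied to an active study stays on fast storage
# under the content-aware policy, while the age-only policy would archive it.
old_scan = FileRecord("scan.tiff", age_days=400, tags={"active-study"})
```

The design choice here is that metadata only ever promotes a file; untagged files fall back to the age rule, so the content-aware policy never behaves worse than the traditional one for data it knows nothing about.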

- Breaking Down Data Silos Will Become a Central Focus for AI & Data Architects

Breaking down data silos will emerge as a critical architectural concern for data engineers and AI architects, reshaping how organizations approach their data strategies to enable the holistic insights and decision-making that modern AI systems demand. The focus will shift toward seamless data integration and ecosystems where data is easily accessible, shareable, and actionable across all domains, with new ways to simplify data integration and foster greater collaboration across traditionally siloed environments.

- 2025: The Rise of Collaborative Global Namespaces

The importance of how companies manage global namespaces will reshape data-handling strategies across the industry. Not all global namespaces will be created equal: some will offer only read-only capabilities, while others will enable active read-write functionality. Companies will prioritize implementing global namespaces that allow unified data views and support active read-write capabilities to achieve more collaborative, efficient data environments that are functional for modern workloads.

- GPU-Centric Data Orchestration Becomes Top Priority

One of the key challenges in AI and machine learning (ML) architectures is the efficient movement of data to and between GPUs, particularly remote GPUs. GPU access is becoming a critical architectural concern as companies scale their AI/ML workloads across distributed systems. Expect a surge in innovation around GPU-centric data orchestration solutions that streamline data movement, prioritize hardware efficiency, and enable scalable AI models that can thrive in distributed and GPU-driven environments.

- Consuming more than 3% of global power, data centers have a larger carbon footprint than the airline industry. Energy-efficient data management will become even more central to driving operational effectiveness and optimizing financial outcomes as we move forward.

- Commoditized data hardware and systems will give way to those that can deliver high performance and security in a way that prioritizes energy and cost efficiencies. The rise of integrated systems will revolutionize Data Center Infrastructure Management (DCIM), while Sustainability Operations (SustOps) will emerge as a vital framework.

- Gartner predicts that 75% of IT organizations will implement targeted data center infrastructure sustainability initiatives by 2027. However, the data industry is still in the early stages of recognizing the need for sustainability within data centers.

- AI advancements will unveil fresh possibilities for green algorithms and IT practices, enhancing energy efficiency across not just the data racks but all aspects of data center operations.

- The ‘pants-on-fire’ approach to AI in 2024 will give way to enterprises approaching and delivering AI with greater care in 2025. Companies will do more work on the front end of their artificial intelligence projects to prepare data and ensure the use cases that they have identified are a good match for AI. At the same time, increased adoption of small language models and RAG will reduce bias and hallucinations.

- Businesses are also expected to become more vigilant about AI training datasets, ensuring they safeguard personally identifiable information.

- Sustainability will also rise to the forefront as companies begin working to limit the power consumption of their data infrastructure, minimizing their environmental impacts, enhancing their operational efficiency, and making their companies more competitive.

- In 2025, the AI bandwagon will start to transition from a headstrong rush into the unknown to a more measured approach, with ROI playing a role in justification. A critical piece of that assessment will be IT and infrastructure’s role in a proper AI strategy, including the need for AI-ready infrastructure.

- In 2025, services that support more intelligent infrastructures will dominate the priority list for MSPs. Expect MSP clients to demand services that build on AI and machine learning and transform data infrastructures to enable automation, predictive analytics, and augmented intelligence decision-making.

- Meanwhile, enterprises will look to their MSPs for direction in identifying and implementing such tools in a cost-effective, compliant, and sustainable manner.

- Modular, scalable solutions that can adapt to each enterprise’s specific requirements will be in high demand. Enterprises want technologies, services, and partners that manage all the backend integrations, maximize their IT ROI, and deliver a seamless user experience.

- Requirements for Retrieval-Augmented Generation (RAG) storage will rise, driven by AI inference development.

- People will rethink storage tiering beyond the traditional hot-warm-cold model as new archiving media are introduced, e.g. Huawei’s magneto-electrical disks (MED).

- Large-capacity SSDs will become more common and be applied to more use cases, such as HPC, HPDA, content management, and backup.

- Third-Party (Supply Chain) Risk Rises to New Levels

Third-party and supply chain risk is not new, but it has taken on renewed urgency after high-profile incidents like the CrowdStrike outage in 2024. This event forced organizations to reassess their third-party dependencies and the associated risks including the vast amount of data residing in SaaS platforms. In 2025, we anticipate increased investments in data protection for public cloud and SaaS environments as companies put into action the risk assessments they performed in 2024.

- AI Moving Mainstream Drives Need for Holistic Data Management Strategies

In 2025, to operationalize AI, organizations will begin to unify data from across the enterprise, creating data lakes in massive data warehouses (e.g., BigQuery), object stores, or file systems. As AI technologies become integral to customer-facing experiences, the requirements for availability, compliance, and data protection will only intensify.

- Compliance Mandates Drive a New Data Protection Frenzy

Historically, Europe has led in technology and data regulations. Two key regulations to watch in 2025 are the Digital Operational Resilience Act (DORA), going into full effect on April 30, 2025, and NIS2, which became active at the end of 2024. Both require organizations to ensure their data is protected, recoverable, and auditable in the event of a security breach.

Observability costs will continue rising for most organizations in 2025, driven primarily by exponential growth in spending on log data storage. As cost increases are prime targets for innovation, three trends will introduce new opportunities for cost savings in storage:

- Streaming data platforms will gain widespread adoption not through direct use by enterprises but rather as capabilities built into the SaaS platforms and service providers they already rely on. The very real complexity of running streaming infrastructure exacts a cost, but the benefits exceed that cost when used at scale. This makes it a worthy investment for platforms and large enterprises.

- Data-based organizations will increasingly adopt federated data architectures, superseding the “walled garden” paradigm of data storage. Innovative tools will integrate with the virtualization layer of federated data to provide a more cost-effective approach to accessing and performing analytics on this data in near real time.

- CFOs will begin asking why the organization is spending so much on data analytics systems with tightly-coupled architectures like Elastic and the ELK stack. This will drive a search for less expensive alternatives, which might disrupt the popular stack. We’ll see if this means innovators find ways to make ELK cheaper or if alternatives take root.

- AI-Powered Detection In 2025

AI-powered detection systems trained on the latest data corruption methods will be at the forefront of cybersecurity strategies. Predictive AI will enable organizations to identify and respond to threats faster, catching ransomware attacks at their earliest stages. Unlike traditional tools that focus on indicators like metadata, these AI models continuously analyze data content and behavior over time to detect subtle anomalies that may indicate corruption. As ransomware evolves to evade traditional defenses, organizations will rely on predictive AI not only to anticipate attacks but to safeguard critical systems and ensure data remains uncompromised.

- Collaborative Cyber Resilience

In 2025, cybersecurity will no longer be the sole responsibility of the security team; instead, it will require a unified, collaborative approach across an organization. Security teams, storage teams, and executive leadership will need to work together to design and implement integrated resilience plans that minimize both risk and disruption. This means breaking down silos and ensuring that every stakeholder understands their role in preventing and recovering from cyberattacks. Companies that foster a culture of shared responsibility, where cybersecurity is viewed as a “team sport”, will not only reduce the likelihood of attacks but also strengthen their ability to recover quickly and cost-effectively.

- Data Integrity as a Standard

As ransomware becomes increasingly difficult to detect and recover from, continuous data integrity monitoring will become essential for businesses in 2025. Organizations will adopt tools that are capable of analyzing how data changes over time to ensure it remains uncompromised and trustworthy. By moving beyond surface-level checks and focusing on the actual content and behavior of data, businesses can proactively identify corruption attempts before they escalate into full-scale attacks. This approach will provide organizations with confidence in their ability to recover clean, uncompromised data in the event of an attack. As data integrity becomes a cornerstone of cyber resilience, businesses will see improved recovery times and minimal operational impact.
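One simple content-based signal of the kind described above is byte entropy: encrypted data looks close to random, so a file whose entropy jumps sharply between two snapshots is worth a closer look. The sketch below illustrates that heuristic only; it is not the detection method of any product mentioned here, and the `jump` and `ceiling` thresholds are arbitrary assumptions.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def looks_suspicious(before: bytes, after: bytes,
                     jump: float = 2.0, ceiling: float = 7.5) -> bool:
    """Flag a change when entropy rises sharply and ends near the 8-bit maximum."""
    e_before = shannon_entropy(before)
    e_after = shannon_entropy(after)
    return e_after >= ceiling and (e_after - e_before) >= jump


# Snapshot of a text-like file, then a stand-in for the same file after encryption.
plain = b"hello world " * 100
scrambled = bytes(range(256)) * 4
flag = looks_suspicious(plain, scrambled)
```

Compressed archives and media files are also high-entropy, so a heuristic like this would need to be combined with other signals, such as file type and change rate, to avoid false positives.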

- AI data governance is a looming requirement for all enterprise IT teams

The Komprise 2024 State of Unstructured Data Management report uncovered that IT leaders are prioritizing AI data governance and security as the top future capability for solutions. They will look for unstructured data management solutions that offer automated capabilities to protect, segment and audit sensitive and internal data use in AI with the goal of protecting data from breaches or misuse, maintaining compliance with industry regulations, managing biases in data, and ensuring that AI does not lead to false, misleading or libelous results.

- With the proper safeguards and policies in place, IT directors will need to address the next mandate: data ingestion for AI.

Up until now, model training has been the main investment in AI, handled by specialized data scientists rather than IT. But this is changing as AI evolves to inferencing with corporate data. Storage IT will need to create systematic, automated ways for users to search across corporate data stores, curate the right data, check for sensitive data and move data to AI with audit reporting.

- Finally, most IT organizations will need to do all of the above and more without dedicated AI budgets.

In the Komprise survey, only 30% of IT leaders said they will increase the budget for AI. Therefore, cloud-based, turnkey AI services will grow in popularity along with no-code or low-code AI platforms which don’t require extensive coding knowledge. As well, continually analyzing and right-placing unstructured data into the most cost-effective storage will free up funds for AI.

- Continued Growth in Data Generation

With exponential growth in generated data, we foresee heightened demand for innovative storage solutions.

- SMR Drives Entering General Channels

We believe Shingled Magnetic Recording (SMR) drives will make their way into general channels beyond hyperscalers, increasing their share in high-capacity drive sales as businesses embrace their cost-efficiency and density benefits.

- Public Cloud Repatriation

As organizations seek greater control over costs, performance, and data sovereignty, we anticipate a growing trend of public cloud repatriation.

- AI-enhanced cyber attacks and data protection tactics

In 2025, data protection will lean more on AI and machine learning to create self-protecting systems capable of proactive monitoring and instant threat responses. We’re already seeing an uptick in ransomware and AI-enabled attacks as well as the use of deepfakes in social engineering attacks, and next year will likely bring more of these. Organisations will reinforce security with zero-trust frameworks, frequent incident response drills, and robust backup and recovery solutions. Immutable storage will remain indispensable for ensuring data resilience and swift recovery in the event of a cyberattack.

Furthermore, micro-segmentation will become a standard practice to divide networks into smaller segments, limiting the impact of a cyberattack if one occurs. In terms of priorities, anti-ransomware measures like immutability, air-gapping, anomaly detection, and malware scanning tools, as well as a zero-trust approach across endpoints, are and will remain essential defenses. Additionally, automation will be increasingly integrated into data protection workflows to enhance efficiency and reduce human error. Enterprises will focus on AI-powered storage to enhance real-time analytics and maintain a competitive edge.

- The continued rise of hybrid cloud

The adoption of the hybrid cloud model will continue in 2025 due to its flexibility, scalability, and cost-efficiency. New tools like standardized APIs, self-service portals, and cloud storage gateways have made it easier to manage hybrid cloud environments. However, more work is still needed to streamline integration between cloud and on-premises systems.

The hybrid cloud model has highlighted the need for cost optimization as data volumes grow, and the necessity of robust cloud security. New challenges are expected in 2025 in this area, such as the need for consistent security practices across hybrid cloud infrastructures to manage risks effectively. Additionally, the complexity of managing a hybrid cloud infrastructure is likely to increase data sprawl and wasted resources, and will require skilled professionals to manage it. Data protection will continue to be a top priority. The focus will be on implementing robust backup and recovery solutions, encryption, and access controls to protect critical data across hybrid cloud infrastructures.

- Advancements at the edge

We can expect further advancements in edge computing, particularly with AI and machine learning integrations. Edge computing is already reshaping business operations, given its ability to process data closer to the source, resulting in faster response times and lower latency. Naturally, new standards will emerge to strengthen edge data protection and cybersecurity implementations.

However, this growth is likely to create new data protection challenges. With more devices connecting to edge networks, organizations will need to channel resources into advanced security measures, like threat detection, robust backups, encryption, and access controls to mitigate risks and protect data at the edge. Additionally, interoperability between diverse edge devices will be the key to seamless communication and efficient data flow. For sectors that rely on real-time analytics, such as retail, edge storage will offer a competitive advantage.

- Unified Data Will Spur Deeper AI Insights

So far, current AI initiatives have unlocked only a fraction of the value that enterprises can derive in the fullness of time. The largest barrier to unlocking this value is data silos. 2025 will mark the year when enterprises take their data strategies seriously in order to innovate with AI faster and outpace their competition.

- Better Business Outcomes Means Unprecedented AI Investment

Spending on AI has the largest potential ROI in terms of business outcomes. In 2025, companies will prioritize AI spending over everything else. Those who treat AI as a core investment, not just an experimental add-on, will be the ones to lead their industries forward. To do that, they need to build intelligent data infrastructure into their IT environments so they have a future-proofed data strategy. The stakes are high and so are the expectations. Not playing is simply not an option.

- Age of AI Will Make Trust and Security a MUST

Security and governance can’t be an afterthought. Having great practices around data integrity, quality and security will be the theme for 2025 AI initiatives. Companies must be proactive and prioritize data integrity and governance to prevent AI’s promise from becoming its peril.

- AI Technologies Will Move into Industry-Specific Solutions

These specialized applications will require unified data pipelines and interoperable systems, emphasizing the need to overcome siloed data structures. The businesses that create industry-aligned AI solutions will not only lead their markets but also set new standards for innovation.

- Healthcare: AI will increasingly assist with personalized medicine, analyzing complex datasets like patient histories, genetic profiles, and environmental factors to recommend tailored treatments.

- Finance: AI is expected to become critical for fraud detection and risk management, enabling firms to analyze transactions in real-time with unprecedented accuracy.

- Manufacturing: Predictive maintenance powered by AI will transform production lines, ensuring efficiency and reducing downtime.

Panzura

Petra Davidson

Global Head of Marketing

The increasing adoption of hybrid cloud frameworks within enterprises is driving demand for file data solutions that offer greater flexibility, cost-efficiency, resilience, and support for diverse usage scenarios across cloud, core, and edge environments.

- In 2025, we’ll witness the emergence of sophisticated “self-healing” storage solutions amid the confluence of storage and security. These will not only make data more resilient at a time of increasing global technological risk and unpredictability, but also proactively detect, investigate, and resolve cyber incidents. Expect to see direct integration of data security features into storage infrastructure like real-time threat intelligence, storage-focused threat-hunting for anomaly detection, and automated response mechanisms.

- This year, we anticipate a surge in edge computing integrations with hybrid cloud storage. This trend will be fueled by the need to reduce latency and bandwidth costs. AI workloads will dynamically shift between edge and cloud, leveraging the strengths of each environment. Furthermore, seamless edge-to-cloud integration will facilitate end-to-end automation across multi-cloud and edge deployments.

- In addition, the increasing adoption of Retrieval-Augmented Generation (RAG) for AI applications will drive the demand for hybrid cloud storage solutions. RAG models rely on efficient access to large, diverse datasets containing high quality information. Hybrid cloud storage infrastructure provides the necessary scalability, performance, and cost-effectiveness to support the demanding data access requirements of RAG systems.

Peer Software

Jimmy Tam

CEO

- Rise of Data Orchestration for AI and ML

As more organizations turn to AI for everything from better-informed decision-making to operational efficiency, it’s becoming clear that data needs to be managed more effectively. With data creation becoming ubiquitous, automated data orchestration will gain prominence to aggregate and streamline disparate data sources into AI engines. This will be essential for customizing large language models (LLMs) using methods like Retrieval-Augmented Generation (RAG), tailoring these tools for specific industries or companies.

- Transition to Active-Active Data Systems

Traditional backup methods, such as snapshots, are becoming less effective with growing data volumes. Organisations are finding it increasingly challenging to meet recovery point and recovery time objectives and maintain high availability with these approaches. Active-active systems, which incorporate real-time synchronization of data across locations, will emerge as critical not only for reducing recovery times, but also for ensuring seamless operations and managing massive datasets.

- Focus on Reducing Data Sprawl at the Edge

As distributed workforces and applications continue to grow, companies will increasingly prioritise controlling edge data sprawl. Intelligent systems will relocate unused data from edge locations to centralised or cloud storage, optimising resource use and minimising costs.
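
As a rough illustration of how "unused" edge data might be identified before relocation, the sketch below lists files that have not been modified for a given number of days. It is a minimal example under stated assumptions, not any vendor's method: the function name `find_cold_files`, the `max_idle_days` threshold, and the use of modification time as the "unused" signal are all hypothetical choices made for this example.

```python
import time
from pathlib import Path


def find_cold_files(root, max_idle_days, now=None):
    """Return files under `root` not modified in `max_idle_days`, oldest first.

    Assumption: modification time is a good-enough proxy for "unused".
    A real system would likely also look at access patterns and policies.
    """
    now = time.time() if now is None else now
    cutoff = now - max_idle_days * 86400  # seconds per day
    cold = []
    for path in Path(root).rglob("*"):
        if path.is_file():
            mtime = path.stat().st_mtime
            if mtime < cutoff:
                cold.append((mtime, path))
    # Oldest files first, so they can be relocated first.
    cold.sort(key=lambda item: item[0])
    return [path for _, path in cold]
```

A caller could pass the resulting list to whatever copy or migration tool is in use, for example `find_cold_files("/srv/edge-share", max_idle_days=180)`, where the path is a placeholder.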

- Increased Focus on AI and Machine Learning

AI-Specific Storage Features: Storage controllers might include specialized hardware or software to accelerate AI workloads, particularly in edge devices where on-device processing is crucial. Imagine a USB drive that can perform basic image recognition or natural language processing tasks on the data it already contains thanks to its integrated AI capabilities.

- Enhanced Performance and Efficiency

PCIe 5.0 and Beyond: Expect wider adoption of PCIe 5.0 for SSDs, enabling even faster data transfer rates. On the enterprise level, expect PCIe 6.0 to double the speeds to meet the demand of AI workloads. These storage servers will likely integrate cold plate conduction cooling or even immersion cooling, which places the entire server in a non-conductive liquid.

- Customization and Differentiation

Tailored Acceleration: Computational Storage will evolve to add accelerators onto SSDs for highly specific tasks that could be handled by the CPU but are instead offloaded to one or more SSDs and run as a background process without adding substantial cost or power consumption. The accelerated SSDs can handle large volumes of data much faster, all while consuming less power than traditional processors, something that will be in high demand as AI use cases expand in 2025.

- Standard in Data Centers: S3-based Tape Libraries

Standardization plays a major role in the data center sector. This applies not only to hardware, but also to software. The need for data archiving in data centers will increase as data volumes grow rapidly due to applications such as AI and HPC. Concepts based on established tape technology and standardized object-based software interfaces will be used to enable the active use of archived data. Data storage based on scalable, rack-mountable tape libraries that are designed for use in data centers and can be integrated via standardized software interfaces such as S3 will become indispensable in data centers.

- Energy requirements in data centers are beyond imagination

All forecasts show that energy requirements in data centers will continue to rise dramatically. In particular, the rapidly increasing use of GPUs for AI applications will have a considerable impact on energy requirements. To compensate for this, energy-efficient storage systems such as tape libraries, which are homogeneously integrated via standardized S3 software interfaces, are increasingly being used in data centers.

Tape-based Active Archive Complements the Fastest AI Storage

QStar believes the use of AI to provide added insight into multiple types of data will be a major driving force in many IT departments over the next year and into the future. The use of tier 1 primary storage and new tier 0 GPU based storage will require significant data sets or project data to be available for relatively short periods of time during processing. At other times, this data has to be stored securely and be readily available when next needed, but also stored at low-cost, due to the size of the data sets involved.

Multi-node tape-based active archive solutions provide everything an AI environment requires, by using many tape drives in parallel to significantly increase raw performance. Tape media is the lowest cost and most secure form of storage. AI applications can choose to access this data through a file system (SMB or NFS) or the S3 API protocol.

- The AI hangover is settling in – Living (and working) with the reality of AI

AI may have grabbed the headlines in 2024, but in 2025 organizations are going to get real about how they want to use AI—and the realities of implementing it.

In 2025, organizations are going to end their AI exploration phase to instead take a deep, realistic look at their need for the technology and how it will meaningfully help their business and customers.

They’ll find that their best minds will not be replaced by AI, but will see how well AI can amplify their expertise. While AI doesn’t create the idea, AI can help make the idea a reality faster, so we’re going to start seeing businesses tapping virtual agents and copilots for the tedious work while letting humans do what they do best: be creative.

- We’re talking the data race v. the arms race

In the last year, there has been a frenzy around AI, with investors and organizations throwing cash at the buzzy technology. But the real winners are those who saw past the “buzz” and focused on actionable takeaways and what will actually help their organization.

We’re finding now that the gold rush isn’t the technology itself, it’s the data that feeds AI and the value it presents. In 2025, organizations that take a more pragmatic approach to AI—and its underlying data infrastructure—will be best prepared to fuel new insights and power discovery.

Those leading the data race are not only leveraging every scrap of their collected data for differentiated AI outcomes, but also have the infrastructure and processes in place to do so effectively: managing, organizing, indexing, and cataloging every piece of it.

- Balancing AI demands with energy-efficient storage

As AI workloads grow, so do the energy demands and costs associated with them, pushing organizations to seek cost-saving, energy-efficient strategies. One key focus will be data storage, as vast datasets are crucial for AI but costly to maintain.

Organizations will increasingly turn to scalable, low-power storage solutions, leveraging cold storage for less frequently accessed data to cut energy consumption. However, this “cold” data won’t stay idle; it will be proactively recovered for reuse, re-monetization, and model recalibration as business needs evolve.

By balancing high-performance access with efficient cold storage, companies can meet AI demands while reducing costs and environmental impact.

- Transition from Data storage to Data platform

Organizations will continue transitioning from heritage storage-centric models to data platforms integrating on-premise and cloud data services to optimize costs and enable new business capabilities.

- Proliferation of Multicloud Strategies

Organizations will increasingly adopt multicloud strategies to enable competitive advantage while reducing risk and costs. A critical enabler will be making data available to application services, including AI, in the cloud while maintaining data governance.

- Continued Embedding of AI in ALL Things Related to Data

Organizations will increasingly adopt data platforms with rich APIs to integrate with AI to deliver data intelligence that improves data governance and security, legal discovery, and ransomware detection, among other use cases. AI will also enable new insights to better inform IT operations, dramatically improving efficiency and reducing costs.

- A new view emerges on Storage Density

Moving beyond simple “capacity per drive” or “capacity per rack unit,” Storage “Capacity/Power-Density” (TB/W-volume) and “Performance per TB per W” (Throughput/TB/W) will become more critical in architecting storage solutions, given the space and power needed to feed data to AI.

- “Power Scarcity” will ignite a renaissance in system/solution design

The current trends for power per rack/system are unsustainable. Innovations in cooling solutions and thermal management have already been on the rise for the past few years. But the power density and total power consumption of AI chips are exacerbating the problems with both total power supply for data centers and with cooling the equipment in the racks. Hardware system and component designers will be forced to get more creative in their new systems to eke out every drop of power efficiency.

- Given the previous point, AI will NOT be the answer to everything

AI absolutely will garner a huge share of wallet, but simply cannot be the single path for infra spending and growth.
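
The density metrics named earlier in this section can be made concrete with a little arithmetic. The sketch below is one possible reading, not a standard definition: it interprets “TB/W-volume” as capacity per watt per rack unit and “Throughput/TB/W” as sustained throughput divided by capacity and by power. The `StorageSystem` class, its field names, and the example figures are hypothetical and chosen only to illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass
class StorageSystem:
    name: str
    capacity_tb: float       # usable capacity in TB
    power_w: float           # average power draw in watts
    rack_units: float        # physical space in rack units (U)
    throughput_gb_s: float   # sustained throughput in GB/s

    def tb_per_watt(self):
        """Capacity per watt (TB/W)."""
        return self.capacity_tb / self.power_w

    def tb_per_watt_per_u(self):
        """Assumed reading of 'TB/W-volume': TB per watt per rack unit."""
        return self.capacity_tb / self.power_w / self.rack_units

    def throughput_per_tb_per_watt(self):
        """Assumed reading of 'Throughput/TB/W': (GB/s) per TB per watt."""
        return self.throughput_gb_s / self.capacity_tb / self.power_w


# Illustrative, made-up figures: a 2U system with 1000 TB drawing 500 W.
example = StorageSystem("example-array", 1000.0, 500.0, 2.0, 20.0)
print(example.tb_per_watt())        # 2.0 TB/W
print(example.tb_per_watt_per_u())  # 1.0 TB/W per U
```

Comparing two candidate systems on these ratios, rather than on capacity per drive alone, is the kind of evaluation the prediction points toward.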

- Object storage will emerge as the go-to data storage model for AI application developers

Enterprises, government agencies, and cloud service providers are seeking to demonstrate a return on their investments in AI and machine learning projects. As a result, application developers are under significant pressure to improve efficiency and outpace the competition.

Time-to-market is key, with modern designs centered on cloud-native, container-based services. For these applications, API-based methods are a natural fit for accessing external business logic, databases, networking, security services, and now data storage.

No developer wants to deal with capacity, performance or other limitations inherent to legacy file and block storage. The flat, virtually unlimited namespace that object storage provides is ideal for large-scale data lakes and AI tools.

I predict that object storage will become the de-facto storage model of choice for high-performance AI applications in 2025, especially for model training and fine-tuning.

- The next phase of ransomware defense will focus on cyber resilience against data exfiltration

Immutable storage has proven to be a game-changer in the fight against ransomware, as it provides an unbreakable defense against encryption attacks by ensuring backups remain impervious to modification or deletion.

That said, as organizations adopt new defenses like immutable storage, cybercriminals don’t just give up; they adapt.

We’re now witnessing one such adaptation, with threat actors currently shifting from encryption-based attacks toward more sophisticated data exfiltration tactics, where sensitive data is stolen rather than encrypted, and immutability alone can’t stop them. I predict that while immutable storage will remain a powerful and essential tool in the fight against ransomware, going forward, it must be paired with additional layers of active cyber resilience to address emerging threats like data exfiltration. Organizations need to secure sensitive data wherever it exists: in production, in transit, and even in stored backups.

- EU data privacy regulations will drive a new wave of data decentralization

Just as GDPR pushed organizations to rethink their approach to data privacy, the new European NIS-2 and DORA regulations will soon force organizations to adopt stronger cybersecurity measures, improve incident responsiveness, and increase their resilience to cyber-attacks.

The increased emphasis on data sovereignty will have the likely side effect of greatly complicating data transfers for EU organizations, especially those handling cross-border data flows.

As a result, in 2025, we will see organizations move to establish new, separate EU data centers to comply with stringent data sovereignty, cyber resiliency, and cross-border transfer requirements.

- AI Agents Will Be Heating Up – But They’ll Need To Be Controlled

By 2025, AI agents will no longer operate without control. Companies will require accountability, traceability, and governance. Agent Context Protocol (ACP), introduced by Scalytics Connect, will become the standard for controlling autonomous AI agents. Every agent’s action will be logged, auditable, and verifiable, a critical development for compliance-driven industries like healthcare, finance, and government.

- Data Lake Market Share Will Shrink as Edge Processing Expands

The days of centralizing data in lakes and marts are numbered. Cloud repatriation started in 2024, and 2025 will bolster this trend: enterprises will shift their AI strategies to focus on processing data where it lives. This shift will be driven by the need for cost efficiency, privacy, and regulatory compliance. Scalytics Connect will lead the way, enabling organizations to process data at the edge, not in centralized warehouses, which will reduce the need for ETL pipelines and data migrations.

- Real-Time AI Workflows Will Become the New Standard

AI-driven enterprises will demand faster, event-driven decision-making. The ability to process and act on live data streams will become a competitive advantage. In 2025, Data in Motion will fully replace batch processing as streaming-first AI workflows become standard. Scalytics Connect, with its real-time, Kafka-based protocol, will enable AI models and agents to process live data as it flows, providing faster insights, smarter AI agents, and reduced operational latency.
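
To make the idea of agent actions being “logged, auditable, and verifiable” more tangible, here is a minimal sketch of a hash-chained audit log, a generic tamper-evidence technique. It is not the Agent Context Protocol and does not use any Scalytics Connect API; the `AuditLog` class, its field names, and the example agent ID are hypothetical and exist only for this illustration.

```python
import hashlib
import json


def _digest(entry):
    """SHA-256 over a canonical JSON encoding of the entry."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Append-only log where each record commits to the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first record

    def __init__(self):
        self.records = []

    def append(self, agent_id, action, detail):
        prev_hash = self.records[-1]["hash"] if self.records else self.GENESIS
        entry = {
            "agent_id": agent_id,
            "action": action,
            "detail": detail,
            "prev_hash": prev_hash,
        }
        record = dict(entry, hash=_digest(entry))
        self.records.append(record)
        return record

    def verify(self):
        """Return True only if no record was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for record in self.records:
            entry = {k: v for k, v in record.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(entry) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("agent-7", "read", "fetched customer summary")
log.append("agent-7", "write", "updated ticket status")
print(log.verify())  # True while the log is untouched
```

Because each record includes the hash of its predecessor, changing any earlier entry invalidates every later one, which is what lets an auditor check the whole history with a single pass.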

- Global Data Explosion and Storage Shortage

The world is generating data at unprecedented volumes, with an expected 400 zettabytes by 2028. This rapid growth in data creation, driven by advancements in AI, is outpacing the growth of storage capacity, leading to a potential storage shortage crisis. Organizations will need to develop long-term capacity plans to ensure they can store and monetize their data effectively.

- Trust in AI and Data Integrity

As AI becomes more integrated into business functions, the trustworthiness of the data it relies on becomes crucial. Ensuring data is retained and available long-term is essential for validating AI’s trustworthiness and complying with legal requirements. Companies focusing on trustworthy AI principles will face a surge in data, making scalable storage innovations critical.

- Storage Innovation and Environmental Impact

The continuous data boom will challenge data centers, which will need to innovate to manage rising power density needs and environmental concerns. Higher areal density hard drives can increase data capacity without the need for new data center sites, leading to significant cost savings and reduced environmental impact.

- Kubernetes as Cloud Operating System

Kubernetes will establish itself as the universal cloud operating system, with enterprises standardizing on it for both stateless and stateful workloads. This will be driven by enterprises migrating out of proprietary cloud stacks such as VMware and looking for abstraction on top of public clouds. This convergence will drive demand for sophisticated storage orchestration capabilities that can seamlessly span on-premises and cloud environments while delivering enterprise-grade reliability and performance. Storage solutions will need to support automated operations while maintaining strict security and multi-tenancy requirements. Storage needs to operate the way Kubernetes does, with declarative policies and a high degree of flexibility, fully abstracting the underlying storage media or services. In other words, Kubernetes storage needs to operate in a “serverless” fashion.

- AI Storage Shifts from Training to Inference

The AI storage landscape will undergo significant transformation as organizations shift focus from training to inference workloads. This will drive new requirements for storage systems that can efficiently handle diverse I/O patterns – from high-throughput sequential reads for model loading to low-latency random access for real-time inference. Storage solutions will need to provide consistent sub-millisecond latency at scale while automatically managing data placement across performance tiers. Multi-tier storage architectures and storage systems with advanced tiering capabilities will become more prominent. We already see that with some of the new products offered by Pure, Simplyblock, or Hammerspace.

- Storage Cost Becomes a Problem with AI and Data Lakes

Storage cost optimization will become a board-level priority as organizations face exponential growth in storage demands from AI, data lakes, and cloud-native applications. This shift will drive accelerated adoption of intelligent storage platforms capable of automatically optimizing data placement across performance tiers while maintaining strict application SLAs. While object storage has traditionally served as a cost-effective solution for data lakes, its performance limitations will push organizations to seek alternatives that balance cost and performance. Organizations will increasingly seek solutions that combine advanced storage efficiency techniques – including erasure coding, deduplication, and automated tiering – with granular cost allocation capabilities to enable precise chargeback and budgeting controls.
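
The efficiency techniques and chargeback idea mentioned above come down to simple arithmetic, sketched below. This is an illustrative example only: the function names, the proportional cost-allocation rule, and all figures are assumptions made for the sketch, and real platforms apply more nuanced accounting. The erasure-coding formula itself is standard: with `k` data shards and `m` parity shards, raw capacity is the logical capacity times `(k + m) / k`.

```python
import hashlib


def raw_capacity_replication(logical_tb, copies):
    """Raw TB needed when every object is stored `copies` times."""
    return logical_tb * copies


def raw_capacity_erasure_coding(logical_tb, data_shards, parity_shards):
    """Raw TB needed for a k+m erasure-coded layout."""
    return logical_tb * (data_shards + parity_shards) / data_shards


def dedup_ratio(chunks):
    """Logical chunk count divided by unique chunk count (by SHA-256)."""
    if not chunks:
        raise ValueError("chunks must not be empty")
    unique = {hashlib.sha256(chunk).digest() for chunk in chunks}
    return len(chunks) / len(unique)


def chargeback(usage_tb_by_team, total_monthly_cost):
    """Split a monthly bill across teams in proportion to TB used (assumed rule)."""
    total_tb = sum(usage_tb_by_team.values())
    if total_tb <= 0:
        raise ValueError("total usage must be positive")
    return {
        team: total_monthly_cost * tb / total_tb
        for team, tb in usage_tb_by_team.items()
    }


# 100 TB logical: 3-way replication vs. an 8+3 erasure-coded layout.
print(raw_capacity_replication(100, 3))        # 300
print(raw_capacity_erasure_coding(100, 8, 3))  # 137.5
```

In this made-up comparison the erasure-coded layout needs less than half the raw capacity of three-way replication, which is why such techniques feature in cost discussions.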

- Energy Efficient Storage Will Enable AI Growth

The quantity and scale of power-hungry AI workloads are growing quickly while the availability of affordable and sustainable power is emerging as one of the greatest limiting factors. To maximize AI capabilities and business opportunities, IT organizations will prioritize energy efficiency as a critical requirement while recognizing large-scale data storage as an obvious target of opportunity. Most of the world’s data is known to be infrequently accessed, but all data stored on flash and disk continuously consumes power, even when idle. Tape, which already costs a fraction of flash and disk, consumes no power during idle, making it the ideal medium for infrequently accessed data. With newly introduced, cloud-based subscriptions and on-prem object-based solutions, tape is now completely accessible to any IT organization and will prove to be an important enabler for growing AI.

- Tape Storage Adoption Will Accelerate

The resurgence of magnetic tape will accelerate as one of the few opportunities for IT organizations to reduce data storage acquisition and operating costs, particularly with respect to power consumption. With its ability to affordably store massive volumes of data using minimal energy, tape storage will become a necessity in a sustainable and efficient data management strategy. Organizations will continue to recognize the value of moving a greater share of infrequently-accessed data to tape, reducing their reliance on disk and flash storage to save power and lower the cost per TB of data stored. This shift will not only lower data center energy consumption but also create opportunities to repurpose power and cooling capacity to support AI workloads and other critical infrastructure.

- Tape Will Play an Essential Role in AI Compliance

Government regulation of AI has begun in earnest and is only expected to accelerate. Organizations will increasingly be required to keep data from all aspects of the AI workload including input sources, model training, inferencing, content generation and more. Since this data will be primarily for safe keeping and infrequently accessed, immutable object-based tape will be the medium of choice as it is capable of storing data at great scale, delivers the lowest-possible cost per TB stored, provides the longest shelf life and does not consume any power at idle.

- By 2025, Broadcom’s strategies with VMware products will enable new technologies to flourish. Most organizations currently using VMware tools are frustrated with the massive price increases and are actively seeking alternatives. This transition will take 3-5 years, but all indications are that many are already taking the leap.

- With the exponential growth of edge data, enterprises will prioritize localized compute capabilities to enhance data processing. There will be more workloads at the edge that will require advanced data management approaches, AI, and GPU support. As edge infrastructure expands, the associated attack surface will also grow, necessitating heightened security measures. CIOs must adopt comprehensive security frameworks that address vulnerabilities at the edge without compromising overall IT integrity.

- As companies reassess their cloud commitments, we predict a notable trend towards repatriating workloads from the cloud back to on-premises infrastructures by 2025. This shift will be driven by cloud lock-in challenges and the need for greater control over data and costs, with HCI technologies playing a crucial role in facilitating this transition.

- Cost-Efficient Auto-Tiering for Long-Term Data

Organizations will rely on advanced auto-tiering to manage growing data volumes. These solutions will automatically move data between performance and archival storage, reducing costs while ensuring quick access to datasets needed for AI and other applications requiring long-term data retention.

- Seamless Multi-Cloud Integration

Flexibility across multiple clouds will become critical. Platforms enabling easy migration and integration across public, private, and hybrid clouds, along with interoperability between providers, will help organizations optimize costs, avoid vendor lock-in, and adapt to evolving business needs.

- Scalable Snapshot Capabilities

The need for massive snapshot scalability will surge as businesses prioritize rapid data recovery and ransomware protection. Solutions supporting hundreds of thousands of snapshots without degrading performance will become essential for managing mission-critical and sensitive data.

- Software-Defined Storage (SDS) Becoming a First-in-the-list approach to storage

As enterprises increasingly shift toward hybrid and multi-cloud environments, traditional hardware-based storage systems no longer meet the agility, scalability, manageability and cost-efficiency demands of modern IT infrastructures.

SDS, which runs on standard servers and networks and decouples data from the underlying hardware, offers unmatched flexibility in deploying, managing and scaling storage resources across on-premises data centers and cloud environments. For many hardware or datacenter refresh and datacenter consolidation projects, SDS is becoming a first-in-the-list option. We will continue seeing a transition towards fully automated, software-defined storage solutions with an emphasis on performance, security, manageability, APIs and cost reduction across larger and more complex infrastructure projects.

- AI Will Continue to Proliferate

In 2025, AI will continue to proliferate across all industries, driving new opportunities and challenges. The integration of AI into storage systems will be particularly transformative, with AI-powered solutions becoming increasingly common for optimizing performance, enhancing security and ensuring data reliability. This increase in AI workloads will lead to a surge in demand for high-performance storage solutions that can support these data-intensive applications, including large language models (LLMs), machine learning model training and real-time data analytics. This will raise the requirements on data storage technologies to handle AI’s specific needs for speed, scalability and efficiency.

- 2025 Will Be the Year of the Move from Broadcom VMware to Other Cloud Orchestration Platforms, mainly KVM-based

Enterprises are increasingly moving away from traditional virtualization platforms like Broadcom VMware to open-source cloud management and orchestration platforms without vendor lock-in, such as CloudStack, OpenStack, OpenNebula, Proxmox and Kubernetes, which are all KVM-based. Even other commercial or non-open source alternatives like Red Hat OpenShift and HPE Morpheus VM Essentials are KVM-based.

KVM is the default hypervisor for the majority of the large-scale cloud-native companies in SaaS, PaaS, IaaS, e-commerce and similar sectors. And now it is becoming the preferred hypervisor in many traditional enterprises due to its openness, performance, capabilities, flexibility and very wide usage.

In 2025 we’ll see many more organizations of all types adopt cloud-native orchestration platforms, such as the ones listed above. This trend is driven by the requirements to reduce cost, optimize operational efficiency and profit margins, increase performance and reduce vendor lock-in.

- Innovation Momentum

Building on the intensified design-in activities we’ve seen this year, we expect to see a surge in new project launches in 2025. This is particularly true for industrial SSDs, with a growing need for enhanced performance features, advanced security measures, and robust reliability.

- European Recovery

As a European manufacturer, we expect the recovering European market to drive increased demand across various sectors. This growth reflects the strengthening confidence in Europe’s industrial and technological capabilities.

- Geopolitical Impact

With geopolitical tensions between the USA and China expected to intensify further, trade wars and tariffs may continue to disrupt global markets. In this context, European manufacturers could emerge as a strong alternative, with growing awareness of the need to bolster Europe’s semiconductor industry.

- Rethinking the Three-Tier Architecture

The three-tier block architecture has effectively supported global IT systems for over three decades. Yet, as we edge closer to fully operational AI, its inefficiencies become glaringly apparent. To harness the full potential of AI, we must rethink and overhaul our platform designs from the ground up.

- Thermal Efficiency and Processing

One of the most pressing issues in current system architectures is the waste of power in processor clocks, which often operate in an unnecessary, artificial wait state. This inefficiency stems from processors being too fast for the tasks they are performing, consuming excess energy without yielding additional computational benefits.

The remedy requires a shift towards function-specific edge devices that integrate servers directly into the processing stack, minimizing wasted resources and optimizing power usage.

- Function-Specific Edge Devices

Developing function-specific edge devices (function on silicon) is crucial for optimising AI operations at scale and at the edge. These devices are tailored to perform specific tasks, reducing the need for general-purpose processing power and allowing for more efficient execution of AI models. They can be integrated closely with localised servers, creating a seamless processing environment that enhances speed and reduces latency.

- Storage Budgets Will Lose Out in 2025

The confluence of factors in 2024, including AI, ransomware, and skyrocketing VMware licensing fees, will continue forcing IT storage budgets to deliver more with less, prioritizing cost-efficiency and simplicity. Organizations will need to store vast amounts of unstructured data while compensating for increased software license fees and AI hardware expenses. This data proliferation will require enterprises to acquire mission-critical storage with significantly more competitive economics amid strained IT budgets.

- Cloud Costs will drive Data Repatriation

As organizations evaluate Edge, Core, and Cloud strategies, many will revisit public cloud initiatives to both enhance security and reduce costs. This shift will drive the accelerated adoption of private cloud and on-premise storage deployments for data redundancy as well as significant cost savings over the public cloud.

- Digital Sovereignty and Privacy Become a Matter of National Security

Governments worldwide will strive for greater control over their digital assets, cultural representation, and influence in AI models. This will necessitate the broad adoption of high-performance, high-capacity, archival-grade storage for critical digital asset management. While governments will drive legal policies around data governance and retention, AI representation and training will also become priorities for faith-based organizations, churches, NGOs, universities, research institutions, and enterprises.

- Unstructured content drives SDS, protocol collaboration and storage management

There is no doubt that the exponential growth of unstructured data is having knock-on effects on the storage industry. This data is now arriving from all sides: OTT, advertising, social media posts, video sharing, data science, medical and public sectors. This continued surge will demand more scalable, flexible and cost-effective storage platforms, and this is where SDS (Software Defined Storage) addresses the market by decoupling storage hardware and software. SDS can manage vast amounts of unstructured data efficiently with features such as automation and policy-driven management. We expect the provision of SDS to rise, and providers will need to offer protocol collaboration alongside increased security and performance. Data silos are migrating to single platforms, which requires deeper storage management as layers of flash and HDD are merged, often globally.

- AI and RDMA pushing adoption of 400GbE and 800GbE

From an AI perspective, high-speed transfers, distributed training and inference benefit from the increased bandwidth offered by 400GbE and 800GbE. 100GbE, which started out in network infrastructure components, is now widely used in servers and clients, and we see the same movement with 400/800GbE. RDMA, and by extension SMB Direct, facilitates very high network performance with CPU offloading, and NVMe prices will fall. Card manufacturers are committed to faster topologies, and we believe that with price reductions, deployments will continue to expand and become readily available.

- SMB over QUIC will offer greater security and greater flexibility

Microsoft’s decision to make SMB over QUIC broadly available – no longer restricting it to Azure IaaS – unblocks broad adoption in other public clouds and on-premises. Organizations need greater unstructured data security and SMB over QUIC requires TLS 1.3, encryption, and certificates, not just user passwords. SMB features like data compression, multi-channel, and continuous availability work seamlessly over QUIC with no new behaviours required of users or applications. Windows 10 support ends in October and its replacement will soon be running on a billion devices, all capable of using SMB over QUIC.
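To put the 400GbE and 800GbE bandwidth argument from this section into numbers, here is a minimal sketch of ideal transfer times at line rate. The 1 TB payload is a hypothetical figure chosen for illustration, and the calculation ignores protocol overhead, storage throughput limits and congestion, so real transfers would take longer.

```python
# Illustrative only: ideal transfer time at line rate. Real-world results
# depend on protocol overhead, storage throughput and network congestion.

def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Return the ideal time in seconds to move size_bytes over a link_gbps link."""
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    payload_bytes = 1e12  # hypothetical 1 TB (decimal) dataset or model checkpoint
    for gbps in (100, 400, 800):
        seconds = transfer_seconds(payload_bytes, gbps)
        print(f"{gbps} GbE: {seconds:.0f} s at line rate")
```

Under these assumptions, each step up in link speed cuts the ideal transfer time proportionally, which is why distributed training and inference pipelines that move large checkpoints stand to benefit.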

- Emergence of Cost-Efficient High-Capacity Storage to Support AI Workflows

The exponential growth of large collections of unstructured data to feed AI workloads will demand scalable and cost-efficient storage solutions. Versity predicts organizations will increasingly adopt modern, hardware-agnostic systems to support AI workflows without being locked into proprietary platforms.

- Sustainability as a Strategic Storage Priority

In 2025, organizations will continue prioritizing environmentally sensitive storage solutions, incorporating low-energy technologies like tape, optical glass, ceramic, and DNA storage. Versity is committed to leading this shift. Low energy consumption will be particularly significant in energy-hungry AI workflows.

- Simplified and Open Storage Ecosystems

As organizations look to streamline operations, open-source and vendor-agnostic storage solutions will dominate. Versity anticipates a strong preference for systems that are simple to manage, integrate with existing platforms, and eliminate vendor lock-in.

- Cloud resource and cost optimization will continue to be a top priority

- Megatrend of migrating all workloads to the cloud

- Dynamic/elastic infrastructure for AI/ML will emerge as the key factor for enabling scale and economics

- Businesses will focus on achieving cost stability to navigate uncertain economic times

To achieve this, IT leaders should invest in platforms and tools that offer real-time visibility into cloud usage and costs. Features like automated alerts for unusual spending patterns and dashboards that consolidate expenditure data will allow businesses to stay in control and predict their cloud spending. By strategically placing the correct workloads in the cloud and ensuring data is in the right place at the right time at the right cost, businesses can optimize efficiency while managing financial risk. Strategic planning will also require building flexibility into IT operations with scalable solutions that adjust to changing demands without incurring punitive costs. Additionally, clear contracts with transparent terms will be critical. IT leaders should carefully evaluate their technology agreements to avoid hidden fees and rigid commitments that could strain their budgets.

- Businesses will increasingly focus on closely examining what digital sovereignty truly entails, as it becomes a strategic priority amid evolving geopolitical shifts

When we talk about digital sovereignty, we must consider all its dimensions: technological autonomy, operational independence and data sovereignty. This includes both the hardware on which data is stored and the networks over which it is transmitted. In practice, it is extremely difficult to achieve sovereignty equally in all three dimensions. The focus should be less on implementing a supposedly inherently sovereign solution and more on first defining exactly what a sovereign IT infrastructure means for one’s own organisation. Businesses should start with the basics by fully understanding the relevant local regulations. By taking this approach, companies can embrace the principle of digital sovereignty without getting lost in the ever-changing details of standards and regulations.

- Businesses will seek to break free from vendor lock-in to maintain agility and control under budget and regulatory pressures

In 2024, organizations across sectors such as airports, banks, and emergency services experienced significant disruptions due to cloud outages, highlighting the risks of relying on a single cloud provider. IT leaders are expected to turn to hybrid and multi-cloud strategies in 2025 to improve resilience and manage costs more effectively. Breaking free from vendor lock-in will be essential, as many major providers impose significant barriers, such as high data migration costs (e.g. egress and retrieval fees) and technical challenges when transitioning data back to on-premises or to another cloud. A resilient tech stack will allow companies to store and access their data exactly when and where they need it, enabling greater operational agility. By maintaining the freedom to switch between cloud providers, organisations can not only optimise performance but also ensure they are always leveraging the best available solutions, safeguarding their competitiveness in a dynamic business environment.
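The migration barrier described above, egress and retrieval fees, can be estimated with simple arithmetic. The sketch below is illustrative only: the per-GB rates and the 500 TB data volume are hypothetical placeholders, not any provider's actual pricing, and should be replaced with figures from the relevant contract or price list.

```python
# Illustrative estimate of the one-off cost of moving data out of a cloud.
# All rates and volumes below are hypothetical assumptions, not real pricing.

def migration_cost_usd(data_tb: float,
                       egress_usd_per_gb: float,
                       retrieval_usd_per_gb: float = 0.0) -> float:
    """Return the estimated fee for moving data_tb terabytes (decimal) out."""
    data_gb = data_tb * 1000
    return data_gb * (egress_usd_per_gb + retrieval_usd_per_gb)


if __name__ == "__main__":
    cost = migration_cost_usd(
        data_tb=500,                # hypothetical archive size
        egress_usd_per_gb=0.09,     # hypothetical egress rate
        retrieval_usd_per_gb=0.01,  # hypothetical cold-tier retrieval rate
    )
    print(f"Estimated one-off migration fee: ${cost:,.0f}")
```

Running the same estimate before data is placed, rather than when a move becomes urgent, gives IT leaders a concrete figure to weigh against the flexibility of a hybrid or multi-cloud design.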

- it enables storage to be shared across multiple servers offering greater flexibility and utilization of storage resources, and

- demonstrations show that disaggregated storage delivers the performance needed to keep GPU processing fully saturated. Over time these external storage architectures will become standard with HDD for active archives and with flash for performance workloads and will ultimately migrate to fabric as opposed to SAS given the convenience and distance benefits of fabrics.

- Cloud-to-On-premises Repatriation

Cloud-to-on-premises repatriation is becoming a prominent trend as businesses increasingly move workloads from the cloud to on-premises or private cloud solutions. This shift is driven by rising cloud costs, prompting companies to seek more cost-effective alternatives, as well as security concerns over potential cloud breaches, which are pushing businesses toward safer, controlled environments. Additionally, stricter data sovereignty regulations require sensitive data to remain in-house. The availability of affordable hardware is further enabling this transition, making it both practical and cost-effective while offering businesses greater control and enhanced security.

- More Companies will Embrace Cloud-Native Data Platforms on-premises with Kubernetes

In 2025, more businesses will adopt cloud-native data platforms running on-premises or in colocation facilities, leveraging Kubernetes to provide a cloud-like experience with elasticity and flexible scaling within data centers. This approach facilitates the separation of compute and storage, enabling seamless workload mobility between on-premises and cloud environments while utilizing existing cloud expertise. By combining flexibility, agility, and control, this hybrid model addresses the growing demand for faster and more scalable operations.

- Data Sovereignty will Force Companies to Rethink Cloud Strategies

As data privacy laws tighten, companies will face mounting legal pressure to keep data within national borders, prompting a significant shift in cloud strategies. This change will be driven by the weaponization of tax laws, as governments use tax policies to enforce data sovereignty, and by companies’ growing risk aversion, avoiding even tokenized data crossing borders to mitigate legal and security risks. In regions lacking cloud service provider presence, businesses will increasingly adopt colocation, running cloud-native solutions in local data centers. The rise of data sovereignty regulations will compel companies to prioritize in-country data storage and processing solutions to ensure compliance and security.

- AI-Powered Edge Solutions Will Enable Industry-Specific Innovation

As Edge AI matures, businesses will increasingly adopt industry-specific AI solutions to solve unique challenges within their sectors. For example, in healthcare, Edge AI will enable faster diagnostics and continuous remote patient monitoring without relying on cloud connectivity. Similarly, in retail, localized AI will power personalized customer interactions by analyzing real-time data from in-store devices. Industries such as agriculture, transportation, and energy will follow suit, creating targeted applications that leverage Edge AI’s low-latency, secure, and cost-efficient capabilities to unlock new value and innovation.

- Edge AI Will Continue to Power Smarter Decision-Making

In 2025, Edge AI will play a greater role in enabling autonomous systems to make independent, real-time decisions with minimal human intervention. From self-driving cars navigating complex environments to smart factories optimizing production processes, Edge AI will deliver localized intelligence that operates efficiently even in places where network connectivity is limited. This autonomy will reduce reliance on cloud connectivity and improve operational efficiency across industries. As AI models become more advanced, Edge AI will drive innovation by empowering devices and systems to analyze data, detect patterns and respond without centralized oversight.

- Edge AI Will Boost Small Businesses