SK AI Summit: SK hynix Introduces 1st 16-High HBM3E

Providing samples in early 2025

This is a Press Release edited by StorageNewsletter.com on November 13, 2024 at 2:02 pm. This report, published on November 4, 2024, was written by TrendForce Corp.

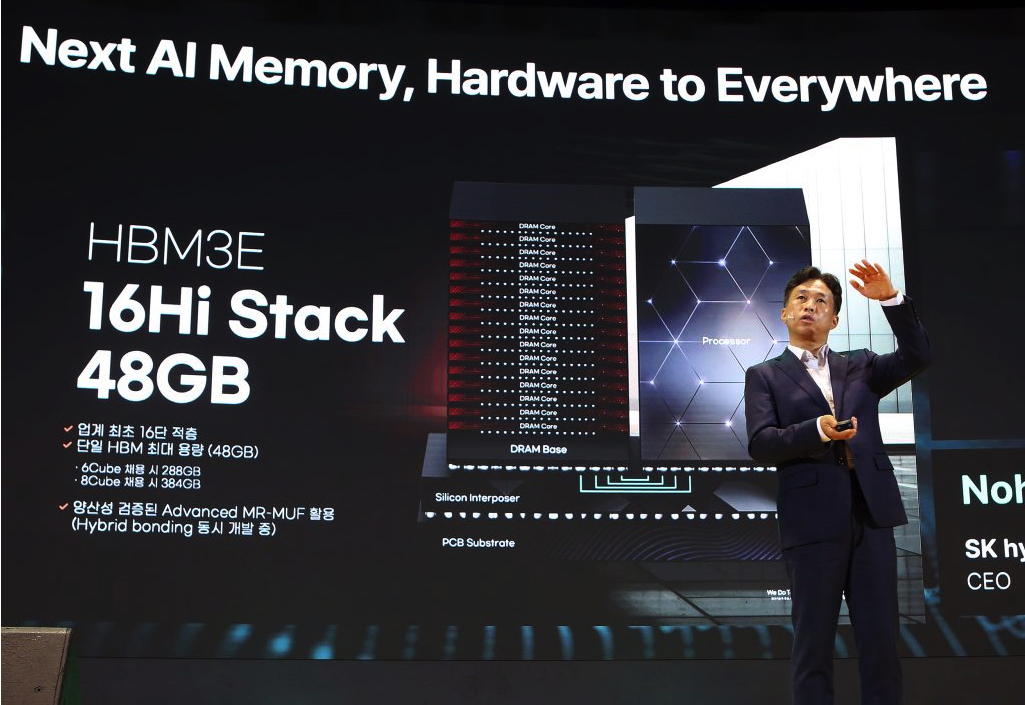

South Korean memory giant SK hynix, Inc. has introduced the industry’s first 48GB 16-high HBM3E at the SK AI Summit in Seoul, Korea. According to its press release, the 16-layer stack is the highest layer count in the industry, surpassing the company’s 12-high product.

As the press release noted, SK hynix CEO Kwak Noh-Jung said that although the market for 16-high HBM is expected to open up with the HBM4 generation, the company has been developing a 48GB 16-high HBM3E to secure technological stability, and it plans to provide samples to customers early next year.

In late September, SK hynix announced that it had begun mass production of the world’s first 12-high HBM3E, with a capacity of 36GB.

To produce the 16-high HBM3E, SK hynix is expected to apply its Advanced MR-MUF process, which enabled mass production of its 12-high products, while also developing hybrid bonding technology as a backup, Kwak explained.

According to Kwak, the company’s 16-high products improve performance by 18% in training and 32% in inference compared with 12-high products.

Kwak introduced SK hynix’s 16-high HBM3E during his keynote speech at the SK AI Summit, titled “A New Journey in Next-Generation AI Memory: Beyond Hardware to Daily Life.” He also shared the company’s vision to become a “Full Stack AI Memory Provider,” that is, a provider with a full lineup of AI memory products across both DRAM and NAND, through close collaboration with interested parties, the press release notes.

Notably, the company highlighted plans to adopt a logic process for the base die starting with the HBM4 generation, through collaboration with a top global logic foundry, in order to provide customers with the best products.

According to an earlier press release from April, the company signed a memorandum of understanding with TSMC to collaborate on producing next-generation HBM and to enhance the integration of logic and HBM through advanced packaging technology. Through this initiative, the company plans to proceed with the development of HBM4, the sixth generation of the HBM family, which is slated for mass production from 2026.

To further expand its product roadmap, the company is developing LPCAMM2 modules for PCs and data centers, as well as 1cnm-based LPDDR5 and LPDDR6, taking full advantage of its competitiveness in low-power, high-performance products, according to the press release.

The company is also readying sixth-generation PCIe SSDs, high-capacity QLC-based eSSDs, and UFS 5.0.

Because powering AI systems requires a sharp increase in the capacity of memory installed in servers, the company revealed in the press release that it is preparing CXL Fabrics, which enable high capacity by connecting various types of memory, while also developing ultra-high-capacity eSSDs that store more data in a smaller space at low power.

SK hynix is also developing technology that adds computational functions to memory to overcome the so-called memory wall. According to the press release, technologies such as Processing near Memory (PNM), Processing in Memory (PIM), and Computational Storage, which will be essential for processing enormous amounts of data in the future, will be a challenge that transforms the structure of next-generation AI systems and the future of the AI industry.
