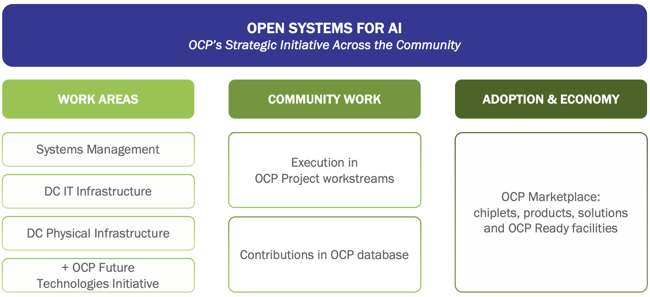

Open Compute Project Foundation AI Initiative

With contributions from Nvidia and Meta

This is a Press Release edited by StorageNewsletter.com on October 24, 2024 at 2:00 pm

The Open Compute Project Foundation (OCP) announced an expansion of its Open Systems for AI Initiative, with approved contributions from Nvidia Corp., including the Nvidia MGX-based GB 200-NVL72 platform, and in-progress contributions from Meta.

The OCP launched this community effort in January 2024, with leadership provided by Intel, Microsoft, Google, Meta, Nvidia, AMD, ARM, Ampere, Samsung, Seagate, SuperMicro, Dell and Broadcom.

The objective of the OCP community's Open Systems for AI Initiative is to establish commonalities and develop open standards for AI clusters and the data center facilities that host them, advancing sustainability and enabling a multi-vendor supply chain that accelerates market adoption.

Nvidia has contributed its MGX-based GB 200-NVL72 rack design together with its compute and switch tray designs, while Meta is introducing its Catalina AI Rack architecture for AI clusters. The contributions by Nvidia and Meta, along with efforts by the OCP community, including other hyperscale operators, IT vendors and physical data center infrastructure vendors, will form the basis for developing specs and blueprints for tackling the shared challenges of deploying AI clusters at scale. These challenges include new levels of power density, silicon for specialized computation, advanced liquid-cooling technologies, higher-bandwidth and lower-latency interconnects, and higher-performance, higher-capacity memory and storage.

“We strongly welcome the efforts of the entire OCP community and the Meta and Nvidia contributions at a time when AI is becoming the dominant use case driving the next wave of data center build-outs. It expands the OCP Community’s collaboration to deliver large-scale HPC clusters tuned for AI. The OCP, with its Open Systems for AI Strategic Initiative, will impact the entire market with a multi-vendor open AI cluster supply chain that has been vetted by hyperscale deployments and optimized by the OCP Community. This significantly reduces the risk and costs for other market segments to follow, removes the silos, and is very much aligned with OCP’s mission to build collaborative communities that will streamline deployment of new hardware and reduce time-to-market for adoption at scale,” said George Tchaparian, CEO, OCP.

Nvidia’s contribution to the OCP community builds upon the existing OCP Open Rack v3 (ORv3) specs to support the ecosystem in deploying high compute density and efficient liquid cooling in the data center.

Nvidia’s contributions include:

- Its reinforced rack architecture that provides 19″ EIA support with expanded front cable volume, the high-capacity 1400A bus bar, an Nvidia NVLink cable cartridge, liquid-cooling blind mate multi-node interconnect volumetrics and mounting, and blind mate manifolds.

- Its 1RU liquid-cooled MGX compute and switch trays, including a modular front IO Bay design, the compute board form factors with space for a 1RU OCP DC-SCM, liquid-cooling multi-node connector volumetrics, blind mate UQD (universal quick disconnect) float mechanisms and narrower bus bar connectors for the switch trays.

“Nvidia’s contributions to OCP helps ensure high compute density racks and compute trays from multiple vendors are interoperable in power, cooling and mechanical interfaces, without requiring a proprietary cooling rack and tray infrastructure – and that empowers the open hardware ecosystem to accelerate innovation,” said Robert Ober, chief platform architect, Nvidia.

Meta’s in-progress contribution includes the Catalina AI Rack architecture, which is specifically configured to deliver a high-density AI system that supports GB 200.

“As a founding member of the OCP Foundation, we are proud to have played a key role in launching the Open Systems for AI Strategic Initiative, and we remain committed to ensuring OCP projects bring forward the innovations needed to build a more inclusive and sustainable AI ecosystem,” said Yee Jiun Song, VP engineering, Meta.

The OCP community has been actively engaged in building open large-scale HPC platforms, and the AI use case is a natural extension of the community’s activities. As the AI-driven buildout progresses, trends indicate that AI-accelerated systems will also be deployed at the edge and on-premises in enterprise data centers, to ensure low latency, data recency and data sovereignty. The OCP community solves at-scale problems discovered by hyperscale data center operators, bringing innovations such as server modularity, precise timekeeping, security, liquid cooling and specialized chiplet-based system-in-package (SiP) designs to all AI data centers.

“The market for advanced computing equipment to deliver on the promise of AI is moving rapidly, with vendors and hyperscale operators each developing their own custom solutions. While this rapid pace is essential to create a differentiated offering and maintain competitive advantage, it risks fracturing the supply chain into silos, raising costs, and reducing efficiencies. In addition, the potential environmental impact of such a large data center buildout must be attenuated. The timing is right, for collaborative innovations to drive efficiencies using less power, water and lower carbon footprint to impact the next gen of AI clusters that will be deployed by the hyperscale data center operators and also cascade to enterprise deployments. The contributions by NVIDIA and Meta and their continued engagement in the OCP community has the potential to benefit many market segments,” said Ashish Nadkarni, group VP and GM, WW infrastructure, IDC.
