Recap of 54th IT Press Tour in Colorado and California

With 9 companies: Arcitecta, BMC, Cohesity, Hammerspace, Quantum, Qumulo, Solix, StoneFly and Weka

By Philippe Nicolas | April 4, 2024 at 2:00 pm This article was written by Philippe Nicolas, initiator, conceptor and co-organizer of the event launched in 2009.

The 54th edition of The IT Press Tour took place in the Denver area, CO, and the Bay Area in California in early March. It was a good opportunity to meet and visit 9 companies, all playing in IT infrastructure, cloud, networking, data management and storage, plus AI. Executives, leaders, innovators and disruptors shared company strategy refreshes and product updates. These 9 companies are, in alphabetical order, Arcitecta, BMC, Cohesity, Hammerspace, Quantum, Qumulo, Solix, StoneFly and Weka.

Arcitecta

One of the sessions organized in Denver, with Arcitecta, was the opportunity to get a company and product update from Jason Lohrey, CEO and CTO, based in Australia, and his team, based in the USA and in Europe. Founded more than 25 years ago in Melbourne, Australia, and with a strong track record through all these years of data explosion, the firm is recognized for its expertise in advanced data management with Mediaflux, which supports a variety of data sources and exposes them through various access methods. The product is hardware agnostic, works in the data path and is coupled with a homegrown dedicated database. It excels at metadata management and discovery, with security features and strong persistent data models. Mediaflux also brings advanced data placement capabilities with tiering and migration to align the cost of storage with the value of data.

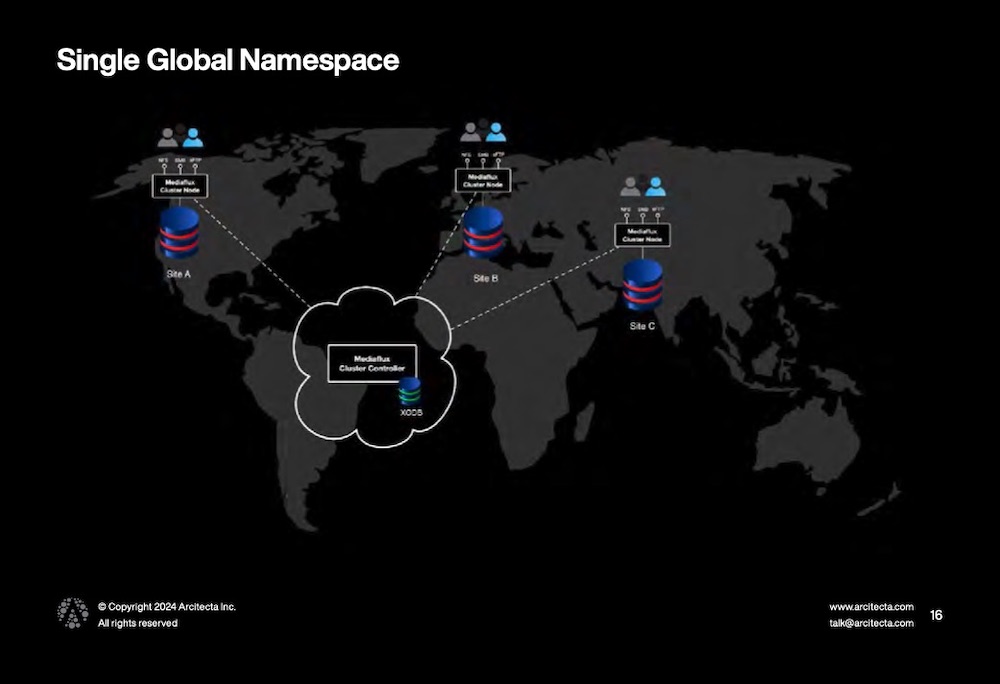

The team has designed XODB, its highly scalable data catalog with data and metadata versioning and advanced indexing, to provide fast and easy file search. Being in the data path, Mediaflux implements a global namespace that simplifies file access and navigation and therefore access persistence. The product is clearly one of the richest in the industry but, at the same time, it suffers from some go-to-market and visibility limitations. The team has been working on this for a few quarters and it seems to be paying off with new deals and partners.

Beyond network file management and virtualization, the company also developed a special module named Livewire that transfers big files over long distances at very high speed, in effect a WAN file transfer function. It received the Most Complete Architecture award at the SuperComputing Asia 2024 conference for the Data Mover Challenge. The mission was to transfer 2TB of genome and satellite data with multiple data types and sizes across servers located in various countries connected by 100Gb/s international research and education networks.

In 2023, ESRF (the European Synchrotron Radiation Facility) chose Mediaflux to glue together NAS, GPFS and Spectra Logic tape libraries via BlackPearl. The global namespace built on top of all these storage devices and sites offers the flexibility to expose files from any storage unit, write to any of them from all clients and move data across them based on workflows and other policies. Users leverage the XODB database to build an extensive metadata catalog and accelerate data access. Mediaflux is paramount at ESRF, being the heart of any file-oriented workflow. As of today, the product is deployed out of band and will move into the data path in the future.

The company has secured deals with Princeton University, the Library of Congress and NASA.

In addition to XODB, created in 2010, Lohrey and his team decided to write their own implementations of access protocols such as SMB, NFS, SFTP and S3, with others coming as well, to have better control and potentially embed some special hooks.

As data environments become larger and more complex, with huge numbers of files and very big ones, the aim of Arcitecta is to control, optimize and reduce response time for file access, and it is a real mission. The idea was to build XODB as the central place for metadata, horizontal across any file-oriented storage unit, S3 included. At that scale, running any OS-based command becomes a nightmare and doesn't match the need. Everything must be controlled by this central database, which delivers a very fast response time whatever the volume of files.

For 2024, the team is focused on delivering Mediaflux Applications for Web, leveraging JavaScript, to address specific needs and vertical requirements. And, as it is impossible to avoid, AI will land in the product with the support of vectors in XODB.

BMC

We also met BMC Software at its Innovation Labs in Santa Clara, CA. It was the opportunity to meet Ayman Sayed, president and CEO, and several members of his executive team to get an update on the company and product strategy around AI, automated IT operations, mainframe and open systems solutions.

Scrutinizing the market closely, the company has demonstrated real agility over the last few years with new solutions and specific acquisitions tailored to new IT challenges. This is obviously the case for its Helix and AMI product lines in a deeply and widely connected IT world.

The team is clearly positioned in the IT operations domain, already ranked as a leader by several analysts, with several product iterations: AIOps, ServiceOps, DevOps, DataOps and AutonomousOps.

Ram Chakravarti, CTO at BMC, spent time explaining how Generative AI feeds BMC's strategy. Users and vendors alike have been convinced of the importance of data for a few decades, and it is even more critical with the explosion and ubiquity of AI. It also means integrating or connecting the various Ops-oriented solutions thanks to AI, as data is the common element across all of them.

For AIOps, the company is uniquely positioned with solutions for open systems and mainframes, but also from cloud to edge. The Helix product line targets AIOps with an observability role coupled with AI engines, through a new product iteration, HelixGPT for AIOps.

This new core Helix solution also targets ServiceOps with HelixGPT for ServiceOps.

In DevOps, Helix Control-M, Jobs-as-Code, HelixGPT for Change Risk and Helix Change Management fit the need, and the team is preparing BMC AMI DevX with GenAI for code modernization and connectors.

In DataOps, one of the stats provided by the firm stressed that almost 50% of big data projects never moved to production. Yet 40% of firms are managing data as a business asset, confirming that IT is central to any business. No surprise here, but IT is there not just to support the business but to accelerate it and gain market share, making it a key and sometimes paramount element. The vendor promotes a horizontal approach with an orchestration layer on top of another key component, the metadata management piece. This is achieved with Helix Control-M for Data Orchestration, DataOps and also BMC AMI Cloud. Helix Control-M with GenAI for no-code workflow generation will be released soon.

Finally, AutonomousOps is covered by Helix Control-M for Automation and HelixGPT for Virtual Agent.

Clearly, Control-M is at the heart of Helix to provide a comprehensive automation approach and, coupled with GenAI, will be able to deliver a truly complete experience and new value. On the mainframe side, AMI GenAI, AMI AIOps and AMI Cloud cover the modernization of the domain. This is complemented by the AMI Cloud Product Suite with AMI Cloud Data, Cloud Vault and Cloud Analytics, which are the result of the Model9 acquisition in 2023, one year after our visit to that software company in Israel.

The company is convinced that AI is the perfect companion of data, but it has to be deployed, configured and used in the right way as many divergences could happen. It means that real expertise is needed.

Cohesity

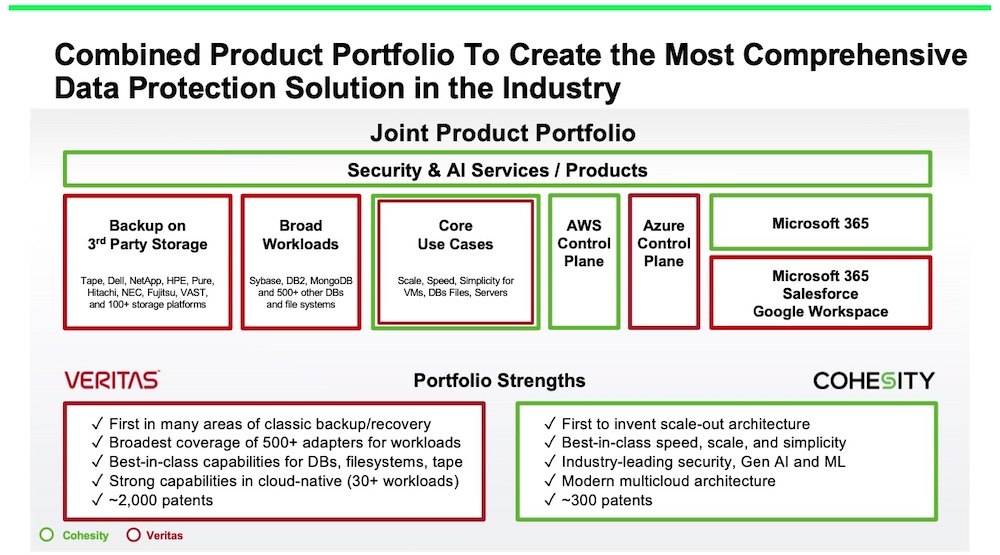

This session was exceptional as the not-yet-effective merger between Cohesity and Veritas Technologies is expected to be finalized before the end of the year. We spoke with Sanjay Poonen, CEO, and some members of his executive team, including dedicated time with CFO Eric Brown, at this special moment for the storage and data management market segment.

Poonen confirmed that talks between the 2 companies started several months ago and took time as the deal required some financial developments.

As we wrote recently when this big announcement was made, all 3 companies – The Carlyle Group, Veritas Technologies and Cohesity – suffer in their domain.

First, Carlyle, with its surprising 2015 investment of $8 billion to break the link with Symantec and make Veritas a separate business. Since then, the financial firm has been looking to make a gain on this without real, visible success, and we're approaching the famous 10-year threshold.

Second, Veritas Technologies, in the enterprise backup and recovery software business with an impressive and faithful NetBackup installed base, didn't appear able to find a new blue ocean. In the backup space, it had difficulties delivering cloud, container and SaaS application backup solutions on time, which led it to first resell, distribute and integrate partners' solutions, illustrating its inertia in our demanding agile world. It then offered something, but probably too late, and at the same time we saw Cohesity, Rubrik, HYCU and a few others take off with a modern data protection approach. Veritas also missed the object storage opportunity because of the frozen Symantec period: it started a project, delivered a product, even changed the product name and finally gave up. VxVM and VxFS, still the reference in disk and file system management with lots of fans, became a bit confidential, especially in the modern IT era, and the cluster technology had a flat life. What a shame, but they hit a wall.

Third, Cohesity, one of the recent gems in data management software as listed in the Coldago Gem list and a storage unicorn for several years, also had some difficulties shifting into the next gear. It raised $805 million in 7 rounds at an impressive valuation, but what could the next step be when the valuation is so high? An acquisition is tough, and this valuation can protect you but also prevent you from making moves. Nobody was ready to acquire Cohesity for $7 billion, $5 billion or even $3 billion. An IPO also seems delicate given the uncertain business climate. For our readers, Cohesity filed a confidential S-1 in December 2021 and never made progress, at least from what we can see. So they also hit a wall, for different reasons.

But all 3 entities had a serious motivation to create a deal and make it happen to save all 3 destinies. The transaction is valued at over $3 billion, which requires Cohesity to raise about $1 billion in equity and $2 billion in debt. But $3 billion is far below what Carlyle paid for Veritas in a deal announced in 2015 and closed in 2016.

The story has not ended for the investment firm as it stays in the Cohesity capital structure and at the board level. As a next step, we could imagine an IPO allowing Carlyle to fully monetize an investment started almost 10 years ago. And they made it: Cohesity will own the Veritas Technologies NetBackup business, while the rest (Storage Foundation, the cluster product and Backup Exec) will sit under a new entity named DataCo. In the end, Cohesity will be the name of the combined entity, its CEO will be Sanjay Poonen, the current CEO of Cohesity, and Veritas CEO Greg Hughes will be a board member. So Veritas would have found a path, and Carlyle would have made some money on this deal while still owning a portion of the new Cohesity, ready to make more when the IPO becomes official. Finally, the firm will become a public data management software giant. The dream comes true for Mohit Aron, with obstacles and a helping hand along the way, but he would have done it.

Hammerspace

The company took advantage of this session in Denver, CO, to refresh the group on the recent HyperScale NAS announcement and pre-announce a few other upcoming elements.

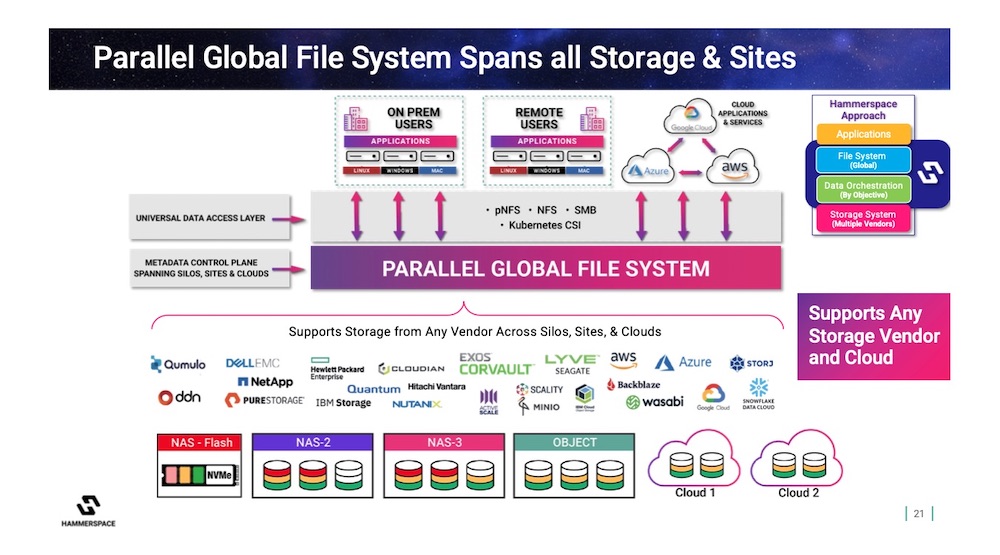

The first dimension of the company's solutions is the ability to span file-oriented storage units and expose a single global view whatever the back-end: appliance, software or even cloud entities. The company claims to provide the best of both worlds, HPC and scale-out NAS, in one model with HyperScale NAS, and confirms that parallelism is key to delivering high throughput and bandwidth at scale, especially for AI. So the team has identified key elements in the domain and illustrates once again that an asymmetric architecture (separate, multiple metadata and data servers) coupled with parallelism is able to deliver on the performance promises.

This is illustrated by the case of training large LLMs and GenAI models, which requires really high performance. The firm was able to demonstrate and deliver a dedicated architecture fueled by pNFS, with 1,000 NFSv3 storage entities controlled by a set of highly available metadata servers, all connected to a farm of 4,000 computing clients, each equipped with 8 Nvidia GPUs. The configuration shown is impressive, providing 12.5TB/s based on standards-based components, which seemed to be a critical criterion for that user. For some of our readers, pNFS has existed in the NFS protocol specification for a very long time, probably more than 15 years, and appeared in NFSv4.1. Some of us remember the pNFS problem statement paper published by Garth Gibson in July 2004. This active Hammerspace play in the domain leverages all the work done by Tonian Systems, founded in 2010, which developed a software metadata director and was acquired by Primary Data in 2013. The latter morphed into Hammerspace in 2018 and still operates under this name.
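To make the asymmetric idea concrete, here is a minimal toy sketch in Python. It is not the pNFS protocol and not Hammerspace code: the class and function names (`MetadataServer`, `DataServer`, `write_file`, `read_file`) and the round-robin striping are assumptions chosen for illustration. It only shows the principle that a client asks a metadata service for a layout, then fetches stripes from several data servers in parallel, so file data never flows through the metadata service.

```python
from concurrent.futures import ThreadPoolExecutor


class DataServer:
    """Hypothetical data server: stores stripes by key."""

    def __init__(self):
        self.chunks = {}

    def write(self, key, data):
        self.chunks[key] = data

    def read(self, key):
        return self.chunks[key]


class MetadataServer:
    """Hypothetical metadata server: holds layouts only, never file data."""

    def __init__(self, data_servers, stripe_size):
        self.data_servers = data_servers
        self.stripe_size = stripe_size
        self.layouts = {}

    def create(self, name, size):
        # Round-robin placement of stripes across data servers (an assumption).
        count = (size + self.stripe_size - 1) // self.stripe_size
        layout = [(i % len(self.data_servers), f"{name}#{i}") for i in range(count)]
        self.layouts[name] = layout
        return layout

    def get_layout(self, name):
        return self.layouts[name]


def write_file(mds, name, payload):
    """Ask the metadata server for a layout, then write stripes to data servers."""
    layout = mds.create(name, len(payload))
    for i, (server_idx, key) in enumerate(layout):
        start = i * mds.stripe_size
        mds.data_servers[server_idx].write(key, payload[start:start + mds.stripe_size])


def read_file(mds, name, workers=4):
    """Fetch the layout once, then read all stripes in parallel, in order."""
    layout = mds.get_layout(name)

    def fetch(entry):
        server_idx, key = entry
        return mds.data_servers[server_idx].read(key)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so stripes are reassembled correctly.
        return b"".join(pool.map(fetch, layout))
```

In this toy model, adding data servers spreads the stripes more widely, which is the intuition behind scaling throughput with the number of storage entities.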

A second example was given with an existing 32-node scale-out NAS exposing files to 300 clients. Hammerspace brings a pNFS configuration and doubles the performance, making it able to support 600 clients. The team now claims a self-certification with Nvidia GPUDirect, as Nvidia only validates configurations with hardware-based products. This is the first dimension of Hammerspace: everything relates to pNFS.

The second domain is covered by GDE for Global Data Environment and its data orchestration capability that provides a global view to local files across sites, data-centers, departments, file systems and file servers or NAS.

The story continues as secondary storage has been added via an S3-to-Tape layer and partnerships with Grau Data, PoINT Software & Systems and QStar Technologies. These 3 references, well deployed and adopted by the market, allow Hammerspace to go further in its comprehensive data model approach. And the team even goes beyond the tape world with a partnership with Vcinity to provide fast file transfer over long distances, with Vcinity units on both sides and an RDMA connection in between.

We expect some news soon from the company, including on a long-standing promise from David Flynn about a client access protocol, and we hope the Rozo Systems acquisition at the end of 2022 will also bring new differentiators for users.

The firm is very active on the market and growing fast addressing different needs and different verticals and clearly gained lots of visibility thanks to clever marketing operations, activities and strategy. It would make sense for our readers to also check this article that clarifies a few things in file systems.

Hammerspace was elected as a challenger in the cloud file storage category and a specialist in the high performance file storage one in the last Coldago Research Map 2023 for File Storage.

Quantum

Quantum, which we also met in Colorado, updated the group on its strategy and gave us a product update about Myriad, its recent scale-out NAS software, ActiveScale, its object storage software, and DXi, its dedupe backup appliances.

As already mentioned a few times, the impact of Jamie Lerner, CEO, is highly visible as Quantum today is very different from the one we knew for many years. It's all about software, with SDS models in all product departments fueled by targeted external growth and of course key internal developments. It was also a key moment to understand where the company is going in terms of AI integration, as it is a common end-user request. And it seems to converge with the strategy Lerner started when he joined the company in mid-2018.

Beyond AI, the firm's product lines continue to address primary and secondary storage needs with, respectively, Myriad, StorNext and video surveillance solutions, then ActiveScale, DXi and of course tape libraries. Except for the last element, all of these are essentially software, and even for tape the team has added serious functions recently. One other common piece across all these products is flash, which has been a market reality for several years for primary storage of course, but also for secondary storage as RPO and RTO are still 2 key metrics.

To cover AI demands, the firm wishes to position Myriad, optionally coupled with ActiveScale when data volumes are huge, as the data lake feeding the data preparation, training and finally inference phases.

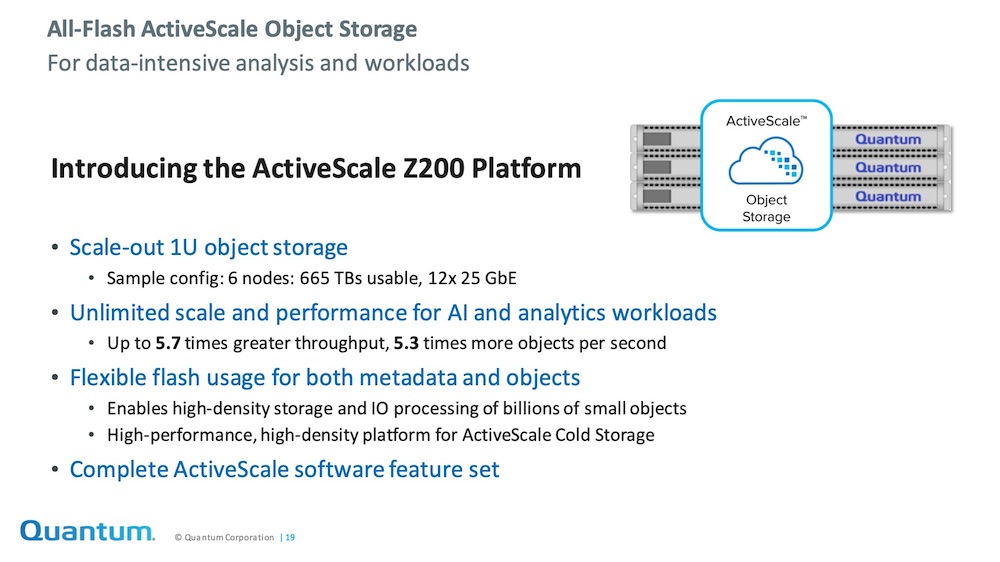

On the high capacity and unstructured data side, beyond the cold data and storage strategy unveiled several months ago with new major features such as object on tape, RAIL and 2D erasure coding and some real successes, ActiveScale continues to evolve and sign interesting deals. The one with Amidata in Australia, with 3 geos across the country, perfectly illustrates the high resiliency, distribution and promises of the solution. The product team also just introduced the Z200, an all-flash model in 1U increments, starting with 3 nodes and 430TB and able to scale to unlimited active and cold objects and capacity. It's worth mentioning that the company acquired ActiveScale from Western Digital for just $2 million in 2020, as mentioned on page 42 of the 10-K 2020 annual report, and this investment is largely amortized.

On the file storage side, the Myriad engineering team has made some progress with the coming POSIX client, a parallel piece of software inspired by the StorNext one, and the validation of Nvidia GPUDirect, key for AI environments. We were surprised by this direction as Myriad was designed to be a full NAS solution and adding this client flavor breaks this philosophy a bit. But we understand that performance at the client level requires parallelism in access and data distribution. Again, for highly demanding workloads this is paramount.

The company also plans to update some other products and will announce this in a few weeks.

Quantum was elected as a leader in the last Coldago Research Map 2023 for Object Storage and a challenger in the high performance file storage in the recent Coldago Research Map 2023 for File Storage.

Qumulo

The Qumulo session, organized at Kleiner Perkins in Menlo Park, CA, gave us the opportunity to again meet Kiran Bhageshpur, CTO, after several meetings with him when he was CEO of Igneous. The story is different here but still is centered on unstructured data.

Qumulo made a significant turn several years ago, leaving appliance and on-premises models to promote a full software approach and adopt the cloud. It gives the company the ability to run anywhere and deliver on the promises of SDS. This is why it chose to run on any cloud and solidified server partnerships with Arrow, Supermicro, Cisco, Dell, Fujitsu and HPE among others. As of today, the installed base reaches approximately 1,000 customers and covers all industries.

The company's offering on Azure, known as ANQ for Azure Native Qumulo, is strategic: the solution brings all aspects of the product users have known for years on-premises, but at a really attractive cost. Announced in November 2023, it signed 26 customers in 12 weeks, meaning more than 2 per week, a very good ratio for such a solution.

The team promotes a hybrid approach with on-premises deployments coupled with public cloud ones, all instances being connected, exchanging data and providing a transparent user experience. Promoting the ability to run and scale anywhere, the company also tuned its pricing model to sell a base, then increments of course but rent ephemeral resources when some peak demands occur. The Azure solution is a full SaaS offering with some recent additions like hot and cold data, a progressive pay-as-you-go pricing, claiming to deliver a better TCO than on-premises configurations and even lower than other Azure file oriented services. This new option illustrates very well the elasticity of the product and we expect similar possibilities on other clouds soon.

Speaking about hot and cold data, the selection of files and associated migration would become a key feature and require additional capabilities inside a Qumulo cluster, potentially across clusters and why not coupled with external file servers for hot and/or cold data. And at the same time, even if the firm currently collaborates with a few partners in the domain, it should announce a file tiering and management official partnership in a few weeks now to be the internal engine for such data mobility needs.

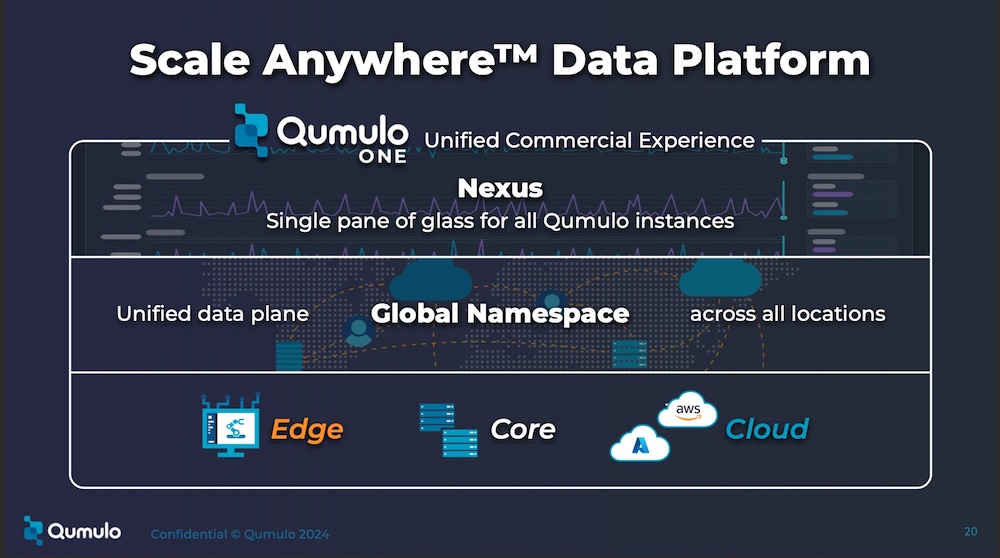

Among the recent key product news, we need to highlight the global namespace capability and Nexus, a global console to manage all deployed instances, wherever they run. On the GNS aspect, the first iteration exposes all files and access from all sites, but with propagation from one site. It will be extended with multi-site writing capabilities in the future to cover more use cases.

We understand that the firm wishes to support AI workloads, which is very difficult to avoid today. The product supports NFS over RDMA and we didn't see directions to support Nvidia GPUDirect yet. So it appears that the company adopts a dual strategy here, providing storage for AI and adding AI in storage to boost performance and user experience.

The vendor was elected as a leader in the high performance file storage category and a challenger in the enterprise file storage one in the last Coldago Research Map 2023 for File Storage.

Solix

Founded in 2002, Solix continues to operate and grow under the radar in the enterprise data management space, coming from the structured data need. Historically on-premises, the team has adopted the cloud and now supports Azure, GCP, AWS, Oracle and IBM clouds.

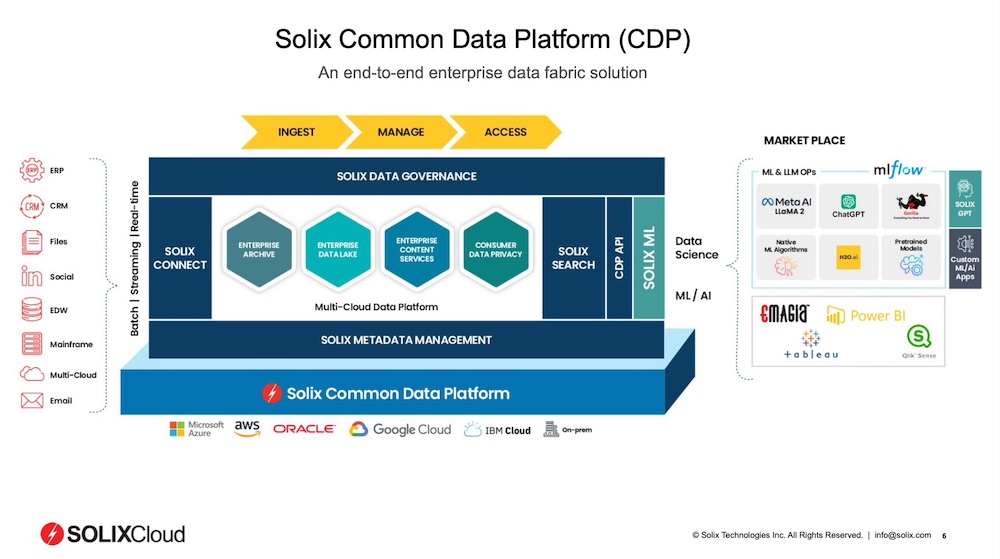

The strategy relies on the company’s CDP, for Common Data Platform, that supports plenty of data sources with hundreds of connectors with advanced metadata management and data governance.

Strong in structured data, the team has also jumped into unstructured data and thus offers a pretty comprehensive data management approach. It added SolixCloud, which relies on the 5 public clouds listed above, whatever the nature of the data exposed and processed by ERPs, CRMs, EDWs, email, communications and social applications, or just files. SolixCloud couples 4 key functions: enterprise archiving, enterprise data lake, enterprise content services and consumer data privacy.

In enterprise archiving, Solix is one of the rare players to offer ILM for structured data, significantly reducing TCO and boosting hot primary data sets. It's pretty easy to imagine a configuration with 2 databases, one for production and one for archiving, the first always kept small with active data and the second accumulating data from the primary. In practice, it means running SQL queries on the hot database with good performance as it is kept small and optimized, but also running similar queries on the archiving instance without impacting the primary one, and finally the possibility to merge results. The company remains one of the few independent players in the domain; others have been acquired, like Applimation by Informatica in 2009, OuterBay by HP in 2006, Princeton Softech by IBM in 2007 and even RainStor by Teradata in 2014. Of course we find Delphix or Dell as well, and OpenText with its various acquisitions.
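The two-database pattern described above can be sketched generically with Python's built-in `sqlite3` module. This is only an illustration of the idea, not Solix's implementation: the `orders` table, its columns, the sample rows and the year-based cutoff are all hypothetical. Old rows are moved from the production database to an attached archive database, and a `UNION ALL` query merges results from both.

```python
import sqlite3


def build_demo():
    """Create a production DB with an attached archive DB (both in memory)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("ATTACH DATABASE ':memory:' AS archive")
    schema = "(id INTEGER PRIMARY KEY, year INTEGER, amount REAL)"
    conn.execute(f"CREATE TABLE main.orders {schema}")
    conn.execute(f"CREATE TABLE archive.orders {schema}")
    # Hypothetical sample data.
    conn.executemany(
        "INSERT INTO main.orders VALUES (?, ?, ?)",
        [(1, 2019, 100.0), (2, 2021, 250.0), (3, 2024, 75.0), (4, 2024, 120.0)],
    )
    conn.commit()
    return conn


def archive_older_than(conn, cutoff_year):
    """Move rows older than cutoff_year to the archive in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO archive.orders SELECT * FROM main.orders WHERE year < ?",
            (cutoff_year,),
        )
        cur = conn.execute("DELETE FROM main.orders WHERE year < ?", (cutoff_year,))
    return cur.rowcount  # number of rows moved out of production


def total_by_year(conn):
    """Run the same aggregate over production and archive, merged."""
    return conn.execute(
        """
        SELECT year, SUM(amount) FROM (
            SELECT year, amount FROM main.orders
            UNION ALL
            SELECT year, amount FROM archive.orders
        ) GROUP BY year ORDER BY year
        """
    ).fetchall()
```

After `archive_older_than(conn, 2023)`, queries against `main.orders` only touch the small active set, while `total_by_year` still sees the full history across both databases.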

For the enterprise data lake, the idea is to be a central integration point and prepare data for specific applications such as ElasticSearch, Snowflake, Redshift or TensorFlow.

On the AI side, the CDP is used to feed Solix GPT and other customer AI/ML applications. GPT offers a new interface to all data prepared and controlled by CDP.

StoneFly

Founded a few decades ago in California, StoneFly is very confidential, operating under the radar, addressing SMB and SME market segments. The team has actively participated in the development of iSCSI and they even own the iscsi.com domain.

The company designs SAN, NAS, unified storage and HCI products and, more recently, object storage, all based on commodity servers running its StoneFusion OS, currently in its 8th major iteration. It extended this with backup/recovery and DR solutions fueled by partnerships with Acronis, Commvault, HYCU, Veeam and VMware, and with cloud providers such as AWS and Azure.

The philosophy of the company is to offer dedicated appliances targeting specific use cases and this model seems to be a successful one with more than 10,000 installations worldwide.

Surfing on the iSCSI wave, the team initially released some IP oriented SAN storage units and later more classic storage offerings. It particularly insisted on the resiliency of deployed systems with integrated high availability, BC and DR. It means the development of key core features such as fine grain replication, clustering and RAID software layers that can be combined and configured to support pretty large data environments. The engineering group also has designed some scale-up and scale-out solutions with some disaggregated models.

On the hypervisor side, the firm is agnostic working with VMware of course but also Hyper-V, KVM, Citrix or their own StoneFly Persepolis.

With the pressure of ransomware, the company introduced an air gap option that can be added to existing solutions. The latest product news covers the Secure Virtualization Platform, with dual hardware controllers that are isolated and set up independently. The first controller supports production and the second operates as a target receiving data from the first instance, even within the same chassis.

Pricing is very aggressive, which explains why StoneFly is a commercial success, having been profitable for many years without any need to be more visible.

Weka

The new session at the firm’s HQ in Campbell, CA, reserved some good news for the group.

The company clearly leads the modern data platform pack for technical, scientific and advanced workloads present in HPC and AI domains. It historically designed a parallel file system named WEKAfs leveraging several key industry innovations with flash, NVMe, containers, RDMA, networking and CPU of course. Its unique model really shook the established positions and it has been adopted by many key large users. At the same time, it has also been chosen by enterprises as the solution appeared to be well aligned with commercial needs at scale.

First validated on-premises, the product really works everywhere, on-premises of course but also in the cloud, being a pure software approach that is fully hardware agnostic.

Again, Weka has an interesting trajectory with real performance numbers seen by users and validated by official public bodies such as, recently, SPEC. These recent results are spectacular in various domains, illustrating that the firm's platform is really universal. All these official numbers are publicly available on the SPEC web site.

Natively built around a POSIX client, the product also offers NFS with Ganesha, SMB with probably the best SMB stack on the market today, coming from Tuxera, and an additional S3 API. This multi-protocol flavor gives credibility to Weka in the U3 (Universal, Unified and Ubiquitous) storage model. The other way to present this is its ability to offer multiple access methods, support multiple workloads, consolidate usages, connect to various storage entities…

It is also perfectly illustrated by a real and very demanding environment, the famous U2 show at Sphere in Las Vegas, NV, with an impressive configuration to sustain high throughput. This deal was secured by Hitachi Vantara, which OEMs the Weka Data Platform. To list a few of these huge numbers, WEKA demonstrated 16k video resolution with a 160,000-square-foot LED display, 167,000 independent audio channels and 412GB/s of video streaming data for 1.5PB. Beyond Hitachi, Weka also partners with resellers like Dell, HPE, Lenovo and Supermicro, and with Cisco for special channel activities.

On the cloud side, new players accelerate with GPU clouds, GPU-as-a-service or AI-as-a-service and the firm has signed with some of them like N3Xgen cloud.

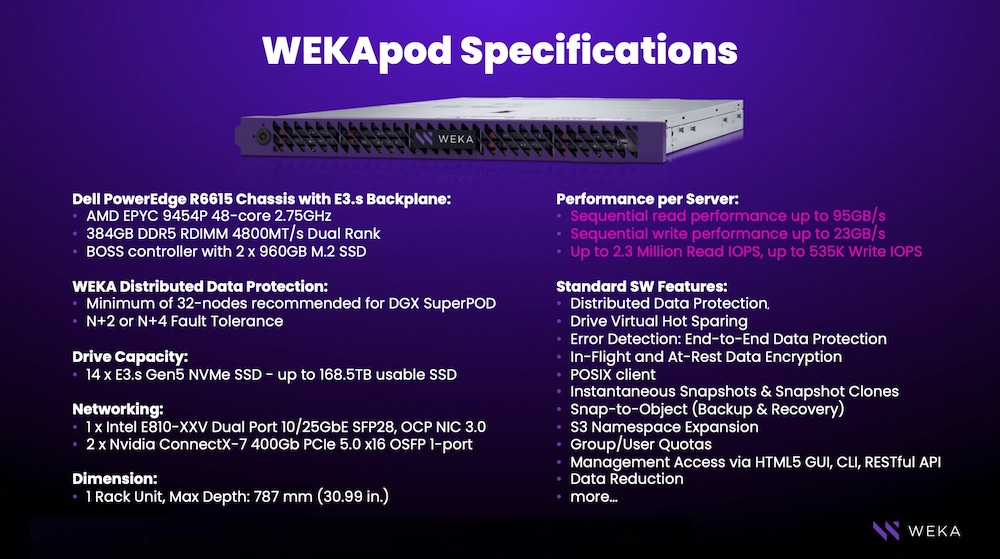

To continue on this, the company, already validated by Nvidia, has announced the WEKApod, a hardware platform running Weka software. With that, Nvidia officially certifies the solution for SuperPOD with H100 systems, and again performance numbers are impressive. Nvidia has also been an investor in WEKA since the Series C round in 2019. Each hardware server is a 1U Dell R6615 machine delivering more than 95GB/s in read bandwidth and 23GB/s in write, with 2.3 million IO/s in read mode. A cluster starts at a minimum of 8 nodes, giving 1PB of usable capacity, 765GB/s and 18.3 million IO/s, and for the SuperPOD configuration 32 nodes are recommended to optimize data distribution with the firm's distributed data protection. This configuration demonstrates density advantages and is able to replace more classic approaches with a single rack in write or even 1/4 rack in read mode.
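As a quick sanity check of these figures, the following Python sketch multiplies the per-node numbers quoted above by the node count. Perfectly linear scaling is an assumption made here for illustration, not a vendor claim, and the names `NodeSpec` and `cluster_estimate` are hypothetical. With 8 nodes the naive product gives 760GB/s in read and 18.4 million read IO/s, close to the 765GB/s and 18.3 million IO/s quoted; the small gaps are consistent with rounded per-node values ("more than 95GB/s").

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures quoted in the article (rounded values)."""
    read_gbps: int = 95          # GB/s read bandwidth
    write_gbps: int = 23         # GB/s write bandwidth
    read_iops: int = 2_300_000   # IO/s in read mode


MIN_NODES = 8  # minimum cluster size mentioned in the article


def cluster_estimate(nodes, spec=NodeSpec()):
    """Naive estimate assuming perfectly linear scaling (an assumption)."""
    if nodes < MIN_NODES:
        raise ValueError(f"a cluster needs at least {MIN_NODES} nodes")
    return {
        "read_gbps": nodes * spec.read_gbps,
        "write_gbps": nodes * spec.write_gbps,
        "read_iops": nodes * spec.read_iops,
    }
```

Calling `cluster_estimate(32)` gives the same naive projection for the 32-node configuration recommended for SuperPOD; real-world numbers depend on network, data protection overhead and workload.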

As the platform becomes more and more universal with proven performance numbers, we anticipate new use cases and verticals in the coming months.

Weka was elected as a leader in the high performance file storage category in the last Coldago Research Map 2023 for File Storage.
