GigaOm Radar for High-Performance Cloud File Storage v4.0

Leaders NetApp, Hammerspace, Qumulo, Weka, Panzura and Nasuni selected and analyzed

This is a Press Release edited by StorageNewsletter.com on November 16, 2023 at 2:02 pm

This market report, published on November 8, 2023, was written by Max Mortillaro, an independent industry analyst with a focus on storage, multi-cloud and hybrid cloud, data management, and data protection, and Arjan Timmerman, an independent industry analyst and consultant with a focus on helping enterprises on their road to the cloud (multi/hybrid and on-prem), data management, storage, data protection, network, and security, for GigaOm.

GigaOm Radar for High-Performance Cloud File Storage v4.0

An Assessment Based on the Key Criteria Report for Evaluating Cloud File Storage Solutions

1. Summary

File storage is a critical component of every hybrid cloud strategy, and enterprises often prefer it over block and object storage, in particular for big data, AI, and collaboration. We therefore decided to focus our assessment of the cloud-based file storage sector on two areas: on big data and AI in this report on high-performance cloud file storage; and on collaboration in our companion Radar on distributed cloud file storage.

Cloud providers didn’t initially offer file storage services, and this spurred multiple storage vendors to jump in with products and services to fill that gap. The requirements that emerged during the Covid-19 pandemic are still relevant: with the increasing need for data mobility and the large number of workloads moving across on-premises and cloud infrastructures, file storage is simply better – easier to use and more accessible than other forms of storage.

Lift-and-shift migrations to the cloud are increasingly common scenarios, and enterprises often want to keep the environment as identical as possible to the original one. File storage is a key factor in accomplishing this, but simplicity and performance are important as well.

File systems still provide the best combination of performance, usability, and scalability for many workloads. They are still the primary interface for the majority of big data, AI/ML, and HPC applications, and today they usually offer data services such as snapshots to improve data management operations.

In recent years, file systems also have become more cloud-friendly, offering better integrations with object storage, which enables better scalability, a better balance of speed and cost, and advanced features for data migration and disaster recovery.

Both traditional storage vendors and cloud providers now offer file services or solutions that can run both on-premises and in the cloud. Their approaches are different, though, and it can be very difficult to find a solution that both meets today’s needs and can evolve to face future challenges. Cloud providers generally offer the best integration across the entire stack but also raise the risk of lock-in, and services are not always the best in class. On the other hand, solutions from storage vendors typically provide better flexibility, performance, and scalability, but they can be less efficient or lack the level of integration offered by an end-to-end solution.

This is our 4th year evaluating the cloud file space in the context of our Key Criteria and Radar reports. This report builds on our previous analysis and considers how the market has evolved over the last year.

This Radar report highlights key high-performance cloud file storage vendors and equips IT decision-makers with the information needed to select the best fit for their business and use case requirements. In the corresponding GigaOm report, Key Criteria for Evaluating Cloud File Storage Solutions, we describe in more detail the capabilities and metrics that are used to evaluate vendors in this market.

All solutions included in this Radar report meet the following table stakes – capabilities widely adopted and well implemented in the sector:

- Reliability and integrity

- Basic security

- Access methods

- Snapshots

- Kubernetes support

2. Market Categories and Deployment Types

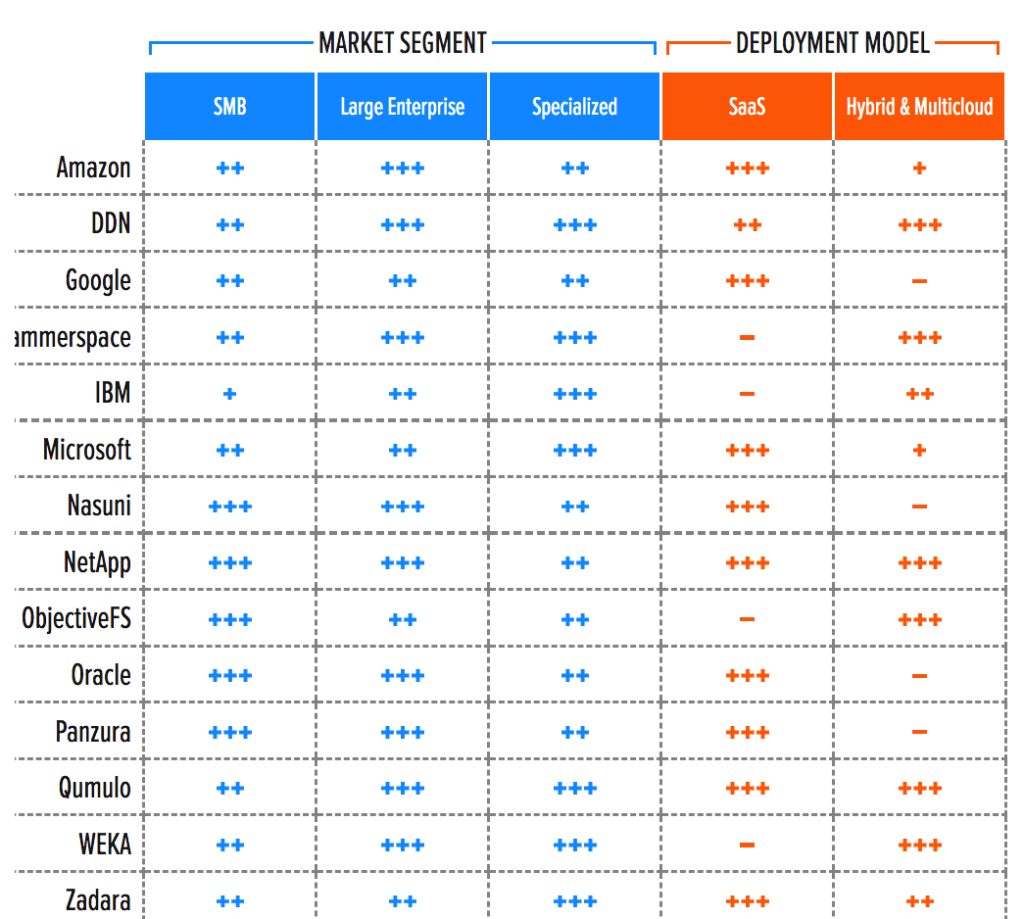

For a better understanding of the market and vendor positioning (Table 1), we assess how well high-performance cloud file storage solutions are positioned to serve specific market segments and deployment models.

For this report, we recognize 3 market segments:

- SMB: In this category, we assess solutions on their ability to meet the needs of organizations ranging from small businesses to medium-sized companies. Also assessed are departmental use cases in large enterprises where ease of use and deployment are more important than extensive management functionality, data mobility, and feature set.

- Large enterprise: Here, offerings are assessed on their ability to support large and business-critical projects. Optimal solutions in this category will have a strong focus on flexibility, performance, data services, and features to improve security and data protection. Scalability is another big differentiator, as is the ability to deploy the same service in different environments.

- Specialized: Optimal solutions will be designed for specific workloads and use cases, such as big data analytics and high-performance computing.

In addition, we recognize 2 deployment models for solutions in this report:

- SaaS: The solution is available in the cloud as a managed service. Often designed, deployed, and managed by the service provider or the storage vendor, it is available only from that specific provider. The big advantages of this type of solution are its simplicity and the integration with other services offered by the cloud service provider.

- Hybrid and multicloud: These solutions are meant to be installed both on-premises and in the cloud, allowing customers to build hybrid or multicloud storage infrastructures. Integrating with a single cloud provider could be limited compared to the other option and more complex to deploy and manage. On the other hand, these solutions are more flexible, and the user usually has more control over the entire stack with regard to resource allocation and tuning. They can be deployed in the form of a virtual appliance, like a traditional NAS filer but in the cloud, or as a software component that can be installed on a Linux VM – that is, a file system.

Table 1. Vendor Positioning: Market Segment and Deployment Model

+++ Exceptional: Outstanding focus and execution

++ Capable: Good but with room for improvement

+ Limited: Lacking in execution and use cases

– Not applicable or absent

3. Key Criteria Comparison

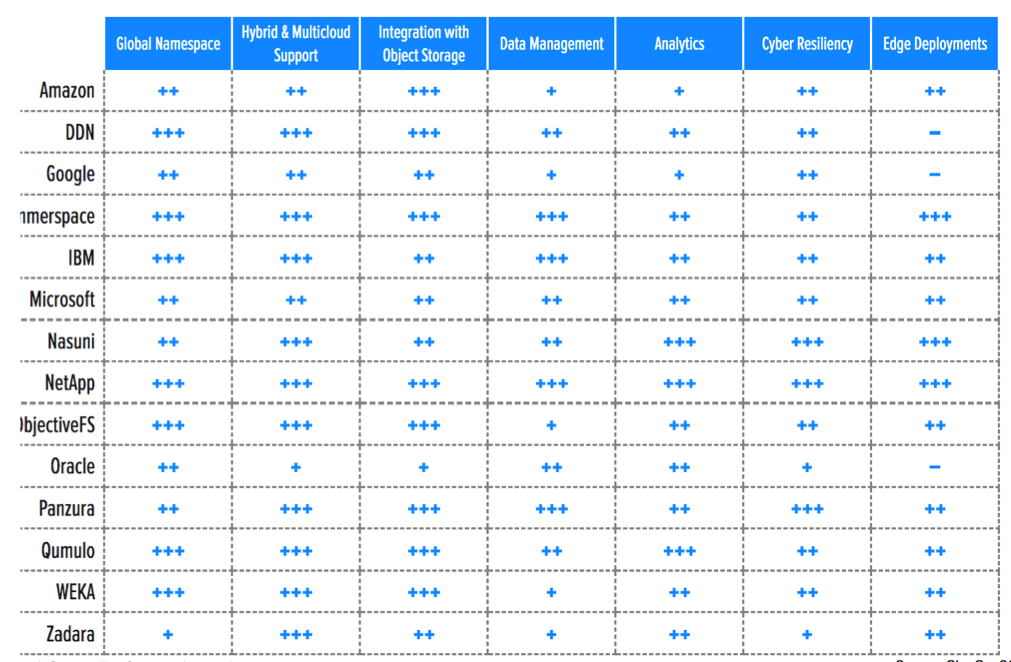

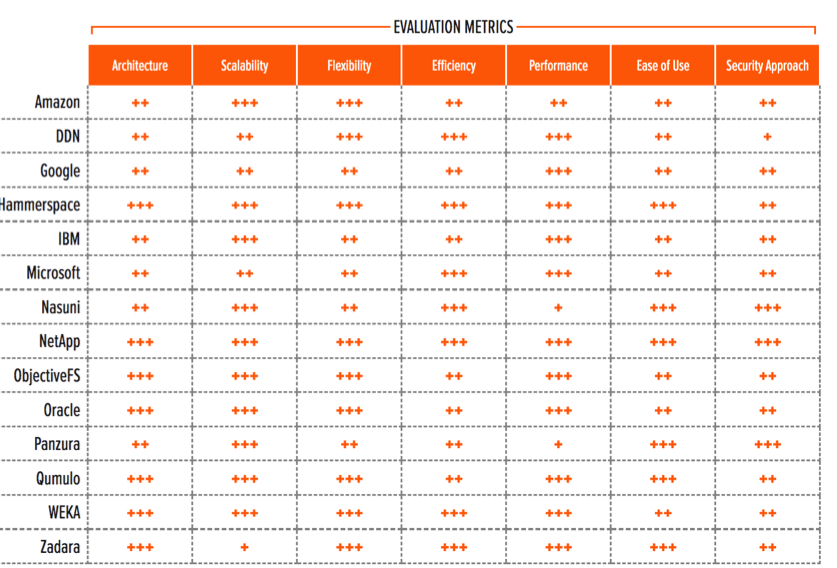

Building on the findings from the GigaOm report, Key Criteria for Evaluating Cloud File Storage Solutions, Tables 2, 3, and 4 summarize how each vendor included in this research performs in the capabilities we consider differentiating and critical in this sector.

Key criteria differentiate solutions based on features and capabilities, outlining the primary criteria to be considered when evaluating a cloud file storage solution.

Evaluation metrics provide insight into the non-functional requirements that factor into a purchase decision and determine a solution’s impact on an organization.

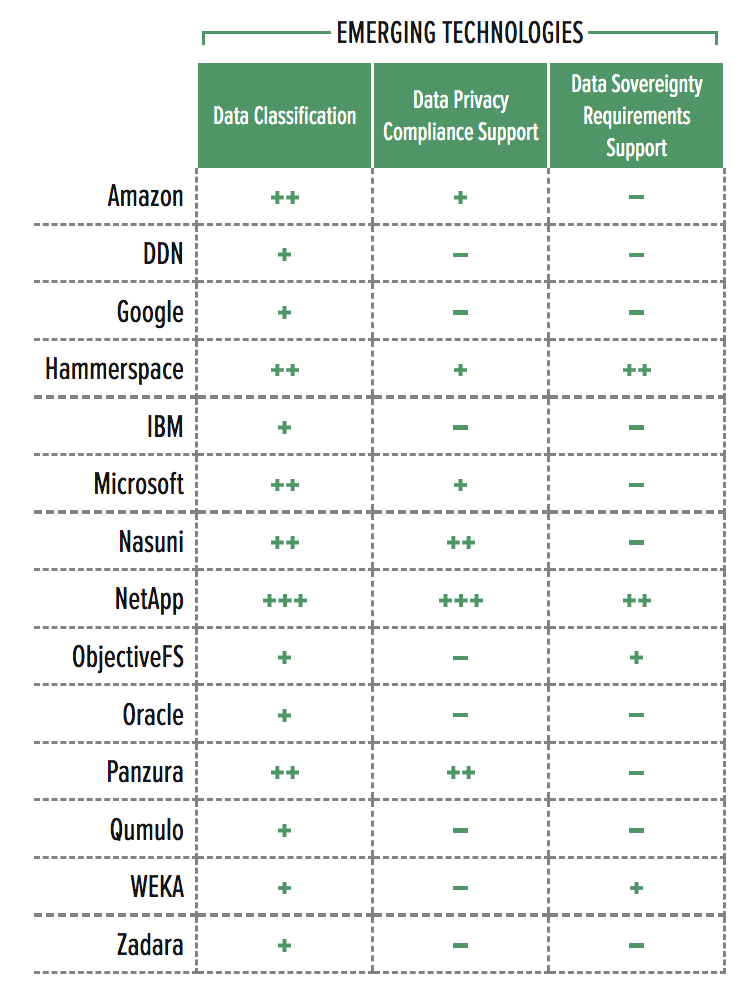

Emerging technologies show how well each vendor takes advantage of technologies that are not yet mainstream but are expected to become more widespread and compelling within the next 12 to 18 months.

The objective is to give the reader a snapshot of the technical capabilities of available solutions, define the perimeter of the market landscape, and gauge the potential impact on the business.

Table 2. Key Criteria Comparison

+++ Exceptional: Outstanding focus and execution

++ Capable: Good but with room for improvement

+ Limited: Lacking in execution and use cases

– Not applicable or absent

Table 3. Evaluation Metrics Comparison

+++ Exceptional: Outstanding focus and execution

++ Capable: Good but with room for improvement

+ Limited: Lacking in execution and use cases

– Not applicable or absent

Table 4. Emerging Technologies Comparison

+++ Exceptional: Outstanding focus and execution

++ Capable: Good but with room for improvement

+ Limited: Lacking in execution and use cases

– Not applicable or absent

By combining the information provided in the tables above, the reader can develop a clear understanding of the technical solutions available in the market.

4. GigaOm Radar

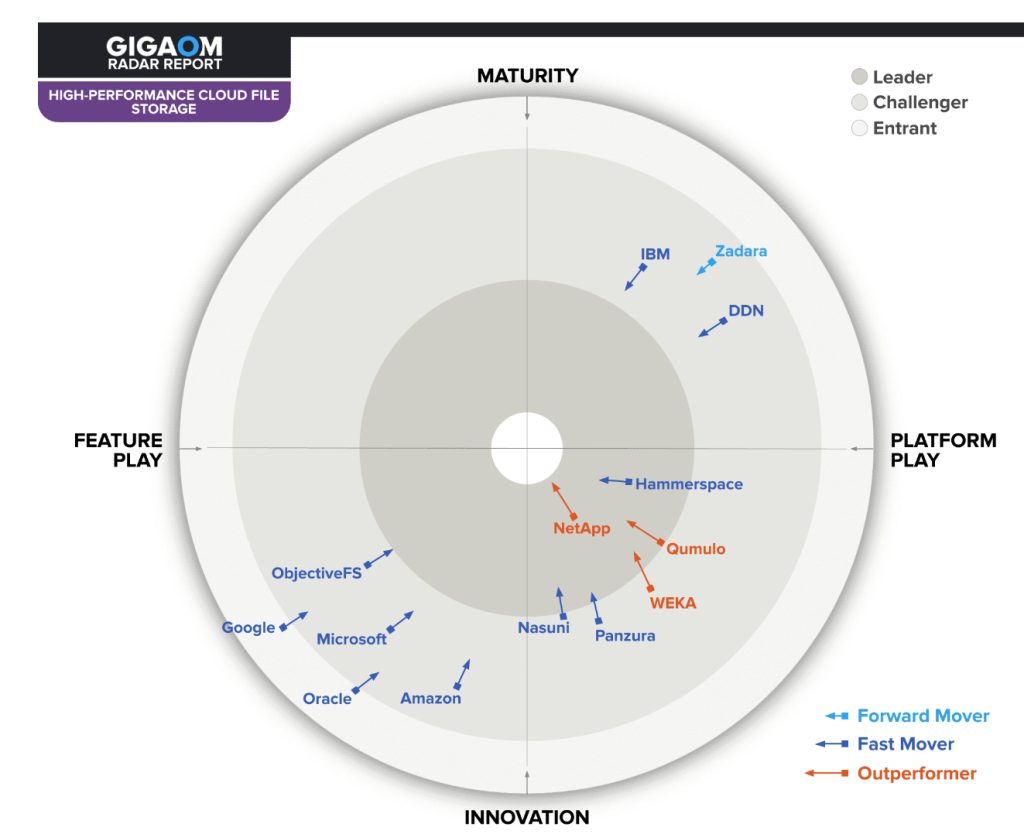

This report synthesizes the analysis of key criteria and their impact on evaluation metrics to inform the Radar graphic in Figure 1. The resulting chart is a forward-looking perspective on all the vendors in this report based on their products’ technical capabilities and feature sets.

The Radar plots vendor solutions across a series of concentric rings, with those set closer to the center judged to be of higher overall value. The chart characterizes each vendor on two axes – Maturity versus Innovation and Feature Play versus Platform Play – while providing an arrow that projects each solution’s evolution over the coming 12 to 18 months.

Figure 1. GigaOm Radar for High-Performance Cloud File Storage

As you can see in the Radar chart in Figure 1, vendors are mostly Fast Movers or Outperformers. Compared to last year, vendors haven’t significantly changed in terms of positioning, but the pace of innovation has increased, with more ambitious roadmaps across the board.

In the Innovation/Platform Play quadrant are Hammerspace, Nasuni, NetApp, Panzura, Qumulo, and Weka.

Hammerspace provides one of the best global namespace implementations with seamless policy-based data orchestration for replication and tiering. It has significantly improved its support for high-performance implementations thanks to the acquisition of RozoFS in 2023 as well as innovations in the Linux kernel for a client-based parallel file system architecture for NFS.

Nasuni continues to deliver a balanced and well-engineered platform that is both massively scalable and includes excellent ransomware protection capabilities. The company is working on data management improvements on its near-term roadmap that will help complete its feature set.

NetApp still demonstrates its commitment to execution against an ambitious roadmap by delivering new capabilities and, at the same time, consolidating and simplifying management interfaces. Its enterprise-grade cloud file system based on NetApp Ontap offers seamless interoperability across clouds and on-premises deployments and is now available as a first-party offering on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Panzura offers a highly scalable solution that boasts a comprehensive set of capabilities such as proactive ransomware protection and an advanced data management suite. Though Nasuni and Panzura can also address cloud file system requirements, their respective architectures may pose challenges in addressing the performance, throughput, and latency requirements of demanding workloads typically running on cloud file systems.

Qumulo offers an enterprise-grade solution with a broad set of services covering data replication, data mobility, data integration, and analytics. The solution is now consumable as a service on Azure; besides a compelling roadmap, Qumulo will gradually implement key features (including long-awaited data compression) through 1Q24.

The Weka Data Platform remains a solution of choice for high-performance workloads such as AI/ML, HPC, genomics and drug discovery in life sciences, content protection in media and entertainment, and high-frequency trading (HFT), whether on-premises, in hybrid deployments, or in clouds such as AWS, Azure, GCP, and OCI. The company’s roadmap includes awaited and welcome improvements in the data management space.

In the Innovation/Feature Play quadrant are solutions that only partially cover some of the GigaOm key criteria. These include 4 hyperscalers – AWS, GCP, Microsoft Azure, and Oracle Cloud Infrastructure (OCI) – as well as one SDS solution, ObjectiveFS. The positioning of the hyperscalers in this area reflects that each has a more or less comprehensive portfolio of cloud file system services, though those services are intended for specific use cases and thus don’t always include the full spectrum of key criteria.

AWS offers a very comprehensive cloud file system portfolio, with services oriented toward cloud-native file storage (Amazon EFS) and compatibility with existing file systems (Amazon FSx for NetApp ONTAP, Windows Server, Lustre, and OpenZFS). Its 2023 improvements focus primarily on performance and resiliency.

GCP now provides a first-party offering based on NetApp’s Ontap storage OS, branded Google Cloud NetApp Volumes, which can be consumed much more easily. This is a logical progression from the 2019 launch of NetApp Cloud Volumes Service for Google Cloud, a fully NetApp-supported offering that was provided at the time as a third-party solution. The other offering, Google Filestore, has been improved in the last year, but the solution could be expanded further.

Microsoft offers several cloud file system solutions – Azure NetApp Files, Azure Files, and Azure Blob, each with multiple performance and cost tiers – that deliver great flexibility in terms of consumption and deployment options. Among these, the most mature enterprise-grade offering is Azure NetApp Files, a Microsoft and NetApp collaboration with almost global availability. Microsoft’s other offerings have more limited namespace sizes, but the company is working on increasing those limits. The company also launched an Azure Managed Lustre offering in July 2023.

OCI offers File Storage, which was built around strong data durability, high availability, and massive scalability. What’s interesting about this solution currently is its robustness and its focus on data management, while other areas need further development to catch up with the competition. Oracle also offers relevant options for HPC-oriented organizations with its OCI HPC File System stacks, and it provides an OCI ZFS image as well.

ObjectiveFS, the only non-hyperscaler in this area of the Radar, has primarily delivered under-the-hood stability and performance improvements. Designed with a focus on demanding workloads, and with support for on-premises, hybrid, and cloud-based deployments, the solution will suit organizations seeking strong performance, though it does lack data management capabilities.

The third group of vendors is in the Maturity/Platform Play quadrant: DDN, IBM, and Zadara.

DDN maintains its strong focus on AI and HPC workloads with an updated Lustre-based EXAScaler EXA6 appliance, which delivers scalability, performance, and multitenancy – key capabilities for these types of workloads. The company also offers a cloud-based EXAScaler appliance.

IBM Spectrum Scale, the cloud file system with the greatest longevity, continues to demonstrate its relevance with steady improvements, including snapshot immutability, ransomware protection, and containerized S3 access services for high-performance workloads, with concurrent file and object access.

Zadara delivers its solution as a service primarily via MSPs, with some deployments directly on customer premises. Its comprehensive platform integrates storage (file, object, and block), compute, and networking with great analytics and excellent data mobility and data protection options.

5. Vendor Insights

Amazon

It offers a robust portfolio of file-based services that includes Amazon FSx, Amazon EFS, and Amazon File Cache. The FSx family provides 4 popular file system offerings: Windows File Server, NetApp Ontap, Lustre, and OpenZFS. These services can be managed and monitored through the FSx console, which provides unified monitoring capabilities across all 4 FSx offerings.

FSx for Windows File Server provides fully managed native Windows file-sharing services using the server message block (SMB) protocol. In 2023, the solution showed significant performance improvements in both throughput and IOPS.

FSx for NetApp Ontap provides a proven, enterprise-grade cloud file storage experience for the AWS platform that is natively integrated into the AWS consumption model. It offers superior technical capabilities thanks to NetApp’s presence on all major cloud platforms. The solution follows Ontap principles and scales to multiple petabytes in a single namespace.

FSx for Lustre implements a POSIX-compliant file system that natively integrates with Linux workloads and is accessible by Amazon EC2 instances or on-premises workloads. The solution is linked with AWS S3 buckets, whose objects are then transparently presented as files. In 2023, Amazon added a File Release capability that enables tiering to AWS S3 by releasing file data that is synchronized with S3 from Lustre.

FSx for OpenZFS brings OpenZFS native capabilities to the cloud, including snapshots, cloning, and compression. The offering simplifies migration of OpenZFS-based data-intensive Linux workloads to AWS. It now also supports multiple-AZ file systems to increase resilience for business-critical applications.

EFS is a massively parallel file storage solution based on the NFS 4.1 protocol and is consumable as a fully managed service. It can scale up to petabytes, and file systems can be hosted within a single AZ or across multiple AZs when applications require multizone resiliency. Centralized data protection capabilities are available with AWS Backup, and data mobility is supported with AWS DataSync. Finally, Amazon File Cache provides a high-speed cache for datasets stored anywhere, whether in the cloud or on-premises.

Analytics and monitoring are handled through several endpoints. The AWS console provides a general overview, with specialized consoles for Amazon EFS or FSx presenting a variety of metrics. Logging and auditing can be performed through the AWS CloudWatch consoles.

Some of the services provide snapshot immutability (which can be used for ransomware mitigation and recovery), but configuration, recovery, and orchestration must be performed manually.

Strengths: Offers an extensive set of cloud file storage solutions that can address the needs of a broad spectrum of personas and use cases, providing great flexibility and compatibility with popular file system options through its FSx service while also delivering a cloud-native experience with EFS and hybrid cloud options with Amazon File Cache.

Challenges: Amazon’s extensive portfolio requires organizations to properly understand the company’s offerings and the alignment of services to specific use cases. The rich ecosystem is also complex, with analytics and management capabilities handled through several endpoints. The platform can deliver outstanding value, but it requires a thorough understanding of its full potential.

DDN EXAScaler

EXAScaler is a parallel file system that offers excellent performance, scalability, reliability, and simplicity. The data platform provided by DDN is designed to enable and accelerate various data-intensive workflows on a large scale. EXAScaler is built using Lustre, a fast and scalable open parallel file system widely popular for scale-out computing. It has been tested and refined in the most challenging HPC environments. The team behind Lustre and EXAScaler, most of whom are now employed by DDN, is highly skilled and dedicated and continues to work actively.

The EXAScaler appliances combine the parallel file system software with a fast hyperconverged data storage platform in a package that’s easy to deploy and is managed and backed by the leaders in data at scale. Built with AI and HPC workloads in mind, DDN excels in GPU integration, with the first GPU-direct integration. The EXAScaler client is deployed into the GPU node, enabling RDMA and monitoring application access patterns from the GPU client to the disk, providing outstanding workload visibility. DDN is also the only certified and supported storage for Nvidia DGX SuperPOD, a feature that allows DDN customers to run the solution as a hosted AI cloud. DDN also has reference architectures to support Nvidia DGX POD and DGX SuperPOD.

EXAScaler’s fast parallel architecture enables scalability and performance, supporting low-latency workloads and high-bandwidth applications such as GPU-based workloads, AI frameworks, and Kubernetes-based applications. Moreover, the solution can grow with data at scale and its intelligent management tools manage data across tiers.

Data security is built in, with secure multitenancy, encryption, end-to-end data protection, and replication services baked into the product and providing a well-balanced solution to the customer. In addition, Lustre’s capabilities around changelog data and audit logs are built into the EXAScaler product, providing better insights for customers into their data. Unfortunately, ransomware protection is not yet completely incorporated into the solution.

Besides the physical EXA6 appliance, a cloud-based solution branded EXAScaler Cloud runs natively on AWS, Azure, and GCP and can be obtained easily from each cloud provider’s marketplace. Features such as cloud sync enable multicloud and hybrid data management capabilities within EXAScaler for archiving, data protection, and bursting of cloud workloads.

Also worth a mention is DDN DataFlow, a data management platform that is tightly integrated with EXAScaler. Although it’s a separate product, a broad majority of DDN users rely on DataFlow for platform migration, archiving, data protection use cases, data movement across cloud, repatriation, and so forth.

Strengths: EXAScaler is built on top of the Lustre parallel file system. It offers a scalable and performant solution that gives customers a secure and flexible approach with multitenancy, encryption, replication, and more. The solution particularly shines thanks to its outstanding GPU integration capabilities, an area where the company is recognized as a leader.

Challenges: Ransomware protection capabilities still need to be adequately implemented.

Google

Previously limited to Google Filestore, high-performance cloud file storage offerings on GCP now include Google Cloud NetApp Volumes. Based on NetApp Cloud Volumes Ontap, Google Cloud NetApp Volumes is a fully managed, cloud-native data storage service that brings NetApp enterprise-grade capabilities directly to GCP. The solution is fully compatible with first-party NetApp services at other hyperscalers and any on-premises or cloud-based Ontap 9.11 deployments, allowing seamless data operations in a true multicloud fashion. Google Cloud NetApp Volumes can also be managed through NetApp BlueXP (comprehensively covered in the NetApp solution review) and is available in 28 regions. It is the only Google service offering SMB, NFS, cloning, and replication capabilities.

Filestore is a fully managed NAS solution for Google Compute Engine and GKE-powered Kubernetes instances. It uses the NFSv3 protocol and is designed to handle high-performance workloads. With the ability to scale up to hundreds of terabytes, it has 4 different service tiers: basic HDD, basic SSD, high scale SSD, and enterprise. Each tier has different capacity, throughput, and IO/s characteristics. Customers who use the high scale SSD and enterprise tiers can scale down capacity if it is no longer required.

The solution is native to the Google Cloud environment and is therefore unavailable on-premises or on other cloud platforms. It doesn’t provide a global namespace; customers get one namespace of up to 100TB per share, depending on each tier’s provisioned capacity limit.

Filestore has an incremental backup capability (available on basic HDD and SSD tiers) that provides the ability to create backups within or across regions. Backups are globally addressable, allowing restores in any GCP region. There are currently no data recovery capabilities on the high scale SSD tier (neither backups nor snapshots), while the enterprise tier supports snapshots and availability at the regional level. Unfortunately, the enterprise tier can only scale up to 10 TiB per share.

Google recommends organizations leverage ecosystem partners for enterprise-grade data protection capabilities. Data mobility capabilities primarily rely on command-line tools such as remote sync (rsync) or secure copy (scp), and these tools can also be used to copy data to cloud storage buckets, Google’s object storage solution. For larger capacities, customers can use Google Cloud Transfer Appliance, a hardened appliance laden with security measures and certifications. Google also offers a Storage Transfer Service that helps customers perform easier data transfers or data synchronization activities, but capabilities appear to be limited compared to data migration and replication tools available in the market.

Filestore includes a set of REST APIs that can be used for data management activities. Data analytics provides basic metrics and the ability to configure alerts.

The solution implements industry-standard security features, but there are no capabilities for auditing user activities (except manually parsing logs) or for protecting against ransomware. Organizations can, however, create Google Cloud storage buckets with the Bucket Lock functionality and use data mobility tools to copy data to the object store.

Note that several vendors included in this report allow their high-performance cloud file storage solutions to run on GCP. This provides greater flexibility to organizations leveraging GCP as their public cloud platform, though none of these solutions are currently provided as a first-party service (which would be normally operated and billed to the customer by Google).

Strengths: Google Cloud file storage capabilities are improving, partly thanks to the availability of Google Cloud NetApp Volumes. Filestore is an exciting solution for organizations that rely heavily on GCP. It provides a native experience with high throughput and sustained performance for latency-sensitive workloads.

Challenges: Filestore has improved, but it still has limitations in scalability and customization. Users needing highly scalable storage should consider other options.

Hammerspace

Hammerspace creates a global data environment by producing a global namespace with automated data orchestration to place data when and where needed. It is powered by coupling a parallel global file system with enterprise NAS data services to provide high performance anywhere data is used without sacrificing enterprise data governance, protection, and compliance requirements. Hammerspace helps overcome the siloed nature of hybrid cloud, multiregion, and multicloud file storage by providing a single file system, regardless of a site’s geographic location or whether the storage, from any vendor, is on-premises or cloud-based, and by separating the control plane (metadata) from the data plane (where data actually resides). It is compliant with several versions of the NFS and SMB protocols and includes RDMA support for NFSv4.2.

The company acquired RozoFS in May 2023 and incorporated RozoFS technology into its solution. Customers can now deploy new DSX EC-Groups, which provide high-performance data stores with significantly improved data efficiency thanks to the use of erasure coding. The firm’s solution is designed to provide the high performance and scalability for data storage that is needed in both HPC and AI training, checkpointing, and inferencing workloads.

The solution lets customers use objective-based policies to automate how they use, access, store, protect, and place data around the world via a single global namespace, and the user doesn’t need to know where the resources are physically located. The system is monitored in real time to see if data and storage use are in alignment with policy objectives, and it performs automated background compliance remediation in a seamless and transparent way. High-performance local access is provided to remote applications, AI models, compute farms, and users through the use of local metadata that is consistently synchronized across locations. Even if data is in flight to a new location, the users and applications perform read/write operations to the metadata servers and can work with their files while they are in flight.
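As a rough illustration of what objective-based placement with background remediation can look like, the sketch below declares a desired state (which sites must hold a copy of a file, and how many copies are allowed in total) and computes the steps needed to reach it. This is not Hammerspace's policy language or API: the `Objective` class, the `remediate` function, and the site names are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    """Hypothetical objective: keep a copy at each required site, within a copy budget."""
    required_sites: frozenset
    max_copies: int


def remediate(current_sites, objective):
    """Return the (action, site) steps needed to bring a file into compliance."""
    current = set(current_sites)
    # First, create any copies that the objective requires but that do not exist yet.
    actions = [("copy_to", s) for s in sorted(objective.required_sites - current)]
    # Then trim copies at non-required sites until the copy budget is respected.
    total = len(current | objective.required_sites)
    extras = sorted(current - objective.required_sites)
    while total > objective.max_copies and extras:
        actions.append(("remove_from", extras.pop()))
        total -= 1
    return actions


policy = Objective(required_sites=frozenset({"london", "aws-us-east"}), max_copies=2)
plan = remediate({"london", "tokyo"}, policy)
print(plan)
```

The point of the declarative form is that the user states the outcome, and the system derives the data movement; a file that already satisfies the objective yields an empty plan.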

The product is based on the intelligent use of metadata across file system standards and includes telemetry data (such as IO/s, throughput, and latency) as well as user-defined and analytics-harvested metadata, allowing users or integrated applications to rapidly view, filter, and search the metadata in place instead of relying on file names. In addition, the vendor supports user-enriched metadata through its Metadata Plugin. Custom metadata will be interpreted by Hammerspace and can be used for classification and to create data placement, DR, or data protection policies.

Hammerspace can be deployed at the edge, on-premises, or to the cloud, with support for AWS, Azure, GCP, Seagate Lyve, Wasabi, and several other cloud platforms. It can use its own storage nodes, any existing third-party vendor block, file, or object storage system in the data center, or a wide range of cloud storage for the data stores. It implements share-level snapshots as well as comprehensive replication capabilities, allowing files to be replicated automatically across different sites through the firm’s Policy Engine. Policy-based replication activities are available on-demand as well. These capabilities allow organizations to implement multisite, active-active disaster recovery with automated failover and failback.

Integration with object storage is also a core capability of Hammerspace: data can be replicated or saved to the cloud as well as automatically tiered on object storage to reduce the on-premises footprint and save on storage costs.

The company’s cyber-resiliency strategy relies on native immutability features, monitoring, and third-party detection capabilities. Mitigation functions include undelete and file versioning, which allow users to revert to a file version not affected by ransomware-related data corruption. The vendor’s ability to automate data orchestration for recovery is also a core part of the Hammerspace feature set.

The product is available in the AWS, Azure, and Google Cloud marketplaces and integrates with Snowflake.

Strengths: The Parallel Global File System offers a very balanced set of capabilities, with replication as well as hybrid and multicloud capabilities delivered through the power of metadata.

Challenges: Built-in, proactive ransomware detection capabilities are currently missing.

IBM Storage Scale

It offers a scalable and flexible software-defined storage solution that can be used for high-performance cloud file storage use cases. Built on the robust and proven IBM global parallel file system (GPFS), the product can handle several building blocks on the back end: IBM NVMe flash nodes, Red Hat OpenShift nodes, capacity, object storage, and multivendor NFS nodes.

The solution offers several file interfaces, such as SMB, NFS, POSIX-compliant, and HDFS (Hadoop), as well as an S3-compatible object interface, making it a versatile choice for environments with multiple types of workloads. Data placement is taken care of by the Storage Scale clients, which spread the load across storage nodes in a cluster. The company recently introduced containerized S3 access services for high-performance cloud-native workloads and now also supports concurrent file and object access.

The solution offers a single, manageable namespace and migration policies that enable transparent data movement across storage pools without impacting the user experience.

Storage Scale supports remote sites and offers various data caching options as well as snapshot support and multisite replication capabilities. The solution includes policy-driven storage management features that allow organizations to automate data placement on the various building blocks based on the characteristics of the data and the cost of the underlying storage. It includes a feature called Transparent Cloud Tiering that allows users to tier files to cloud object storage with an efficient replication mechanism.

The solution includes a management interface that provides monitoring capabilities for tracking data usage profiles and patterns. Comprehensive data management capabilities are provided through an additional service, IBM Watson Data Discovery.

In version 5.1.5 of Storage Scale, the company introduced a snapshot retention mechanism that prevents snapshot deletion at the global and fileset level, effectively bringing immutability, and thus basic ransomware protection capabilities, to the platform. Early warning signs of an attack can be provided by Storage Insights or Spectrum Control. Both solutions can analyze current I/O workload against a previous usage baseline and help provide indications that an attack is in progress. Organizations can set up alerts that indicate an attack may be happening by combining multiple triggers.
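The baseline comparison and multi-trigger alerting described above can be illustrated with a minimal sketch. It is not how Storage Insights or Spectrum Control is implemented: the metric names, the three-sigma threshold, and the two-trigger rule are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev

def exceeds_baseline(current, history, sigmas=3.0):
    """Return True when `current` is more than `sigmas` standard
    deviations above the mean of the historical samples."""
    return current > mean(history) + sigmas * pstdev(history)

def attack_suspected(metrics, baselines, min_triggers=2):
    """Combine several triggers: raise an alert only when at least
    `min_triggers` metrics exceed their own baseline (hypothetical rule)."""
    fired = [name for name, value in metrics.items()
             if exceeds_baseline(value, baselines[name])]
    return len(fired) >= min_triggers, fired

# Hypothetical usage baselines and a current sample.
baselines = {
    "write_mb_s": [100, 110, 90, 105, 95],
    "renames_per_min": [5, 6, 4, 5, 5],
    "read_mb_s": [200, 210, 190, 205, 195],
}
current = {"write_mb_s": 400, "renames_per_min": 300, "read_mb_s": 200}
suspected, fired = attack_suspected(current, baselines)
```

Requiring several triggers at once is one way to reduce false alarms from a single noisy metric, such as a legitimate backup job that raises write throughput.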

The solution continues to be popular within the HPC community, and the firm also positions Storage Scale as an optimized solution for AI use cases. Finally, Storage Fusion, a containerized version of Storage Scale (and also consumable in an HCI deployment model), enables edge use cases.

Strengths: Storage Scale continues to see active development and a steady pace of releases with noteworthy improvements, such as ransomware protection capabilities. Excellent cross-platform support that extends beyond x86 architectures.

Challenges: Advanced analytics capabilities are lacking and must be developed.

Microsoft Azure

It offers a number of SaaS-based cloud file storage solutions through its Azure Storage portfolio, which aims to address different use cases and customer requirements. Most of these solutions offer different performance tiers. Recently, the portfolio saw the addition of the Azure Managed Lustre offering.

Azure Blob provides file-based access (using REST, NFSv3.0, and HDFS via the ABFS driver for big data analytics) to an object storage back end with a focus on large, read-heavy sequential-access workloads, such as large-scale analytics data, backing up and archiving, media rendering, and genomic sequencing. This solution offers the lowest storage cost among Microsoft’s cloud file storage solutions. The solution also supports native SFTP transfers.

Azure Files uses the same hardware as Azure Blob but offers two share types: NFS and SMB. NFS shares implement full POSIX filesystem support with the NFSv4.1 protocol; REST support is available on SMB shares, but not yet on NFS shares. The solution is oriented toward random access workloads.

Azure NetApp Files is a first-party solution jointly developed by Microsoft and NetApp, using Ontap running on NetApp bare metal systems, fully integrated in the Azure cloud. This solution offers all the benefits customers expect from NetApp, including enterprise-grade features and full feature parity with on-premises deployments and with other public cloud offerings based on Ontap.

Global namespaces are supported with Azure File Sync through the use of DFS Namespaces; DFS-N namespaces can also be used to create a global namespace across several Azure file shares. Aside from Azure Blob, there is no global namespace capability available to federate the various solutions and tiers offered across the Azure cloud file storage portfolio (Azure Blob offers a hierarchical namespace that can allow customers to use NFS and SFTP on object storage data). Besides Azure File Sync, Azure offers a variety of data replication and redundancy options.

While Azure NetApp Files relies on Ontap, both Azure Blob and Azure Files are based on an object storage back end. Automated tiering capabilities are partially present across the Azure Files offerings; however, Azure Blob offers tiering based on creation time, last-modified time, and even last-accessed time, with both policy-based tiering to a cheaper tier and automated tiering up to a hotter tier on access.
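As an illustration of the two tiering directions mentioned above (policy-based movement to a cheaper tier and automatic promotion on access), here is a minimal sketch. The tier names, the day thresholds, and both function names are hypothetical and do not reproduce the Azure lifecycle management API; promoting every accessed object straight to the hottest tier is a deliberate simplification.

```python
from datetime import datetime, timedelta

def tier_down(last_accessed, now, cool_after_days=30, archive_after_days=180):
    """Policy-based tiering: pick a cheaper tier the longer an
    object has gone without being accessed (thresholds are assumed)."""
    idle = now - last_accessed
    if idle >= timedelta(days=archive_after_days):
        return "archive"
    if idle >= timedelta(days=cool_after_days):
        return "cool"
    return "hot"

def tier_up_on_access(current_tier):
    """Automated tiering up: an access promotes the object to the
    hot tier (simplified; real services may restrict this)."""
    return "hot"

now = datetime(2023, 11, 8)
recent = tier_down(now - timedelta(days=3), now)
stale = tier_down(now - timedelta(days=45), now)
dormant = tier_down(now - timedelta(days=400), now)
```

The same shape of rule could be keyed on creation or last-modified time instead of last access by passing a different timestamp.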

The storage portfolio offers rich integration capabilities through APIs for data management purposes. Observability and analytics are handled via the Azure Monitor single-pane-of-glass management interface, which also incorporates Azure Monitor Storage Insights.

Azure Files services provide incremental read-only backups as a way to protect against ransomware. Up to 200 snapshots per share are supported, and a soft delete feature (recovery bin) is also available.

Finally, the on-premises Azure File Sync solution can be used for edge deployments.

Strengths: Offers a broad portfolio with multiple options, protocols, use cases, and performance tiers that allow organizations to consume cloud file storage in a cost-efficient manner. Also offers enterprise-grade multicloud capabilities with its first-party Azure NetApp Files solution.

Challenges: There are no global namespace management capabilities to abstract the underlying file share complexity for the end user. There are also limitations based on the different share types, although Microsoft is working on increasing maximum volume sizes. The various offerings can appear very complex and therefore intimidating for smaller organizations.

Nasuni

It presents a SaaS solution designed for enterprise file data services. This solution boasts an object-based global file system that supports various file interfaces, including SMB and NFS, as its primary engine. It is seamlessly integrated with all major cloud providers and is compatible with on-premises S3-compatible object stores. The firm’s SaaS solution provides a reliable and efficient platform for managing enterprise file data services, enabling businesses to streamline their digital operations.

The company’s solution consists of a core platform with add-on services across multiple areas, including ransomware protection and hybrid work, with data management and content intelligence services planned. Many customers implement the solution to replace traditional NAS systems and Windows File Servers, and its characteristics enable users to replace several additional infrastructure components as well, such as backup, DR, data replication services, and archiving platforms.

The company offers a global file system called UniFS, which provides a layer that separates files from storage resources, managing one master copy of data in public or private cloud object storage while distributing data access. The global file system manages all metadata (such as versioning, access control, audit records, and locking) and provides access to files via standard protocols such as SMB and NFS. Files in active use are cached using the firm’s Edge Appliances, so users benefit from high-performance access via existing drive mappings and share points. All files, including those in use across multiple local caches, have their master copies stored in cloud object storage so they are globally accessible from any access point.

The Management Console delivers centralized management of the global edge appliances, volumes, snapshots, recoveries, protocols, shares, and more. The web-based interface can be used for point-and-click configuration, but the vendor also offers a REST API method for automated monitoring, provisioning, and reporting across any number of sites. In addition, the Nasuni Health Monitor reports to the Management Console on the health of the CPU, directory services, disk, file system, memory, network, services, NFS, SMB, and so on. The vendor also integrates with tools like Grafana and Splunk for further analytics, and it has recently announced a more formal integration with Microsoft Sentinel for sharing information on security, cyberthreats, and other event information from Nasuni Edge Appliance (NEA) devices. Data management capabilities are being integrated, and thanks to the firm’s purchase of data management company Storage Made Easy in June 2022, there will be even more integrations in the coming months.

The company provides ransomware protection in its core platform through its Continuous File Versioning and its Rapid Ransomware Recovery feature. To further shorten recovery times, the company recently introduced Ransomware Protection as an add-on paid solution that augments immutable snapshots with proactive detection and automated mitigation capabilities. The service analyzes malicious extensions, ransom notes, and suspicious incoming files based on signature definitions that are pushed to Edge Appliances, automatically stops attacks, and gives administrators a map of the most recent clean snapshot to restore from. A future iteration of the solution (on the roadmap) will implement AI/ML-based analysis on edge appliances.
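Signature-based detection of the kind described above can be sketched in a few lines. This is not Nasuni's implementation: the signature set, the file names, and the `is_suspicious` helper are hypothetical, and a real service would also need to stop the offending client and locate the last clean snapshot.

```python
from pathlib import PurePosixPath

# Hypothetical signature definitions, standing in for the
# definitions that would be pushed to each edge appliance.
SIGNATURES = {
    "extensions": {".locked", ".encrypted"},
    "ransom_notes": {"readme_decrypt.txt", "how_to_recover.txt"},
}

def is_suspicious(path, signatures=SIGNATURES):
    """Flag an incoming file whose extension or file name matches
    a known signature (case-insensitive comparison)."""
    p = PurePosixPath(path.lower())
    return (p.suffix in signatures["extensions"]
            or p.name in signatures["ransom_notes"])

incoming = [
    "/share/finance/report.docx",
    "/share/finance/report.docx.locked",
    "/share/finance/README_DECRYPT.txt",
]
flagged = [path for path in incoming if is_suspicious(path)]
```

Matching on names alone only catches known patterns, which is consistent with the report's note that AI/ML-based analysis is planned as a later iteration.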

Edge Appliances are lightweight VMs or hardware appliances that cache frequently accessed files using SMB or NFS access from Windows, macOS, and Linux clients to enable good performance. They can be deployed on-premises or in the cloud to replace legacy file servers and NAS devices. They encrypt and deduplicate files, then snapshot them at frequent intervals to the cloud, where they are written to object storage in read-only format.

The Access Anywhere add-on service provides local synchronization capabilities, secure and convenient file sharing (including sharing outside of the organization), and full integration with Microsoft Teams. Finally, the edge appliances also provide search and file acceleration services.

Strengths: The file system solution is secure, scalable, and protects against ransomware. Edge appliances enable fast and secure access to frequently used data. Ideal for businesses seeking efficient and reliable file storage.

Challenges: The solution focuses primarily on distributed data and data availability and is not tuned to deliver high performance, high throughput, and low latency to performance-oriented workloads. Data management capabilities, although being integrated quickly, are still a point of attention.

NetApp

It continues to deliver a seamless experience across on-premises and public cloud environments with BlueXP, a single SaaS-delivered multicloud control plane that unifies multiple storage and data services.

Among the services offered in BlueXP, customers can find Cloud Volumes Ontap (CVO), based on Ontap technology, and also 1st-party services on hyperscalers such as AWS (Amazon FSx for Ontap), Azure (Azure NetApp Files), and Google, with the recently added Google Cloud NetApp Volumes (currently available in 14 regions, with a target of all regions globally in 1CQ24, and the only Google service offering SMB, NFS, and replication capabilities). The 1st-party offerings are tightly integrated into hyperscaler management and API interfaces to offer a seamless experience to cloud-native users.

BlueXP also supports a host of other data services, such as observability, governance, data mobility, tiering, backup and recovery, edge caching, and operational health monitoring. The company recently added a sustainability dashboard to BlueXP, showing power consumption (kWh), direct carbon usage (tCO2e), and heat dissipation (BTU). It also shows carbon mitigation percentages and potential gains from recommended actions (such as enabling caching, de-dupe, and so forth).

Cloud Volumes Edge Cache is a CVO version that implements a global namespace that abstracts multiple deployments and locations regardless of distance. Several intelligent caching mechanisms combined with global file-locking capabilities enable a seamless, latency-free experience that makes data accessible at local access speeds from local cache instances. Ontap has been architected to support hybrid deployments natively, whether on-premises or via cloud-based versions such as CVO. Tiering, replication, and data mobility capabilities are outstanding and enable a seamless, fully hybrid experience that lets organizations decide where primary data resides, where infrequently accessed data gets tiered to, and where data copies and backups used for DR should be replicated to.

Integration with object storage is a key part of the solution, and policy-based data placement allows automated, transparent data tiering on-premises with NetApp StorageGRID, or in the cloud with AWS S3, Azure Blob Storage, or Google Cloud Storage, with the ability to recall requested files from the object tier without application or user intervention. Object storage integration also extends to backup and DR use cases. With Cloud Backup, backup data can be written to object stores using block-level, incremental-forever technology. Cloud Volumes Ontap and Amazon’s 1st-party implementation, FSx for NetApp Ontap, also allow for file/object duality for workloads such as GenAI, allowing unified access to NFS/SMB data from S3-enabled applications and eliminating the need to copy or refactor NAS data for S3 access.

Data management capabilities are enabled by consistent APIs that allow data copies to be created as needed. The platform also offers strong data classification and analytics features in all scanned data stores. Organizations have the ability to generate compliance and audit reports such as DSARs; HIPAA and GDPR regulatory reports also can be run in real time on all Cloud Volumes data stores.

The BlueXP platform provides advanced security measures against ransomware and suspicious user or file activities when combined with the native security features of Ontap storage, including immutable snapshots. A new feature in BlueXP is ransomware protection, which provides situational awareness of security and user behavior activities to help identify risks and threats and instruct how to improve an organization’s security posture and remediate attacks.

The solution supports flexible deployment models that also take into consideration edge use cases. BlueXP edge caching provides remote locations with fast and secure access to a centralized data store with local caching of often-used data for that location, all under centralized control. Customers’ distributed workforces get transparent access to an always up-to-date data set from any remote location. The solution has a lightweight edge presence, and it is easy to provision anywhere in the world with security, consistency, and visibility.

Strengths: Available as a first-party offering on all 3 major hyperscalers, the vendor offers an unrivaled set of enterprise-grade capabilities that enable seamless data mobility and a consistent, simplified operational experience. Cyber resiliency, data management, and data protection capabilities increase the overall value of the platform to offer an industry-leading cloud file storage experience.

Challenges: Despite notable improvements, the firm’s portfolio still remains complex to newcomers, though using BlueXP as an entry point is an effective way to remediate this perception issue.

ObjectiveFS

It offers a cloud file storage platform that supports on-premises, hybrid, and cloud-based deployments. Its POSIX file system can be accessed as one or many directories by clients, and it uses an object store on the back end. Data is written directly to the object store without any intermediate servers. ObjectiveFS runs locally on servers through client-side software, providing local-disk-speed performance. The solution scales simply and without disruption by adding ObjectiveFS nodes to an existing environment. The solution is massively scalable to thousands of servers and petabytes of storage.

The solution focuses on multiple use cases and includes ML, financial simulations, and cluster computation. It also covers more traditional use cases, such as unstructured file storage, persistent storage for Kubernetes and Docker, data protection, analytics, and software development tools.

The firm offers a global namespace where all of the updates are synchronized through the object store back end. The solution supports cloud-based and on-premises S3-compatible object stores, such as IBM Public Cloud, Oracle Cloud, Minio, AWS S3, Azure, Wasabi, Digital Ocean Spaces, and GCP, allowing customers to select either the Azure native API or the S3-compatible API. Support for AWS Outposts as well as S3 Glacier Instant Retrieval was added in version 7 of ObjectiveFS.

The company uses its own log-structured implementation to write data to the object store back end by bundling many small writes together into a single object. The same technique can then be used for read operations by accessing only the relevant portion of the object. The solution also uses a method called compaction, which bundles metadata and data into a single object for faster access. Storage-class-aware support ensures that policies can be used to implement intelligent data tiering and move data across tiers based on usage. To ensure performance requirements are met, the vendor offers several levels of caching that can be used together.
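The idea of bundling many small writes into one object, then reading back only the relevant portion, can be sketched as follows. This is a simplified in-memory illustration, not ObjectiveFS code: the `WriteBundler` class, the index layout, and `read_range` are assumptions, and against a real object store the final slice would typically be a ranged read of the stored object.

```python
class WriteBundler:
    """Accumulate small writes in a log and emit them as one object."""

    def __init__(self):
        self._buffer = bytearray()
        self._index = {}  # key -> (offset, length) inside the bundle

    def write(self, key, data):
        # Append to the log and remember where this write landed.
        self._index[key] = (len(self._buffer), len(data))
        self._buffer += data

    def flush(self):
        """Return the bundled object and its index, then reset the log."""
        obj, index = bytes(self._buffer), dict(self._index)
        self._buffer = bytearray()
        self._index = {}
        return obj, index

def read_range(obj, index, key):
    """Read only the bytes belonging to `key` from the bundled object."""
    offset, length = index[key]
    return obj[offset:offset + length]

bundler = WriteBundler()
bundler.write("a.txt", b"hello")
bundler.write("b.txt", b"world!")
bundle, bundle_index = bundler.flush()
```

Bundling trades one request per small write for a single larger upload, which suits object stores where per-request overhead tends to dominate for small payloads.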

Users can deploy the solution across multiple locations (multiregion and multicloud). The solution delivers flexible deployment choices, allowing storage and compute to run in different locations and across different clouds.

From a cyber-resiliency standpoint, the product is based on log-structured implementation and built-in immutable snapshots, providing recovery capabilities in case of a ransomware attack. It also provides comprehensive security features, such as data in-flight and at-rest encryption. The solution supports multitenancy, and therefore, data is encrypted using separate encryption keys, making it accessible only to the tenant that owns the data.

One of the company’s most interesting features is the inclusion of a workload adaptive heuristics mechanism that supports hundreds of millions of files and tunes the file system to ensure consistent performance is delivered regardless of the I/O activity profile (read versus write, sequential versus random) or the size of the files, handling many small files or large terabyte-sized files at the same performance levels.

Strengths: Provides a highly scalable and robust solution at consistent performance levels regardless of the data type. It delivers flexible deployment options and great support for multitenant deployments.

Challenges: Data management capabilities are missing.

Oracle Cloud Infrastructure

Oracle provides high-performance cloud file storage options via 3 offerings: OCI File Storage, Oracle HPC File System stacks, and Oracle ZFS.

File Storage is the cloud file storage solution developed by the company for its OCI platform. Delivered as a service, the solution provides an automatically scalable, fully managed elastic file system that supports the NFSv3 protocol and is available in all regions. Up to 100 file systems can be created in each availability domain, and each of those file systems can grow up to 8EB. Optimized for parallelized workloads, the solution also focuses on HA and data durability, with 5-way replication across different fault domains.

File Storage supports snapshots as well as clones. The clone feature allows a file system to be made available instantaneously for read and write access while inheriting snapshots from the original source. It makes copies immediately available for test and development use cases, allowing organizations to significantly reduce the time needed to create copies of their production environment for validation purposes. No backup feature currently exists, although third-party tools can be used to copy data across OCI domains, regions, OCI Object Storage, or on-premises storage. In June 2023, the company announced the availability of policy-based snapshots for OCI File Storage to manage the entire lifecycle of snapshots, match data retention policies, and better manage space.

Data management capabilities are available primarily via the use of REST APIs. These can be combined with the clone feature to automate fast copy provisioning operations that execute workloads on copies of the primary data sets. A management console provides an overview of existing file systems and provides usage and metering information, both at the file system and mount target levels. Administrators also get a view of system health with performance metrics and can configure alarms and notifications in the general monitoring interface for OCI.

OCI HPC File System stacks are dedicated to HPC workloads in organizations that require the use of traditional HPC parallel file systems, such as BeeGFS, IBM Spectrum Scale, GlusterFS, or Lustre. The offering has been recently enriched with support for 3 new high-performance file systems: BeeOND (BeeGFS on demand over RDMA), NFS File Server with High Availability, and Quobyte. The first two are available via the Oracle Cloud Marketplace Stacks (via a web-based GUI) and Terraform-based templates, while Quobyte is available only via Terraform-based templates.

Organizations also can opt for the Oracle ZFS image option, a marketplace image that can be configured as bare metal or a VM and supports ZFS, now also available in a highly available format (ZFS-HA). Each image can scale to 1,024TB, providing support for NFS and SMB with AD integration. The solution fully supports replication, snapshots, clones, and cloud snapshots, with several DR options.

Strengths: OCI offers an attractive palette of cloud file services, starting with File Storage, which will be particularly appealing to organizations building on top of the OCI platform. HPC-related file system stacks are a great alternative to DIY deployments, making those popular file systems easily deployable to better serve cloud-based HPC workloads.

Challenges: There are currently no particular cyber-resiliency capabilities on File Storage.

Panzura

It offers a high-performance hybrid cloud global file system based on its CloudFS file system. The solution works across sites (public and private clouds) and provides a single data plane with local file operation performance, automated file locking, and immediate global data consistency. It was recently redesigned to offer a modular architecture that will gradually allow more data services to integrate with the core Panzura platform seamlessly. The firm’s recent release of Panzura Edge, a mobile gateway for CloudFS files, demonstrates the company’s continued focus on enablement for widely distributed teams reliant on mobile devices for large file collaboration, such as healthcare point-of-care, and remote site construction and engineering.

The solution implements a global namespace and tackles data integrity requirements through a global file-locking mechanism that provides real-time data consistency regardless of where a file is accessed worldwide. It also offers efficient snapshot management with version control and allows administrators to configure retention policies as needed. Backup and DR capabilities are provided as well.

The company utilizes S3 object stores and is compatible with various object storage solutions, whether hosted in the public cloud or on-premises. One of its key features, cloud mirroring, allows users to write data to a secondary cloud storage provider, ensuring data availability in case of a failure in one of the providers. Additionally, the vendor provides options for tiering and archiving data.

It offers advanced analytics capabilities through its Data Services. This set of features includes global search, user auditing, monitoring functions, and one-click file restoration. The services provide core metrics and storage consumption data, such as the frequency of access, active users, and the environment’s health. The firm also offers several API services for data management, enabling users to connect their data management tools. Panzura Data Services can detect infrequently accessed data, allowing users to act appropriately.

Data Services provides robust security capabilities to protect data against ransomware attacks. Ransomware protection is handled with a combination of immutable data (a WORM S3 back end) and read-only snapshots taken every 60 seconds at the global filer level. Data is regularly moved to the immutable object store, which allows seamless recovery after a ransomware attack through the same mechanism (backup) an organization would use to recover data under normal circumstances. These are complemented with Panzura Protect, which currently supports detection of ransomware attacks and delivers proactive alerts. In the future, this product will also support end-user anomaly detection to detect suspicious activity.

The solution also includes a secure erase feature that removes all deleted file versions and overwrites the deleted data with zeros, a feature available even with cloud-based object storage.

One of the new capabilities of the solution is Panzura Edge, which extends CloudFS directly to users’ local machines.

Strengths: Offers a hybrid cloud global file system with local-access performance, global availability, data consistency, tiered storage, and advanced analytics, helping organizations manage their data footprint more effectively.

Challenges: The solution is a cloud file storage solution for enterprise workloads that can complement high-performance systems but is not designed specifically to meet high-performance requirements, such as high throughput, high IO/s, and ultra-low latency.

Qumulo

It has developed a software-based, vendor-agnostic, high-performance cloud file storage solution that can be deployed on-premises, in the cloud, or even delivered through hardware vendor partnerships. The solution provides a comprehensive set of enterprise-grade data services branded Qumulo Core, which handle core storage operations (scalability, performance) as well as data replication and mobility, security, ransomware protection, data integration, and analytics. Recently, the company released its Azure Native Qumulo (ANQ). Delivered as a cost-effective, fully managed service, ANQ delivers exabyte-scale storage with predictable, capacity-based SaaS pricing, and the benefits of seamless data mobility delivered by the vendor’s platform.

The firm supports hybrid and cloud-based deployments. In addition to ANQ, organizations can deploy a Qumulo cluster through their preferred public cloud marketplace (the solution supports AWS, Azure, and GCP). AWS Outposts is also supported, and a comprehensive partnership with AWS is also in place. The vendor is expanding its delivery models as well through STaaS partnerships with HPE GreenLake and others.

The solution scales linearly, from both a performance and a capacity perspective, providing a single namespace with limitless capacity that supports billions of large and small files and provides the ability to use nearly 100% of usable storage through efficient erasure code techniques. It also supports automatic data rebalancing when nodes or instances are added. The namespace enables real-time queries and aggregation of metadata, greatly reducing search times.

Data protection, replication, and mobility use cases are well covered and include snapshots and snapshot-based replication to the cloud, continuous replication, and disaster recovery support with failover capabilities. Qumulo SHIFT is a built-in data service that moves data to AWS S3 object stores with built-in replication, including support for immutable snapshots. SHIFT allows bidirectional data movements to and from S3 object storage, providing organizations with more flexibility and better cost control. The company also has built-in native support for S3, allowing access to the same data that may also be accessed via NFS or SMB.

The vendor includes a comprehensive set of REST APIs that can be used to perform proactive management and automate file system operations. The solution comes with a powerful data analytics engine that provides real-time operational analytics (across all files, directories, metrics, users, and workloads), capacity awareness, and predictive capacity trends, with the ability to “time travel” through performance data. With Qumulo Command Center, organizations can also easily manage their Qumulo deployments at scale through a single management interface.

Advanced security features include read-only snapshots that can be replicated to the cloud as well as audit logging to review user activity. Immutable snapshots, snapshot-locking capabilities (protection against deletion), and support for multitenancy were implemented in 2023.

Several key capabilities or core components of high-performance cloud file storage are currently on the company’s roadmap. Among others, the firm is working on implementing a global namespace that supports geographically distributed access to data anywhere, disruptively reduced pay-as-you-go cloud pricing, and the ability to elastically scale and pay only for the performance used in ANQ.

Strengths: Offers a comprehensive cloud file storage solution that is simple to manage and implement. Its rich and complete data services set, combined with a broad choice of deployment models, makes it one of the most compelling cloud file storage offerings currently available. Its roadmap for 2024 looks good.

Challenges: Although the solution is very rich, some important features are still on the roadmap. Among these, data reduction improvements should be mentioned. The firm plans to remediate this by implementing inline data compression in the future.

Weka

Its Data Platform is a high-performance cloud file storage architecture that delivers AFA performance in a software-defined storage solution capable of running on-premises, in the cloud, or both. It is built on the Weka File System (WekaFS) and deployed as a set of containers; it offers multiple deployment options on-premises (bare metal, containerized, virtual) as well as in the cloud, with support for AWS, Azure, GCP, and OCI. All deployments can be managed through a single management console regardless of their location, and all offer a single data platform with mixed workload capabilities and multiprotocol support (SMB, NFS, S3, POSIX, GPU Direct, and Kubernetes CSI).

The solution implements a global namespace that spans performance tiers with automatic scalability in the cloud, presenting users with a unified namespace that abstracts the underlying complexity and enables transparent background data movements between tiers.

The global namespace natively supports and expands into S3 object storage bidirectionally, thanks to dynamic data tiering, which automatically pushes cold data to the object tier. Both tiers (flash-based and object) can scale independently. A feature called Snap-To-Object allows data and metadata to be committed to snapshots for backup, archive, and asynchronous mirroring. This feature can also be used for cloud-only use cases in AWS, Azure, GCP, and OCI to pause or restart a cluster, protect against single availability zone failure, migrate file systems across regions, or even migrate file systems between different clouds.

Data management capabilities primarily include the ability to create copies of the data through snapshots, for example in DevOps use cases where jobs or functions are executed against data copies instead of the primary data set. It is expected that, in the future, the company will improve some of its capabilities in this area, notably around metadata tagging, querying, and so on. In addition, some of these capabilities could be offloaded to third-party engines, for example, by running data analysis from ML-based engines that can then augment the metadata of datasets residing on the Data Platform.

API integrations (including serverless) are possible through the firm’s APIs. On the analytics side, the vendor’s monitoring platform captures and provides telemetry data about the environment, with the ability to deep dive into certain metrics all the way down to file system calls. The solution implements cyber-resiliency capabilities with Weka Snap-To-Object and Weka Home. The latter collects telemetry data (events and statistics) and provides proactive support in case of detected warnings and alerts. The company can also detect encryption within the underlying storage devices in each storage host by detecting alteration of the block checksum. And, as noted earlier, Snap-To-Object protects data with immutable object-based snapshots that help safeguard data against ransomware attacks.
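
The report does not describe how the checksum comparison is implemented. As a rough illustration only, the general idea of flagging blocks whose checksum no longer matches a recorded value can be sketched as follows; the block size, function names, and use of CRC32 are assumptions made for this example and do not reflect the vendor's actual mechanism.

```python
import zlib

# Hypothetical block size chosen for illustration; not a vendor parameter.
BLOCK_SIZE = 4096


def block_checksums(data: bytes, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return one CRC32 checksum per fixed-size block of data."""
    return [
        zlib.crc32(data[i:i + block_size])
        for i in range(0, len(data), block_size)
    ]


def altered_blocks(recorded: list[int], data: bytes,
                   block_size: int = BLOCK_SIZE) -> list[int]:
    """Return indices of blocks whose current checksum differs from the recorded one."""
    current = block_checksums(data, block_size)
    return [i for i, (old, new) in enumerate(zip(recorded, current)) if old != new]
```

A sudden rise in the number of altered blocks on a device would be the kind of signal an operator might investigate as possible unauthorized encryption.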

The firm’s platform supports edge aggregation deployments, which enable smaller-footprint clusters to be deployed alongside embedded IoT devices. Future plans include architectural optimizations that will enable compact, resource-efficient edge deployments.

This versatile solution is particularly useful in demanding environments that require low latency, high performance, and cloud scalability, such as AI/ML, life sciences, financial trading, HPC, media rendering and visual effects, electronic design automation, and engineering DevOps.

Strengths: The company has architected a robust and seamlessly scalable high-performance storage solution with comprehensive deployment options, automated tiering, and a rich set of services via a single platform that eliminates the need to copy data through various dedicated storage tiers. Its single namespace encompassing file and object storage reduces infrastructure sprawl and complexity to the benefit of users and organizations alike.

Challenges: Data management remains an area for improvement.

Zadara

Its Edge Cloud Services is an interesting solution that is aimed primarily at MSPs (that subsequently provide it as a service to their customer base) and some larger enterprises. The solution is available globally through more than 500 cloud partners on six continents and consists of an elastic infrastructure layer comprising compute, networking, and storage capabilities for which cost is based on usage. The storage offering, named zStorage, consists of one or more virtual private storage arrays (VPSAs) that can be deployed on SSD, HDD, and hybrid media types. VPSAs are able to serve block, file, and object storage simultaneously. Various VPSAs can be created, each with its own engine type (which dictates performance) and its own set of drives, including spares.

Global namespaces are supported for file-based storage, with a capacity limit of 0.5PB per namespace, after which a new namespace must be created. Although customers can, in theory, use a third-party object storage gateway to store files on the Zadara object store tier (and therefore circumvent this limitation), there is no native multiprotocol access capability.
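
To make the operational effect of the 0.5PB limit concrete, the short sketch below estimates how many namespaces a dataset of a given size would require. It assumes decimal units (0.5PB = 500TB), and the function name is hypothetical; it is planning arithmetic, not part of any Zadara tooling.

```python
def namespaces_needed(dataset_tb: int, limit_tb: int = 500) -> int:
    """Estimate how many namespaces a dataset needs under a per-namespace cap.

    Assumes decimal units, so the 0.5PB limit is expressed as 500TB.
    """
    if dataset_tb < 0 or limit_tb <= 0:
        raise ValueError("dataset_tb must be >= 0 and limit_tb must be > 0")
    # Ceiling division using integers only, to avoid floating-point rounding.
    return -(-dataset_tb // limit_tb)
```

Under these assumptions, a 1,200TB dataset would have to be split across three namespaces, each of which must then be mounted and managed separately.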

The solution offers thinly provisioned snapshots as well as cloning capabilities, which can be local or remote. The snapshot-based asynchronous remote mirroring feature enables replication to a different pool within the same VPSA, to a different local or remote VPSA, or even to a different cloud provider. The replicated data is encrypted and compressed before being transferred to the destination. The solution also allows for many-to-many relationships, which enables cross-VPSA replication. Cloning capabilities can be used for rapid migration of volumes between VPSAs because the data can be made available instantly (although a dependency on the source data remains until all of the data has been copied in the background).

Native backup and restore capabilities leverage object storage integration with AWS S3, Azure Blob Storage, Google Cloud Storage, Zadara Object Storage, and other S3-compatible object stores. Object storage can be used by Zadara for audit and data retention purposes. The company supports AWS Direct Connect as well as Azure ExpressRoute, both of which allow a single volume to be made available to workloads residing in multiple public clouds, enabling the use of a single dataset across multiple locations or clouds. When deployed on flash, zStorage supports an auto-tiering capability that recognizes hot data and places it on the flash/high-performance tier, while less frequently accessed data is tiered either on lower-cost HDDs or S3-compatible object storage.
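
The report does not detail how hot data is recognized. A minimal sketch of an age-based placement rule is shown below purely to illustrate the concept; the 30-day threshold, tier labels, and names are assumptions for this example and are not taken from Zadara documentation.

```python
from dataclasses import dataclass


@dataclass
class FileStat:
    path: str
    days_since_access: int


def assign_tier(f: FileStat, hot_days: int = 30) -> str:
    """Place recently accessed files on flash and everything else on the capacity tier.

    The threshold and tier labels are hypothetical, chosen for illustration.
    """
    return "flash" if f.days_since_access <= hot_days else "capacity"


def plan_placement(files: list[FileStat], hot_days: int = 30) -> dict[str, list[str]]:
    """Group file paths by the tier the age-based rule assigns them to."""
    plan: dict[str, list[str]] = {"flash": [], "capacity": []}
    for f in files:
        plan[assign_tier(f, hot_days)].append(f.path)
    return plan
```

A production tiering engine would typically weigh access frequency and I/O patterns as well as recency, so this rule should be read as a simplification.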

Zadara File Lifecycle Management services provide data management and analytics capabilities to the solution, including growth trends (overall and by file type), capacity utilization across several metrics, and usage statistics by owners and groups. Those reports allow organizations to identify unused data as well as orphaned data (data without an owner assigned to it).

The company natively supports access auditing of files accessed through NFS and SMB. Audited data is segregated or made accessible only by administrators and can be uploaded to a remote S3 repository for long-term retention. Its file snapshots are read-only and customers can use volume clones to guard against snapshot deletion, but there are currently no snapshot lock-retention capabilities. Accidental or malicious snapshot deletion can, however, be partially prevented through the use of strict role-based access controls. Although no native ransomware protection capabilities exist, the firm partners with Veeam to provide such protection through Veeam’s Scale-Out Backup Repository immutability features.

The company’s Federated Edge Program allows MSPs to rapidly deploy Zadara at the edge, enabling them to provision a turnkey infrastructure closer to their customers while adhering to the Zadara Cloud operating model. The vendor provides the necessary hardware and software, and revenues are shared between Zadara and Federated Edge partners.

Finally, the firm is working on several improvements, including snapshot lock retention. One should enhance its monitoring through the use of an ML-based engine that analyzes and parses alert patterns before informing administrators. Another planned improvement will bring cost analysis and cost optimization recommendations to its File Lifecycle feature.

Strengths: Edge Cloud Services delivers comprehensive file storage capabilities via a platform with rich compute, storage, and networking support. Remote cloning and mirroring capabilities provide a seamless experience complemented by object storage tiering and multicloud support. Analytics provides multidimensional information about trends, capacity, and user statistics. File auditing functionalities with long-term retention can be useful for legal purposes.

Challenges: The 0.5PB capacity limit on namespaces can become an operational hurdle for organizations with many teams working on very large datasets, such as cloud-based AI, HPC, and big data workloads.
