MLperf Released First Round of Storage Benchmark Submissions

Leaders DDN, Nutanix, Weka IO and Micron

This is a Press Release edited by StorageNewsletter.com on October 11, 2023 at 2:02 pm![]() This report was posted on September 19, 2023 by Ray Lucchesi from Silver Consulting, Inc.

This report was posted on September 19, 2023 by Ray Lucchesi from Silver Consulting, Inc.

MLperf released their first round of storage benchmark submissions early last month. There’s plenty of interest how much storage is required to keep GPUs busy for AI work. As a result, MLperf has been busy at work with storage vendors to create a benchmark suitable to compare storage systems under a “simulated” AI workload.

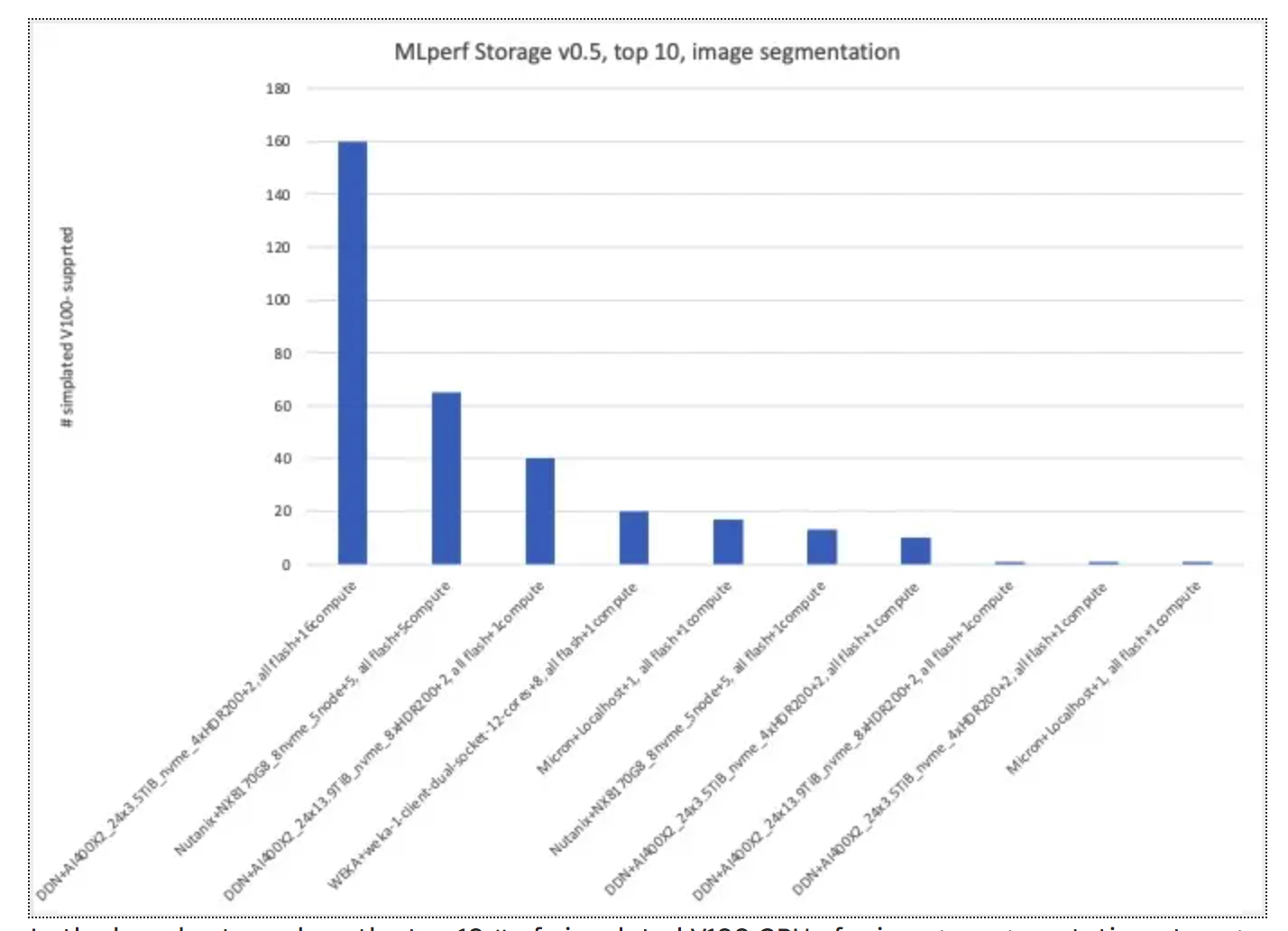

For the v0.5 version, they have released 2 simulated DNN training workloads one for image segmentation (3D-Unet [146MB/sample]) and the other for BERT NLP (2.5KB/sample).

The GPU being simulated is a NVIDIA V100. What they showing with their benchmark is a compute system (with GPUs) reading data directly from a storage system.

By using simulated (GPU) compute, the benchmark doesn’t need physical GPU hardware to run. However, the veracity of the benchmark is somewhat harder to depend on.

But, if one considers, the reported benchmark metric, # supported V100s, as a relative number across the storage submissions, one is on more solid footing. Using it as a real number of V100s that could be physically supported is perhaps invalid.

The other constraint from the benchmark was keeping the simulated (V100) GPUs at 90% busy. MLperf storage benchmark reports, number of samples/second,Mb/s metrics as well as # simulated (V100) GPUs supported (@90% utilization).

In the bar chart, we show the top 10 # of simulated V100 GPUs for image segmentation storage submissions, DDN AI400X2 had 5 submissions in this category.

The interesting comparison is probably between DDN’s #1 and #3 submission.

The #1 submission had smaller amount of data (24X3.5TB = 64TB of flash), used 200Gb/s IB, with 16 compute nodes and supported 160 simulated V100s.

The #3 submission had more data (24X13.9TB=259TB of flash),used 400Gb/s IB with one compute node and supports only 40 simulated V100s.

It’s not clear why the same storage, with less flash storage, and slower interfaces would support 4x the simulated GPUs than the same storage, with more flash storage and faster interfaces.

I can only conclude that the number of compute nodes makes a significant difference in simulated GPUs supported.

One can see a similar example of this phenomenon with Nutanix #2 and #6 submissions above. Here the exact same storage was used for 2 submissions, one with 5 compute nodes and the other with just one but the one with more compute nodes supported 5x the # of simulated V100 GPUs.

Lucky for us, the #3-#10 submissions in the above chart, all used one compute node and as such are more directly comparable.

So, if we take #3-#5 in the chart above, as the top 3 submissions (using one compute node), we can see that the #3 DDN AI400X2 could support 40 simulated V100s, the #4 Weka IO storage cluster could support 20 simulated V100s and the #5 Micron NVMe SSD could support 17 simulated V100s.

The Micron SSD used an NVMe (PCIe Gen4) interface while the other 2 storage systems used 400Gb/s IB and 100GbE, respectively. This tells us that interface speed, while it may matter at some point, doesn’t play a significant role in determining the # simulated V100s.

Both the DDN AI4000X2 and Weka IO storage systems are sophisticated storage systems that support many protocols for file access. Presumably the Micron SSD local storage was directly mapped to a Linux file system.

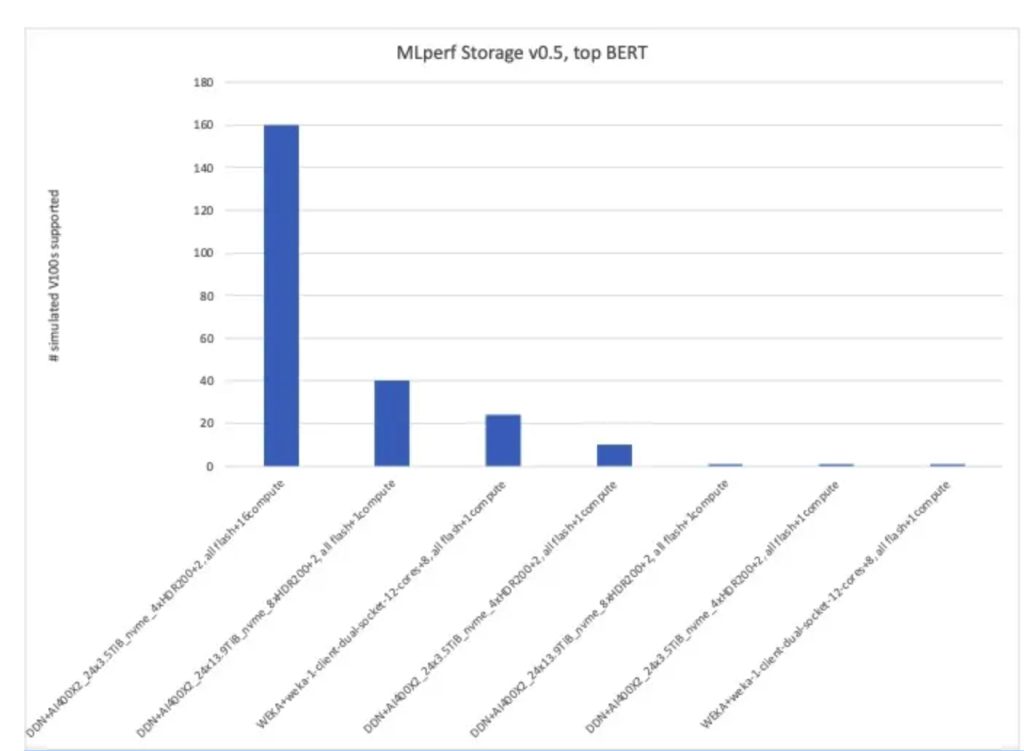

The only other MLperf storage benchmark that had submissions was for BERT, a natural language model.

In the chart, we show the # of simulated V100 GPUs on the vertical axis. We see the same impact here of having multiple compute nodes in the #1 DDN solution supporting 160 simulated V100s. But in this case, all the remaining systems, used one compute node.

Comparing the #2-4 BERT submissions, both the #2 and #4 are DDN AI400X2 storage systems. The #2 system had faster interfaces and more storage than the #4 system and supported 40 simulated GPUs vs. the other only supporting 10 simulated V100s.

Once again, Weka IO storage system came in at #3 (2nd place in the one compute node systems) and supported 24 simulated V100s.

A couple of suggestions for MLperf:

There should be different classes of submissions one class for only one compute node and the other for any number of compute nodes.

I would up level the simulated GPU configurations to A100 rather than V100s, which would only be one gen behind best in class GPUs.

I would include a standard definition for a compute node. I believe these were all the same, but if the number of compute nodes can have a bearing on the number of V100s supported, the compute node hardware/software should be locked down across submissions.

We assume that the protocol used to access the storage oven IB or Ethernet was standard NFS protocols and not something like GPUDirect storage or other RDMA variants. As the GPUs were simulated this is probably correct but if not, it should be specfied.

I would describe the storage configurations with more detail, especially for SDS systems. Storage nodes for these systems can vary in storage as well as compute cores/memory sizes which can have a significant bearing on storage throughput.

To their credit this is MLperfs first report on their new storage benchmark and I like what I see here. With the information provided, one can at least start to see some true comparisons of storage systems under AI workloads.

In addition to the new MLperf storage benchmark, MLperf released new inferencing benchmarks which included updates to older benchmark NN models as well as a brand new GPT-J inferencing benchmark. I’ll report on these next time.

Subscribe to our free daily newsletter

Subscribe to our free daily newsletter