From AWS, Reduce Archive Cost with Serverless Data Archiving

Present more cost-efficient option with serverless data archiving on Amazon Web Services.

This is a Press Release edited by StorageNewsletter.com on July 21, 2023 at 2:01 pm

Blog authored by Rostislav Markov, principal architect, professional services, AWS, and

Davíd Ramírez, software architect and architecture leader, Capgemini, Getronics, and Liberty Insurance.

For regulatory reasons, decommissioning core business systems in financial services and insurance (FSI) markets requires data to remain accessible years after the application is retired.

Traditionally, FSI companies either outsourced data archiving to 3rd-party service providers, which maintained application replicas, or purchased vendor software to query and visualize archival data.

In this blog post, we present a more cost-efficient option with serverless data archiving on Amazon Web Services (AWS). In our experience, you can build your own cloud-native solution on Amazon Simple Storage Service (Amazon S3) at one-fifth of the price of 3rd-party alternatives. If you are retiring legacy core business systems, consider serverless data archiving for cost-savings while keeping regulatory compliance.

Serverless data archiving and retrieval

Modern archiving solutions follow the principles of modern applications:

- Serverless-first development, to reduce management overhead.

- Cloud-native, to leverage native capabilities of AWS services, such as backup or DR, and avoid custom builds.

- Consumption-based pricing, since data archival is consumed irregularly.

- Speed of delivery, as both implementation and archive operations need to be performed quickly to fulfill regulatory compliance.

- Flexible data retention policies, which can be enforced in an automated manner.

AWS Storage and Analytics services offer the necessary building blocks for a modern serverless archiving and retrieval solution.

Data archiving can be implemented on top of Amazon S3 and AWS Glue.

- Amazon S3 storage tiers enable different data retention policies and retrieval SLAs. You can migrate data to Amazon S3 using AWS Database Migration Service; otherwise, consider another data transfer service, such as AWS DataSync or AWS Snowball.

- AWS Glue crawlers automatically infer both database and table schemas from your data in Amazon S3 and store the associated metadata in the AWS Glue Data Catalog.

- Amazon CloudWatch monitors the execution of AWS Glue crawlers and notifies of failures.
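As an illustration of how Amazon S3 storage tiers can express a retention policy, the following minimal sketch builds an S3 lifecycle configuration that moves objects to a colder tier and later expires them. The domain prefixes, day counts, and bucket name are assumptions made for the example, not values from the solution described here.

```python
def build_lifecycle_rule(prefix: str, transition_days: int,
                         storage_class: str, expire_days: int) -> dict:
    """Build one S3 lifecycle rule: transition to a colder tier, then expire."""
    if not 0 < transition_days < expire_days:
        raise ValueError("transition must happen before expiration")
    return {
        "ID": f"archive-{prefix.strip('/')}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": transition_days, "StorageClass": storage_class}],
        "Expiration": {"Days": expire_days},
    }


# Hypothetical domains and retention periods, for illustration only.
lifecycle_configuration = {
    "Rules": [
        build_lifecycle_rule("claims/", 90, "GLACIER", 3650),
        build_lifecycle_rule("receipts/", 30, "STANDARD_IA", 2555),
    ]
}

# With boto3 installed and credentials configured, this could be applied with:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-archive-bucket",  # hypothetical bucket name
#       LifecycleConfiguration=lifecycle_configuration,
#   )
```

Building the configuration as plain data keeps the policy reviewable and testable before anything is applied to a bucket.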

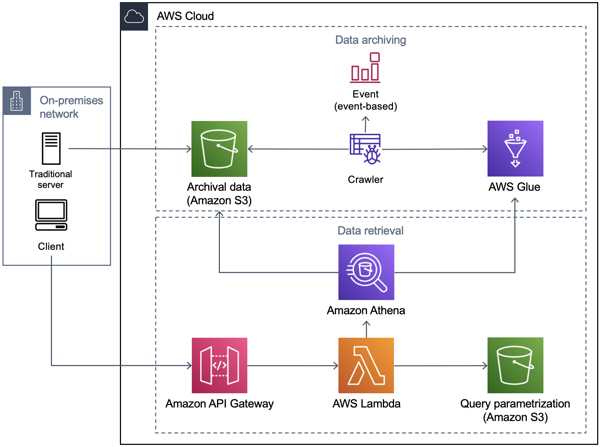

Figure 1 provides an overview of the solution.

Once the archival data is catalogued, Amazon Athena can be used for serverless data query operations using standard SQL.

- Amazon API Gateway receives the data retrieval requests and eases integration with other systems via REST, HTTPS, or WebSocket.

- AWS Lambda reads parametrization data/templates from Amazon S3 in order to construct the SQL queries. Alternatively, query templates can be stored as key-value entries in a NoSQL store, such as Amazon DynamoDB.

- Lambda functions trigger Athena with the constructed SQL query.

- Athena uses the AWS Glue Data Catalog to retrieve table metadata for the Amazon S3 (archival) data and to return the SQL query results.
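The retrieval flow above can be sketched as a Lambda-style handler that fills a stored query template and prepares the Athena request. This is a sketch under stated assumptions: the template name, table and column names, database name, and results location are hypothetical, and the templates are held in memory here instead of being read from Amazon S3 or Amazon DynamoDB.

```python
import re
from string import Template

# Hypothetical query templates; in the described design these would be loaded
# from Amazon S3 (or a key-value store such as Amazon DynamoDB).
QUERY_TEMPLATES = {
    "policy_by_id": Template(
        "SELECT * FROM policies WHERE policy_id = '$policy_id'"
    ),
}

# Allowlist for parameter values, so request input cannot alter the SQL structure.
_SAFE_VALUE = re.compile(r"[A-Za-z0-9_-]+")


def build_query(template_name: str, params: dict) -> str:
    """Fill the named template after validating every parameter value."""
    template = QUERY_TEMPLATES[template_name]
    for name, value in params.items():
        if not _SAFE_VALUE.fullmatch(str(value)):
            raise ValueError(f"unsafe value for parameter {name!r}")
    return template.substitute({k: str(v) for k, v in params.items()})


def athena_request(query: str, database: str, output_location: str) -> dict:
    """Keyword arguments for the Athena StartQueryExecution call."""
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def handler(event: dict, context=None) -> dict:
    """Lambda-style entry point; returns the request instead of sending it."""
    query = build_query(event["template"], event.get("params", {}))
    request = athena_request(
        query,
        database="archive_db",                          # hypothetical database
        output_location="s3://example-athena-results/",  # hypothetical bucket
    )
    # With boto3 available, the query could be started with:
    #   boto3.client("athena").start_query_execution(**request)
    return request
```

Validating parameters against an allowlist is one simple way to keep user input out of the SQL structure; a production setup might prefer a stricter, per-parameter schema.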

How we built serverless data archiving

An early build-or-buy assessment compared vendor products with a custom-built solution using Amazon S3, AWS Glue, and a user frontend for data retrieval and visualization.

The total cost of ownership over a 10-year period for one insurance core system (Policy Admin System) was $0.25 million to build and run the custom solution on AWS compared with >$1.1 million for 3rd-party alternatives. The implementation cost advantage of the custom-built solution was due to development efficiencies using AWS services. The lower run cost resulted from a decreased frequency of archival usage and paying only for what you use.
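To put the two figures side by side, the short calculation below uses only the numbers quoted above. Because the 3rd-party figure is a lower bound (">$1.1 million"), the results are bounds as well.

```python
build_and_run_musd = 0.25  # custom solution on AWS, 10-year TCO (from the text)
third_party_musd = 1.1     # lower bound for 3rd-party alternatives (from the text)

cost_ratio = build_and_run_musd / third_party_musd
savings_musd = third_party_musd - build_and_run_musd

print(f"custom cost as share of 3rd-party cost: at most {cost_ratio:.0%}")
print(f"savings over 10 years: at least ${savings_musd:.2f} million")
```

The resulting share of at most 23% is broadly in line with the "one-fifth of the price" estimate given earlier.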

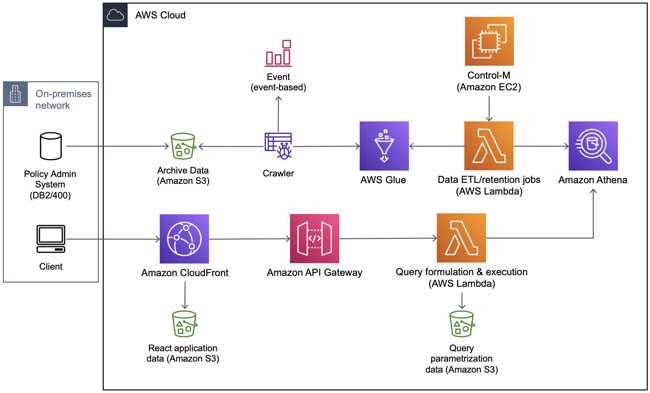

The data archiving solution was implemented with the following AWS services:

- Amazon S3 is used to persist archival data in Parquet format (optimized for analytics and compressed to reduce storage space) that is loaded from the legacy insurance core system. The archival data source was AS400/DB2, and the data was moved to Amazon S3 with Informatica Cloud.

- AWS Glue crawlers infer the database schema from objects in Amazon S3 and create tables in AWS Glue for the decommissioned application data.

- Lambda functions (Python) remove data records based on retention policies configured for each domain, such as customers, policies, claims, and receipts. A daily job (Control-M) initiates the retention process.
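The per-domain retention step can be sketched as follows. The retention periods, the record fields (`domain`, `closed_on`), and the function names are assumptions for illustration; the source does not state the actual policies or data layout. The sketch covers only the selection of expired records, not their removal from the Parquet files.

```python
from datetime import date

# Hypothetical retention periods in years per domain (not stated in the source).
RETENTION_YEARS = {"customers": 10, "policies": 10, "claims": 7, "receipts": 5}


def cutoff_date(domain: str, today: date) -> date:
    """Oldest date that must still be retained for the given domain."""
    years = RETENTION_YEARS[domain]
    try:
        return today.replace(year=today.year - years)
    except ValueError:
        # 29 February has no counterpart in a non-leap target year.
        return today.replace(year=today.year - years, day=28)


def expired_records(records: list, today: date) -> list:
    """Return the records whose closing date is older than their domain cutoff."""
    return [
        r for r in records
        if r["closed_on"] < cutoff_date(r["domain"], today)
    ]


# Example run, as a daily job might trigger it.
sample = [
    {"id": 1, "domain": "claims", "closed_on": date(2015, 1, 1)},
    {"id": 2, "domain": "claims", "closed_on": date(2020, 1, 1)},
    {"id": 3, "domain": "receipts", "closed_on": date(2018, 7, 20)},
]
to_remove = expired_records(sample, today=date(2023, 7, 21))
```

Keeping the policy in a single mapping means a change to one domain's retention period does not require touching the selection logic.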

Figure 2. Exemplary implementation of serverless data archiving and retrieval for insurance core system

Retrieval operations are formulated and executed via Python functions in Lambda. The following AWS resources implement the retrieval logic:

- Athena is used to run SQL queries over the AWS Glue tables for the decommissioned application.

- Lambda functions (Python) build and execute queries for data retrieval. The functions render HTML snippets using the Jinja templating engine and Athena query results, returning the selected template filled with the requested archive data. Using Jinja as the templating engine improved the speed of delivery and reduced the heavy lifting of frontend and backend changes when modeling retrieval operations by ~30% due to the decoupling between application layers. As a result, engineers only need to build an Athena query with the linked Jinja template.

- Amazon S3 stores templating configuration and queries (JSON files) used for query parametrization.

- Amazon API Gateway serves as the single point of entry for API calls.
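To illustrate the step from Athena results to an HTML snippet, the sketch below converts a result payload into row dictionaries and renders a table. It uses Python's standard library as a stand-in for Jinja so that it runs without extra dependencies; it is not the authors' template code. It assumes the first row of the result holds the column names, as Athena returns for `SELECT` queries on the first page of results, and the sample data is invented.

```python
from html import escape


def rows_from_athena(result: dict) -> list:
    """Convert an Athena GetQueryResults payload into dicts keyed by column name."""
    rows = result["ResultSet"]["Rows"]
    header = [cell.get("VarCharValue", "") for cell in rows[0]["Data"]]
    return [
        # NULL cells carry no "VarCharValue" key, so they become None.
        dict(zip(header, (cell.get("VarCharValue") for cell in row["Data"])))
        for row in rows[1:]
    ]


def render_table(records: list) -> str:
    """Render records as an HTML table, escaping every value."""
    if not records:
        return "<p>No records found.</p>"
    columns = list(records[0])
    head = "".join(f"<th>{escape(c)}</th>" for c in columns)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(r[c] or '')}</td>" for c in columns) + "</tr>"
        for r in records
    )
    return f"<table><tr>{head}</tr>{body}</table>"


# Invented sample payload in the shape returned by GetQueryResults.
sample_result = {"ResultSet": {"Rows": [
    {"Data": [{"VarCharValue": "policy_id"}, {"VarCharValue": "holder"}]},
    {"Data": [{"VarCharValue": "P-1001"}, {"VarCharValue": "A & B"}]},
    {"Data": [{"VarCharValue": "P-1002"}, {}]},
]}}
records = rows_from_athena(sample_result)
snippet = render_table(records)
```

With a templating engine such as Jinja, `render_table` would be replaced by a template per retrieval operation, which is the decoupling between query and presentation described above.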

The user frontend for data retrieval and visualization is implemented as a web application using the React JavaScript library (with static content on Amazon S3), with Amazon CloudFront used for web content delivery.

The archiving solution enabled 80 use cases with 60 queries and reduced storage from 3TB on source to 35GB on Amazon S3.
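For a sense of scale, the storage figures above work out as follows, assuming decimal units (1 TB = 1000 GB). The source does not break down how much of the reduction comes from Parquet compression and how much from other factors.

```python
source_gb = 3 * 1000  # 3 TB on the source system, assuming 1 TB = 1000 GB
archive_gb = 35       # size on Amazon S3 (from the text)

reduction_factor = source_gb / archive_gb
reduction_share = 1 - archive_gb / source_gb

print(f"about {reduction_factor:.0f}x smaller, {reduction_share:.1%} less storage")
```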

The success of the implementation depended on the following key factors:

- Appropriate sponsorship from business across all areas (claims, actuarial, compliance, etc.)

- Definition of SLAs for responding to courts, regulators, etc.

- Minimum viable and mandatory approach

- Prototype visualizations early on (fail fast)

Conclusion

Traditionally, FSI companies relied on vendor products for data archiving. In this post, we explored how to build a scalable solution on Amazon S3 and discussed key implementation considerations. We have demonstrated that AWS services enable FSI companies to build a serverless archiving solution while reaching and keeping regulatory compliance at a lower cost.

AWS services covered in this blog:

Managing your storage lifecycle

Querying Amazon S3 Inventory with Amazon Athena
