AWS: Route Traffic to Amazon FSx for NetApp ONTAP Multi-AZ File System Using Network Load Balancer

Core architecture principle of building highly available applications on AWS is to use multi-Availability Zone (AZ) architecture.

This is a Press Release edited by StorageNewsletter.com on December 20, 2022 at 2:01 pm By Jay Horne, senior storage solutions architect, WW specialist organization, AWS


A core architecture principle of building highly available applications on AWS is to use a multi-Availability Zone (AZ) architecture. More and more customers are migrating their file data to Amazon FSx for NetApp ONTAP and have asked for more flexibility in how they connect their networks to their Multi-AZ deployment type from outside of their Amazon Virtual Private Cloud (VPC). Using a Network Load Balancer (NLB) can help simplify connectivity with your Multi-AZ FSx for ONTAP file systems.


With the Multi-AZ deployment option, your file system spans two subnets in different Availability Zones, and it has a third, private floating IP address range that is used for NFS, SMB, and management (for example, when using NetApp management tools to administer your file system). The floating IP addresses aren’t associated with any specific subnet. Instead, they automatically route to the active file server’s Elastic Network Interface (ENI) to ensure a seamless failover between file servers. To access these floating IP addresses from outside of the file system’s Amazon Virtual Private Cloud (Amazon VPC), for example from an on-premises network, you must use AWS Transit Gateway to route traffic destined for your file system. For customers who don’t have a Transit Gateway, this post walks through another method, based on a Network Load Balancer (NLB), to enable NFS, SMB, and management access from outside of your file system’s deployment VPC without the routing configuration that Transit Gateway requires.

FSx for ONTAP also offers a Single-Availability Zone deployment option. Single-AZ file systems are built for use cases that need storage replicated within an AZ but don’t require resiliency across AZs, such as development and test workloads. Multi-AZ file systems provide a simple storage solution for workloads that need resiliency across AZs in the same AWS Region, and they are recommended for your primary production data sets.

In this post, I walk through setting up a Multi-Availability Zone NLB, which will provide a DNS name that can be used to access your file system’s data over NFS and SMB, thereby simplifying network configuration for access from outside of the deployment VPC. There are costs associated with NLB, and you’ll also see a model for how you can anticipate these extra costs.

Prerequisites

You should already have the following resources configured and running:

- An FSx for ONTAP Multi-AZ file system. If you don’t have one, you can create one by following the creating FSx for ONTAP file systems section of the FSx for ONTAP user guide.

  Important: When creating the file system, you must choose an endpoint IP address range that is outside of the default 198.19.x.x endpoint IP address range, as it’s reserved for NLB internal operations. In this post, I use 100.64.55.0/24.

- A Storage Virtual Machine (SVM) created and running with an IP address in the private IP address range, an SVM running with a standard private IP address subnet, and volumes and shares created for SMB and NFS.
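The endpoint IP address range requirement above can be checked before you create the file system. The following is a minimal sketch that assumes the "198.19.x.x" range corresponds to the CIDR block 198.19.0.0/16; the function name is hypothetical and not part of any AWS tool.

```python
import ipaddress

# Assumption: "198.19.x.x" in the prerequisite is read as 198.19.0.0/16.
RESERVED_RANGE = ipaddress.ip_network("198.19.0.0/16")


def endpoint_range_is_usable(cidr: str) -> bool:
    """Return True if the candidate endpoint range avoids the reserved range."""
    candidate = ipaddress.ip_network(cidr, strict=True)
    return not candidate.overlaps(RESERVED_RANGE)


# The range used in this post passes the check.
print(endpoint_range_is_usable("100.64.55.0/24"))   # True
# A range inside 198.19.x.x does not.
print(endpoint_range_is_usable("198.19.255.0/24"))  # False
```

The check only covers overlap with the reserved range. It does not verify that the range is free of conflicts with your VPC or on-premises networks.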

Creating an NLB for your SVM

An FSx for ONTAP deployment is composed of a file system, one or more SVMs that host your data, and one or more volumes that contain your data. Each SVM has its own separate configuration for authentication and access control, serving as an independent virtual file server. For Multi-AZ file systems, each SVM has an IP address in the private IP address range for servicing NFS and SMB traffic (and for using NetApp management tools). To simplify NFS and SMB routing, you’ll set up an NLB for each of your SVMs.

Complete the following steps to set up an NLB:

- Gathering resource identifiers
- Creating NLB target groups
- Setting ‘connection termination on deregistration’
- Creating your NLB
- Validating data access using the NLB

1. Gathering resource identifiers

a. First, you’ll gather resource identifiers that will be used when configuring the NLB. From the Amazon FSx console, select your FSx for ONTAP file system.

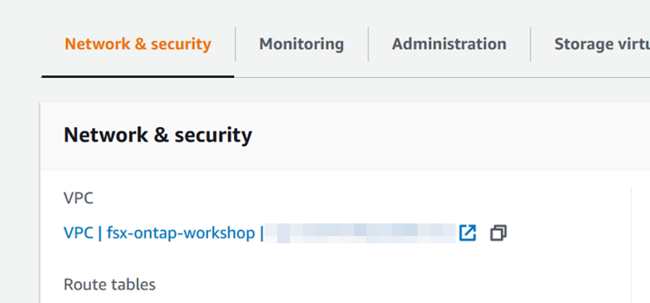

b. From the File system summary page, select the Network and security tab and note the VPC used. You’ll need this later in the process.

c. On the same page, under Preferred subnet, select the Network interface link.

On the network interfaces page on the Amazon Elastic Compute Cloud (Amazon EC2) console, note the security group ID associated with the ENI of your preferred subnet.

Make sure that the security group associated with this ENI is configured to allow inbound traffic for NFS and SMB for the IP address range (CIDR range) of the subnet where you intend to create the NLB. The File system access control with Amazon VPC section of the FSx for ONTAP user guide summarizes which protocols and ports are needed for different FSx for ONTAP traffic.
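As a rough aid for the security group check, the sketch below reports which of the NFS, RPC, and SMB ports from the table later in this post are not yet allowed from the NLB subnet. It assumes the rules are supplied in the `IpPermissions` shape that the EC2 `describe_security_groups` API returns. The function names and sample values are hypothetical, and rules that reference other security groups instead of CIDR ranges are ignored.

```python
import ipaddress

# Protocol and port combinations taken from the port table in this post.
REQUIRED = [("tcp", 2049), ("udp", 2049), ("tcp", 111), ("udp", 111), ("tcp", 445)]


def rule_allows(perm, protocol, port, subnet):
    """Return True if one IpPermissions entry allows protocol/port from subnet."""
    rule_protocol = perm.get("IpProtocol")
    if rule_protocol == "-1":
        pass  # "-1" means all protocols and all ports
    elif rule_protocol == protocol:
        if not perm.get("FromPort", -1) <= port <= perm.get("ToPort", -1):
            return False
    else:
        return False
    # Only CIDR-based sources are considered in this sketch.
    return any(
        subnet.subnet_of(ipaddress.ip_network(ip_range["CidrIp"]))
        for ip_range in perm.get("IpRanges", [])
    )


def missing_rules(ip_permissions, nlb_subnet_cidr):
    """List the (protocol, port) pairs that no inbound rule covers."""
    subnet = ipaddress.ip_network(nlb_subnet_cidr)
    return [
        (protocol, port)
        for protocol, port in REQUIRED
        if not any(rule_allows(p, protocol, port, subnet) for p in ip_permissions)
    ]


# Hypothetical rule set: only NFS over TCP is open to the VPC range.
sample = [{"IpProtocol": "tcp", "FromPort": 2049, "ToPort": 2049,
           "IpRanges": [{"CidrIp": "10.0.0.0/16"}]}]
print(missing_rules(sample, "10.0.1.0/24"))
```

An empty result means every required combination is covered by at least one CIDR-based inbound rule.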

d. Back on the File system summary page, select the Storage virtual machines tab and select the SVM for which you want to create an NLB to open the Storage virtual machine summary page. Then, find the Endpoints section on the SVM summary page and note the Management IP address (the Management, NFS, and SMB IP addresses are all the same). You’ll need this later.

2. Creating NLB target groups

Now that you have the SVM’s management IP address and have validated your security group, you’ll create target groups for your NLB. Each target group is used to route requests to one or more registered targets. You’ll create one target group for each entry listed in the table below, and you’ll create the NLB itself in a later step.

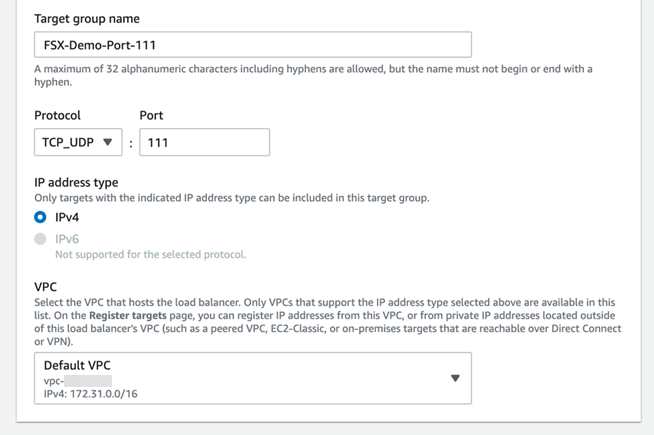

a. In the Amazon EC2 management console, find the Load Balancing section in the side navigation pane, select the Target Groups page, and then select Create target group.

You’ll create one Target group for each entry in the following table. The following steps show you the options that you should select while creating each target group.

| Port number | Protocol | Service name | Components that are involved in communication |
|---|---|---|---|
| 2049 | TCP and UDP | NFSv4 or NFSv3 | NFS clients |
| 111 | TCP and UDP | RPC (required only by NFSv3) | NFS clients |
| 445 | TCP | SMB | SMB clients and CIFS |

b. For each target group, select IP addresses under Basic configuration, then provide a Target group name, use IPv4, and specify the VPC for your FSx for ONTAP file system. You can leave Health checks default, or change it according to your preference. Learn about Health checks for your target groups in the Elastic Load Balancing user guide.

c. On the Register targets page, select ‘Other private IP address’ for Network, and ‘All’ for Availability Zone. Under Allowed ranges, provide the SVM’s management IP address that you noted earlier.

On the same page, select the Include as pending below option for each target group. The port will already be provided.

d. Repeat this process for each target group that you must create, listed by the combination of port numbers and protocols in the table above.
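The console steps above can also be expressed as request parameters for the Elastic Load Balancing v2 API. The sketch below only builds the parameter dictionaries (it makes no AWS calls) in the shape accepted by boto3's `elbv2` `create_target_group` and `register_targets` operations. It assumes that the "TCP and UDP" rows map to the `TCP_UDP` target group protocol; separate TCP and UDP groups would be an alternative. The names, VPC ID, and SVM IP address are hypothetical.

```python
# (port, target group protocol, short service label) from the port table.
# Assumption: "TCP and UDP" rows use the TCP_UDP protocol type.
PORT_TABLE = [
    (2049, "TCP_UDP", "nfs"),
    (111, "TCP_UDP", "rpc"),
    (445, "TCP", "smb"),
]


def target_group_requests(vpc_id, svm_ip, name_prefix="fsxn"):
    """Build one create/register parameter pair per row of the port table."""
    requests = []
    for port, protocol, service in PORT_TABLE:
        requests.append({
            # Parameters for elbv2.create_target_group(**...)
            "create_target_group": {
                "Name": f"{name_prefix}-{service}-{port}",
                "Protocol": protocol,
                "Port": port,
                "VpcId": vpc_id,
                "TargetType": "ip",
            },
            # Parameters for elbv2.register_targets(TargetGroupArn=..., **...).
            # The ARN is only known after the target group has been created.
            # "all" mirrors the 'All' Availability Zone choice for an IP
            # address outside the VPC subnets.
            "register_targets": {
                "Targets": [
                    {"Id": svm_ip, "Port": port, "AvailabilityZone": "all"}
                ],
            },
        })
    return requests


# Hypothetical identifiers for illustration only.
for request in target_group_requests("vpc-0123456789abcdef0", "100.64.55.10"):
    print(request["create_target_group"]["Name"])
```

Keeping the port table as data makes it easy to drop the port 111 row if you only use NFSv4, since the table notes that RPC is required only by NFSv3.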

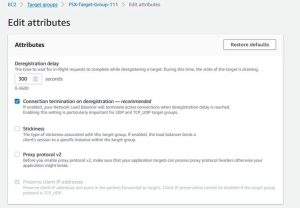

3. Setting ‘connection termination on deregistration’

Now, you must make sure that all of the target groups have ‘connection termination on deregistration’ enabled.

a. On the Target groups page of the Amazon EC2 management console, select each target group that you created earlier, one at a time, and under the Actions menu, select Edit attributes.

b. On the Edit Attributes page, select the checkbox for Connection termination on deregistration, then select Save changes.

c. Repeat this process for each target group that you created.
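If you prefer to script this step, the same setting is exposed as a target group attribute. The sketch below builds the parameters for boto3's `elbv2` `modify_target_group_attributes` operation for a list of target groups, without calling AWS. The function name and the ARNs are hypothetical placeholders.

```python
# Attribute key for 'connection termination on deregistration' on NLB target groups.
TERMINATION_KEY = "deregistration_delay.connection_termination.enabled"


def termination_requests(target_group_arns, enabled=True):
    """Build modify_target_group_attributes parameters for each target group."""
    value = "true" if enabled else "false"  # attribute values are strings
    return [
        {
            "TargetGroupArn": arn,
            "Attributes": [{"Key": TERMINATION_KEY, "Value": value}],
        }
        for arn in target_group_arns
    ]


# Placeholder ARNs; real values come from the target groups created earlier.
arns = ["arn:placeholder:targetgroup/fsxn-nfs-2049", "arn:placeholder:targetgroup/fsxn-smb-445"]
for params in termination_requests(arns):
    # With boto3 this would be passed as:
    # elbv2.modify_target_group_attributes(**params)
    print(params["TargetGroupArn"], params["Attributes"][0]["Value"])
```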

4. Creating your NLB

With target groups created, you can now create your NLB.

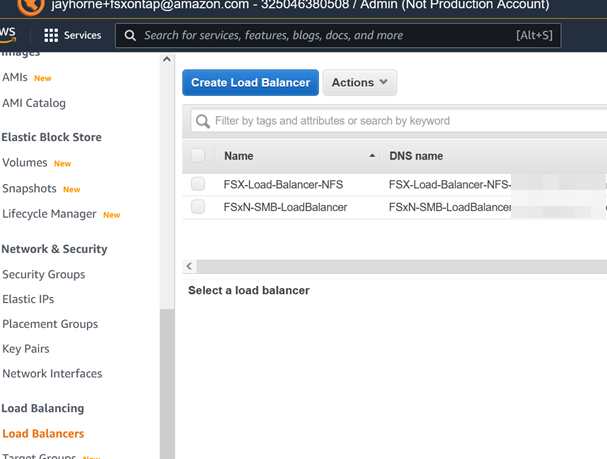

a. In the Amazon EC2 management console, select the Load Balancers page. On the Load Balancers page, select Create Load Balancer.

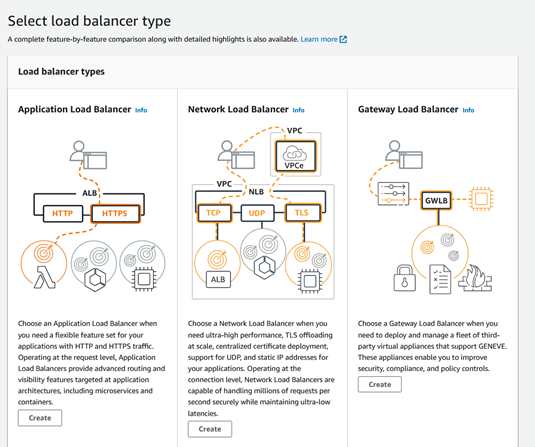

b. For load balancer type, select Network Load Balancer and select Create.

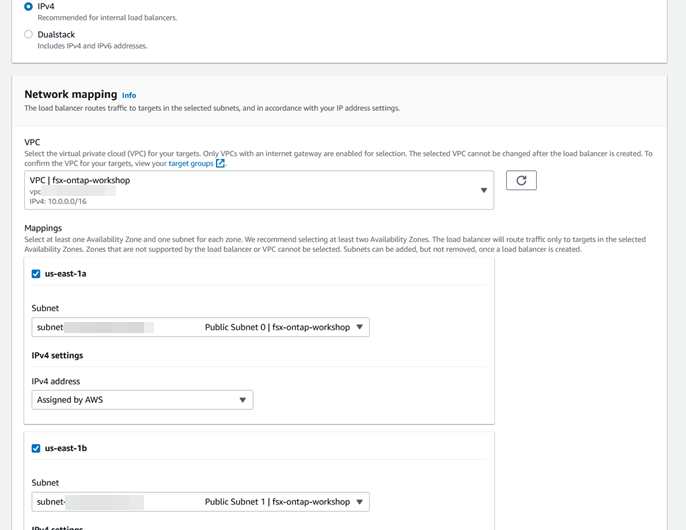

c. In Basic configuration, provide a name for the load balancer, and for Scheme choose ‘Internal’. Leave the IP address type as ‘IPv4’. In Network mapping, select the deployment VPC of your file system. For Mappings, select the preferred and standby subnets of your file system.

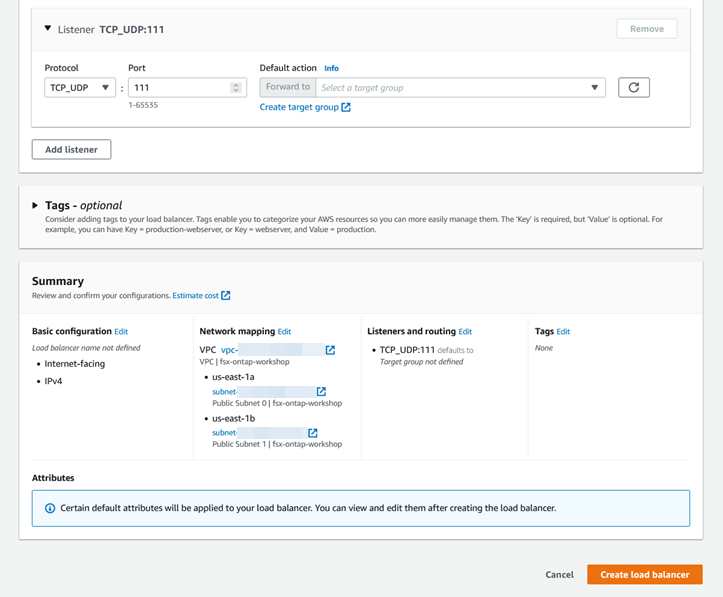

d. Under Listeners and routing, add listeners for the protocols and ports from the table provided when creating your target groups. For the Default action of each protocol and port combination, choose the target group that you created with the same mappings to associate for the “Forward to” action. When you’re finished, select Create load balancer.

Your NLB will take approximately five minutes to create.
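The load balancer and listener settings described in this step can be sketched as API parameters as well. The example below builds dictionaries in the shape used by boto3's `elbv2` `create_load_balancer` and `create_listener` operations and does not call AWS. All names, subnet IDs, and ARNs are hypothetical, and the listener protocols assume that each listener uses the same protocol as its target group.

```python
def load_balancer_request(name, preferred_subnet_id, standby_subnet_id):
    """Parameters for an internal, IPv4 Network Load Balancer in both subnets."""
    return {
        "Name": name,
        "Type": "network",
        "Scheme": "internal",
        "IpAddressType": "ipv4",
        "Subnets": [preferred_subnet_id, standby_subnet_id],
    }


def listener_requests(load_balancer_arn, target_groups):
    """Build one forward listener per (protocol, port) -> target group ARN entry."""
    return [
        {
            "LoadBalancerArn": load_balancer_arn,
            "Protocol": protocol,
            "Port": port,
            "DefaultActions": [{"Type": "forward", "TargetGroupArn": arn}],
        }
        for (protocol, port), arn in target_groups.items()
    ]


# Hypothetical identifiers for illustration only.
nlb = load_balancer_request("fsxn-svm1-nlb", "subnet-aaaa1111", "subnet-bbbb2222")
listeners = listener_requests(
    "arn:placeholder:loadbalancer/net/fsxn-svm1-nlb",
    {
        ("TCP_UDP", 2049): "arn:placeholder:targetgroup/fsxn-nfs-2049",
        ("TCP_UDP", 111): "arn:placeholder:targetgroup/fsxn-rpc-111",
        ("TCP", 445): "arn:placeholder:targetgroup/fsxn-smb-445",
    },
)
print(nlb["Scheme"], len(listeners))
```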

5. Validating data access using the NLB

a. Once your NLB is created, validate that its DNS name is resolvable from one of your clients using nslookup or a similar tool by following the steps documented in the Premium Support knowledge center guide “What’s the source IP address of the traffic that Elastic Load Balancing sends to my web servers?”. This guide also shows how to get the IP addresses of your NLB, in case you want to use an IP address rather than the DNS name.

b. Finally, validate that you can use the DNS name of your NLB to mount a volume over NFS or SMB. See Mounting volumes in the FSx for ONTAP user guide for how to mount over NFS or SMB. Don’t use the DNS name or IP address of the SVM’s management/NFS/SMB endpoint. Use the DNS name of the load balancer instead.
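Both validation steps can be approximated from a client with a short script. The sketch below resolves the NLB DNS name with Python's standard `socket` module and prints a generic Linux NFS mount command that targets the NLB rather than the SVM endpoint. The DNS name, junction path, and mount point are hypothetical, and the mount command is the generic `mount -t nfs` form, so check it against the Mounting volumes guide for your client.

```python
import socket


def resolve_ipv4(hostname, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPv4 addresses for a DNS name."""
    infos = resolver(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def nfs_mount_command(nlb_dns_name, junction_path, mount_point):
    """Build a generic Linux NFS mount command that targets the NLB DNS name."""
    return f"sudo mount -t nfs {nlb_dns_name}:{junction_path} {mount_point}"


if __name__ == "__main__":
    # Hypothetical NLB DNS name; replace with the name shown for your NLB.
    nlb_dns = "my-fsxn-nlb.example.internal"
    try:
        print(resolve_ipv4(nlb_dns))
    except socket.gaierror as error:
        print(f"DNS resolution failed: {error}")
    print(nfs_mount_command(nlb_dns, "/vol1", "/fsx"))
```

The `resolver` parameter exists only so that the lookup can be replaced with a stub when testing without network access.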

Cost considerations for AWS resources provisioned in this setup

As a cost exercise for using an NLB, assume that an organization already uses Direct Connect (or AWS Site-to-Site VPN) to connect its on-premises data centers with AWS. Assume that end users in this scenario transfer approximately 50 TB of data per month to FSx for ONTAP over TCP connections established via the NLB. Considering an average of 200 new TCP connections per minute and an average connection duration of 15 seconds, we can work out the additional monthly cost incurred by this organization:

Figure 1: NLB Diagram


Unit conversions

- Processed bytes per NLB for TCP: 50 TB/month x 1,024 GB per TB x 0.00136986 months per hour = 70.136832 GB/hour

- Average number of new TCP connections: 200 per minute / 60 seconds per minute = 3.33 per second

Pricing calculations

- 70.136832 GB/hour / 1 GB processed bytes per hour per LCU = 70.136832 TCP processed bytes LCUs

- 3.33 new TCP connections / 800 connections per LCU = 0.0041625 new TCP connections LCUs

- 3.33 new TCP connections x 15 seconds = 49.95 active TCP connections

- 49.95 active TCP connections / 100,000 connections per LCU = 0.0004995 active TCP connections LCUs

- Max (70.136832 TCP processed bytes LCUs, 0.0041625 new TCP connections LCUs, 0.0004995 active TCP connections LCUs) = 70.136832 max TCP LCUs

- 70.136832 TCP LCUs x $0.006 NLB LCU price x 730 hours per month = $307.20 (for TCP traffic per Network Load Balancer)

- Max (0 UDP processed bytes LCUs, 0 new UDP flow LCUs, 0 active UDP flow LCUs) = 0.00 max UDP LCUs

- Max (0 TLS processed bytes LCUs, 0 new TLS connections LCUs, 0 active TLS connections LCUs) = 0.00 max TLS LCUs

- 1 Network Load Balancer x $307.20 = $307.20

- 1 load balancer x $0.027 x 730 hours in a month = $19.71

Total LCU charges for all Network Load Balancers (monthly): $307.20

Total hourly charges for all Network Load Balancers (monthly): $19.71

Total monthly cost: $326.91
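To adapt this estimate to your own traffic profile, the calculation can be wrapped in a small function. The sketch below reproduces the arithmetic above using the prices and per-LCU limits quoted in this post ($0.006 per LCU-hour, $0.027 per NLB-hour, 730 hours per month). It covers TCP traffic only, the function and parameter names are hypothetical, and you should confirm current pricing for your Region before relying on the result.

```python
HOURS_PER_MONTH = 730


def nlb_monthly_cost(tb_per_month, new_conns_per_minute, avg_conn_seconds,
                     lcu_price=0.006, nlb_hourly_price=0.027):
    """Estimate monthly NLB charges for TCP traffic: (LCU, hourly, total)."""
    gb_per_hour = tb_per_month * 1024 / HOURS_PER_MONTH
    new_conns_per_second = new_conns_per_minute / 60
    active_conns = new_conns_per_second * avg_conn_seconds

    # An LCU is billed on whichever dimension is consumed the most.
    lcus = max(
        gb_per_hour / 1,            # 1 GB processed per hour per LCU
        new_conns_per_second / 800,  # 800 new TCP connections per second per LCU
        active_conns / 100_000,      # 100,000 active TCP connections per LCU
    )
    lcu_charge = lcus * lcu_price * HOURS_PER_MONTH
    hourly_charge = nlb_hourly_price * HOURS_PER_MONTH
    return lcu_charge, hourly_charge, lcu_charge + hourly_charge


lcu, hourly, total = nlb_monthly_cost(50, 200, 15)
print(f"LCU charges:    ${lcu:.2f}")     # $307.20
print(f"Hourly charges: ${hourly:.2f}")  # $19.71
print(f"Total:          ${total:.2f}")   # $326.91
```

In this scenario, processed bytes dominate the LCU calculation by several orders of magnitude, so the estimate is driven almost entirely by the volume of data transferred.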

Monitoring setup availability

You can use CloudWatch metrics for your Network Load Balancer to monitor the health of your NLB and create alarms. Some important metrics are noted here.

| Metric | Description |
|---|---|
| HealthyHostCount | The number of targets that are considered healthy. |
| UnHealthyHostCount | The number of targets that are considered unhealthy. |
| ActiveFlowCount | The total number of concurrent flows (or connections) from clients to targets. This metric includes connections in the SYN_SENT and ESTABLISHED states. TCP connections aren’t terminated at the load balancer, so a client opening a TCP connection to a target counts as a single flow. |
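As one way to act on these metrics, the sketch below builds the parameters for a CloudWatch alarm on UnHealthyHostCount in the shape used by boto3's `cloudwatch` `put_metric_alarm` operation, without calling AWS. The alarm name, dimension values, period, and threshold are hypothetical choices for illustration and should be tuned to your environment.

```python
def unhealthy_host_alarm(alarm_name, load_balancer_dimension, target_group_dimension):
    """Build put_metric_alarm parameters that fire when any target is unhealthy."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/NetworkELB",  # CloudWatch namespace for NLB metrics
        "MetricName": "UnHealthyHostCount",
        "Dimensions": [
            {"Name": "LoadBalancer", "Value": load_balancer_dimension},
            {"Name": "TargetGroup", "Value": target_group_dimension},
        ],
        "Statistic": "Maximum",
        "Period": 60,                # seconds per evaluation window (assumed)
        "EvaluationPeriods": 3,      # consecutive windows before alarming (assumed)
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",
    }


# Hypothetical dimension values; take the real ones from your NLB and target group.
alarm = unhealthy_host_alarm(
    "fsxn-nfs-unhealthy-targets",
    "net/fsxn-svm1-nlb/0123456789abcdef",
    "targetgroup/fsxn-nfs-2049/0123456789abcdef",
)
# With boto3 this would be passed as: cloudwatch.put_metric_alarm(**alarm)
print(alarm["MetricName"], alarm["ComparisonOperator"])
```

Because each target group in this setup registers a single SVM IP address, an unhealthy count above zero means that the corresponding port is not reachable through the NLB.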

Conclusion

In this post, I showed you how to leverage NLBs as an alternative to AWS Transit Gateway (TGW) to access a Multi-AZ FSx for ONTAP file system from outside of the file system’s deployment VPC. By using an NLB we can access NFS, SMB, and management traffic through a DNS hostname (or IP address) that exists within the subnets native to the VPC. This solution simplifies the network configuration for customers who do not use TGW today or do not want to manage a static route entered into the TGW route table. I also showed you how to do a cost analysis of using an NLB to inform your migration planning. For instructions on how to use Transit Gateway for the same use case, see our user guide documentation on Accessing data from outside the deployment VPC.
