SSD and NAS: Improvement or Pointless Expense


This is a press release edited by StorageNewsletter.com on March 13, 2020 at 2:11 pm. This blog was posted by Ben DeLaurier, director of support, Buffalo Americas, Inc., and published on March 3, 2020.



“Do you have SSDs in your NAS?”

We get this question a lot. And I mean a lot.

There’s a lot of buzz about SSDs, and it isn’t hard to see why. Small, fast things like roadsters, fighter jets, cheetahs, and SSDs appeal to a certain primal appreciation for something built for speed. We’d all rather have a speedboat than a supertanker. It’s just cooler, at least right up to the point where you have a couple million barrels of oil to haul around.

So, about that question: the answer is no, for one simple reason. Buffalo doesn’t want to charge you a huge cost premium for storage that is likely to be way too small for your needs and that delivers only a negligible improvement in performance.

Bold words, I know. Let’s unpack that performance statement a little bit. Everyone knows that SSDs are way, way faster than HDDs. And this is true, for a given value of ‘faster’.

The primary difference is the time it takes to locate a given block of data and begin reading or writing it. On a spinning HDD, a physical arm must travel a physical distance to position the read/write head in precisely the right location. In an SSD, this is more or less instantaneous. Where this really shines is when the data to be read or written is scattered across the drive in locations that are not physically close, which would force an HDD to reposition its head frequently.
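To put rough numbers on that difference, here is a minimal Python sketch of the classic access-time model: for a random request, an HDD pays an average seek plus, on average, half a platter revolution, while a sequential request pays almost nothing. The 7,200 rpm spindle speed, 8.5 ms average seek, and 0.1 ms SSD latency are illustrative assumptions, not Buffalo measurements.

```python
# Illustrative assumptions (not measured Buffalo figures):
HDD_RPM = 7200          # spindle speed of a typical NAS-class HDD
HDD_AVG_SEEK_MS = 8.5   # average time for the arm to reach the target track
SSD_ACCESS_MS = 0.1     # rough SSD access latency; no moving parts


def hdd_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency: on average the platter turns half a revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def hdd_access_ms(sequential: bool) -> float:
    """Time to begin a read/write. Sequential access needs no repositioning of the head."""
    if sequential:
        return 0.0
    return HDD_AVG_SEEK_MS + hdd_rotational_latency_ms(HDD_RPM)


def random_ios_per_second(access_ms: float) -> float:
    """Upper bound on small random I/Os per second when access time dominates."""
    return 1000 / access_ms


if __name__ == "__main__":
    hdd_ms = hdd_access_ms(sequential=False)
    print(f"HDD random access: {hdd_ms:.2f} ms -> ~{random_ios_per_second(hdd_ms):.0f} IO/s")
    print(f"SSD random access: {SSD_ACCESS_MS:.2f} ms -> ~{random_ios_per_second(SSD_ACCESS_MS):.0f} IO/s")
```

Under these assumptions a random HDD access costs about 12.7 ms (roughly 79 small random I/Os per second) against about 10,000 for the SSD, while sequential transfers largely avoid the penalty. That is why the gap matters most for scattered data.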

And that is really great in certain use cases: those where data is read more often than it is written, and where small amounts of unrelated data need to be produced at any given moment with little tolerance for waits measured in anything longer than milliseconds. Databases, email servers, and web servers all have this usage profile and can benefit greatly from SSDs.

The problem is, that’s not what people use NAS for.

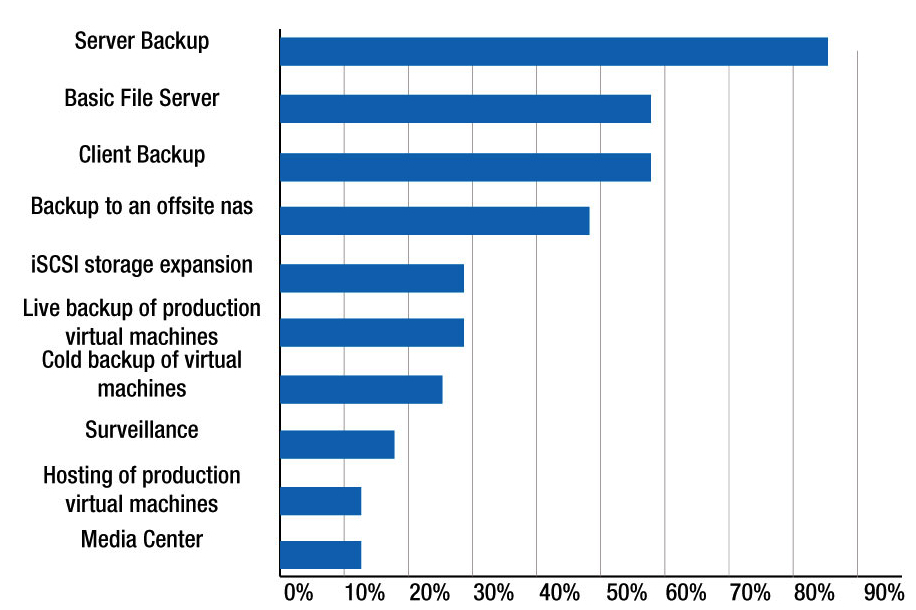

The primary NAS use cases are write-intensive (backup), not especially time-sensitive (file serving), or an add-on to existing servers that may already have a small amount of speedy storage but not a lot of space (iSCSI expansion).

In these cases, the cost premium for SSD just isn’t justified, and it is a huge premium. A NAS-class HDD can be had for about $0.02 a gigabyte or less (that’s two cents), while cheap, slower SSDs cost around 4x that and super-fast, top-tier M.2 SATA storage costs 16 times as much. (Source)
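The per-gigabyte multiples are easier to feel at NAS scale. This minimal Python sketch applies the article’s $0.02/GB, 4x, and 16x figures to a hypothetical 8 TB of raw capacity; it counts media cost only and ignores RAID overhead, enclosures, and price swings.

```python
# $/GB figures taken from the article; the capacity below is a hypothetical example.
HDD_USD_PER_GB = 0.02                       # NAS-class HDD, "$0.02 a gigabyte or less"
CHEAP_SSD_USD_PER_GB = HDD_USD_PER_GB * 4   # cheap, slower SSDs: around 4x
TOP_SSD_USD_PER_GB = HDD_USD_PER_GB * 16    # top-tier M.2 SATA storage: 16x


def storage_cost_usd(capacity_tb: float, usd_per_gb: float) -> float:
    """Raw media cost for a given capacity, using decimal units (1 TB = 1000 GB)."""
    return capacity_tb * 1000 * usd_per_gb


if __name__ == "__main__":
    capacity_tb = 8  # hypothetical NAS capacity
    for label, price in [
        ("NAS HDD", HDD_USD_PER_GB),
        ("Cheap SSD", CHEAP_SSD_USD_PER_GB),
        ("Top-tier M.2 SSD", TOP_SSD_USD_PER_GB),
    ]:
        print(f"{label:>16}: ${storage_cost_usd(capacity_tb, price):,.2f} for {capacity_tb} TB")
```

For 8 TB, that works out to about $160 in HDDs versus roughly $640 and $2,560 for the two SSD tiers.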

This is all well and good, but you may still want more speed in those use cases, and we DO have something for you there, so don’t put your wallet away quite yet.

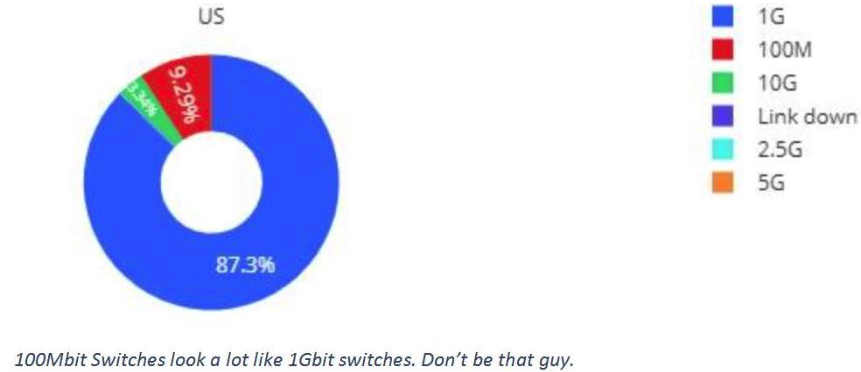

In terms of throughput in MB/s, all of our current-generation business-class NAS units can easily saturate the slowest link in the data chain: the network, the very thing that makes a NAS a NAS. At 100% link saturation, a 1GbE link maxes out at around 110MB/s, and that assumes zero other traffic on the network, which is unlikely. That’s not the worst part, though. Recent usage data from systems in the field shows that a significant number of users are still on 100Mb networks, which have been obsolete for nearly 20 years and certainly haven’t been mainstream for at least 10.
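Why does a "gigabit" link top out near 110MB/s rather than 125MB/s? A minimal Python sketch of the framing arithmetic helps. It assumes a standard 1500-byte MTU, IPv4 and TCP headers without options, and no competing traffic; file-sharing protocols add further overhead on top of this ceiling.

```python
# Assumptions: 1500-byte MTU, IPv4 and TCP headers without options, no other traffic.
MTU = 1500
IP_HEADER = 20
TCP_HEADER = 20
# Per-frame Ethernet overhead on the wire: 14-byte header, 4-byte FCS,
# 8-byte preamble/start-of-frame delimiter and 12-byte inter-frame gap.
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12


def max_tcp_throughput_mb_s(link_mbit_s: float) -> float:
    """Best-case TCP payload throughput in MB/s (1 MB = 10**6 bytes) for a link speed."""
    payload = MTU - IP_HEADER - TCP_HEADER   # 1460 bytes of data per frame
    on_wire = MTU + ETHERNET_OVERHEAD        # 1538 bytes of link time per frame
    raw_bytes_per_s = link_mbit_s * 1_000_000 / 8
    return raw_bytes_per_s * payload / on_wire / 1_000_000


if __name__ == "__main__":
    for name, speed in [("100Mb", 100), ("1GbE", 1_000), ("10GbE", 10_000)]:
        print(f"{name:>6}: ~{max_tcp_throughput_mb_s(speed):.1f} MB/s ceiling")
```

This puts the best-case ceiling of 1GbE at about 118.7 MB/s before any file-sharing protocol overhead, so ~110MB/s in practice is already close to the wire limit, and a 100Mb network tops out near 11.9 MB/s no matter what drives are in the NAS.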

So with nearly 10% of users running networks a decade out of date, it’s not surprising that there’s a significant desire to get something superfast, and to heck with the cost. Snazzy fast drives are certainly the most conspicuous and ‘fun’ upgrade to contemplate. Yet for most likely use cases, SSDs won’t solve the problem on a 100Mb network, and they won’t help throughput at all on a 1GbE network, either. In short, the drives aren’t the bottleneck.
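The bottleneck argument boils down to a minimum: a transfer runs no faster than the slowest stage it passes through. This deliberately simplified Python sketch uses the article’s ~110MB/s figure for 1GbE, an ~11MB/s figure for 100Mb scaled from it, and hypothetical sequential throughputs for an HDD array and an SSD array; it ignores CPU, protocol, and random-I/O effects.

```python
# Network ceilings follow the article's ~110MB/s for 1GbE (scaled down for 100Mb).
NETWORK_CEILING_MB_S = {"100Mb": 11, "1GbE": 110}
# Hypothetical sequential throughputs, for illustration only.
DRIVE_ARRAY_MB_S = {"HDD array": 400, "SSD array": 1_500}


def effective_throughput_mb_s(*stages_mb_s: float) -> float:
    """A transfer can go no faster than the slowest stage in the data chain."""
    return min(stages_mb_s)


if __name__ == "__main__":
    for net, net_speed in NETWORK_CEILING_MB_S.items():
        for drives, drive_speed in DRIVE_ARRAY_MB_S.items():
            speed = effective_throughput_mb_s(drive_speed, net_speed)
            print(f"{drives} over {net}: ~{speed} MB/s")
```

With either network, the HDD and SSD arrays land on exactly the same number, because the link, not the drives, sets the limit.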

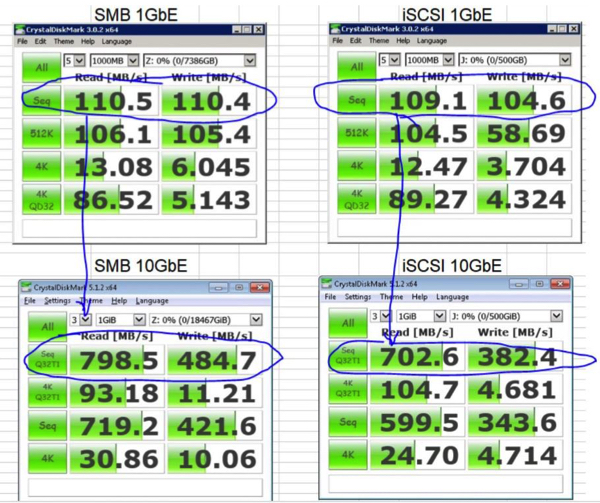

That’s OK, though. Our TS5010 and TS6000 units have built-in 10GbE NICs, and we sell an inexpensive 8-port 10GbE switch. Seriously, read the reviews. No, it doesn’t do link aggregation for speed, but it does do it for redundancy. You don’t need to upgrade your whole network to 10GbE, either. Just build a small 10GbE network for iSCSI traffic or for your biggest backup sources.

On 10GbE, we see sequential throughput (medium to large file copies) jump from 110MB/s to nearly 8x that for reads and 3-4x that for writes. Random 4k access is considerably slower, as expected, but still respectable. These are real-world numbers, not wildly theoretical figures like 2.19 × 10^6 IO/s, which are achieved only with the most expensive drives, built for boutique applications, at the smallest possible operation size. If you are going to spend that kind of money, I recommend buying an actual server, not an SMB-oriented storage appliance.
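The multiples and the IO/s figure translate into MB/s with simple arithmetic, sketched below in Python. The 110MB/s baseline and the 8x and 3-4x multiples come from the article; the 4 KiB (4096-byte) operation size for the 2.19 × 10^6 IO/s figure is an assumption, since the article only says "the lowest possible operation size".

```python
BASELINE_1GBE_MB_S = 110  # sequential throughput over 1GbE, per the article


def scaled_mb_s(multiplier: float) -> float:
    """Throughput expressed as a multiple of the 1GbE baseline."""
    return BASELINE_1GBE_MB_S * multiplier


def iops_to_mb_s(iops: float, block_bytes: int) -> float:
    """Convert an I/O rate at a fixed operation size into MB/s (1 MB = 10**6 bytes)."""
    return iops * block_bytes / 1_000_000


if __name__ == "__main__":
    print(f"10GbE reads  (~8x): ~{scaled_mb_s(8):.0f} MB/s")
    print(f"10GbE writes (3-4x): ~{scaled_mb_s(3):.0f}-{scaled_mb_s(4):.0f} MB/s")
    # Assumes the headline figure refers to 4 KiB (4096-byte) operations.
    print(f"2.19e6 IO/s at 4 KiB: ~{iops_to_mb_s(2.19e6, 4096):,.0f} MB/s")
```

Roughly 880 MB/s for reads and 330-440 MB/s for writes sit comfortably inside a 10GbE link’s raw 1,250 MB/s, whereas 2.19 × 10^6 IO/s at 4 KiB would be about 8,970 MB/s, far more than a single 10GbE link can carry.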
