Digital Storage Outlook 2017 – Spectra Logic

Enterprise SSDs to displace 2.5-inch 15,000rpm HDDs, tape to have impact, LTO-8 to come

This is a Press Release edited by StorageNewsletter.com on July 10, 2017 at 2:39 pm

This report, Digital Storage Outlook 2017, was written in June 2017 by Spectra Logic Corp.

Executive Summary

Predicting the next Oscar or Super Bowl winner is a favorite social pastime and markets exist whereby a person can profit from prescience. Predicting weather is still imperfect, but has come a long way.

Predicting future storage needs and the technologies to satisfy them may not be the hot new game night activity, but it is important to storage and media manufacturers, application developers, and all sizable users of storage capacity. Understanding future costs, technologies, and applications is vital to today’s planning.

The Size of the Digital Universe

• A 2012 IDC report, commissioned by EMC, predicts more than 40ZB of digital data in 2020. While this report took a top-down appraisal of the creation of all digital content, Spectra projects that much of this data will never be stored or will be retained for only a brief time. Data stored for longer retention, furthermore, is frequently compressed. As also noted in the report, the ‘stored’ digital universe is therefore a smaller subset of the entire digital universe as projected by IDC.

The Storage Gap

• While there will be great demand and some constraints in budgets and infrastructure, Spectra’s projections show a small likelihood of a constrained supply of storage to meet the needs of the digital universe through 2026. Expected advances in storage technologies, however, need to occur during this timeframe. Lack of advances in a particular technology, such as magnetic disk, will necessitate greater use of other storage mediums such as flash and tape.

Storage Apportionment and Tiering

• Economic concerns will increasingly push infrequently accessed data onto lower cost media tiers. Just as water seeks its own level, data will seek its proper mix of access time and storage cost.

• Different storage technologies will compete for position, but Spectra envisions four distinct storage tiers that will define data migration workflows and long-term storage.

High-Level Conclusions

• SSD will completely own the portable, smartphone, and tablet sector, and will eventually replace all magnetic disk in laptop and desktop storage.

• Two-and-a-half-inch 15,000rpm magnetic disk will be completely displaced by enterprise flash.

• Three-and-a-half-inch magnetic disk will continue to store a majority of enterprise and cloud data requiring online or nearline access if, and only if, the magnetic disk industry is able to successfully deploy technologies that allow it to continue the downward trend of cost per capacity.

• The flash industry will likely improve its manufacturing costs associated with 3D NAND, thereby allowing it to continue its downward trend of cost per capacity.

• Tape has the easiest commercialization and manufacturing path to higher capacity technologies, but will require continuous investment in drive and media development. The size of the tape market will result in further consolidation, perhaps leaving only one drive and two tape media suppliers.

• Optical disc will remain a niche technology, primarily in the media and entertainment sector, unless great strides can be made in the production cost of the new higher capacity discs.

• No new storage technologies will have significant impact on the storage digital universe through 2026 with the possible exception of solid-state technologies whose characteristics straddle DRAM and flash.

Overview

Spectra has been in storage for nearly 40 years. The firm was an innovator in robotics, automation, and interfaces. From bringing Winchester disks and SCSI tape to minicomputers, to manufacturing the world’s largest tape library and private cloud object storage, the company takes a technology and media agnostic view of storage.

The firm provides robotics for storage media and front-end interfaces to access data stored on conventional disk, SMR disk, helium-enhanced disk, and tape. Its development partnerships and customer requests provide a broad glimpse at future direction. As a manufacturer and integrator, the firm cannot ignore costs and trends in storage performance and pricing.

This is the second edition of an annual overview of trends and predictions in storage media development and availability.

Trends, Tiers, and Contenders

There are some clear storage tiers being established in both the enterprise network and public cloud systems. While today it makes sense to demark these tiers by technology, future cost trends will blur the lines and allow technology contenders to intrude on the others’ turf.

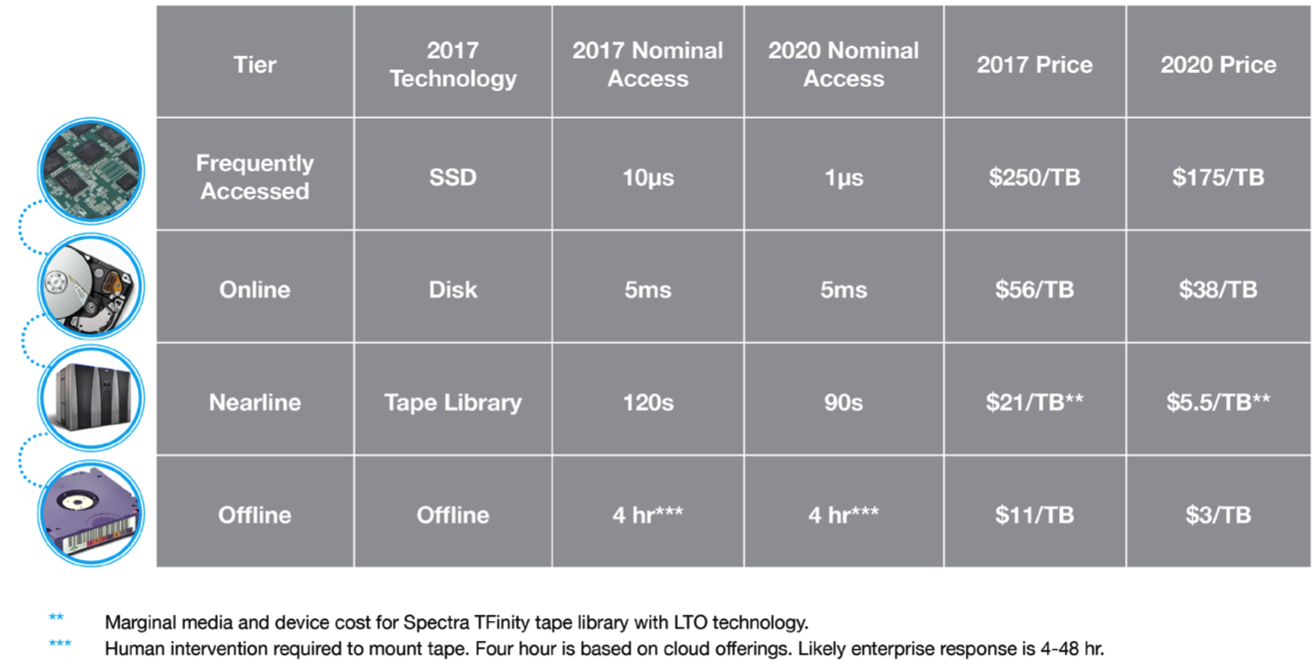

Figure 1: Media tier use and technologies

Tier 0: Frequently Accessed

Without mechanical components, enterprise solid-state technologies are able to exhibit high IO/s with low latency. This makes them ideal candidates for database storage, a primary application of this tier.

SSD devices have displaced, and will continue to displace, the 2.5-inch 15,000rpm HDDs until the volume of these drives reaches a point where it will no longer be viable to incur the R&D costs for future products. Considering the network latency intrinsic to a cloud deployment, this tier is only viable when it is on-premises to an application running in the same environment, or when it is provided by the cloud provider to an application running in that cloud environment.

Tier 1: Online

Almost all data in cloud storage or on a corporate network is stored on magnetic disk and will continue to be as long as HDD manufacturers can maintain a sizable cost advantage over flash.

Protecting against the intrusion of flash into this market is directly correlated to the cost spread they are able to maintain.

Tier 2: Nearline

Transactional processing is never done from this tier. In order to work efficiently, bulk data must be moved from this tier for manipulation and to this tier for storage.

In this tier, longevity, upfront costs, and ongoing maintenance costs are of greater concern than other tiers. Tape has historically been positioned in this space, and should continue to meet these requirements as long as adequate R&D investment is made in both drive and tape media development. Recently released optical disc technology shows promise in meeting many requirements aside from that of cost as it lacks the roadmap to achieve increased throughput and capacity that is seen in other technologies. Additionally, optical touts longer media life than tape with longer term backward read compatibility. As will be discussed, this technology can only displace tape if the cost of the media (discs) is substantially reduced.

Tier 3: Offline

This tier is infrequently accessed. Its primary application is DR, but the archive of a completed project, dated sales and financial data, or compliance-dictated retention of regulated data, are appropriate for this lowest cost tier. Requirements are essentially the same as automated archive, except it is even more sensitive to cost.

As such this tier has primarily been satisfied with tape technology. In a typical workflow, tapes are vaulted or shipped to an offsite location on a pre-determined schedule. For DR, the storage location should be physically distant from the primary site. This site would typically not need to have a library or even drives in order to read the tapes.

Small to medium-size enterprises have found it increasingly popular to electronically transfer to a cloud provider. The cloud provider may be using tape technology to provide this service to their customers, such that it allows the customer to utilize the technology in a more efficient manner. To the degree that bandwidth costs to the cloud drop, the number of customers willing to move data to the cloud for DR purposes will increase.

Cloud Offerings

Cloud services can be thought of as another deployment of the same top three tiers and, given the dominance of Amazon Web Services in that space, it’s worth comparing the associated AWS offerings. Note that Amazon’s methods are proprietary and this is not a description of technologies used, rather a suggestion of technologies and contenders to provide a certain level of service.

• Frequently Accessed – EBS (Elastic Block Store) is clearly the high-performance tier. This space has been served by 15,000rpm magnetic disk, but Spectra predicts it will increasingly move to enterprise flash.

• Online – S3 (Simple Storage Service) compares to online enterprise disk. Consumer flash and other solid-state devices will increasingly encroach but with caveats discussed below, disk should retain price superiority and maintain dominance.

• Nearline – Amazon Glacier cloud storage offers the lowest per-capacity cost and advertises a three- to five-hour restoration window. Tape or optical media in an automated library are possible technologies that cloud providers could use to satisfy a service level agreement for this tier.

Storage Technologies

Serving a market sector of more than $35 billion, the storage device industry has exhibited constant innovation and improvement. This section discusses current technologies and technical advances occurring in the areas of SSD, magnetic disk, magnetic tape, optical disc and future technologies, as well as Spectra’s view of what portion of the stored digital universe each will serve.

Solid-State

The fastest growing technology in the storage market is NAND flash. It has capabilities of durability and speed that find favor in both the consumer and enterprise segments. In the consumer space it has become the de facto technology for digital cameras, smartphones, tablets, and more recently laptops and desktop computers.

In the enterprise storage segment, it is now firmly seated as the storage technology of choice for database applications that benefit from its low-latency characteristics. Yet some of the established markets for this technology are in decline or have reached maturity. Declining markets include digital cameras, MP3 music players, USB sticks, and tablet computers. The smartphone market continues to grow year over year, but at a slower rate than previously. For the flash business to continue its growth trajectory, it is important that new devices entering the market require greater amounts of storage. For example, an introductory-level smartphone may come with 16GB of storage. It is essential that over time this capacity be expanded to 32GB and beyond, as these entry-level phones drive the biggest volume. Furthermore, the technology will need to expand into market areas once dominated by disk. Currently, consumer-grade SSDs consisting of flash storage are selling for approximately $0.25/GB, while consumer-grade magnetic disk drives are selling for approximately $0.025/GB, a tenfold difference. The displacement of disk technology by flash in the consumer laptop segment will drive even more growth of the flash market through 2020.

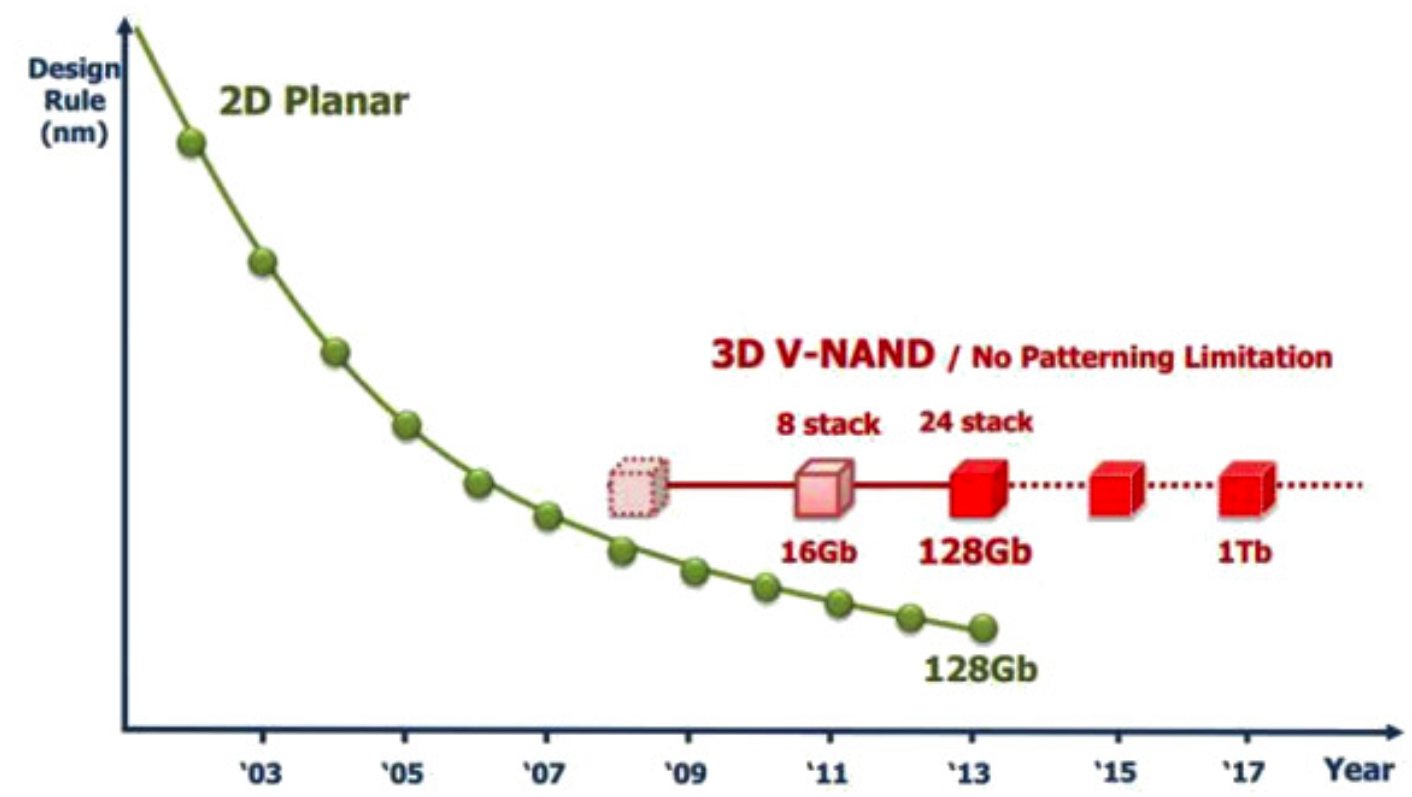

Figure 2: Flash Capacity and Die Density

(Source: Samsung’s presentation, Ushering in 3D Memory Era with V-NAND, August 2013)

As a solid-state technology, flash is restricted by the same limitations of lithography present in the manufacturing of processors and DRAM. As the flash cells become smaller, they experience more leakage across cells and also contain fewer electrons per cell. To compensate for these effects at higher die densities, more cells have to be reserved for error correction purposes. At some point it provides no value to increase the cell count as all of the additional cells are consumed by error correction. This point for NAND flash appears to be around 15nm. At 16nm, a 128Gb die has been created and is shipping from several vendors.

The struggle to increase capacity beyond this on a fixed area is analogous to the issue that many cities face with limited real estate, and the answer is the same – build upward. 3D NAND can be thought of as adding more floors (stacks) to a die, such that the capacity can be increased without decreasing the line widths. In the first versions of this product, stacks have been increased in order to regain lost margin. It remains unclear to what extent this technology will continue the NAND flash roadmap and whether it will provide further decreases in cost. There is currently a 512GB device on the market that is fabricated with 64 layers.

If yields similar to 2D planar technology can be attained with the higher capacity dies, this would allow a single semiconductor fabrication facility to produce a greater amount of storage capacity on existing equipment. This is important, as the cost for building a new fab is typically billions of U.S. dollars.

Given that 3D NAND will also reach maturation at some point, the question becomes whether there are other possible solid-state technologies that will pick up where it leaves off. Recently Intel and Micron announced a new technology they call 3D XPoint (3D Crosspoint). Though information regarding the actual working mechanisms is sketchy, what is known is that a memory cell is made of material whose resistance level changes because of a bulk change in its atomic or molecular structure. Intel has indicated that this technology will have 10x lower latency, 3x the write endurance, 4x more writes per second, and as little as 30% the power consumption of competing NAND flash.

The type of memory is known as Resistive RAM, also ReRAM or RRAM. In an effort to compete with 3D XPoint, Samsung has announced the Z-SSD family of products. It is based on standard 3D NAND technology with special circuitry to provide similar higher-performance latencies as that of 3D XPoint.

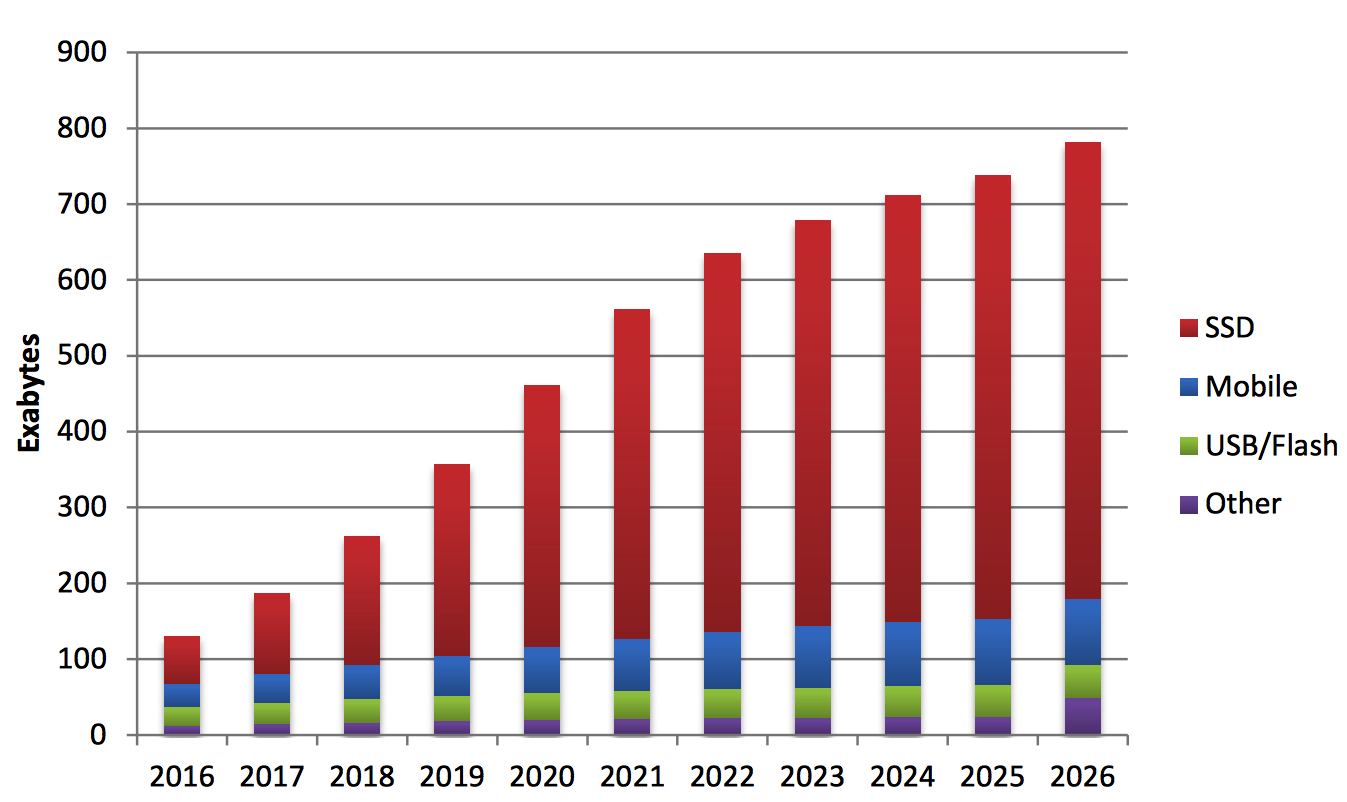

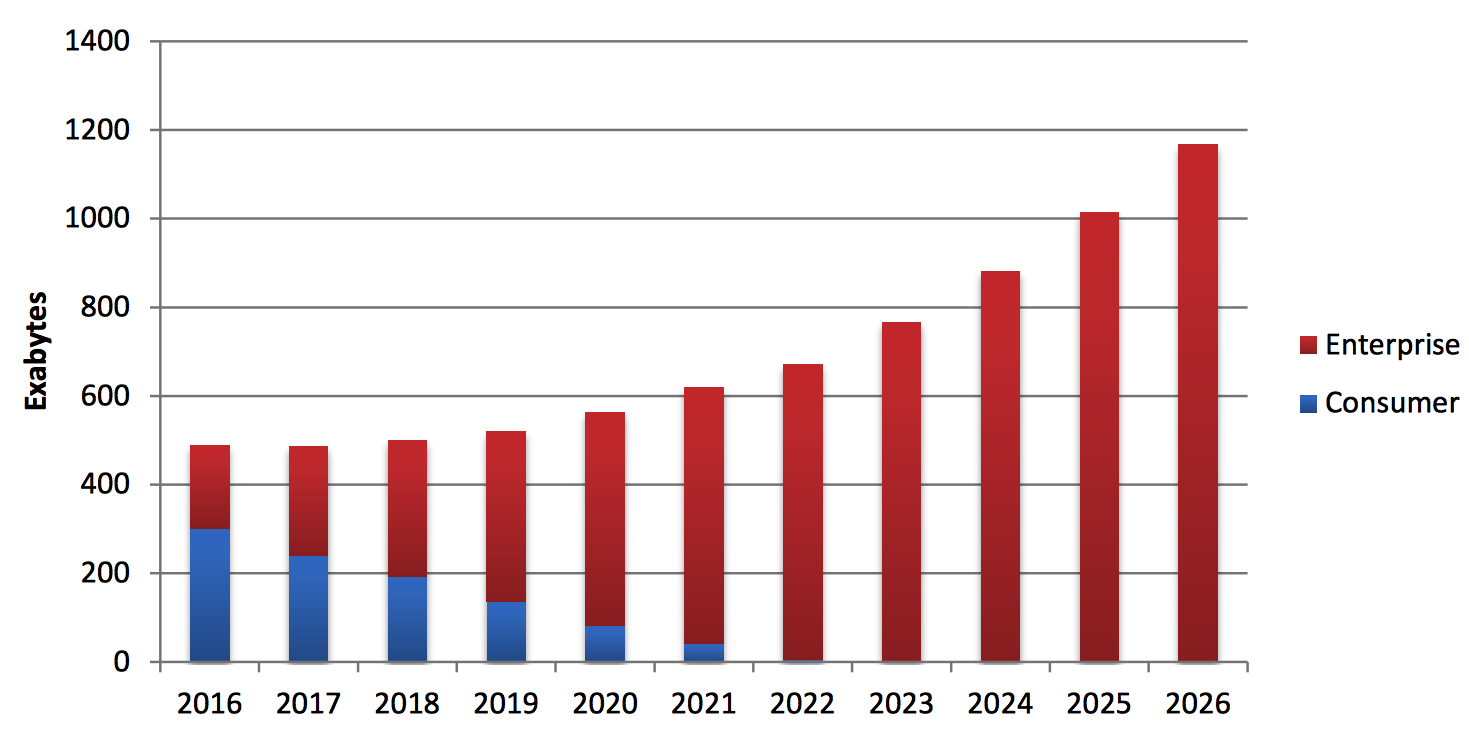

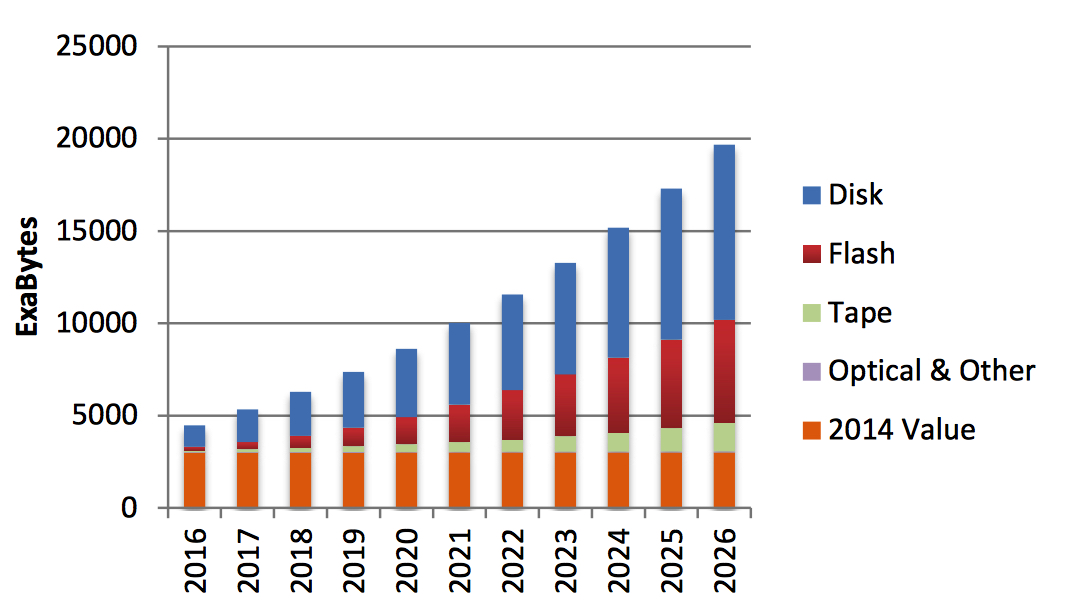

Figure 3: Stored Digital Universe Solid-State Technology

Solid-state storage will have the highest growth rate of all storage mediums in terms of exabytes shipped. Spectra has high confidence that fabrication of 3D NAND will improve and costs will decrease. Estimates are based on a continuing high growth rate through 2020, followed by steady growth at a lower percentage from 2021-2026. There has been much discussion in the industry regarding flash manufacturing capacity and possible supply limitations. Some of these limitations were felt in the fourth quarter of 2016, whereby demand outstripped supply. Substantial investment was made in 2016 by all major flash vendors in an effort to greatly expand their manufacturing capacities. These additional investments, along with technical advances allowing for more capacity per piece, are the basis for the increasing demand this technology will be seeing through 2020.

Magnetic Disk

After years of consolidation, the disk drive industry now has two [three – Editor] major providers (Seagate, HGST) and one smaller provider (Toshiba) [and Western Digital – Editor].

The industry is being challenged by flash on three fronts:

1. The remaining 2.5-inch 15,000rpm enterprise drives used for database storage are continuing to be displaced by enterprise flash solutions. These flash solutions are packaged either as SSDs that are drop-in replacements for disk drives or as PCIe-connected drives. The bus-connected storage further eliminates the storage network stack and its latency. Future solid-state technologies, such as 3D XPoint, will improve even further upon this by being hosted directly on the memory bus. As they get closer to the CPU, these technologies blur the distinction between storage and system memory.

2. The second challenge to this industry is at the opposite end of the market: client-side drives that are placed into remote devices such as laptops and desktops. Much of this displacement has already occurred with the advent of flash-based smartphones and tablets replacing laptops and desktops. Every sale of a tablet, which is equipped with a flash drive instead of a disk drive, is one lost HDD sale. A second wave of this disruption is underway in which laptops and, to a lesser degree, desktops are now being sold with solid-state rather than magnetic disk storage. Only a few years ago, an Apple MacBook had a standard configuration that included an HDD with an expensive upgrade option to an SSD. Today it is the opposite and in some cases the HDD is not even an option.

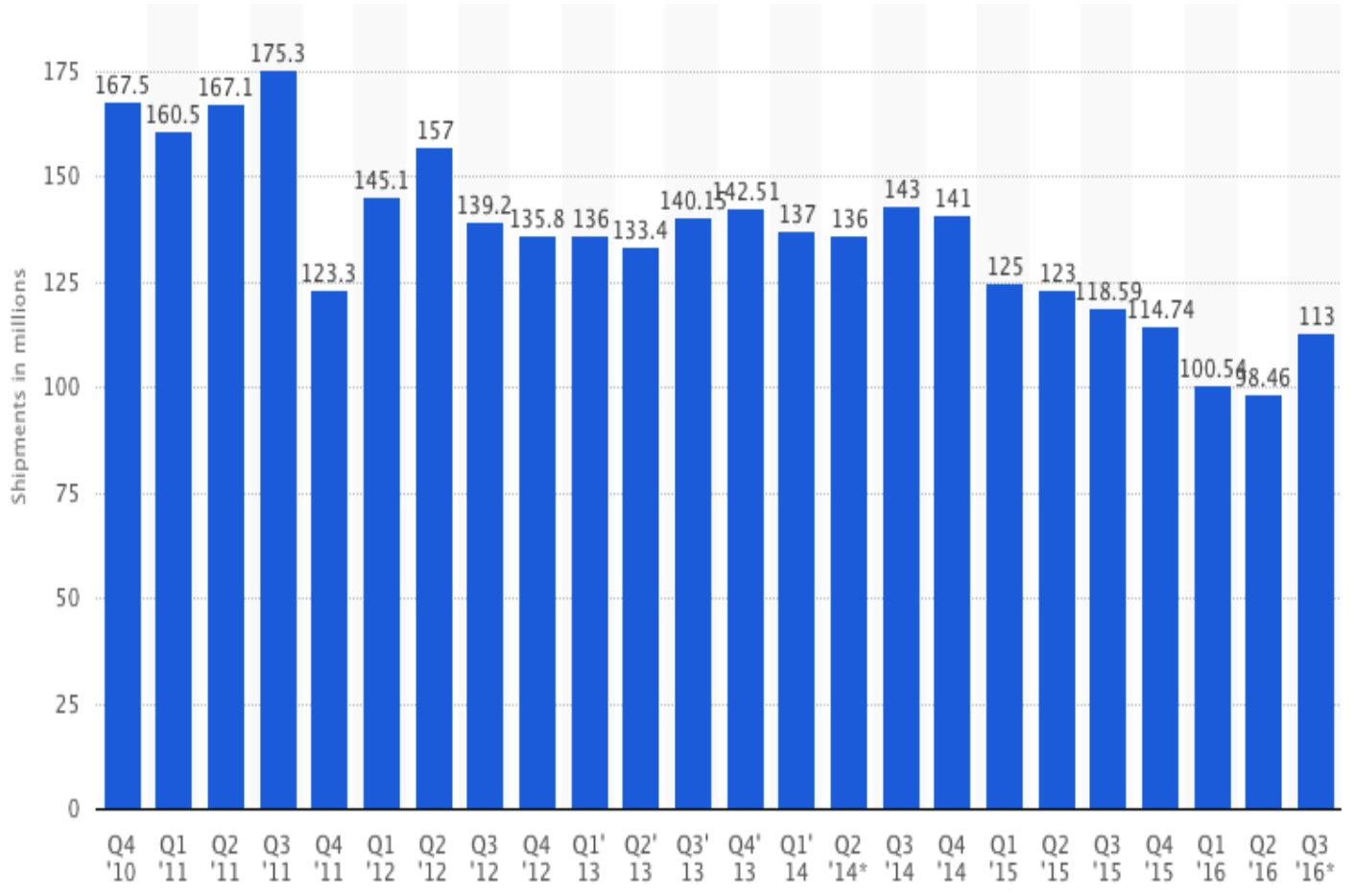

3. A longer term effect the industry faces is in the removal, not displacement, of disk drives in home gaming devices and digital video recorders. This disruption is not due to flash, but rather the availability of higher bandwidth Internet service to the home. This higher bandwidth will open up the possibilities of gaming from cloud servers, not from local gaming devices, and streaming from media on demand rather than from a DVR. Another area where disk drives are being displaced is laptops and desktops where many HDDs have been underutilized by up to 75%. As storage moves from local machines to the cloud, they will move from being underutilized to fully utilized, which will result in fewer drive volumes and overall capacities shipped. The following chart shows an overall decline of about 75 million HDDs year to year from 2015 to 2016.

Figure 4: Worldwide Disk Drive Shipments

(Source: Statista)

By 2020, the disk industry will be servicing a singular market, predominantly for large IT shops and cloud providers. In order to maintain that market, their products must maintain current reliability, while at the same time continuing to decrease their per-capacity cost. Protection of market share requires a multiple cost differential over consumer solid-state disk technologies.

Over the last few years, disk drive manufacturers have introduced three technologies to increase capacity on the standard platter size. First, helium-filled drives encounter less friction than air-filled drives, so they generate less heat and internal turbulence and run more efficiently, resulting in a higher capacity device. Second, SMR (shingled magnetic recording) drives write overlapping tracks like shingles on a roof, such that the surface area between traditional disk tracks is eliminated, resulting in higher capacity. Lastly, one vendor is delivering variable capacity drives whereby each drive of a designated capacity arrives at a slightly different capacity point. For example, 6TB drives purchased might have capacities ranging from 6TB to 6.4TB. The manufacturer formats a disk to its potential instead of downsizing it to a pre-specified capacity.

Two of these technologies, SMR and variable capacity drives, create challenges for standard disk environments such as those driven by RAID controllers. All three of these technologies can be considered one-time capacity improvers and hence do not have longer-term roadmaps leading to greater capacity devices. It is Spectra’s understanding that the maximum capacity achieved with helium will be 14TB and that the combination of helium and SMR will reach approximately 16TB in a 3.5-inch form factor disk. In order to continue to decrease the per-capacity cost, a fundamentally new technology will have to be introduced. The technology with the most potential is Heat Assisted Magnetic Recording (HAMR).

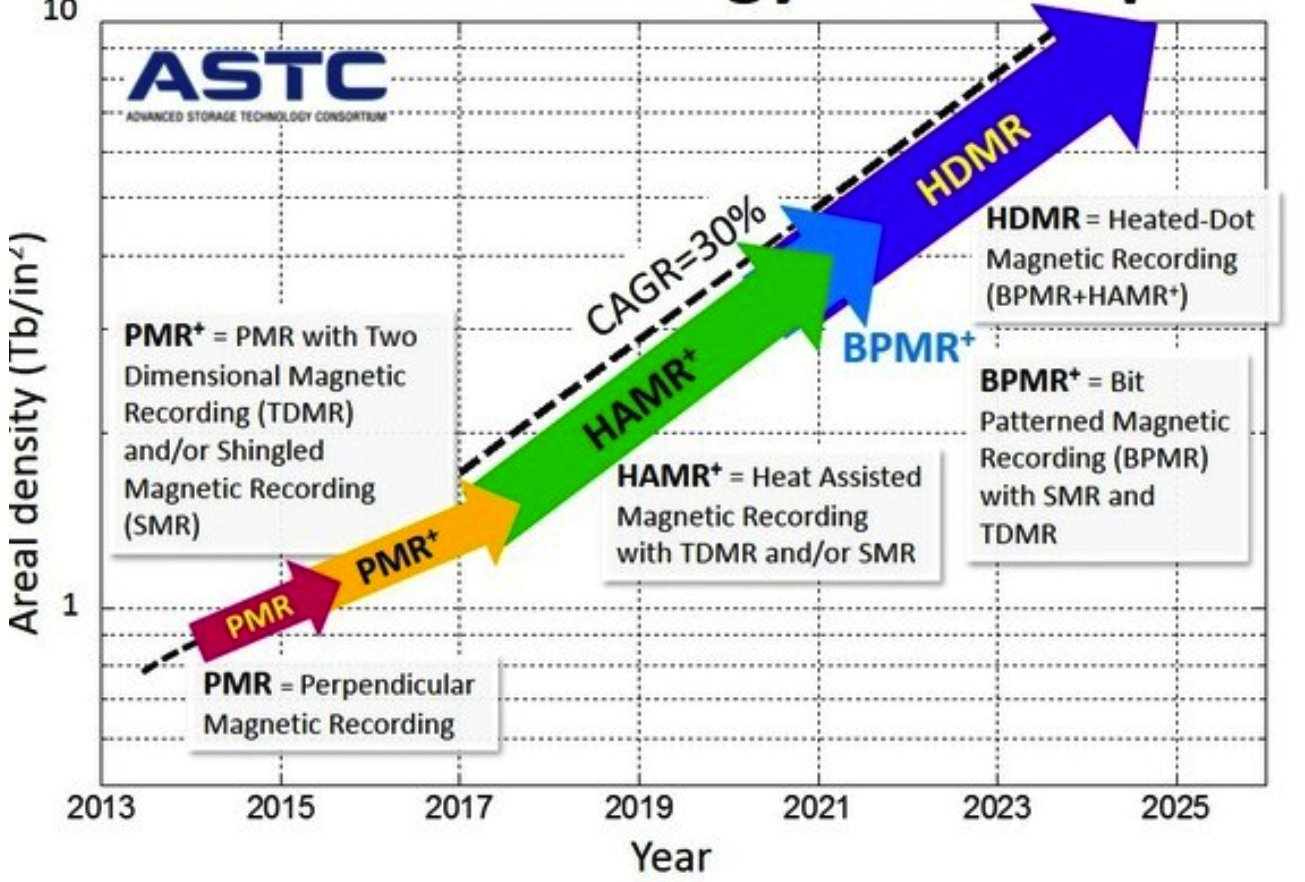

Figure 5: ASTC Technology Roadmap

(Source: ASTC)

HAMR increases the areal density of a disk platter by heating the target area with a laser. This heated area is more receptive to a change in magnetic properties (reduced coercivity), allowing a lower and more focused charge to ‘flip’ a smaller bit.

For many years the disk industry has been investing heavily in HAMR technology, realizing its importance for the product roadmap.

The launch date for drives reaching market has been postponed several times and now stands at late-2018 for a device in the 16TB capacity range. Various technology demonstrations have been performed over the years, suggesting that the hurdles remaining revolve around the capabilities required to manufacture devices at high volume, low cost, and with high reliability. HAMR demonstrations have achieved 1.6Tbpsi with 2,600,000 flux changes per inch (2,600 kFCI) and 400,000 tracks per inch (TPI). When HAMR drives become available, they will further challenge RAID controllers as the rebuild times for 20TB, 30TB, and perhaps 50TB devices will become prohibitively long.
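To give a feel for why rebuild times grow with capacity, the following is a minimal sketch that estimates the time to rewrite an entire drive at a constant rate. The 200 MB/s sustained rate is an illustrative assumption, not a figure from the report, and real rebuilds that compete with production I/O would typically take longer.

```python
def rebuild_hours(capacity_tb: float, rate_mb_per_s: float) -> float:
    """Hours needed to rewrite a whole drive at a constant sustained rate."""
    capacity_bytes = capacity_tb * 1e12      # decimal terabytes, as drives are sold
    rate_bytes_per_s = rate_mb_per_s * 1e6   # decimal megabytes per second
    return capacity_bytes / rate_bytes_per_s / 3600


# Assumption for illustration only: the rebuild sustains 200 MB/s end to end.
ASSUMED_RATE_MB_S = 200.0

for capacity_tb in (20, 30, 50):
    hours = rebuild_hours(capacity_tb, ASSUMED_RATE_MB_S)
    print(f"{capacity_tb} TB drive: about {hours:.1f} hours")
```

Under that assumption the rebuild time scales linearly with capacity, so a 50TB drive takes two and a half times as long as a 20TB drive.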

An orthogonal technology being introduced by disk drive manufacturers is network-attached disk drives. The capacity of these drives is the same as that of drives currently shipping. The physical and logical interfaces have been changed, but the drive itself has not changed. From a network interconnect perspective these drives present two 1GbE interfaces. They have the same form factor and connectors that are used by SAS disk drives, allowing the same hot-pluggable connectors and carriers to be utilized. This physical implementation enables large organizations to centralize on a single network interconnect (Ethernet).

Furthermore, the JBOD chassis designed for this technology are much simpler than traditional storage controller designs as they require no motherboard, processors, DRAM, or HBAs. The typical network-attached JBOD device contains two 10GbE switches for redundancy that externally present two 10GbE interfaces to interconnect among chassis. Internally, each switch fans out to one of the Ethernet ports connected to each disk drive such that a failure of a drive interface or even an entire 10GbE network does not prevent communication to all drives through the other switch and network.
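The dual-switch wiring described above can be modeled in a few lines. This is a sketch under stated assumptions: the function name, the 12-drive chassis size, and the convention that port 0 of every drive goes to switch 0 and port 1 to switch 1 are all hypothetical, chosen only to illustrate the redundancy argument.

```python
def reachable_drives(num_drives, failed_switches=frozenset(), failed_ports=frozenset()):
    """Return the set of drive indexes that still have a working network path.

    Assumed wiring (hypothetical): each drive has two Ethernet ports, and
    port N of every drive is cabled to switch N. `failed_ports` holds
    (drive, port) tuples for individual drive interfaces that have failed.
    """
    reachable = set()
    for drive in range(num_drives):
        for port in (0, 1):
            switch = port  # port 0 -> switch 0, port 1 -> switch 1
            if switch not in failed_switches and (drive, port) not in failed_ports:
                reachable.add(drive)
                break
    return reachable


NUM_DRIVES = 12  # hypothetical chassis size

# Losing an entire switch (or its network) leaves every drive reachable.
print(len(reachable_drives(NUM_DRIVES, failed_switches={0})))

# A drive is lost only when both of its paths are down.
print(sorted(reachable_drives(NUM_DRIVES, failed_switches={0}, failed_ports={(3, 1)})))
```

The second call shows the only failure combination that isolates a drive in this model: one switch down plus that drive's interface to the surviving switch.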

In terms of the logical interface, the two disk market leaders have taken different approaches. Seagate has provided these drives to market with a logical interface, known as Kinetic, which provides a key/value-store-like interface that hides the complexity of the drive from the application. This interface, along with the associated drives, has seen limited adoption. Western Digital on the other hand has demonstrated the operation of an Object Storage Device component of a clustered object storage system on the front-end processor of their network-attached disk drives. Their approach is to provide an environment whereby a large customer could download and run custom code on this processor and talk to the disk drive through a software API provided by the drive manufacturer. Given that major cloud providers have voiced security concerns associated with having third-party software in their systems, this approach might find favor as it enables the cloud provider to customize the disk drive to meet their needs. The following statement from ceph.com provides an indication of where this technology is headed.

Today, an Ethernet-attached HDD drive from WDLabs is making this architecture a reality. WDLabs has assembled over 500 drives from the early production line and assembled them into a 4PB (3.6 PiB) Ceph cluster running Jewel and the prototype BlueStore storage backend. WDLabs has been working on validating the need to apply an open source compute environment within the storage device and is now beginning to understand the use cases as thought leaders such as Red Hat work with the early units.
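The two capacity figures in the statement above use different prefixes: PB is decimal (10^15 bytes) and PiB is binary (2^50 bytes). A short sketch of the conversion, with a helper name chosen here purely for illustration:

```python
def pb_to_pib(petabytes: float) -> float:
    """Convert decimal petabytes (10**15 bytes) to binary pebibytes (2**50 bytes)."""
    return petabytes * 10**15 / 2**50


print(f"4 PB is about {pb_to_pib(4):.1f} PiB")
```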

Figure 6: Stored Digital Universe Magnetic Disk

As seen above, Spectra is predicting a very aggressive decrease in the shipped aggregate capacity of consumer magnetic disk as flash takes over that space. Capacity increases in enterprise storage, whether in the online or nearline tier, will not maintain a pace that will allow the disk industry to realize revenue gains.

Some reservations are warranted as to the market delivery of HAMR technology and whether it will be able to restart the historical cost trends seen in disk for decades. If the industry is unable to cost effectively and reliably deliver on this technology, the intrusion of flash into its space will be greater, especially in the online tier. This could also result in supply concerns on the flash side, such as those experienced in 4Q16.

Tape

The digital tape business for secondary storage has seen year-to-year declines as IT backup has moved to disk-based technology. At the same time, however, the need for tape in the long-term archive market continues to grow. Tape technology is well suited for this space as it provides the benefits of low environmental footprint on both floor space and power; a high level of data integrity over a long period of time; and a much lower cost per gigabyte of storage than all other storage mediums.

A fundamental shift is underway whereby the market for small tape systems is being displaced by cloud-based storage solutions. At the same time, large cloud providers are adopting tape – either as the medium of choice for backing up their data farms or for providing an archive tier of storage to their customers. Cloud providers and large scale-out systems provide high levels of data availability through replication and erasure coding. These methods have proven successful for storing and returning the data ‘as is.’ However, if during the lifecycle of that data, it becomes corrupted, then these methods simply return the data in its corrupted form.

For the tape segment to see growth, there must be a widespread realization that ‘genetic diversity,’ defined as multiple copies of digital content stored in diverse locations on different types of media, is required to protect customers’ digital assets.

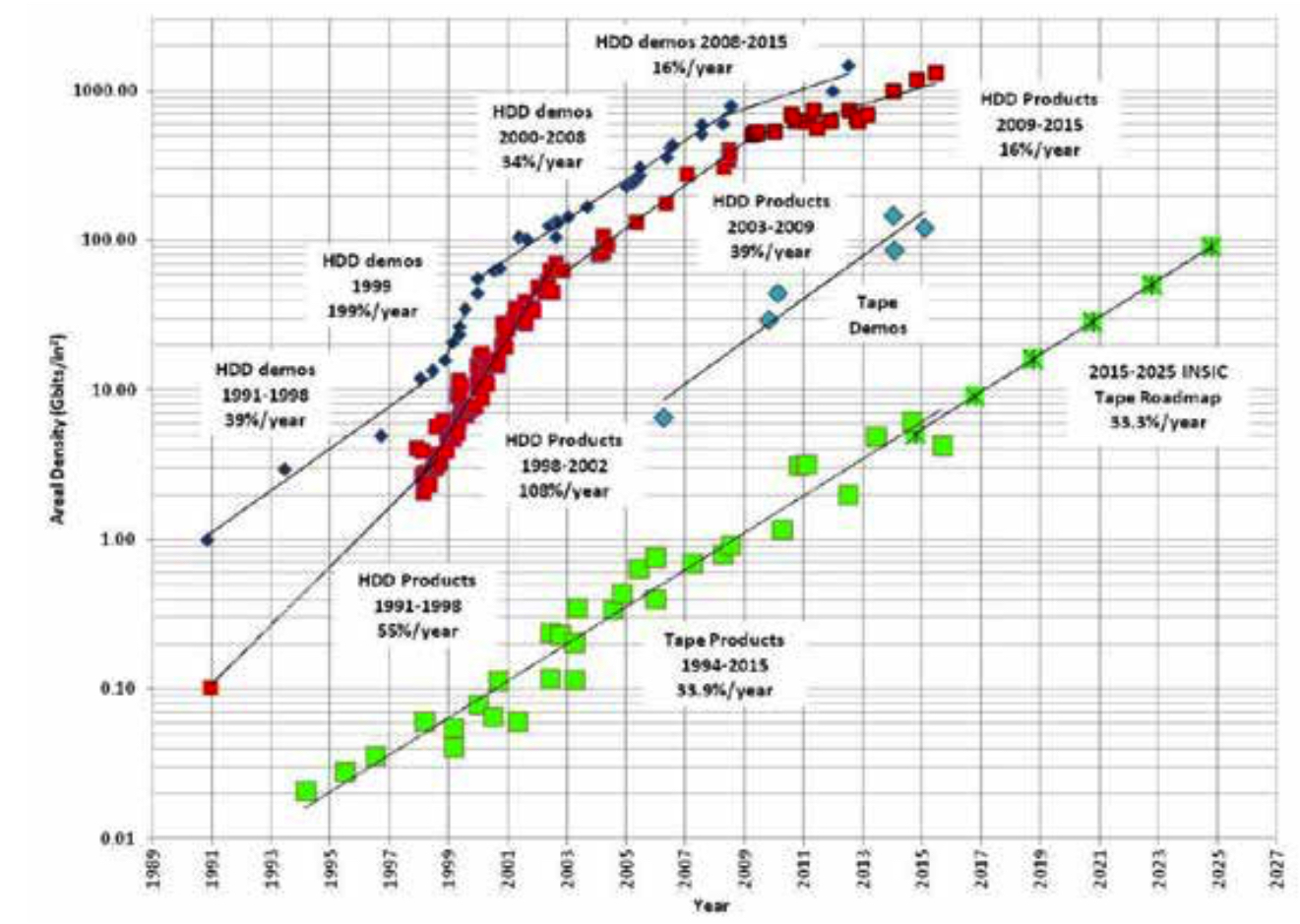

Figure 7: Areal Density Chart (source INSIC)

LTO technology has been and will continue to be the primary tape technology. The LTO consortium assures interoperability for manufacturers of both LTO tape drives and media. In 2015, the seventh generation of this technology was introduced, providing 6TB native (uncompressed) capacity per cartridge. It is expected that the eighth generation of the LTO product, LTO-8, will ship in the October 2017 timeframe, offering a capacity point of 12TB. IBM will soon be shipping the IBM TS1155 Tape Technology with a native capacity of 15TB. These tape drives are the first to use TMR technology (tunneling magnetoresistance), which should allow the capacity to double four more times. Additionally, these drives will be the first generation to be offered with a native high-speed 10GbE RoCE Ethernet interface.

Customers with high duty-cycle requirements can consider using enterprise drives from IBM, such as IBM’s TS1150 tape technology, which provides 10TB of capacity (native), as well as subsequent variations and generations. It is Spectra’s opinion that IBM will be the sole manufacturer of enterprise tape drives and media in the years to come.

Of all the storage mediums available, tape technology has the greatest potential for capacity improvement. This is singularly due to it having substantially more surface area on which to deposit data than any other medium. Think of almost 3,000 feet of tape spooled out compared to seven 3.5-inch disk platters or one optical DVD. This surface area advantage translates into potential capacities of upwards of 220TB of storage per cartridge – recently demonstrated by IBM and Fujifilm. This demonstration did not involve any technology breakthroughs, but rather the movement of tape closer to the operating point of magnetic disk in the Areal Density Chart.
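The surface area comparison can be made concrete with a rough calculation. This is a sketch under simplifying assumptions that are not in the report: half-inch-wide tape recorded on one side, platters treated as full 3.5-inch circles recorded on both sides, and a 120mm DVD recorded on one side, with hub holes and unusable edges ignored (which overstates the disk and disc areas).

```python
import math

# Tape: about 3,000 feet (from the text) of half-inch-wide media (assumption).
TAPE_LENGTH_IN = 3000 * 12
TAPE_WIDTH_IN = 0.5
tape_area = TAPE_LENGTH_IN * TAPE_WIDTH_IN

# Disk: seven platters (from the text), two recording surfaces each,
# modeled as full circles of 3.5-inch diameter (assumption).
PLATTERS = 7
platter_surface = math.pi * (3.5 / 2) ** 2
disk_area = PLATTERS * 2 * platter_surface

# Optical: one 120mm DVD, single recording side (assumption).
dvd_diameter_in = 120 / 25.4
dvd_area = math.pi * (dvd_diameter_in / 2) ** 2

print(f"Tape:  {tape_area:,.0f} sq in")
print(f"Disk:  {disk_area:,.1f} sq in  (tape is {tape_area / disk_area:,.0f}x larger)")
print(f"DVD:   {dvd_area:,.1f} sq in  (tape is {tape_area / dvd_area:,.0f}x larger)")
```

Even with assumptions that favor disk, the tape cartridge offers on the order of a hundred times more recording surface, which is why it can operate at a much lower areal density and still reach high capacities.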

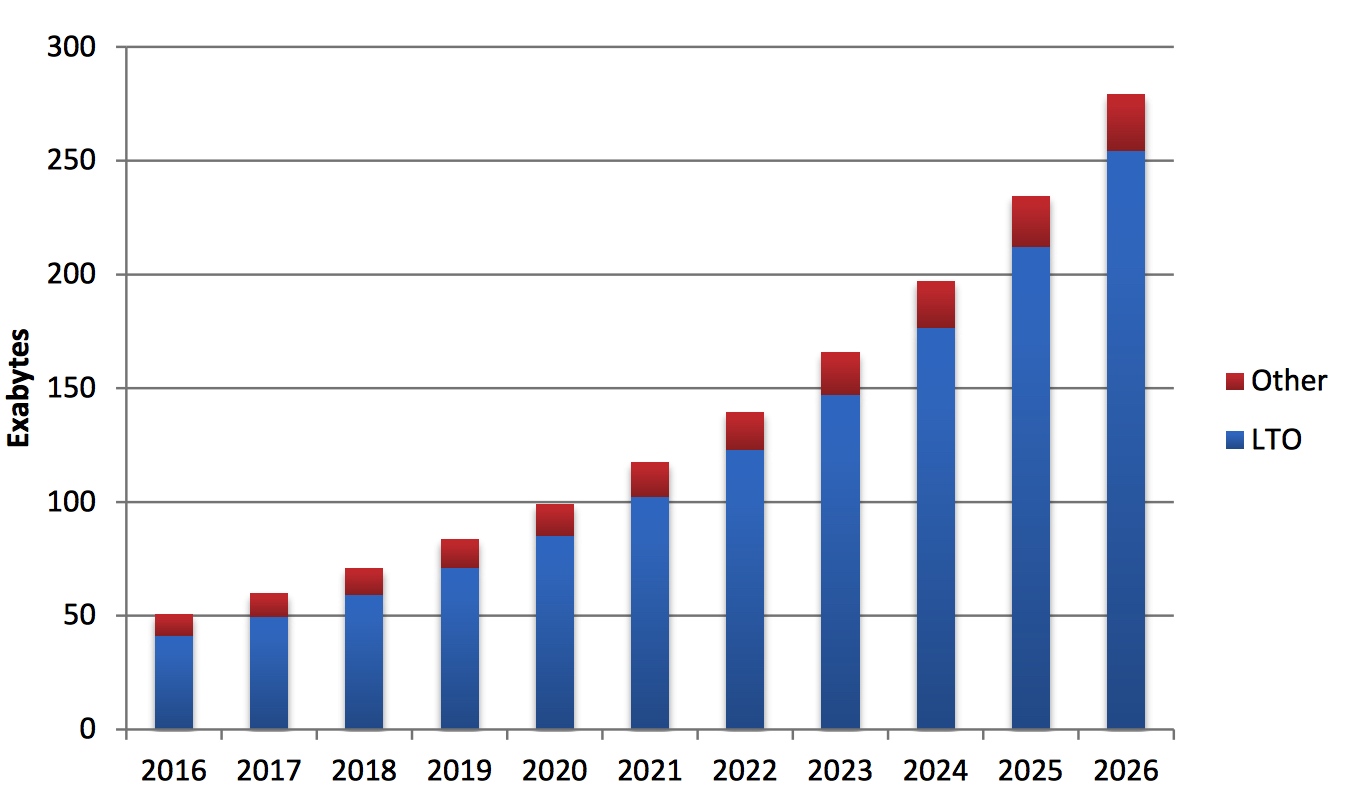

Figure 8: Digital Universe Tape

Tape technology’s potential for capacity improvement promises substantial reductions in the per-capacity cost of tape storage. Unfortunately, the market has seen year-to-year revenue declines for many years and the industry as a whole suffers from the belief that the demand for tape is mostly inelastic. This belief has affected the technology aggressiveness of the product roadmaps and associated media pricing. To remain relevant, the industry needs to expand the price difference between SMR magnetic disk and tape through increased capacity and reduced media costs. Even without a substantial increase in this ratio, tape holds a compelling value proposition over disk in the area of environmental requirements and data longevity. Spectra believes that a probable long-term scenario is one in which flash technology and tape will coexist, and become the prevailing storage technologies for online and archive needs, respectively.

As small and medium enterprises move their DR strategies to the cloud, the volume of small tape systems will decrease. That data, however, will be archived on large tape systems installed by cloud providers. This trend will lead to more aggressive adoption of new tape media as the cloud providers have incentive to lower the cost per gigabyte.

Cloud providers will mostly adopt LTO, and given their purchasing power in the overall tape market, this will lead to a greater percentage of LTO versus enterprise tape technology. Enterprise tape, with two suppliers, appears to be an over-served market and Spectra predicts that, at some point, the market will converge to one.

Optical

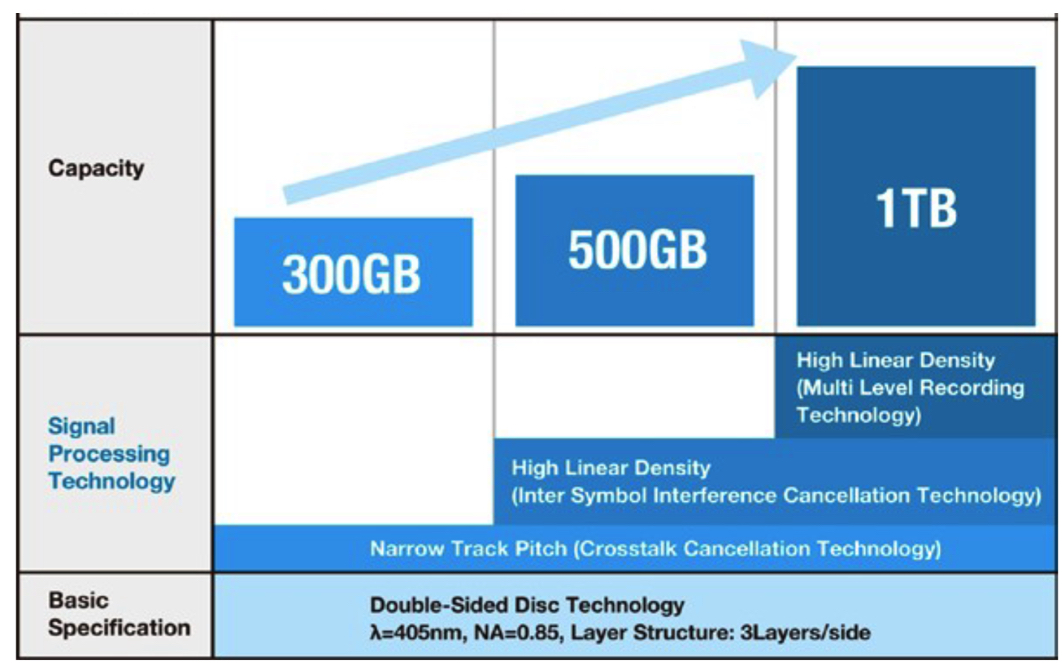

In early 2014, Sony Corporation and Panasonic Corporation announced a new optical disc storage medium designed for long-term digital storage. Trademarked ‘Archival Disc’, it will initially be introduced at a 300GB capacity point and will be write-once. An agreement covers the raw unwritten disc such that vendors previously manufacturing DVD will have an opportunity to produce Archival Disc media. Unlike LTO tape, there is no interchange guarantee between the two drives. In other words, a disc written with one vendor’s drive may or may not be readable with the other’s. Even if one vendor’s drive is able to read another’s, there may be a penalty of lower performance.

Figure 9: Archival Disc Roadmap

(Source: Sony and Panasonic)

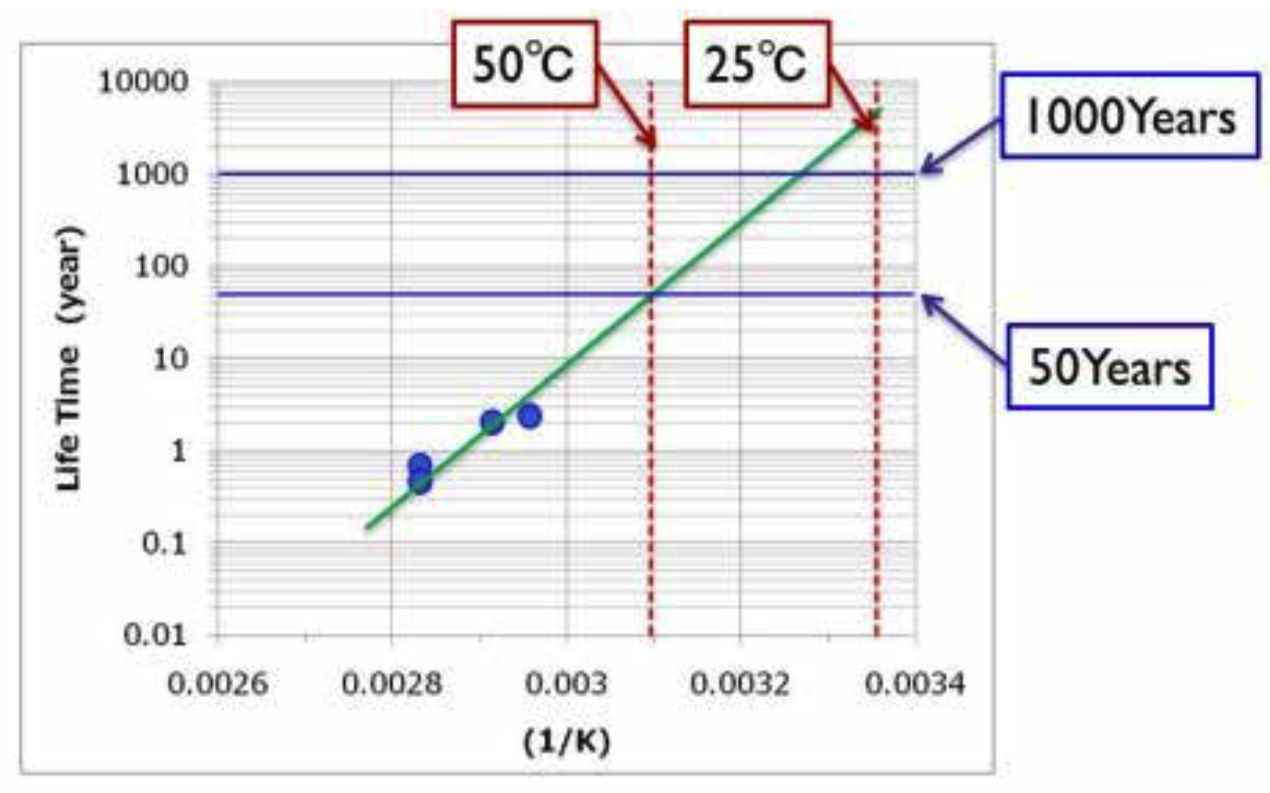

Sony’s solution packages 11 discs into a cartridge that is slightly larger in both width and depth than an LTO tape cartridge. The value proposition of this technology is its longevity and its backward read compatibility. As shown in the following chart, even stored at the extreme temperatures of 50°C (122° Fahrenheit), the disc has a lifetime of 50 years or more. Error rates are still largely unknown.

Sony and Panasonic are also guaranteeing backward read compatibility of all future drives. Unlike disk and tape, the media will not require migration to newer formats and technologies as they become available. This matches the archive mentality of writing once and storing the media for extended recoverability. Best practices in magnetic disk systems, conversely, where the failure rate of the devices increases considerably over time, indicate that data should be migrated every three to five years.

Figure 10: Long-Term Storage Reliability in Archival Disc

(Source: Sony)

For customers that have definitive long-term (essentially forever) archival requirements, the Archival Disc will find favor. The size of this particular market segment is small compared with the overall market for archival storage. The ability of this technology to achieve greater market penetration is primarily a function of the pricing of the media. Today, the cheapest storage medium is LTO-6 tape at roughly $25 for 2.5TB of storage, or $.01/GB. In order to be price equivalent, a 300GB Archival Disc would need to be priced at $3.00 (300GB x $.01/GB). If the media reaches the market at $36 per disc, about the price of today’s 100GB disc, the resulting 12 times differential between this and tape technology will lock Archival Disc technology into the market niche described above. The longevity advantages of this technology certainly warrant some price premium over tape. Yet for mass adoption of this technology, Spectra imagines its end-user pricing will need to be between one and a half and two times that of tape, or a disc price of between $4.50 and $6. This would imply a cartridge cost (11 discs) of between roughly $50 and $66. As of the time of this writing, no prices can be found on the 3.3TB disc cartridge; however, the previous generation at 1.5TB can be obtained for around $150 or around $100/TB. This places Archival Disc media, in terms of pricing, between flash and disk, and about 10 times more expensive than tape.
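The parity arithmetic can be reproduced from the inputs quoted in the text: $25 for a 2.5TB LTO-6 cartridge, a 300GB disc, 11 discs per cartridge, and a premium of 1.5 to 2 times tape. The sketch below is illustrative only; the variable and function names are chosen here, and any small differences from figures quoted in prose would come from rounding the tape price per gigabyte.

```python
LTO6_PRICE_USD = 25.0       # from the text: roughly $25 per cartridge
LTO6_CAPACITY_GB = 2500.0   # from the text: 2.5TB native
DISC_CAPACITY_GB = 300.0    # from the text: first-generation Archival Disc
DISCS_PER_CARTRIDGE = 11    # from the text: Sony cartridge
CANDIDATE_DISC_PRICE = 36.0 # from the text: price of today's 100GB disc

tape_usd_per_gb = LTO6_PRICE_USD / LTO6_CAPACITY_GB


def disc_price(premium_over_tape: float) -> float:
    """Disc price that costs `premium_over_tape` times tape per gigabyte."""
    return DISC_CAPACITY_GB * tape_usd_per_gb * premium_over_tape


parity = disc_price(1.0)
print(f"Tape cost:            ${tape_usd_per_gb:.3f}/GB")
print(f"Parity disc price:    ${parity:.2f}")
print(f"$36 disc vs parity:   {CANDIDATE_DISC_PRICE / parity:.0f}x")

for premium in (1.5, 2.0):
    per_disc = disc_price(premium)
    per_cartridge = per_disc * DISCS_PER_CARTRIDGE
    print(f"{premium}x tape: ${per_disc:.2f} per disc, ${per_cartridge:.2f} per cartridge")
```

Changing the tape price or the premium range shows how sensitive the target disc price is to those two inputs.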

In order to achieve a competitively low price, several things will need to occur. First, the process for producing discs will need to achieve stability with a fairly high yield. Next, existing DVD lines will need to be converted over to the production of archive disc technology. The creation of new lines, and hence large capital investment, will not need to occur, as the technology was inherently designed to be manufactured with existing DVD processes. Decreasing consumer demand for DVD discs has freed up a substantial amount of manufacturing capacity. Finally, disc technology manufacturers will need to believe in the elasticity of the market, such that they are willing to lower pricing in order to achieve larger volumes.

Stored Digital Universe Optical Disc

Given the number of manufacturers and the variety of products (such as pre-recorded DVDs, Blu-ray, etc.), it is difficult to project the stored digital universe for optical disc. To remain consistent with the intent of this paper, Spectra conservatively estimates the storage for this technology at 5EB per year and projects that, with the introduction of the Archival Disc, this will grow at a rate of 5EB per year for the next 10 years. This value could change in either direction based on the previous discussion regarding disc pricing.

Future Storage Technologies

Being a $30 billion a year market, the storage industry has always attracted, and will continue to attract, venture investment in new technologies. Many of these efforts have promised an order of magnitude of improvement in one or more of the basic attributes of storage, those being cost (per capacity), latency performance, bandwidth performance, and longevity. To be clear, over the last 20 years, a small portion of the overall venture capital investment has been dedicated to the development of low-level storage devices, with the majority dedicated to the development of storage systems that utilize existing storage devices as part of their solution. These developments align more closely with the venture capital market in that they are primarily software based and require relatively little capital investment to reach production. Additionally, they are lower risk and have faster time-to-market as they do not involve scientific breakthroughs associated with materials, light, or quantum physics phenomena.

Much of the basic research for advanced development of breakthrough storage devices is university or government funded, or is funded by the venture market purely as a proof of concept effort. For example, a recent announcement was made regarding storing data in five dimensions on a piece of glass or a quartz crystal capable of holding 360TB of data, literally forever. Advanced development efforts continue in attempting to store data in holograms, a technology that has, for a long time, been longer on promises than results. Developments at the quantum level include storing data by controlling the ‘spin’ of electrons. Though these and other efforts have the potential to revolutionize storage, it is difficult to believe that any are mature enough at this point in time to impact the digital universe through at least 2026. Historically, many storage technologies have shown promise in the prototype phase, but have been unable to make the leap to production products that match the cost, ruggedness, performance, and most importantly, reliability of the current technologies in the marketplace. Given the advent of cloud providers, the avenue to market for some of these technologies might become easier (see next section).

Cloud Provider Storage Requirements

Over the period of this forecast, cloud providers will consume, from both a volume and revenue perspective, a larger and larger portion of the storage required to support the digital universe. For this reason, storage providers should consider whether or not their products are optimized for these environments. This brings into question almost all previous assumptions of a storage product category. For example, is the 3.5-inch form factor for disk drives the optimum for this customer base? Is the same level of CRC required? Can the device be more tolerant of temperature variation? Can power consumption and the associated heat generated be decreased? Does the logical interface need to be modified in order to allow the provider greater control of where data is physically placed?

For flash, numerous assumptions should be questioned. For example, what is the cloud workload, how does it affect the write life of the device, and could this lead to greater capacities being exposed? Similar to disk, questions should be asked regarding the amount and nature of the CRC and the logical interface, as well as the best form factor. Lower power nodes, along with the need for refresh, should be better understood and tailored to meet cloud providers’ needs.

Regarding the use of tape technology for the cloud, several questions arise, such as what the best interface into the tape system is. Given that tape management software takes many years to write and perfect, a higher-level interface, such as an object level REST interface, might be more appropriate for providers that are unwilling to make that software investment. When cloud providers have made that investment, the physical interface to the tape system needs to match their other networking equipment (i.e. Ethernet). Given that tape has tighter temperature and humidity specifications than other storage technologies, solutions that minimize the impact of this requirement on the cloud provider should be considered.

Cloud providers have a unique opportunity to adopt new storage technologies, based on the sheer size of their storage needs and small number of localities, ahead of volume commercialization of these technologies. For example, consider an optical technology whereby the lasers are costly, bulky and prone to misalignment, and the system is sensitive to vibration. If the technology provides enough benefit to a cloud provider, it might be able to install the lasers on a large vibration-isolating table with personnel assigned to keep some systems operational and in alignment. In such a scenario, an automated device might move the optical media in and out of the system. In a similar scenario where the media has to be written in this manner but can be read with a much smaller, less costly device, the media may be moved, upon completion of the writing process, to an automated robotics system that could serve any future reads.

Size of Digital Universe

A frequently quoted IDC report, commissioned by EMC in 2012, predicted more than 40ZB of digital data in 2020. This caused many in the industry to wonder whether there would be sufficient media to contain it.

The IoT, new devices, new technologies, population growth, and the spread of the digital revolution to a growing middle class all support the idea of explosive, exponential data growth. Yes, 40ZB seems aggressive, but not impossible.

The 19th century economist Frederic Bastiat discussed ‘The Seen and the Unseen.’ Spectra suggests considering ‘The Stored and the Unstored.’ The IDC report took a top-down appraisal of the creation of all digital content. Yet much of this data is never stored or is retained for only a brief time.

For example, the creation of a proposal or slide show will usually generate dozens of revisions, some checked in to versioning software and some scattered on local disk. Including auto-saved drafts, a copy on the email server, and copies on client machines, the working data might easily amount to 100 times the size of the final version that will eventually be archived. A larger project will create even more easily discarded data. Photos or video clips not chosen can be discarded or relegated to the least expensive storage. In addition, data stored for longer retention is frequently compressed, further reducing the amount of storage required.

In short, though there might indeed be upwards of 40ZB created, when a supply and demand mismatch is encountered, there are many opportunities to synchronize:

• A substantial part of the data created will be by nature transitory, requiring little or very short retention.

• Storage costs will influence retention and naturally sort valuable data from expendable.

• Long-term storage can be driven to lower-cost tiers. Cost will be a big factor in determining what can be held online for immediate access.

• Flash, magnetic disk, and magnetic tape storage are rewritable, and most storage applications take advantage of this. As an example, when using tape for backups, new backups can be recorded over old versions up to 250 times, essentially recycling the storage media.

• The ‘long-tail’ model will continue to favor current storage – as larger capacity devices are brought online, the cost of storing last year’s data becomes less significant. For most companies, all their data from 10 years ago would fit on a single tape today.

Considering all these factors, an extrapolation of current growth trends, multiplied by capacity gains, projects the total amount of stored data in 2026 to be closer to 20ZB, building from a projected base of 3ZB in 2014.
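The two endpoints of this projection imply an average annual growth rate, which is not stated in the text. The following minimal Python sketch derives it under the assumption of smooth compound growth between 2014 and 2026; the function name is illustrative, and the year-by-year values are an interpolation rather than figures from Spectra’s analysis.

```python
# Endpoints quoted in the text.
BASE_YEAR, BASE_ZB = 2014, 3.0
END_YEAR, END_ZB = 2026, 20.0


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that carries start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


years = END_YEAR - BASE_YEAR
cagr = implied_cagr(BASE_ZB, END_ZB, years)
print(f"Implied growth rate: {cagr:.1%} per year over {years} years")

# Interpolated trajectory, assuming the same rate applies every year.
trajectory = {BASE_YEAR + n: BASE_ZB * (1.0 + cagr) ** n for n in range(years + 1)}
for year, zettabytes in trajectory.items():
    print(f"{year}: {zettabytes:5.1f}ZB")
```

Under these assumptions the implied rate works out to roughly 17 percent per year, which is a convenient single number for comparing this projection against other forecasts.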

Figure 11: Stored Digital Universe

Spectra’s analysis also differs from the larger projections by omitting certain forms of digital storage such as pre-mastered DVD and Blu-ray discs.

Conclusions

There are many interesting storage ideas being pursued in laboratory settings at different levels of commercialization: storing data in DNA, 3D RAM, five-dimensional optical storage, and hologram storage, plus many that are not yet known. Technology always allows for a singular breakthrough, unimaginable by today’s understanding, and this is not to discount the possibility that one could transpire.

But corporations, government entities, cloud providers, research institutions, and curators need to plan for data preservation today. It is not that the ‘Hail Mary’ pass never works, but that coaches who build game plans around it have difficulty retaining their jobs.

Long-term budget cycles need to evaluate data growth against the projected costs. Spectra’s projections do not call for shortages or rising media costs. But there are credible risks against expectations of precipitously declining storage costs. Storage is neither free nor negligible and proper designs going forward need to plan for growth and apportion it across different media types, both for safety and economy.
