How to Protect Data at Rest with Amazon EC2 Instance Store Encryption

To ensure sensitive data saved on disks is not readable by any user or application without a valid key

This is a Press Release edited by StorageNewsletter.com on February 20, 2017 at 2:53 pm

This article was published on January 30, 2017 on the AWS Security Blog by Assaf Namer, enterprise solutions architect, Amazon Web Services.

Encrypting data at rest is vital for regulatory compliance to ensure that sensitive data saved on disks is not readable by any user or application without a valid key.

Some compliance regulations such as PCI DSS and HIPAA require that data at rest be encrypted throughout the data lifecycle. To this end, AWS provides data-at-rest options and key management to support the encryption process. For example, you can encrypt Amazon EBS volumes and configure Amazon S3 buckets for server-side encryption (SSE) using AES-256 encryption. Additionally, Amazon RDS supports Transparent Data Encryption (TDE).

Instance storage provides temporary block-level storage for Amazon EC2 instances. This storage is located on disks attached physically to a host computer. Instance storage is ideal for temporary storage of information that frequently changes, such as buffers, caches, and scratch data. By default, files stored on these disks are not encrypted.

In this blog post, I show a method for encrypting data on Linux EC2 instance stores by using Linux built-in libraries. This method encrypts files transparently, which protects confidential data. As a result, applications that process the data are unaware of the disk-level encryption.

First, though, I will provide some background information required for this solution.

Disk and file system encryption

You can use two methods to encrypt files on instance stores.

The first method is disk encryption, in which the entire disk or a block within the disk is encrypted by using one or more encryption keys. Disk encryption operates below the file-system level, is operating-system agnostic, and hides directory and file information such as name and size. The Linux dm-crypt mechanism described in the next section is an example of this method.

The second method is file-system-level encryption. Files and directories are encrypted, but not the entire disk or partition. File-system-level encryption operates on top of the file system and is portable across OSs. Encrypting File System (EFS), for example, is a Microsoft extension to the Windows NT OS’s New Technology File System (NTFS) that encrypts individual files and directories.

The Linux dm-crypt Infrastructure

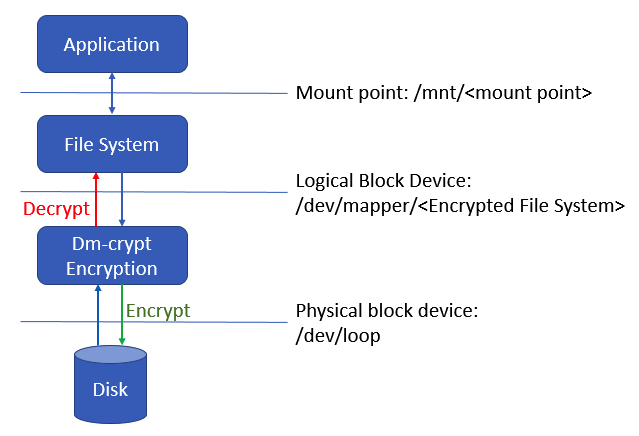

Dm-crypt is a Linux kernel-level encryption mechanism that allows users to mount an encrypted file system. Mounting a file system is the process in which a file system is attached to a directory (mount point), making it available to the OS. After mounting, all files in the file system are available to applications without any additional interaction; however, these files are encrypted when stored on disk.

Device mapper is infrastructure in the Linux 2.6 and 3.x kernels that provides a generic way to create virtual layers of block devices. The device mapper crypt target provides transparent encryption of block devices using the kernel crypto API. The solution in this post uses dm-crypt in conjunction with a disk-backed file system mapped to a logical volume by the Logical Volume Manager (LVM). LVM provides logical volume management for the Linux kernel.

The following diagram depicts the relationship between an application, file system, and dm-crypt. Dm-crypt sits between the physical disk and the file system, and data written from the OS to the disk is encrypted. The application is unaware of such disk-level encryption. Applications use a specific mount point in order to store and retrieve files, and these files are encrypted when stored to disk. If the disk is lost or stolen, the data on the disk is useless.

Overview of the solution

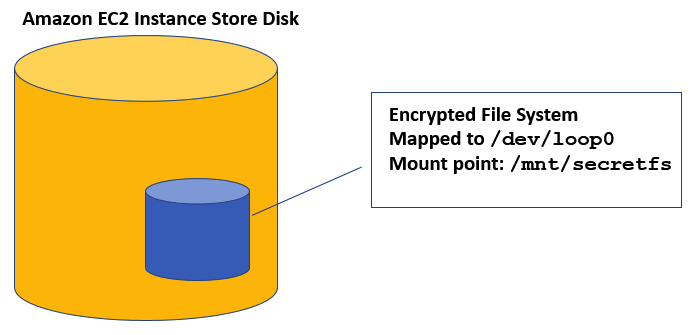

In this post, I create a new file system called secretfs. This file system is encrypted using dm-crypt. This example uses LVM and Linux Unified Key Setup (LUKS) to encrypt a file system. The encrypted file system sits on the EC2 instance store disk. Note that the instance store's existing file system is not itself encrypted; only the newly created file system is.

The following diagram shows how the newly encrypted file system resides in the EC2 internal store disk. Applications that need to save sensitive data temporarily will use the secretfs mount point directory (/mnt/secretfs) to store temporary or scratch files.

Requirements

This solution has three requirements. First, you need to configure the related items on boot using EC2 launch configuration because the encrypted file system is created at boot time. An administrator should have full control over every step and should be able to grant and revoke the encrypted file system creation or access to keys. Second, you must enable logging for every encryption or decryption request by using AWS CloudTrail. In particular, logging is critical when the keys are created and when an EC2 instance requests password decryption to unlock an encrypted file system. Lastly, you should integrate the solution with other AWS services, as described in the next section.

AWS services used in this solution

I use the following AWS services in this solution:

• AWS Key Management Service (KMS) – AWS KMS is a managed service that enables easy creation and control of encryption keys used to encrypt data. KMS uses envelope encryption, in which data is encrypted using a data key that is then encrypted using a master key. Master keys can also be used directly to encrypt and decrypt up to 4 KB of data. In this solution, I use the KMS encrypt/decrypt APIs to encrypt the encrypted file system’s password. See more information about envelope encryption.

• AWS CloudTrail – CloudTrail records AWS API calls for your account. KMS and CloudTrail are fully integrated, which means CloudTrail logs each request to and from KMS for future auditing. This post’s solution enables CloudTrail for monitoring and audit.

• Amazon S3 – S3 is an AWS storage service. I use S3 in this post to save the encrypted file system password.

• AWS Identity and Access Management (IAM) – AWS IAM enables you to control access securely to AWS services. In this post, I configure and attach a policy to EC2 instances that allows access to the S3 bucket to read the encrypted password file and to KMS to decrypt the file system password.
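Once CloudTrail is enabled, the KMS calls it records can also be queried from the command line. The following is a minimal sketch rather than part of the original solution: it assumes the AWS CLI is configured with permission to look up CloudTrail events, the function name and region are illustrative, and by default it only prints the command (set DRY_RUN=0 to actually run it).

```shell
#!/bin/bash
# Print the command instead of running it unless DRY_RUN=0
# (running it for real needs AWS credentials).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# List recent Decrypt calls recorded by CloudTrail in one region.
# Argument: region name (us-east-1 below is only an example).
list_kms_decrypt_events() {
  run aws cloudtrail lookup-events --region "$1" \
      --lookup-attributes AttributeKey=EventName,AttributeValue=Decrypt \
      --max-results 20
}

list_kms_decrypt_events "us-east-1"
```

Each returned event includes the caller identity, which is how an unexpected decryption request for the file system password would show up in an audit.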

Architectural overview

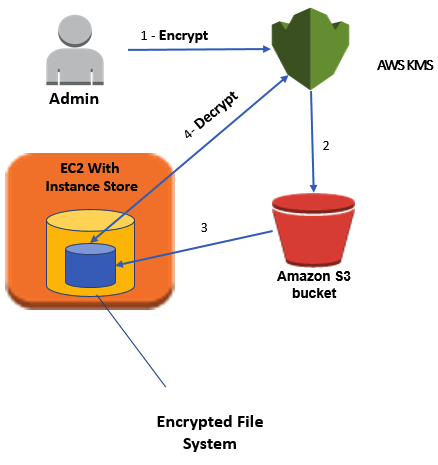

The following high-level architectural diagram illustrates the solution proposed to enable EC2 instance store encryption. A detailed implementation plan follows in the next section.

In this architectural diagram:

- 1 The administrator encrypts a secret password by using KMS. The encrypted password is stored in a file.

- 2 The administrator puts the file containing the encrypted password in an S3 bucket.

- 3 At instance boot time, the instance copies the encrypted file to an internal disk.

- 4 The EC2 instance then decrypts the file using KMS and retrieves the plaintext password. The password is used to configure the Linux encrypted file system with LUKS. All data written to the encrypted file system is encrypted by using an AES-256 encryption algorithm when stored on disk.

Implementing the solution

Create an S3 bucket

First, you create a bucket for storing the file that holds the encrypted password. This password (key) will be used to encrypt the file system. Each EC2 instance upon boot copies the file, reads the encrypted password, decrypts the password, and retrieves the plaintext password, which is used to encrypt the file system on the instance store disk.

In this step, you create the S3 bucket that stores the encrypted password file, and apply the necessary permissions. If you are using an Amazon VPC endpoint for Amazon S3, you also need to add permissions to the bucket to allow access from the endpoint. (For a detailed example, see Example Bucket Policies for VPC Endpoints for Amazon S3.)

To create a new bucket:

- 1 Sign in to the S3 console (https://console.aws.amazon.com/s3/home) and choose Create Bucket.

- 2 In the Bucket Name box, type your bucket name and then choose Create.

- 3 You should see the details about your new bucket in the right pane.

Configure IAM roles and permission for the S3 bucket

When an EC2 instance boots, it must read the encrypted password file from S3 and then decrypt the password using KMS. In this section, I configure an IAM policy that allows the EC2 instance to assume a role with the right access permissions to the S3 bucket. The following policy grants the correct access permissions, in which your-bucket-name is the S3 bucket that stores the encrypted password file.

To create and configure the IAM policy:

- 1 Sign in to the AWS Management Console and navigate to the IAM console. In the navigation pane, choose Policies, choose Create Policy, select Create Your Own Policy, name and describe the policy, and paste the following policy. Choose Create Policy. For more details, see Creating Customer Managed Policies.

JSON

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "Stmt1478729875000",

"Effect": "Allow",

"Action": [

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::your-bucket-name/LuksInternalStorageKey"

]

}

]

}

The preceding policy grants read access to the bucket where the encrypted password is stored. This policy is used by the EC2 instance, which requires you to configure an IAM role. You will configure KMS permissions later in this post.

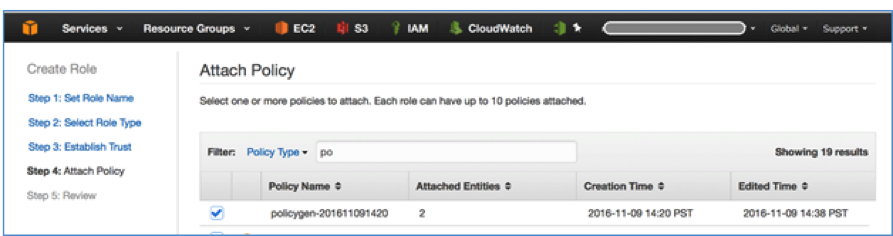

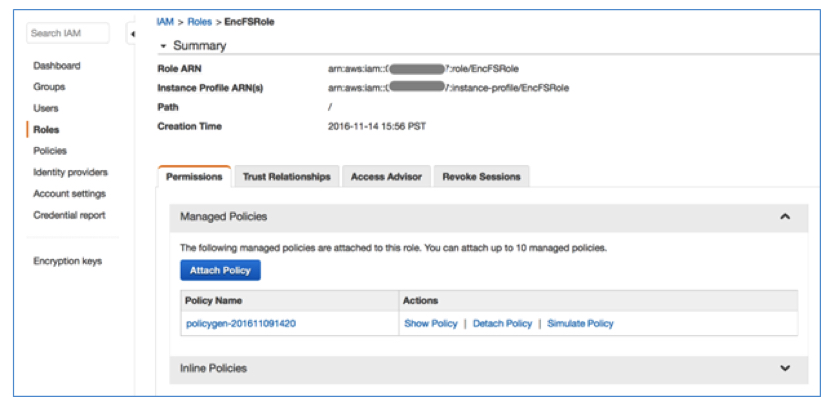

- 2 In the IAM console, choose Roles, and then choose Create New Role.

- 3 In Step 1: Role Name, type your role name, and choose Next Step.

- 4 In Step 2: Select Role Type, choose Amazon EC2 and choose Next Step.

- 5 In Step 3: Established Trust, choose Next Step.

- 6 In Step 4: Attach Policy, choose the policy you created in Step 1, as shown in the following screenshot.

- 7 In Step 5: Review, review the configuration and complete the steps. The newly created IAM role is now ready. You will use it when launching new EC2 instances, which will have the permission to access the encrypted password file in the S3 bucket.

You now should have a new IAM role listed on the Roles page. Choose Roles to list all roles in your account and then select the role you just created as shown in the following screenshot.
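The console steps above can also be approximated with the AWS CLI. The following is a sketch under stated assumptions, not the procedure from the original post: the function names, `your-role-name`, and `your-bucket-name` are placeholders, and it attaches the S3 permission as an inline role policy (`put-role-policy`) instead of the customer managed policy created in step 1. Unlike the console, the CLI does not create an instance profile automatically, so the sketch adds one. By default the commands are only printed; set DRY_RUN=0 to run them with suitable IAM permissions.

```shell
#!/bin/bash
# Permission policy: read a single object from the bucket.
# Arguments: bucket name, object key.
s3_read_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::$1/$2"]
    }
  ]
}
EOF
}

# Trust policy: lets the EC2 service assume the role.
ec2_trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Arguments: role name, bucket name, object key (all placeholders here).
create_instance_role() {
  run aws iam create-role --role-name "$1" \
      --assume-role-policy-document "$(ec2_trust_policy)"
  run aws iam put-role-policy --role-name "$1" \
      --policy-name "$1-s3-read" \
      --policy-document "$(s3_read_policy "$2" "$3")"
  run aws iam create-instance-profile --instance-profile-name "$1"
  run aws iam add-role-to-instance-profile \
      --instance-profile-name "$1" --role-name "$1"
}

create_instance_role "your-role-name" "your-bucket-name" "LuksInternalStorageKey"
```

Scoping the resource to the single password file, rather than the whole bucket, keeps the instance role as narrow as the boot script needs.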

Encrypt a secret password with KMS and store it in the S3 bucket

Next, you use KMS to encrypt a secret password. To encrypt text by using KMS, you must use the AWS CLI. The AWS CLI is installed by default on EC2 Amazon Linux instances, and you can install it on Linux, Windows, or Mac computers.

To encrypt a secret password with KMS and store it in the S3 bucket:

• From the AWS CLI, type the following command to encrypt a secret password by using KMS (replace the region name with your region). You must have the right permissions in order to create keys and put objects in S3 (for more details, see Using IAM Policies with AWS KMS). In this example, I have used AWS CLI on the Linux OS to encrypt and generate the encrypted password file.

aws --region us-east-1 kms encrypt --key-id 'alias/EncFSForEC2InternalStorageKey' --plaintext "ThisIs-a-SecretPassword" --query CiphertextBlob --output text | base64 --decode > LuksInternalStorageKey

aws s3 cp LuksInternalStorageKey s3://

The preceding commands encrypt the password and write the result to a file called LuksInternalStorageKey. Because the AWS CLI returns the ciphertext as Base64 text, base64 is used to decode it back to binary before it is saved. The key is referenced by its alias (key name), EncFSForEC2InternalStorageKey, which makes it easy to identify different keys; the encrypt command does not create the key or the alias, so both must already exist in KMS. The file is then copied to the S3 bucket I created earlier in this post.
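The Base64 decoding step matters because the decrypt call later reads the file as raw bytes through `fileb://`. The following local sketch illustrates only that round trip: random bytes stand in for a real KMS ciphertext blob, no AWS call is made, and the file names are illustrative.

```shell
#!/bin/bash
# Local illustration only: random bytes stand in for a KMS ciphertext blob.
workdir="$(mktemp -d)"
head -c 64 /dev/urandom > "$workdir/blob.bin"

# The AWS CLI prints binary fields such as CiphertextBlob as Base64 text.
base64 < "$workdir/blob.bin" > "$workdir/blob.b64"

# Decoding restores the original bytes, which is the form fileb:// reads.
base64 --decode < "$workdir/blob.b64" > "$workdir/roundtrip.bin"

if cmp -s "$workdir/blob.bin" "$workdir/roundtrip.bin"; then
  roundtrip_status="identical"
else
  roundtrip_status="different"
fi
echo "round trip: $roundtrip_status"
rm -rf "$workdir"
```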

Configure permissions to allow the role to access the KMS key

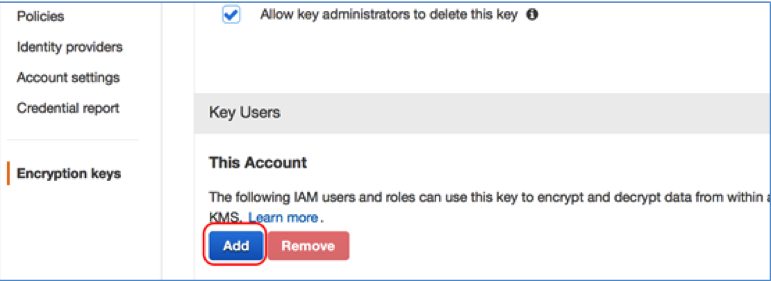

Next, you grant the role access to the key you just created with KMS:

- 1 From the IAM console, choose Encryption keys from the navigation pane.

- 2 Select EncFSForEC2InternalStorageKey (this is the key alias you configured in the previous section). To add a new role that can use the key, scroll down to the Key Policy and then choose Add under Key Users.

- 3 Choose the new role you created earlier in this post and then choose Attach.

- 4 The role now has permission to use the key.
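The boot script in this post only needs to decrypt with the key, so a narrower grant than the console's Key Users setting may be enough. The following sketch prints a key policy statement limited to `kms:Decrypt`. It is an assumption-laden illustration, not the author's method: the function name, the `Sid`, the account ID, and the role name are placeholders, and the statement would still have to be merged into the key's existing policy by an administrator.

```shell
#!/bin/bash
# Print a key policy statement that lets one IAM role call kms:Decrypt.
# Argument: the role ARN (account ID and role name below are placeholders).
kms_decrypt_statement() {
  cat <<EOF
{
  "Sid": "AllowInstanceRoleToDecrypt",
  "Effect": "Allow",
  "Principal": { "AWS": "$1" },
  "Action": ["kms:Decrypt"],
  "Resource": "*"
}
EOF
}

kms_decrypt_statement "arn:aws:iam::123456789012:role/your-role-name"
```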

Configure EC2 with role and launch configurations

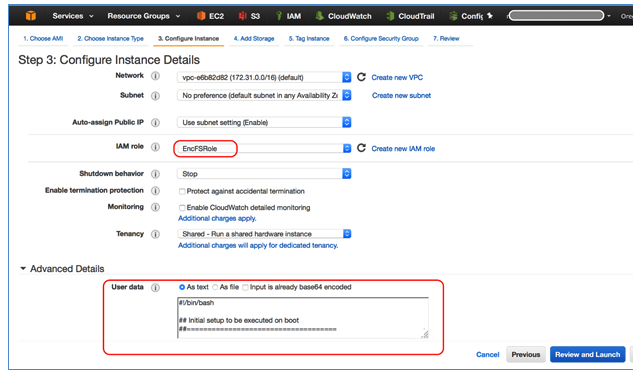

In this section, you launch a new EC2 instance with the new IAM role and a bootstrap script that executes the steps to encrypt the file system, as described earlier in the ‘Architectural overview’ section:

- 1 In the EC2 console, launch a new instance (see this tutorial for more details). In Step 3: Configure Instance Details, choose the IAM role you configured earlier, as shown in the following screenshot.

- 2 Expand the Advanced Details section (see previous screenshot) and paste the following script in the EC2 instance’s User data box. Keep the As text check box selected. The script will be executed at EC2 boot time.

Bash

#!/bin/bash

## Initial setup to be executed on boot

##====================================

# Create an empty file. This file will be used to host the file system.

# In this example we create a 2GB file called secretfs (Secret File System).

dd of=secretfs bs=1G count=0 seek=2

# Lock down normal access to the file.

chmod 600 secretfs

# Associate a loopback device with the file.

losetup /dev/loop0 secretfs

# Copy encrypted password file from S3. The password is used to configure LUKS later on.

aws s3 cp s3://an-internalstoragekeybucket/LuksInternalStorageKey .

# Decrypt the password from the file with KMS, save the secret password in LuksClearTextKey

LuksClearTextKey=$(aws --region us-east-1 kms decrypt --ciphertext-blob fileb://LuksInternalStorageKey --output text --query Plaintext | base64 --decode)

# Encrypt storage in the device. cryptsetup will use the Linux

# device mapper to create, in this case, /dev/mapper/secretfs.

# Initialize the volume and set an initial key.

echo "$LuksClearTextKey" | cryptsetup -y luksFormat /dev/loop0

# Open the partition, and create a mapping to /dev/mapper/secretfs.

echo "$LuksClearTextKey" | cryptsetup luksOpen /dev/loop0 secretfs

# Clear the LuksClearTextKey variable because we don’t need it anymore.

unset LuksClearTextKey

# Check its status (optional).

cryptsetup status secretfs

# Zero out the new encrypted device.

dd if=/dev/zero of=/dev/mapper/secretfs

# Create a file system and verify its status.

mke2fs -j -O dir_index /dev/mapper/secretfs

# List file system configuration (optional).

tune2fs -l /dev/mapper/secretfs

# Mount the new file system to /mnt/secretfs.

mkdir /mnt/secretfs

mount /dev/mapper/secretfs /mnt/secretfs

- 3 If you have not enabled it already, be sure to enable CloudTrail on your account. Using CloudTrail, you will be able to monitor and audit access to the KMS key.

- 4 Launch the EC2 instance, which copies the password file from S3, decrypts the file using KMS, and configures an encrypted file system. The file system is mounted on /mnt/secretfs. Therefore, every file written to this mount point is encrypted when stored to disk. Applications that process sensitive data and need temporary storage should use the encrypted file system by writing and reading files from the mount point, ‘/mnt/secretfs’. The rest of the file system (for example, /home/ec2-user) is not encrypted.
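The `dd` command that opens the boot script in step 2 writes no data: with `count=0`, `seek=2` only extends the file to 2 × `bs` bytes, which produces a sparse file. The following scaled-down sketch shows the effect with 1 MiB blocks in a temporary directory. The file location and variable names are illustrative, and the allocated size reported depends on the underlying file system.

```shell
#!/bin/bash
workdir="$(mktemp -d)"

# Same technique as the boot script, with 1 MiB blocks instead of 1 GiB.
dd of="$workdir/secretfs" bs=1048576 count=0 seek=2 2>/dev/null

# Apparent size in bytes: 2 * 1048576.
apparent_bytes="$(wc -c < "$workdir/secretfs" | tr -d ' ')"

# Space actually allocated on disk, in KiB; typically near zero for a
# sparse file until data is written to it.
allocated_kib="$(du -k "$workdir/secretfs" | cut -f1)"

echo "apparent size: $apparent_bytes bytes"
echo "allocated:     $allocated_kib KiB"
rm -rf "$workdir"
```

In the boot script, the later `dd if=/dev/zero of=/dev/mapper/secretfs` step is what fills the backing file, so the full 2 GB is consumed on the instance store only after the encrypted device is zeroed.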

You can list the encrypted file system’s status. First, SSH to the EC2 instance using the key pair you used to launch the EC2 instance. (For more information about logging in to an EC2 instance using a key pair, see Getting Started with Amazon EC2 Linux Instances.) Then, run the following command as root.

[root@ip-172-31-53-188 ec2-user]# cryptsetup status secretfs

/dev/mapper/secretfs is active and is in use.

type: LUKS1

cipher: aes-xts-plain64

keysize: 256 bits

device: /dev/loop0

loop: /secretfs

offset: 4096 sectors

size: 4190208 sectors

mode: R/W

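As the command’s results show, the file system is encrypted with AES in XTS mode using a 256-bit key. XTS is a block cipher mode of operation designed for encrypting data on storage devices. Note that XTS splits the key into two halves, so the 256-bit key reported here corresponds to AES-128; to obtain AES-256 as described in the architectural overview, pass --key-size 512 to cryptsetup luksFormat.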
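To check the cipher and key size from a script rather than by eye, the status output can be parsed. The following is a minimal sketch: the function name is hypothetical, and the sample text is copied from the output shown above so the example runs without root access or a LUKS device.

```shell
#!/bin/bash
# Read `cryptsetup status` output on stdin and print "<cipher> <keysize-bits>".
parse_crypt_status() {
  awk '
    $1 == "cipher:"  { cipher = $2 }
    $1 == "keysize:" { bits = $2 }
    END { print cipher, bits }
  '
}

# Sample taken from the output shown above. On an instance, pipe the live
# command instead: cryptsetup status secretfs | parse_crypt_status
sample='/dev/mapper/secretfs is active and is in use.
  type: LUKS1
  cipher: aes-xts-plain64
  keysize: 256 bits
  device: /dev/loop0'

summary="$(printf '%s\n' "$sample" | parse_crypt_status)"
echo "$summary"   # prints: aes-xts-plain64 256
```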

Conclusion

This blog post shows you how to encrypt a file system on EC2 instance storage by using built-in Linux libraries and drivers with LVM and LUKS, in conjunction with AWS services such as S3 and KMS. If your applications need temporary storage, you can use an EC2 internal disk that is physically attached to the host computer. The data on instance stores persists only during the lifetime of its associated instance. However, instance store volumes are not encrypted by default. This post provides a simple solution that balances the speed and availability of instance stores with the need for encryption at rest when dealing with sensitive data.
