CoreWeave Launches AI Object Storage

An object storage service optimized for AI workloads

This is a Press Release edited by StorageNewsletter.com on March 27, 2025 at 2:03 pm. This blog post has been written by Jeff Braunstein, storage principal product manager, CoreWeave, Inc.

The world of AI is evolving rapidly, and the need for performance-optimized, scalable, and secure storage solutions has never been greater.

At NVIDIA GTC 2025, CoreWeave announced the availability of CoreWeave AI Object Storage, a managed object storage service purpose-built for AI training and inference at scale.

AI Object Storage adds to an existing storage product lineup, including the company’s Distributed File Storage and Dedicated Storage products, solutions that are designed to provide scalable storage for AI workloads.

As AI models grow in size and complexity, traditional cloud storage solutions struggle to deliver the performance these workloads demand. AI Object Storage was engineered from the ground up to meet these challenges, enabling organizations to accelerate their AI workloads with unprecedented speed and efficiency.

Built for AI from the Ground Up

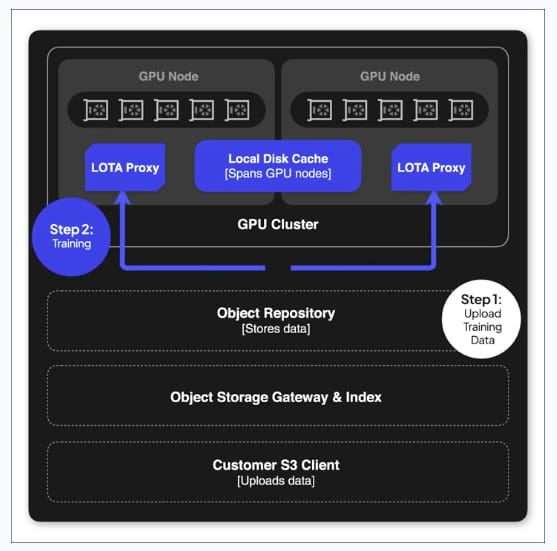

AI Object Storage delivers EB-scale, S3-compatible storage tailored for GPU-intensive AI model training. Designed to integrate with CoreWeave’s NVIDIA GPU compute clusters, it supports throughput of up to 2 GB/s per GPU and scales to hundreds of thousands of GPUs. With its Local Object Transport Accelerator (LOTA), AI Object Storage caches frequently used datasets and/or prestages data directly on the local NVMe disks of GPU nodes, reducing network latency and improving training speeds.
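The announcement does not describe how LOTA works internally. As a rough illustration of the general idea (serve an object from local NVMe if it is already there, otherwise fetch it from object storage and keep a local copy, optionally prestaging objects before a job starts), here is a minimal Python sketch of a generic read-through cache. The `ReadThroughCache` class, its `fetch` callback, and the directory layout are hypothetical and are not LOTA's implementation or API; in practice `fetch` might wrap an S3 `GetObject` call.

```python
import tempfile
from pathlib import Path
from typing import Callable


class ReadThroughCache:
    """Generic read-through cache backed by a local directory.

    Hypothetical illustration only; this is not LOTA's implementation.
    """

    def __init__(self, cache_dir: Path, fetch: Callable[[str, str], bytes]):
        self.cache_dir = cache_dir
        self.fetch = fetch  # fetch(bucket, key) -> object bytes from remote storage
        self.hits = 0
        self.misses = 0

    def _local_path(self, bucket: str, key: str) -> Path:
        # Mirror bucket/key under the cache directory (e.g. a local NVMe mount).
        return self.cache_dir / bucket / key

    def _store(self, path: Path, data: bytes) -> None:
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def prestage(self, bucket: str, keys: list[str]) -> None:
        # Copy objects to local disk ahead of time so the first read is local.
        for key in keys:
            path = self._local_path(bucket, key)
            if not path.exists():
                self._store(path, self.fetch(bucket, key))

    def get(self, bucket: str, key: str) -> bytes:
        path = self._local_path(bucket, key)
        if path.exists():
            self.hits += 1  # served from local disk, no remote round trip
            return path.read_bytes()
        self.misses += 1  # fetch remotely, then keep a local copy for next time
        data = self.fetch(bucket, key)
        self._store(path, data)
        return data


# Usage with an in-memory stand-in for the remote object store.
remote = {("datasets", "shards/000.tar"): b"shard-0",
          ("datasets", "shards/001.tar"): b"shard-1"}
remote_calls = []


def fake_fetch(bucket: str, key: str) -> bytes:
    remote_calls.append((bucket, key))
    return remote[(bucket, key)]


with tempfile.TemporaryDirectory() as tmp:
    cache = ReadThroughCache(Path(tmp), fake_fetch)
    cache.prestage("datasets", ["shards/000.tar"])
    first = cache.get("datasets", "shards/000.tar")   # hit (prestaged)
    second = cache.get("datasets", "shards/001.tar")  # miss, fetched remotely
    third = cache.get("datasets", "shards/001.tar")   # hit (stored by previous read)
    print(cache.hits, cache.misses, len(remote_calls))  # 2 1 2
```

A production cache would also need eviction when local disks fill up, consistency checks against the remote object, and concurrency control across processes; the sketch omits all of these.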

Traditional object storage systems often create bottlenecks in data-intensive AI workflows. AI Object Storage is built to remove these bottlenecks, providing the performance required to maximize GPU utilization while simplifying operations. Its caching technology works without additional tools or complex configuration, allowing AI teams to focus on building and refining their models.

Data flow from customer through CoreWeave AI Object Storage and LOTA to compute nodes

Key Benefits:

- Blazing-Fast Performance: Up to 2 GB/s per GPU, enabling faster training and inference cycles.

- Scalability: Trillions of objects, EBs of data, and performance that grows with your storage needs.

- Optimized for NVIDIA GPUs: Fully supports the latest NVIDIA GPU architectures, including Blackwell and Hopper.

- Simple and Flexible Pricing: No egress, per-request, or hidden fees; pay only for the storage you use.

- Enterprise-Grade Security: Encryption at rest and in transit, with role-based access control, audit logging, and lifecycle policies.
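The per-GPU figure translates into cluster-wide bandwidth by simple multiplication. The sketch below does that arithmetic under an idealized assumption that every GPU sustains the quoted peak of 2 GB/s at the same time and that throughput scales linearly; the function names are illustrative, and real throughput depends on object sizes, access patterns, and cache hit rates.

```python
PER_GPU_GB_PER_S = 2.0  # "up to 2 GB/s per GPU": a peak, not a guaranteed rate


def aggregate_gb_per_s(num_gpus: int, per_gpu: float = PER_GPU_GB_PER_S) -> float:
    """Idealized cluster-wide read bandwidth, assuming linear scaling."""
    return num_gpus * per_gpu


def seconds_to_read(dataset_tb: float, num_gpus: int,
                    per_gpu: float = PER_GPU_GB_PER_S) -> float:
    """Idealized time for the cluster to read a dataset once (1 TB = 1,000 GB)."""
    return dataset_tb * 1000 / aggregate_gb_per_s(num_gpus, per_gpu)


print(aggregate_gb_per_s(1_000))    # 2000.0 GB/s, i.e. 2 TB/s
print(seconds_to_read(100, 1_000))  # 50.0 seconds for a 100 TB dataset
print(aggregate_gb_per_s(100_000))  # 200000.0 GB/s, i.e. 200 TB/s
```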

Streamlined Deployment Across the CoreWeave Ecosystem

AI Object Storage is designed to integrate easily within the CoreWeave Cloud ecosystem, providing a streamlined experience for AI and ML workloads. It supports CoreWeave Cloud’s SAML/SSO authentication for user management, ensuring secure access and simplified identity federation. A managed Grafana dashboard, available directly from the CoreWeave Console, provides real-time insights into requests per second, bandwidth usage, and response durations, helping teams monitor performance and optimize workloads.

LOTA is integrated with CoreWeave’s Kubernetes Service (CKS), allowing CKS applications to take advantage of LOTA’s caching capabilities. Developers can also manage their storage infrastructure using the CoreWeave Terraform provider, enabling automation, infrastructure-as-code workflows, and rapid deployment of storage resources alongside GPU compute clusters. With deep integration into CoreWeave’s AI infrastructure, users can orchestrate and scale their workloads without added complexity.

CoreWeave AI Object Storage also includes built-in observability data via managed Grafana dashboards, providing valuable insights about the product’s performance. An example of one of the dashboards is shown below. This dashboard includes insights on requests per second, throughput, and request duration. Data can be dynamically grouped or filtered by bucket, region, operation type, or response code, allowing for deeper analysis and troubleshooting. With these insights, users can better understand usage patterns, identify potential issues, and ensure optimal performance of their AI-driven workloads.
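Grouping and filtering by bucket, region, operation type, or response code amounts to aggregating per-request records along chosen dimensions. The following Python sketch illustrates that idea only: the `Request` record, its fields, the `summarize` function, and the sample data are hypothetical and do not reflect the schema or queries behind CoreWeave's managed Grafana dashboards.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Hashable, Iterable


@dataclass(frozen=True)
class Request:
    # Hypothetical per-request record; field names are illustrative.
    bucket: str
    region: str
    operation: str      # e.g. "GetObject", "PutObject"
    status: int         # HTTP response code
    size_bytes: int
    duration_ms: float


def summarize(records: Iterable[Request], window_s: float,
              key: Callable[[Request], Hashable],
              keep: Callable[[Request], bool] = lambda r: True) -> dict:
    """Filter records with `keep`, group them by `key`, then compute
    requests per second, throughput, and mean request duration per group."""
    groups: dict = defaultdict(list)
    for r in records:
        if keep(r):
            groups[key(r)].append(r)
    return {
        k: {
            "requests_per_s": len(rs) / window_s,
            "bytes_per_s": sum(r.size_bytes for r in rs) / window_s,
            "mean_duration_ms": sum(r.duration_ms for r in rs) / len(rs),
        }
        for k, rs in groups.items()
    }


# Four requests observed over a 10-second window (made-up sample data).
records = [
    Request("train-data", "region-a", "GetObject", 200, 4_000_000, 12.0),
    Request("train-data", "region-a", "GetObject", 200, 6_000_000, 18.0),
    Request("train-data", "region-a", "PutObject", 200, 1_000_000, 30.0),
    Request("checkpoints", "region-a", "GetObject", 404, 0, 2.0),
]

# Group by bucket and operation type.
by_bucket_op = summarize(records, 10.0, key=lambda r: (r.bucket, r.operation))
print(by_bucket_op[("train-data", "GetObject")])
# {'requests_per_s': 0.2, 'bytes_per_s': 1000000.0, 'mean_duration_ms': 15.0}

# Keep only error responses and group by response code.
errors = summarize(records, 10.0, key=lambda r: r.status,
                   keep=lambda r: r.status >= 400)
print(errors)
# {404: {'requests_per_s': 0.1, 'bytes_per_s': 0.0, 'mean_duration_ms': 2.0}}
```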

Resources:

For details, view the Release Notes, which include a list of supported regions.

Check out the product documentation.