Pliops Collaboration with University of Chicago vLLM Production Stack to Enhance LLM Inference Performance

KV-Store technology with NVMe SSDs enhances vLLM Production Stack, ensuring high performance serving while reducing cost, power and computational requirements

This is a Press Release edited by StorageNewsletter.com on March 13, 2025 at 2:31 pm

Pliops Ltd. announced a strategic collaboration with the vLLM Production Stack developed by LMCache Lab, University of Chicago.

Aimed at revolutionizing large language model (LLM) inference performance, this partnership comes at a pivotal moment as the AI community gathers for the GTC 2025 conference.

Together, Pliops and the vLLM Production Stack, an open-source reference implementation of a cluster-wide full-stack vLLM serving system, are delivering unparalleled performance and efficiency for LLM inference. Pliops contributes its expertise in shared storage and efficient vLLM cache offloading, while LMCache Lab brings a robust scalability framework for multiple instance execution. The combined solution will also benefit from the ability to recover from failed instances, leveraging Pliops’ advanced KV storage backend to set a new benchmark for enhanced performance and scalability in AI applications.

“We are excited to partner with Pliops to bring unprecedented efficiency and performance to LLM inference,” said Junchen Jiang, head, LMCache Lab, University of Chicago. “This collaboration demonstrates our commitment to innovation and pushing the boundaries of what’s possible in AI. Together, we are setting the stage for the future of AI deployment, driving advancements that will benefit a wide array of applications.”

Key Highlights of Combined Solution:

- Integration: By enabling vLLM to process each context only once, Pliops and the vLLM Production Stack set a new standard for scalable and sustainable AI innovation.

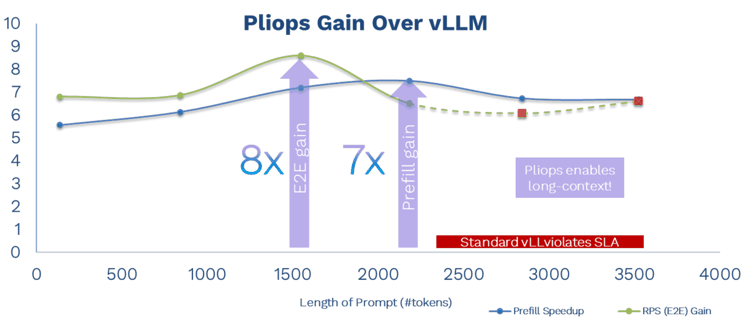

- Enhanced Performance: The collaboration introduces a new PB tier of memory below HBM for GPU compute applications. Utilizing cost-effective, disaggregated smart storage, computed KV caches are retained and retrieved efficiently, significantly speeding up vLLM inference.

- AI Autonomous Task Agents: This solution is optimal for AI autonomous task agents, addressing a diverse array of complex tasks through strategic planning, sophisticated reasoning, and dynamic interaction with external environments.

- Cost-Efficient Serving: Pliops’ KV-Store technology with NVMe SSDs enhances the vLLM Production Stack, ensuring high performance serving while reducing cost, power and computational requirements.

Looking to the future, the collaboration between Pliops and the vLLM Production Stack will continue to evolve through the following stages:

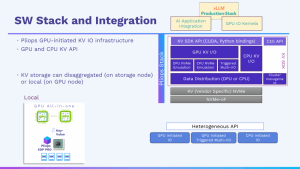

- Basic Integration: The current focus is on integrating the Pliops KV-IO stack into the production stack. This stage enables feature development with an efficient KV/IO stack, leveraging Pliops’ Lightning AI KV store. This includes using shared storage for prefill-decode disaggregation and KV-Cache movement, and joint work to define requirements and APIs. Pliops is developing a generic GPU KV store IO framework.

- Advanced Integration: The next stage will integrate Pliops vLLM acceleration into the production stack. This includes prompt caching across multi-turn conversations, as provided by platforms like OpenAI and DeepSeek, KV-Cache offload to scalable and shared key-value storage, and eliminating the need for sticky/cache-aware routing.
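To illustrate why a shared key-value store can remove the need for sticky or cache-aware routing, here is a minimal Python sketch. It is not the Pliops or LMCache API: the names `chunk_keys`, `SharedKVStore`, `Instance` and the chunk size are hypothetical, the store is a plain in-memory dictionary, and the "KV cache" is a placeholder byte string. The idea it shows is prefix-keyed lookup: each chunk of tokens is keyed by a hash of the whole prefix up to that chunk, so any serving instance can find KV data that a different instance computed for an earlier turn.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

CHUNK_TOKENS = 4  # hypothetical chunk size, kept tiny for illustration


def chunk_keys(token_ids: List[int], chunk: int = CHUNK_TOKENS) -> List[str]:
    """Return one key per full chunk; each key hashes the entire prefix so far."""
    keys: List[str] = []
    running = hashlib.sha256()
    full = len(token_ids) - len(token_ids) % chunk  # ignore the trailing partial chunk
    for start in range(0, full, chunk):
        piece = ",".join(map(str, token_ids[start:start + chunk]))
        running.update(piece.encode() + b";")
        keys.append(running.copy().hexdigest())
    return keys


class SharedKVStore:
    """Stand-in for a shared key-value backend (assumption: a simple dict)."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)


class Instance:
    """A toy serving instance that reuses KV chunks found in the shared store."""

    def __init__(self, store: SharedKVStore) -> None:
        self.store = store

    def serve(self, token_ids: List[int]) -> Tuple[int, int]:
        """Return (tokens reused from the store, tokens that had to be computed)."""
        keys = chunk_keys(token_ids)
        hits = 0
        for key in keys:
            if self.store.get(key) is None:
                break  # the reusable prefix ends at the first miss
            hits += 1
        for key in keys[hits:]:
            self.store.put(key, b"kv-placeholder")  # pretend we ran prefill
        reused = hits * CHUNK_TOKENS
        return reused, len(token_ids) - reused


if __name__ == "__main__":
    store = SharedKVStore()
    first, second = Instance(store), Instance(store)
    turn1 = list(range(8))
    turn2 = turn1 + [100, 101, 102, 103, 104]  # follow-up turn extends the context
    print(first.serve(turn1))
    print(second.serve(turn2))  # a different instance still reuses turn 1
```

In this toy setup the second instance never saw the first turn, yet it only computes the tokens appended by the follow-up, because the lookup depends on the shared store rather than on which instance handled the earlier request.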

“This collaboration opens up exciting possibilities for enhancing LLM inference,” commented Ido Bukspan, CEO, Pliops. “It allows us to leverage complementary strengths to tackle some of AI’s toughest challenges, driving greater efficiency and performance across a wide range of applications.”

Resources:

Blog: Unlocking New Horizons in GenAI with Pliops’ XDP LightningAI

Pliops XDP LightningAI Solution Brief – The New Memory Tier for LLMs (PDF)