Industry Facts 2024

A collection of Data Management and Storage Industry Facts for 2024

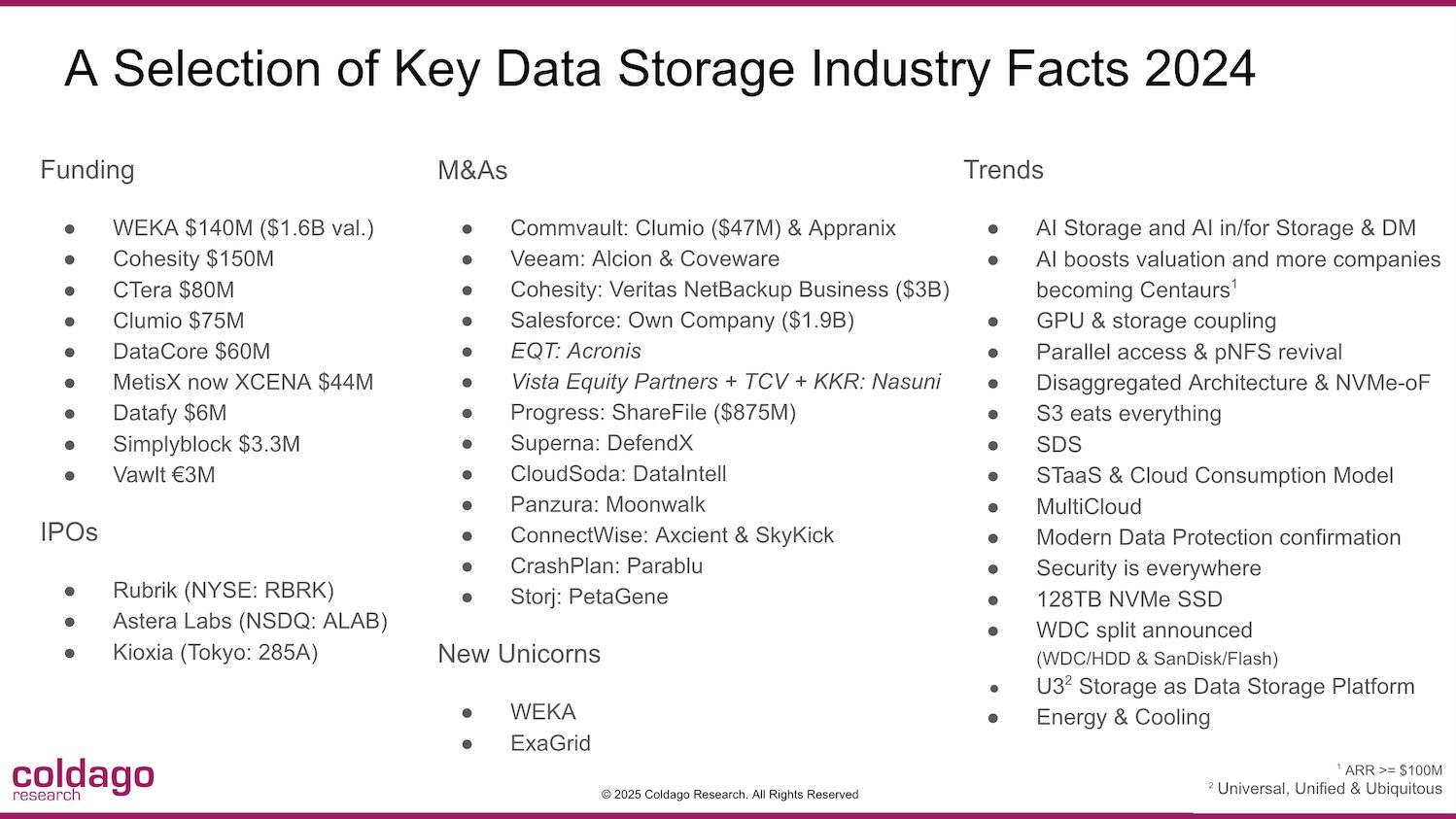

By Philippe Nicolas | January 14, 2025 at 2:01 pm

Before you jump into the list below of Facts 2024 collected from more than 30 companies, we wish to share our summary and observation of the main facts of the past year with an image that captures the essentials of 2024.

AirMettle

Donpaul Stephens

Founder & CEO

In 2024, the storage landscape is looking to complete an almost inevitable split like a tech-savvy amoeba: SMEs are snuggling up with service providers for easy, cost-effective solutions, while big players are either yanking their data back home or learning how to play the hybrid cloud game for control and cost efficiency. While everyone talks a good game on AI and analytics, computational storage devices are as niche as ever. Everyone’s fixated on APIs (“How can I do this?”), but when even the US government is talking about getting more efficient – we’ll soon have to answer to the efficiency piper.

Atempo

Renaud Bonnevoie

Director of Product Management

- Hybrid Cloud Adoption Accelerates

Companies have increasingly turned to hybrid cloud architectures, balancing cost efficiency and scalability with the need for data sovereignty. The growing complexity of workloads and the demand for seamless data movement between environments drive this trend.

- Security as the Cornerstone of Data Management

With the surge in cyber threats, businesses prioritized solutions combining data backup, advanced ransomware protection, and fast recovery capabilities. Zero Trust architectures emerged as the gold standard for securing IT infrastructures.

- Simplification Becomes a Competitive Differentiator

As businesses grapple with data migration and management complexities, solutions emphasizing ease of use and automation have gained significant traction. Streamlined processes and onboarding have become key factors in customer satisfaction and partner success.

Cerabyte

Christian Pflaum

CEO

- Optical data storage is on the rise!

Optical data storage is a reliable method for long-term data storage; depending on implementation, it can be superior to magnetic or solid-state data storage. Several companies have adopted different concepts to write bits with an optical mechanism: Cerabyte, Holomem, Optera, Silica, Folio Photonics, writing data on or into organic or inorganic transparent media by either creating physical bits (holes, nano-cracks), or changing the optical characteristics of the material.

- AI accelerates demand for memory and storage!

Energy consumption and performance are recognized as AI bottlenecks, prompting the industry to react accordingly. Additionally, the memory wall (capacity and bandwidth) is limiting the growth of AI, and exploding demand for higher-density solid-state storage products indicates that short-term and, subsequently, long-term storage solutions are seeing accelerated demand, especially with the emergence of new data-intensive AI applications.

- Long-term data storage continues to be underfunded!

The industry has realized the need to invest in memory and short-term storage solutions, especially solid-state storage solutions. On the other hand, new scalable technologies for low-cost and sustainable data storage lack sufficient funding to address long-term storage needs. This holds true for large data storage solution vendors as well as data storage start-ups. A future shortage of affordable sustainable capacity storage solutions seems to be inevitable assuming the status quo considering the data tsunami on the horizon.

CloudFabrix

Shailesh Manjrekar

CMO

- Data Fabric and Generative AI have, respectively, unified and democratized the siloed AIOps, Observability and Security domains

Operational domains were siloed and needed practitioners to be subject matter experts. Not anymore: they can use simple conversational questions to glean insights. As they say, the new operational intelligence language is “English”. Data Fabric becomes paramount to unify these operational domains.

- Data Automation has become the Achilles heel of Observability and AIOps, and Observability pipelines are emerging as the saviors

Data Automation includes Data Integration, Data Transformation, Data Enrichment and Data Routing, which need Composable Observability Pipelines for real-time telemetry. Lack of this results in low accuracy for predictive and prescriptive automation.

- Telcos and Service providers are adopting AIOps for Digital Resilience, Service Assurance and Digital Twins for Digital Service Predictions

Telcos and SPs comprise complex domains (Campus, Datacenter, Mobility, Optical) and data types (bulkstats, SNMP traps, syslogs, gNMI, OTEL, etc.). They are feeling incredible pressure from hyperscalers, want to reinvent Service Assurance and Service Predictions, and are adopting AIOps and Observability en masse.

Cohesity

James Blake

Head of Global Cyber Resiliency Strategy

- A year of change

2024’s going down in the history books as one of the most eventful years in cybersecurity to date. Cohesity’s acquisition of Veritas’ data business is just one example of a year filled with industry-changing acquisitions, long-awaited IPOs, massive venture capital investments and big takedown moments that had the everyday consumer talking about enterprise security. All of this occurred alongside economic ups and downs and market uncertainty.

- The good news

The security level of backup infrastructure has improved significantly in the past twelve months. Important features such as immutable storage are now table stakes, and it looks hopeful that ideas such as using zero trust to protect backup infrastructures, which are targeted by both nation states in wider attacks and ransomware gangs, are coming on stream, too.

- The bad news

Unfortunately, most companies still aren’t building the right processes, integrations and shared responsibility models to handle such devastating cyberattacks in a manner that minimises impact on the organisation. Organizations may have the greatest confidence in their cyber resilience, but the reality is that the majority are paying ransoms and are breaking ‘do not pay’ policies. The Cohesity Global Cyber Resilience Report 2024 revealed that 83% of respondents said their company would pay a ransom to recover data and restore business processes, or to do so faster.

Congruity360

Mark Ward

COO

- DSPM turned the security world’s attention on corporate data security versus 3rd party attacks

- Cloud to On-prem migrations accelerated and SMART Migrations became standard – No more Lift and Shift, only move the Right data!

- NYDFS and HiTrust regulations grew FANGS…companies woke up to PII and Sensitive data risk.

CTERA

Aron Brand

CTO

- AI-Driven Infrastructure Transformation

AI’s exponential growth drove sweeping changes in data infrastructure, pushing storage systems to new limits. Enterprises scaled storage capacities into petabytes and exabytes, with next-generation architectures setting benchmarks for real-time processing and model training.

- Emergence of Storage as a Service (STaaS)

The move from legacy, hardware-based storage to cloud-delivered STaaS models accelerated in 2024. Enterprises increasingly embraced consumption-based pricing, eliminating capital expenditures in favor of operational flexibility. Leaders in cloud storage expanded their offerings, making scalable, pay-as-you-go storage solutions mainstream for businesses of all sizes.

- Integration of AI Automation in Data Management

AI-powered data management tools gained traction as enterprises sought to automate storage provisioning, data classification, and disaster recovery. This wave reduced operational complexity and bolstered compliance capabilities. Key players integrated AI models into enterprise storage solutions, enabling predictive analytics, automated scaling, and proactive threat detection.

DataCore

Abhi Dey

CPO

- The Rise of Composable Storage

The growing adoption of SSDs highlighted the critical need for storage infrastructures that could seamlessly integrate diverse technologies and adapt dynamically to changing workloads. Composable storage emerged as the definitive solution, enabling organizations to pool and reallocate resources in real time without disruption. By breaking down silos and ensuring optimal utilization of both SSDs and HDDs, composable storage allowed businesses to achieve unprecedented flexibility, sustainability, and efficiency in their storage operations.

- Object Storage Adapting to the Edge

Object storage evolved beyond its traditional core and cloud implementations to address the demands of edge environments. Containerization technologies, such as Kubernetes, facilitated the deployment of object storage in compact, portable forms at distributed locations. This allowed organizations to process and act on data closer to its origin, reducing latency and improving operational efficiency. Object storage became a vital solution for the growing demands of edge computing.

- The Progression of Container-Native Storage

Container adoption surged as enterprises increasingly required storage solutions tailored for Kubernetes and other containerized environments. Container-native storage systems addressed the unique demands of stateful workloads, offering persistence, reliability, and seamless integration with microservices-based architectures. These solutions provided enterprise-grade data services, including automated management, intelligent data placement, and performance optimization, enabling businesses to operate modern applications with greater agility and efficiency.

Datadobi

Steve Leeper

VP of Product Marketing

The acceleration in the growth of unstructured data meant the need for insights was greater than ever. Insights are key for managing the lifecycle of data from creation to archiving. Insights are also key for ensuring the most appropriate data is included in data lakes and data lakehouses that support new workloads in the AI/ML space. It’s not good enough to blindly copy data into a repository that will be used to train or augment a model. Only businesses that could confidently say their data was GENAI-ready achieved success and ROI in 2024.

Fujifilm Recording Media

Rich Gadomski

Head of Tape Evangelism

- Innovation in Tape Storage

In 2024, we continued to see innovation coming from tape library suppliers like IBM with their Diamondback S3 Deep Archive, Spectra Logic Cube with Black Pearl and Quantum’s Scalar i7 RAPTOR. These new and highly dense “rack size” libraries offer S3 compatible interfaces making object-based tape and hybrid clouds a cost-effective and energy-efficient reality.

- Sustainability Moved out of the Spotlight

AI pushed sustainability from the center stage spotlight in 2024 as organizations found frustration with overly aggressive and unachievable ESG goals. But a remarkably simple and elegant solution emerged for IT data storage managers in the form of modern tape systems. For the majority of data that quickly becomes cool, cold, or frozen, studies show that eco-friendly tape consumes at least 80% less power and produces over 95% less CO2e than equivalent capacities of primary storage devices.

- AI Craze and Related Energy Predicament

While sustainability cooled, AI took off beyond a buzzword in 2024 and into high orbit with after-burning, energy intensive GPUs. With 10X or more energy requirements compared to conventional CPUs, power efficiency needed to be found somewhere. Savvy IT leaders recognized the value of active archive data repositories fueled by energy efficient tape libraries to support data intensive AI workloads.

Hitachi Vantara

Octavian Tanase

Chief Product Officer

AI Made Way for The Year of the Data Platform

Overwhelmed with scaling data infrastructure and modernizing applications, all while managing carbon footprints and IT budgets, organizations are simplifying their approach to storage.

- Data platforms offer a centralized, scalable way to store, manage, and analyze data, regardless of location. By integrating seamlessly with hybrid cloud environments, data platforms empower organizations to break down data silos and unlock the true potential of their information assets.

- New object storage appliances are designed to quickly scale to accommodate vast amounts of unstructured data that is prevalent in fueling numerous AI use cases, and each object is stored with rich metadata, which allows for easier categorization, searchability, and data life cycle management.

- QLC flash technology delivers high-density, cost-effective storage ideal for large-capacity needs. Public cloud replication can seamlessly backup and replicate data to the cloud for enhanced disaster recovery and availability.

- Competitive businesses will also upgrade their on-prem traditional infrastructure to be more modern using agile products such as software-defined storage and S3 object storage, and an automated control plane to create the private cloud part of the hybrid cloud.

Jason Hardy

CTO for AI

The Need for AI Guidance was Apparent

- Many IT and business leaders report feeling unprepared for AI adoption and seek guidance on how to best leverage GenAI, machine learning and other emerging technologies and then identify their best opportunities to optimize potential value.

- A 2024 Enterprise Infrastructure for Generative AI report notes that less than half (44%) of organizations have well-defined and comprehensive policies regarding GenAI, and just 37% believe their infrastructure and data ecosystem are prepared to support their AI ambitions. Compounding the problem is a lack of relevant experience, with over 50% reporting a lack of skilled employees with GenAI knowledge. And hiring people with those valued skill sets can be difficult, too.

- Businesses are looking to highly experienced partners to identify high-impact opportunities and create a strategic roadmap for AI integration and growth while also managing the AI platforms, tools and software to enable them to facilitate the best possible AI model development, deployment, management and outcome.

Jeb Horton

Senior Vice President, Global Services

Customers Reap the Benefits of Flexible Spending Models as Hybrid Cloud Adoption Soars

- Enterprises typically spend most of their IT budgets, a large portion of which is dedicated to infrastructure, on maintaining aging systems rather than growing or transforming their IT practices.

- Respondents from organizations that have yet to adopt ITaaS are much more likely to report a negative impact on their revenues (65% vs. 53%) and reputations (56% vs. 31%) due to system downtime than those from adopters.

- As enterprises increasingly turn to hybrid cloud environments to meet their diverse IT needs, there is a notable shift towards consumption-based models that offer greater agility, lower total cost of ownership (TCO), and simplified operations management.

- According to Fortune Business Insights, the global IaaS market is expected to grow from $156.93 billion in 2024 to $738.11 billion by 2032. This growth is driven by the demand for scalable, cost-effective IT solutions and the surge in cloud adoption.

- The escalating demand for interoperability standards between cloud services and existing systems is driving the growth of hybrid cloud in the market.

- The interoperability standards in cloud services are driving the market to surpass USD 111.98 billion in 2024 and reach a valuation of USD 587.64 billion by 2031.
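The two forecasts quoted above each imply a compound annual growth rate, which makes them easier to compare. The following is a minimal Python sketch of that arithmetic, not a figure taken from the cited reports: the function name `implied_cagr` is our own, and it assumes the quoted numbers are start-of-period and end-of-period market sizes with annual compounding, which the underlying reports may define differently.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate implied by two market sizes."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the list above, in billions of US dollars.
iaas = implied_cagr(156.93, 738.11, 2032 - 2024)
second_forecast = implied_cagr(111.98, 587.64, 2031 - 2024)

print(f"IaaS forecast, 2024-2032: {iaas:.1%} per year")
print(f"Second forecast, 2024-2031: {second_forecast:.1%} per year")
```

Under these assumptions, both forecasts imply growth of more than 20% per year.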

Huawei

Peter Zhou

President of Data Storage Product Line

All flash Storage applied to more and more use cases

- AI training requires high performance from all flash storage

- High performance object storage is used for HPC/AI where data needs to be transferred flexibly across different domains

- Backup/recovery required shorter windows in Finance and Internet service providers

GenAI’s rise prompted people to reconsider what storage for AI is: is it only a high-performance file system?

- Customers think, “I just need a fast file system”

- Besides performance, vendors think we should provide more cyber security and data integration tools, e.g. data collection and a unified platform for both file and object

Ransomware Protection is a must-have requirement in storage procurement in Finance

- As a vendor, we found that ransomware protection has now become a must for Finance enterprises when they buy new storage systems. For example, this requirement rose from 10% in 2023 to 85% in 2024

HYCU

Simon Taylor

Founder and CEO

- 2024: A Year of Innovation and Recognition

HYCU stood at the forefront of this transformation, achieving several notable milestones. Our groundbreaking Generative AI Initiative with Anthropic, introduced at IT Press Tour, demonstrated HYCU’s commitment to pushing the boundaries of AI-powered data protection. This collaboration has set new standards for how enterprises can securely implement and protect AI workloads while maintaining data integrity.

- Google Cloud recognized HYCU as Partner of the Year for Backup and Disaster Recovery at their annual customer event Google Cloud Next, validating HYCU’s dedication to providing robust cloud data protection solutions.

- Gartner positioned HYCU as a Visionary, for the third year, one of only three companies, in their Magic Quadrant for Enterprise Backup and Recovery Software Solutions.

- TechTarget awarded HYCU R-Cloud Gold in their annual Storage Magazine Awards for Backup and Disaster Recovery.

- HYCU introduced the general availability of support for GitHub and GitLab, adding to existing support for Bitbucket, Terraform, AWS CloudFormation, CircleCI, and Jira in the HYCU R-Cloud Marketplace. The newest availability provides developers with the broadest data protection capabilities to protect and recover IP, source code, and configurations across an enterprise.

Hydrolix

Antony Falco

VP Marketing

- Data lakes start streaming

Organizations began demanding more actionable insights from the mountains of data they’re paying to store in S3 buckets in 2024. Driven largely by the explosion of log data, companies that rely on rapid response to security threats and shifting user behavior began exploring reliable, cost-effective ways to extract real-time business intelligence from their data lake investments, albeit with mixed results.

- Media and gaming go multi-CDN

CDNs changed how content delivery platforms improve the user experience. With the globalization of that experience, media and gaming organizations have started looking for more sensible and scalable ways to manage content delivery across different CDNs, each of which is optimized to the unique needs of specific geographies and users. The trend points to a pattern around simplification of operations. Managing those different CDNs in a coordinated manner is the next challenge.

- Data decoupling from compute accelerated

The cost-driven trend of separating compute for ingest, processing and analytics from storage (and into services independent of each other) saw a marked uptick as more providers launched services with decoupled architectures or raced to retrofit existing, tightly-coupled architectures in order to remain competitive. This decoupling allowed different departments—security teams, business intelligence, marketing, data analytics, product management—to each manage their own preferred tools and processes for extracting insights from the same sets of data while managing their own budgetary and performance goals.

Index Engines

Scott Cooper

VP of Field Engineering

- Ransomware Keeps Evolving

Modern ransomware attacks are becoming increasingly sophisticated, employing methods such as intermittent encryption that leave metadata untouched while corrupting the actual content of data. This approach allows ransomware to bypass traditional detection tools, which often rely on surface-level checks like metadata integrity and can fool systems into believing data remains uncompromised. The impact is severe: data recovery efforts become significantly more challenging, often resulting in extended downtime and substantial financial and operational costs. Organizations must understand that their current detection tools may already be outdated and look to solutions that monitor data content for anomalies and changes over time. Without a proactive approach, recovery from these attacks will only become more complex.

- AI as a Force for Good and Evil

Artificial intelligence and machine learning are playing a dual role in the cybersecurity landscape. On one side, AI-powered tools are being leveraged to strengthen defenses, predict threats, and automate responses to malicious activity. These tools are key in identifying data anomalies, detecting corruption, and mitigating risks before they escalate. On the other hand, cybercriminals are increasingly employing AI to create more sophisticated, financially motivated attacks that evolve faster than some defenses can keep up. AI allows attackers to identify system vulnerabilities and deploy ransomware quickly and in a far-reaching way. The only way to keep up with the bad actors is to use the same tools they do, as a force for good.

- Recovery Over Prevention

The focus of cybersecurity has shifted significantly over the past few years. It’s no longer a matter of if an attack will happen but whether an organization can recover and at what cost. Ransomware attackers have moved beyond simple encryption and now use advanced methods to target critical systems and data, making recovery efforts extremely complex. For organizations, this means that prevention alone is no longer enough; the ability to recover quickly, minimize disruption, and ensure data integrity is the new benchmark of success. Cyber resilience plans that integrate proactive data monitoring and AI-driven tools are now must-haves for everybody. Organizations that prioritize recovery will see reduced operational downtime, financial impact, and reputational damage, while those unprepared risk data loss and ransom payouts.
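The idea of monitoring data content rather than metadata can be illustrated with a small sketch. The Python below is purely illustrative and is not Index Engines’ method: it assumes, hypothetically, that encrypted regions show up as chunks with near-maximal byte entropy, and the function names, the 4 KiB chunk size and the 7.5-bit threshold are all our own choices.

```python
import math
from collections import Counter


def shannon_entropy(chunk: bytes) -> float:
    """Return the Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not chunk:
        return 0.0
    total = len(chunk)
    counts = Counter(chunk)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def high_entropy_chunks(data: bytes, chunk_size: int = 4096,
                        threshold: float = 7.5) -> list[int]:
    """Return the offsets of chunks whose entropy meets the (assumed) threshold."""
    flagged = []
    for offset in range(0, len(data), chunk_size):
        if shannon_entropy(data[offset:offset + chunk_size]) >= threshold:
            flagged.append(offset)
    return flagged


# Simulate a file whose middle 4 KiB was overwritten with random-looking bytes,
# while its size and surrounding content (and hence most metadata) look normal.
plain = (b"quarterly report " * 1000)[:4096]
random_looking = bytes(range(256)) * 16  # uniform byte distribution, 4096 bytes
sample = plain + random_looking + plain

print(high_entropy_chunks(sample))
```

Compressed or legitimately encrypted files also have high entropy, so a fixed threshold like this would produce false positives; comparing each file against its own history over time, as the text suggests, is one way a real tool might address that.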

Leil Storage

Aleksander Ragel

CEO

- HDD Market Recovery

2024 marked a significant rebound for the HDD market, driven by increased demand from hyperscale data centers and enterprises expanding their storage capacities.

- AI-Driven Storage Demand

The proliferation of AI workloads required scalable and efficient storage solutions, providing reliability and performance necessary to handle these data-intensive applications.

- Data Security Prioritization

With cyber threats on the rise, the need to secure storage systems has increased; technologies like advanced encryption and real-time monitoring capabilities are becoming a must.

MinIO

Ugur Tigli

CTO

- Object storage has become the dominant storage technology for AI/ML

Every major model is trained on an object store but one (Meta), and they are building their own object store presently.

- The public and private cloud are effectively indistinguishable at this point

The tools and technologies are available everywhere (containerization, orchestration, RESTful API, software-defined everything) and the result is enterprises have the option to create the cloud they control or build robust hybrid architectures.

- Despite claims to the contrary, the enterprise market is all NVMe at this point

The only things shipping in HDD are replacement disks.

PoINT Software & Systems

Thomas Thalmann

CEO

- Demand for tape storage due to AI and HPC workloads

AI and HPC workloads have significantly increased the need for storage resources. This has resulted in the growing importance of efficient storage systems, such as tape, and tape technology has been increasingly integrated into storage infrastructures. Tape storage systems have not only contributed to the cost-effective storage of large amounts of cold data, but have also optimized energy requirements.

- Compliance and cybercrime increase data protection measures

Due to increased compliance requirements and heightened cybercrime risks, companies have invested significantly more in their data protection measures. In particular, solutions have been installed that increase the level of data protection, e.g. through replication, air-gap and media disruption. Increasingly, S3-based tape storage systems have been installed as they are easy to integrate, meet all security requirements and are cost-effective.

Quantum

Tim Sherbak

Enterprise Product Marketing Manager

All-flash cyber resilience took center stage as attacks raged on

- In 2024, cyberattacks like ransomware plagued organizations across industries, leading them to prioritize cyber resilience just as they do prevention. This put data backup in the spotlight, with organizations focusing on quick recovery capabilities to help mitigate disruptions to business operations stemming from attacks.

- Prevention-centered approaches remain important as well, but all-flash storage emerged as an ideal solution to deliver fast recovery and keep business operations running.

- While previously costly for backups, new deduplication-optimized, all-flash appliances now enable ultra-fast recovery of secondary data copies, making comprehensive, cost-effective cyber resilience accessible and allowing organizations of all types to better protect themselves against ransomware attacks.

Qumulo

Steve Phillips

Head of Product Management & Marketing

- Generative AI’s impact

Generative AI has empowered business leaders and IT professionals with transformative capabilities that have made significant productivity improvements, instilling a sense of inspiration and hope for the future. For many organizations embracing AI, adopting data platforms has become imperative to give cloud AI factories access to high-quality data that requires holistic, end-to-end data curation and management (from ingest to archive) to deliver faster time to insight and innovation.

- Emergence of Multicloud As the Norm

Multicloud adoption and regulatory frameworks have driven the requirement for sophisticated data governance practices to manage dispersed datasets while ensuring compliance and seamless integration, enabling transformative business opportunities.

- Cloud-Native Data Solutions

As organizations embrace the cloud, they are embracing solutions optimized for cloud data services that deliver the elasticity required to scale performance and capacity, up and down, to meet application and business needs.

ScaleFlux

JB Baker

VP Products & Marketing

- AI is dominating the narrative, though a handful of companies dominate the infrastructure deployment

The hyperscalers and the newer players like CoreWeave are building out massive AI infra capacities to provide cloud-based AI-as-a-service infrastructure. Many large-scale enterprises are still more focused on scaling capabilities for their existing workloads and mission-critical applications.

- Power is a problem

Total power supply in the grid is limiting and delaying the deployment of new data centers. The growth in total power demand, exacerbated by the growth in AI, is straining the grid and forcing innovations in power efficiency. Power density in GPUs is challenging not just the power supply, but also the cooling technology.

- Quality of data access is just as critical as the data processing engines.

Data is the fuel for the AI engine. The GPUs and TPUs risk low utilization rates, resulting in poor power efficiency and higher “cost per job” when data access (ingress or egress) is stalled or interrupted. Assuring consistently fast loading and flushing of massive data sets is a crucial factor in architecting an AI cluster.

Scalytics

Alexander Alten

CEO and founder

- Federated Learning Becomes a Business Requirement

2024 saw federated learning (FL) shift from experimental to implementation for some business areas handling sensitive data. With regulations like GDPR and HIPAA tightening, companies are slowly moving away from centralizing data toward processing it locally or in motion. Scalytics Connect is one of the key players in this shift, showing companies how to train AI models or run advanced business analytics on decentralized data while maintaining full control, privacy, and compliance.

- Rise of Autonomous AI Agents and Agentic RAG

The AI landscape in 2024 was dominated by the emergence of AI agents towards year’s end. These agents went beyond simple task execution and are striving to become fully autonomous problem solvers in the coming year. Agentic RAG (Retrieval-Augmented Generation), a next-gen AI system, introduced the concept of agents creating sub-agents to complete tasks. Scalytics Connect enabled this innovation by developing the missing layer of governance, context, and control for agent operations, with decentralized workflows and real-time event-driven execution.

- Data in Motion Replaces Data at Rest

Data no longer sits idle in data lakes or marts. In 2024, the concept of Data in Motion became essential for AI-driven enterprises. Instead of copying data between systems, enterprises embraced in-situ processing at the edge and in-flight data processing, enabled by tools like Apache Flink. Scalytics Connect supports this transformation by leveraging Apache Kafka protocols to process streaming data directly where it resides, keeping data secure, reducing transfer costs, enabling real-time AI decision-making, and integrating the data-in-motion paradigm in situ with Apache Flink, Apache Spark, and their commercial products.

Simplyblock

Rob Pankow

CEO

- AI’s Impact on Storage

The growth of AI workloads in 2024 fundamentally changed enterprise storage requirements, with organizations discovering that traditional storage architectures created severe bottlenecks for GPU utilization. This drove widespread adoption of NVMe-first architectures for AI training workloads, with enterprises seeking solutions that could deliver consistent sub-millisecond latency and high throughput at scale. This trend drove strong growth in, and a preference for, software-defined architectures, where scaling out is much easier than with traditional hardware-bound storage systems.

- Public Cloud Growth Continues

Public cloud providers continued their strong growth trajectory in 2024, with AWS growing on average 18% YoY, while Azure and Google Cloud grew over 30%. Nearly all large enterprises now maintain some public cloud footprint. The multi-cloud and hybrid cloud scenarios are here to stay, with many enterprises stuck “in-between the clouds”. Some choose to keep on-prem deployments for sovereignty and cost reasons, and some are using multiple hyperscalers to maintain negotiating power. On the other hand, we also saw an increase in workload repatriation, particularly for data-intensive applications, as organizations sought to optimize costs and performance across hybrid environments. Examples include 37signals and GEICO.

- NVMe Adoption Peaking

NVMe solidified its position as the standard for high-performance workloads in 2024, with particularly strong adoption in AI and database deployments. Organizations increasingly leveraged local NVMe storage for transactional workloads, seeking to minimize latency and maximize IOPS for their most demanding applications. Many databases are trying to natively work with NVMes while forgetting many of the benefits that reliable storage systems provide. Enterprises running a variety of databases and stateful systems are stuck between database vendors offering their cloud services and orchestrating a multitude of other workloads themselves with lots of point solutions. This situation drives a high level of inefficiency in the cloud while prompting a need for better solutions.

Spectra Logic

Ted Oade

Director of Product Marketing

- Global Energy Demand Outpaces Supply

Global energy demand is rising at a rate outpacing the growth of the world’s energy supply. This imbalance is fueled by the rapid adoption of technologies such as artificial intelligence, cloud computing, and electric vehicles. This gap threatens productivity and raises sustainability concerns, as our current demand for power is increasingly straining electric grids and driving up energy costs. Without solutions that address both energy supply and usage efficiency, these imbalances will only worsen, putting technological progress at risk.

- AI Servers Require Significant Energy and Cooling

AI servers consume much more power than traditional servers due to the heavy computational load required for tasks like model training. AI servers can use up to 14 times more energy than traditional servers and generate much more heat, creating a burden on cooling systems. The dual challenge of higher energy costs and increased cooling demands compounds the strain on already stressed power grids, making energy efficiency a must for ever-growing AI operations.

- Tape Storage is Energy Efficient

Magnetic tape storage is proving to be one of the most energy-efficient solutions available today, particularly for managing cold data, information that is rarely accessed but still needs to be stored. Compared to disk-based systems, tape libraries consume the same amount of energy but offer from 10x to 50x the storage capacity, making them an optimal solution for reducing energy usage in data centers. By shifting cold data to tape, organizations can free up energy while also repurposing cooling resources to support energy-intensive AI servers. For both on-premises and cloud environments, tape offers a scalable and sustainable solution for managing energy challenges as AI and data demands continue to grow.
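To make the arithmetic behind the capacity claim above concrete, here is a minimal sketch. It assumes, as the paragraph states, that a tape library draws the same total energy as a disk-based system while holding 10x to 50x the capacity. The function name and the normalized disk baseline of 1.0 are illustrative assumptions, not measured figures.

```python
def relative_energy_per_tb(capacity_multiplier: float) -> float:
    """Energy per TB on tape relative to disk (disk baseline = 1.0).

    Assumes both systems draw the same total energy, so energy per TB
    scales inversely with the capacity multiplier.
    """
    if capacity_multiplier <= 0:
        raise ValueError("capacity_multiplier must be positive")
    return 1.0 / capacity_multiplier


# The 10x and 50x bounds come from the text; everything else is illustrative.
for multiplier in (10, 50):
    share = relative_energy_per_tb(multiplier)
    print(f"{multiplier}x capacity -> {share:.0%} of disk energy per TB")
```

Under that equal-energy assumption, each terabyte moved from disk to tape would need between one tenth and one fiftieth of the energy it needed on disk.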

StorPool

Boyan Ivanov

CEO

- Software-Defined Storage Became First Choice This Year for Block, File and Object

2024 saw a significant shift in how modern IT teams view distributed, software-defined storage (SDS), with more companies adopting it for block, file and object storage due to its scalability, flexibility, and resilience. It is becoming a “first-in-the-list” approach to storing data for many use cases. As data volumes grow and various applications emerge, from AI to security, IT infrastructure becomes more complex and the demands on its underlying components increase. The modern enterprise has considerably higher requirements for speed, uptime, API-driven manageability, self-service, security and operational efficiency. These are easier to achieve with a software-centric approach to data storage, which drives the increasing demand for such solutions.

- The Convergence of Cybersecurity and Storage

With an increase in cyberthreats, such as ransomware, data breaches and insider attacks, the set-it-and-forget-it method of data storage is no longer enough. By implementing cybersecurity measures – such as advanced encryption, updated access controls, data masking, AI-powered threat detection, activity logging and more – directly into storage infrastructures, organizations are better prepared to respond to breaches, reducing the potential damage from cyberattacks.

- GenAI Requires Low Latency Storage for Effective LLM RAG Inferencing

As demand for real-time AI services grows, ensuring that LLM transactional performance meets the required scale is a key driver in the advancement of AI technologies. High transactional performance has become a necessity for LLM retrieval-augmented generation (RAG) inferencing, which has become requisite for fighting LLM hallucinations and delivering highly accurate responses. Storage systems that scale linearly while maintaining consistent low latencies are essential.

StorONE

Gal Naor

CEO

The data management and storage landscape in 2024 was shaped by increasing demands for security, AI integration, and cloud scalability. Here are three key directions defining the industry’s response to these challenges:

- AI-Driven Storage Optimization

The use of AI in storage management has grown significantly, with platforms now leveraging AI to maximize drive performance, automate resource allocation, and optimize costs. One key capability is automated tiering, which ensures that frequently accessed data remains on high-performance storage while older, less-accessed data is moved to lower-cost tiers. This approach is especially critical as organizations manage vast amounts of data that must be retained for extended periods.

- Unified Platforms for Cloud Integration

A major trend in 2024 is the adoption of unified storage platforms capable of operating across all public, private, and hybrid clouds. These platforms simplify management, reduce costs, and ensure robust disaster recovery, helping organizations dynamically scale to meet growing data demands without compromising performance.

- Advanced Data Protection and Security

With modern threats like ransomware escalating, security features such as advanced encryption, immutable backups, and real-time threat detection are now integral to storage solutions. Platforms that were designed to address today’s evolving threats and comply with regulations like GDPR and FDIC mandates are proving essential for data protection.
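The automated tiering described under AI-Driven Storage Optimization above can be illustrated with a deliberately simple sketch. It uses a fixed last-access threshold rather than the AI-driven policy the text refers to, and it is not any vendor's implementation. The tier names, the 30-day window, and the sample objects are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StoredObject:
    name: str
    last_accessed: datetime


def choose_tier(obj: StoredObject, now: datetime,
                hot_window: timedelta = timedelta(days=30)) -> str:
    """Return 'performance' for recently accessed data, else 'capacity'.

    The 30-day default window is an assumption chosen for illustration.
    """
    age = now - obj.last_accessed
    return "performance" if age <= hot_window else "capacity"


# Hypothetical sample data, evaluated at a fixed point in time.
now = datetime(2024, 12, 31)
objects = [
    StoredObject("orders.db", datetime(2024, 12, 20)),
    StoredObject("2019-archive.tar", datetime(2020, 1, 15)),
]
placement = {obj.name: choose_tier(obj, now) for obj in objects}
print(placement)
```

A production system would presumably weigh access frequency, object size, and retention rules as well; the point here is only the basic hot-versus-cold placement decision.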

Swissbit

Martin Schreiber

Head of Product Management & Technical Marketing MEM & MCU

- Recovery

The market showed clear signs of recovery after the high inventory levels of 2023. In 2024, demand was improving but remained highly selective, driven by the specific needs of individual verticals.

- Renewed Focus

There was a significant resurgence in design-in activities throughout 2024. After a period of stagnation in 2023, customers reignited their focus on new projects and innovations, driving a wave of development efforts aimed at long-term, strategic goals.

- Shift to the Edge

AI use cases underwent a substantial transformation, with applications such as model training and deployment increasingly moving away from centralized data centers to the Edge. This shift underscored the growing need for low-latency, decentralized data processing to support emerging technologies and real-time decision-making.

TrueNAS (iXsystems)

Brett Davis

EVP

- AI Predominantly Drives Need for Storage Capacity, Not Performance

As AI has accelerated quickly through the hype cycle, companies using AI have quickly realized that, outside of LLM training pipelines, no specialty “AI Storage” is needed for the other 95% of AI workloads, from training optimization to RAG and general purpose inferencing. Existing flash and hybrid storage already provides plenty of performance headroom in most cases. That said, AI-generated content is driving additional data growth, creating the need for high capacity storage tiers beyond low latency tiers and parallel cluster file systems.

- Broadcom VMware Acquisition Impact on Storage

Extreme price increases and uncertainty in VMware licensing fees have driven the search for lower cost storage options, moving economics to the forefront of storage buying criteria to offset additional software costs. Additionally, the negative experience with VMware has rekindled concerns about being locked in to storage vendor ecosystems, driving elevated interest in open storage systems due to flexibility and non-proprietary interoperability.

- Demand for Hard Drives Still Persists

While flash demand increases, the still-unbeaten economics of hard drives keep them tremendously viable for capacity tiers, especially when paired with flash in a caching tier. Hard drive demand did not dip in 2024, as organizations still need to juggle explosive data growth that is typically outpacing their storage budgets.

Tuxera

Duncan Beattie

Market Development Manager

Microsoft made the most dramatic security changes to Windows remote storage in 25 years

- The SMB protocol now requires signing by default to prevent relay attacks and data tampering

Furthermore, the NTLM protocol was deprecated and a replacement local Kerberos system was announced. But it didn’t stop there: SMB can now block all use of NTLM, preventing harvesting of credentials. Organisations can also mandate SMB encryption from clients, throttle password guessing attempts through SMB, require minimal SMB dialects instead of relying on negotiation, and use SMB over QUIC with TLS 1.3 and certificates in all environments, not just Azure IaaS. These many layers combine to provide significant defensive improvements and move the industry forward through Windows’ and SMB’s broad reach.

- SMB performance records broken

Direct performance has often been compromised by the complexity of deployment, the need to adhere to the CIFS/SMB protocol standards defined by Microsoft, and the diversity of client operating systems and advanced configurations. These factors collectively deplete resources. However, this year has witnessed a significant advancement in SMB. It is now feasible to achieve over 11GB/s to a Windows workstation and over 5GB/s to a Mac workstation. This level of delivery is crucial for M&E, Medical, and AI applications as resolutions and data rates continue to rise. SMB clustering, which is process-heavy and was previously very limited, has also advanced considerably, scaling to more than thirty nodes and delivering over 400GB/s to the network.

- NVMe wider adoption by vendors

NVMe offers an exciting glimpse into the near future of storage platforms, including PCIe high-density arrays with low latency and parallelism, NVMe-oF (NVMe over Fabrics) with RDMA and TCP over Ethernet, and HCI workflows for virtualization alongside Cloud and Edge deployments. Pricing is becoming more realistic as additional vendors adopt the new format, although cost remains a significant factor. NVMe, being PCIe-based, has also introduced a steep learning curve for adopters. NVMe is reshaping the storage industry by delivering unparalleled performance and efficiency, thereby fostering innovation.

Vawlt

Ricardo Mendes

CEO

A real concern I have is that in order to get the benefits of evolving AI technologies, organisations may want to relax (or, in fact, are already relaxing) their approach to data privacy, data control and operational sovereignty. Because of the high costs and complexity of running such solutions, they are likely to be made available centrally by single entities that have the infrastructure and the knowledge to do so. Correspondingly, one must consider the costs and threats that organisations may incur, not only in providing sensitive data to train models and so on, but also in becoming operationally dependent on these entities. All this change and challenge opens the door to a level of control that organisations could have over the world and people’s lives, which is clearly undesirable (to say the least). We actually already have a taste of this with the dependency entire companies have created by relying on single cloud providers. While single-cloud concentration is already a grave concern, the new level of reliance driven by AI-powered tools and solutions can be even worse. Moreover, these entities (and/or their technologies) could manipulate the data, and so validate the “truth” at a level that would be almost impossible to roll back from. Will we, as citizens, accept this as inevitable? Or will we realise privacy-preserving models operating over encrypted data (the dream)? Will this even be possible (a crazy evolution of things in the space of privacy-preserving computation and homomorphic encryption)? These are complex questions, for sure – but I believe that they do matter.

Versity

Meghan McClelland

VP Product

- Expansion of Object-to-Tape Workflows

The adoption of object-to-tape workflows surged in 2024 as organizations prioritized cost-effective, long-term data storage solutions. Versity, with its open-source S3 gateway, was at the forefront of this trend.

- Lowering Cost and Carbon Footprint

With its focus on sustainability, Versity enabled customers to store data on the lowest-cost, lowest-carbon storage technologies (Object Storage on Tape). This approach not only crushed the cost of cloud storage but also helped organizations meet aggressive carbon-reduction goals.

- Modern, Open, and Vendor-Agnostic Architecture

In 2024, Versity continued to deliver industry-leading simplicity and openness with its modern software architecture. It provided drop-in replacements for aging legacy systems (HPE DMF, IBM HPSS, IBM TSM, Oracle HSM, Quantum StorNext) while adding open-source S3 gateways, cross-platform compatibility, a modern GUI for easy management, and outstanding support. The platform’s hardware, media, cloud provider, and primary filesystem agnostic approach eliminates vendor lock-in.

Volumez

John Blumenthal

Chief Product & Business Officer

- The root cause of cloud provider platform cost and performance challenges is unbalanced systems, leading to overprovisioning and waste.

- Many workloads are stuck on-prem and unmigratable due to performance, availability, and cost limitations – balancing IaaS will create a new performance and economic capability that will cause workloads to move.

- GPU utilization is below 40%, making GPUs one of the scarcest resources and significantly driving up AI/ML costs
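The cost effect of the low GPU utilization noted above can be shown with a short calculation. This is a sketch under stated assumptions: the hourly price is a hypothetical placeholder, and the model simply divides the price paid by the fraction of time the GPU does useful work. Only the 40% figure comes from the text.

```python
def cost_per_useful_gpu_hour(hourly_price: float, utilization: float) -> float:
    """Effective price of one hour of useful GPU work.

    utilization is the fraction of paid time the GPU is busy (0 < u <= 1).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_price / utilization


hourly_price = 2.00  # hypothetical list price per GPU-hour, for illustration only
for utilization in (0.40, 0.80):
    effective = cost_per_useful_gpu_hour(hourly_price, utilization)
    print(f"utilization {utilization:.0%}: {effective:.2f} per useful GPU-hour")
```

In this simple model, a GPU that is busy 40% of the time costs 2.5 times its list price per useful hour, and doubling utilization halves that effective cost.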

XenData

Phil Storey

CEO

- Increased Cloud Media Archive Adoption but mainly in the US

With our Media and Entertainment clients in the USA with multiple petabytes of media archives, we have seen a significant increase in planning for and implementing migration from on-premises storage to public cloud object storage. This trend has not occurred to the same extent outside the USA where on-prem disk and LTO tape continue to dominate for media archives.

- Repatriation from the Cloud

Even though many US media organizations are planning for a move to public cloud storage, we continue to see movement in the reverse direction from others. The cost of keeping growing multi-petabyte archives in public cloud has meant that they are migrating content back to on-prem solutions which are more cost effective at scale.

- AI Enablement

In 2024, we saw a growing interest in AI enablement of file-based and object storage archive systems. The challenge for data storage suppliers will be offering AI solutions with ease of use and good ROI.

Zadara

Yoram Novick

CEO

- AI is Shifting Focus to Inference at the Edge

The rapid evolution of artificial intelligence has shifted the spotlight from resource-heavy model training in public clouds and massive data centers to optimizing inference on localized devices. At the core of this shift is AI inference, the process of generating new responses and insights by utilizing trained AI models. Advancements in small language models are central to this trend, enabling AI on devices with limited computing power. This shift empowers businesses to deploy localized intelligence that operates independently of cloud connectivity and deliver faster, more efficient, context-aware insights, driving innovation across industries.

- Edge AI for Low Latency and Real-Time Decision-Making

Latency has long been a challenge for cloud-based AI due to the requirement to transfer data back and forth to servers located in the cloud. Edge AI solves this challenge by processing data locally on edge devices, delivering ultra-low latency responses crucial for applications where every millisecond matters. For instance, autonomous vehicles rely on Edge AI to make split-second decisions based on local sensor data, and manufacturing operations benefit from real-time defect detection and predictive maintenance. By reducing latency, Edge AI provides faster decision-making and a significant competitive advantage, especially in industries requiring real-time responsiveness.

- Edge AI Adoption for Privacy, Security, and Cost Reduction

Edge AI minimizes privacy concerns by keeping sensitive data on the home device, removing the need to transmit information to cloud servers and eliminating the risks associated with security breaches or compliance failures. This is particularly beneficial for sectors handling confidential data, such as healthcare and finance. Additionally, Edge AI reduces costs by minimizing bandwidth and cloud infrastructure usage. By offering secure, cost-effective AI, Edge AI solutions are attractive for organizations, especially those with devices in remote environments where connectivity costs can be significant.
