MinIO AIStor, Purpose-Built for AI and Data Workloads

Extends the S3 API with the ability to PROMPT objects.

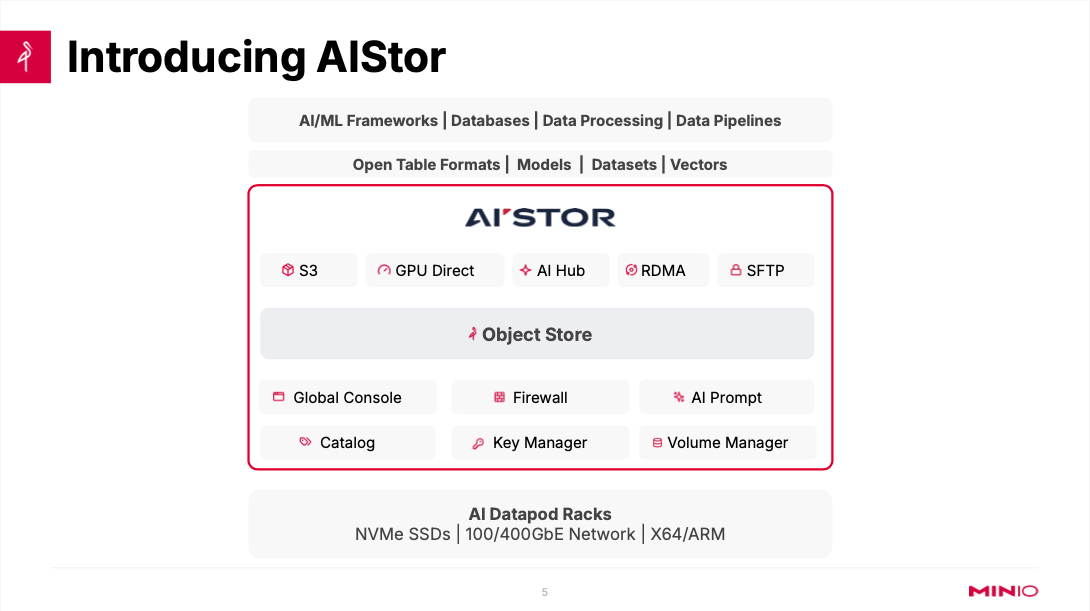

This is a press release edited by StorageNewsletter.com on November 14, 2024 at 2:03 pm.

MinIO, Inc. announced AIStor, an evolution of its Enterprise Object Store designed for the exascale data infrastructure challenges presented by modern AI workloads. AIStor provides new features, along with performance and scalability improvements, to enable enterprises to store all AI data on a single infrastructure.

Recent research from MinIO underscores the importance of object storage in AI and ML workloads. Polling more than 700 IT leaders, the company found that the top 3 reasons motivating organizations to adopt object storage were to support AI initiatives and to deliver performance and scalability modeled after the public clouds. The firm is the only storage provider solving this new class of AI-scale problems in private cloud environments. This has driven the company to build new AI-specific features, while also enhancing and refining existing functionality, specifically tailored to the scale of AI workloads.

“The launch of AIStor is an important milestone for MinIO. Our object store is the standard in the private cloud and the features we have built into AIStor reflect the needs of our most demanding and ambitious customers,” said AB Periasamy, co-founder and CEO. “It is not enough to just protect and store data in the age of AI, storage companies like ours must facilitate an understanding of the data that resides on our software. AIStor is the realization of this vision and serves both our IT audience and our developer community.”

While MinIO was already designed to manage exascale data, these advances make it easier to extend the power of AI to introduce new functionality across applications without needing to manage separate tools for analysis.

Of particular note is the introduction of a new S3 API, promptObject. This API enables users to “talk” to unstructured objects in the same way one would engage an LLM, moving the storage world from a PUT and GET paradigm to a PUT and PROMPT paradigm. Applications can use promptObject through function calling with additional logic, and it can be combined with chained functions that address multiple objects at the same time. For example, when querying a stored MRI scan, one can ask “where is the abnormality?” or “which region shows the most inflammation?” and promptObject will show it. The applications of this extension are almost infinite. This means that application developers can exponentially expand the capabilities of their applications without requiring domain-specific knowledge of RAG models or vector databases. This will dramatically simplify AI application development while simultaneously making it more powerful.


Additional new and enhanced capabilities in MinIO AIStor include:

- AIHub: a private, Hugging Face API-compatible repository for storing AI models and datasets directly in AIStor, enabling enterprises to create their own data and model repositories on the private cloud or in air-gapped environments without changing a single line of code. This eliminates the risk of developers leaking sensitive datasets or models.

- Updated Global Console: a completely redesigned user interface for MinIO that provides extensive capabilities for Identity and Access Management (IAM), Information Lifecycle Management (ILM), load balancing, firewall, security, caching and orchestration, all through a single pane of glass. The updated console features a new MinIO Kubernetes operator that further simplifies the management of large-scale data infrastructure spanning hundreds of servers and tens of thousands of drives.

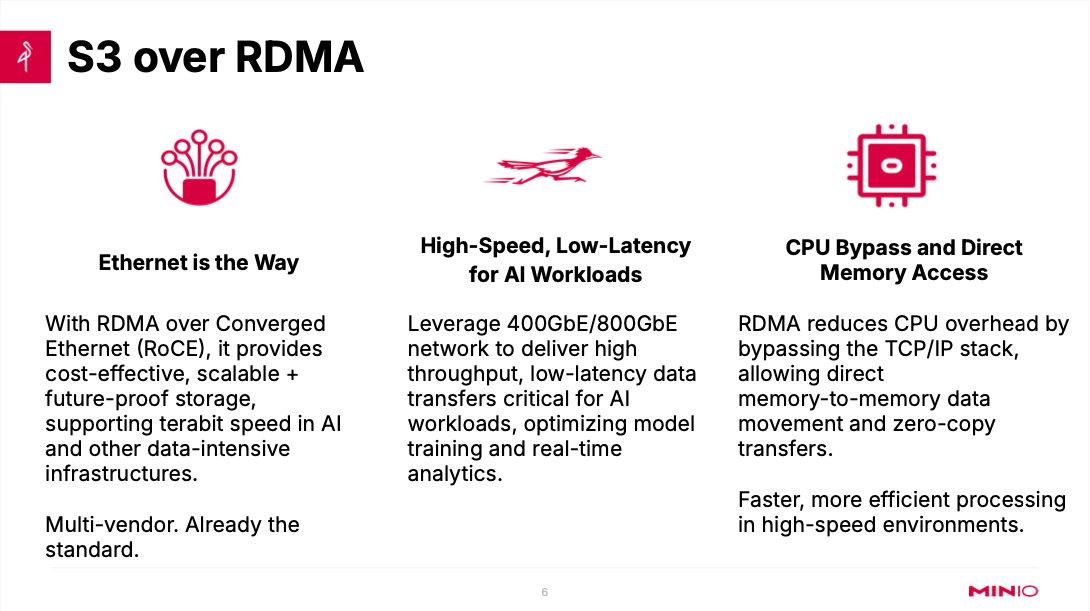

- Support for S3 over Remote Direct Memory Access (RDMA): enables customers to take full advantage of their high-speed (400GbE, 800GbE, and beyond) Ethernet investments for S3 object access by leveraging RDMA’s low-latency, high-throughput capabilities, and provides performance gains required to keep the compute layer fully utilized while reducing CPU utilization.

“AMD EPYC processors provide unparalleled core density, exceptional storage performance and I/O, aligned with high performance compute requirements,” said Rajdeep Sengupta, director, systems engineering, Advanced Micro Devices, Inc. “We have deployed the MinIO offering to host our big data platform for structured, unstructured, and multimodal datasets. Our collaboration with MinIO optimizes AIStor to fully leverage our advanced enterprise compute technologies and address the growing demands of data center infrastructure.”

The AIStor launch follows a series of major announcements in the AI storage space, starting with the release of MinIO DataPod, its reference architecture for large-scale AI data infrastructure. The vendor also demonstrated its compute extensibility with announcements supporting optimizations for Arm-based chipsets, and with a customer win: it is now powering the Intel Tiber AI Cloud. Collectively, these announcements frame MinIO’s leadership in the AI storage space and its place at the center of the AI ecosystem.

More info on the blog.

Comments

This news is a big one for the industry and for MinIO. To put things in context, our audience has to realize that MinIO is by far the most widely adopted object storage solution on the planet. By several metrics, revenue excepted, MinIO has broken records, and it has contributed to the success of the Amazon S3 API for almost 10 years. Arriving on the market 10 years ago, after Caringo and Cleversafe, both founded around 2005, MinIO has become synonymous with a standard, as many developers, architects, project leaders and engineering teams pick its software as the foundation for their project, to design a prototype or to run a ubiquitous storage service. It's a real success, no doubt.

Beyond this universal adoption, for a few years now they have ridden the Kubernetes wave and become a reference for storage services in this domain.

Now the story is different: AI is eating everything and pops up in every IT project, as users wish to leverage AI to boost and modernize their applications and, more broadly, to improve their productivity and thus their business impact. In a nutshell, every company has an AI project and every IT vendor must have an AI story. So it is no surprise that MinIO has jumped into the domain, and for quite some time many ML and GenAI projects have adopted MinIO. And as AI means a real data deluge, users on a cloud trajectory have suffered from exploding costs.

The team realized that it couldn't just offer the same software for AI, so it decided to offer a new animal with several new things in it, a new commercial software product named AIStor. In other words, modern data workloads require a modern storage approach, especially at scale, and even more so at hyperscale.

AIStor embodies a convergent product. As we like to say, AI means 2 things for storage, storage for AI and AI in or for storage, and here you have both.

As the press release above lists, AIStor lands on the market with several advanced features:

- A new S3 API, promptObject, which enables interaction with objects by interrogating their content without relying on vector databases or RAG approaches. We can even say that the GET model evolves into a PROMPT one,

- AIHub, which offers a private Hugging Face API directly on on-premises storage, avoiding data externalization,

- A new GUI, very intuitive and comprehensive, as we felt when we played with it at the show,

- and S3 over RDMA, a real innovation considering that I/O in the AI domain requires low latency and high throughput with limited CPU usage. We are speaking here about RoCE v2. And we anticipate some other developments with Nvidia.


The battle continues among storage vendors with different approaches. Even if MinIO claims that S3 is a must in the AI domain, we see other companies promoting file-based solutions, either pushing NFS with specific developments (Vast Data is a good example here) or leveraging parallel file storage, with the industry-standard pNFS like Hammerspace, soon NetApp and even Dell, with other commercial offerings like Panasas (VDURA) or Weka, or with open-source flavors such as DDN, fueled by Lustre. But the interesting aspect is that these file-based players also invest in and develop advanced S3 foundations, as they realize that dataset sizes and the way users work with data fit the object model. This closes the loop: S3 is here again, and as a reader, you probably read our article "S3 eats everything" published in 2019.


In the pure object storage landscape, vendors with an HDD-based philosophy, such as Cloudian, DDN with WOS a few years ago, DataCore, Dell, Hitachi Vantara or Scality, to name a few, have finally introduced new flash-based S3 storage. In reality, they have no choice. Others, more recent, like Huawei, Pure Storage or Vast Data, offer flash-based S3 storage solutions, as they have provided S3 access since the origin of their products.

Recent news illustrates a strategic move for MinIO: Arm support, the Intel partnership for the Tiber AI Cloud (remember that Intel sits on the MinIO board), soon AMD, and surely some synergies with Nvidia.

Our other comment relates to the timing and place of MinIO's announcement. The team chose to announce AIStor during the KubeCon conference this week in Salt Lake City, UT. As the big HPC, and now AI, event is next week with Supercomputing in Atlanta, GA, the choice seems a bit disconnected. And SC would have been the perfect occasion to celebrate the company's 10 years, as the firm was officially launched on November 22, 2014, exactly 10 years ago in a few days.

On the company side, speaking with AB Periasamy, CEO and co-founder, we learned that the last $103 million round, raised in January 2022, almost 3 years ago, and oversubscribed 3x, has not been touched at all and is just producing interest. So the business is good, and we anticipate some acceleration soon...

What a trajectory since our first meeting with AB in December 2015.
