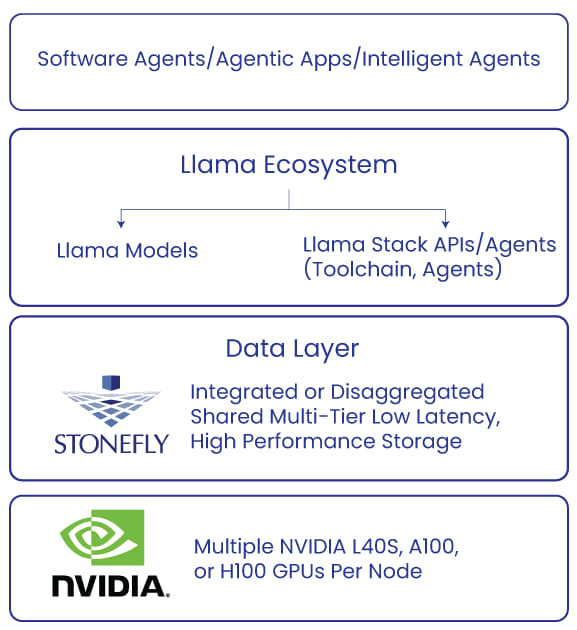

Llama Ecosystem on StoneFly Nvidia GPU-Based AI Servers

With integrated shared NVMe storage

This is a Press Release edited by StorageNewsletter.com on October 22, 2024 at 2:00 pm.

StoneFly, Inc. announces the integration of its Nvidia GPU-powered AI servers with the Llama ecosystem.


This integration brings the full capabilities of Llama’s AI and ML tools to StoneFly’s scalable, modular AI server platform. It gives enterprises an AI solution with optional integrated storage for demanding, data-centric applications.

The company’s AI servers are powered by Nvidia L40S, A100, and H100 GPUs. They integrate with the Llama stack, enabling enterprises to streamline several stages of AI development, including model training, fine-tuning, and production deployment. The Llama stack provides open-source AI models and software. StoneFly’s modular AI servers supply the performance, scalability, and optional integrated multi-tiered, shared/disaggregated storage needed to run these workloads in real-time AI applications.

Built and Tested for Data Scientists, Analysts, Developers, and Enterprise Use Cases

Creating and testing AI applications requires storage for large data sets, scalability, and ransomware protection. Together these drive up TCO and make ROI harder to achieve. The firm’s AI servers address these challenges with a consolidated turnkey solution.

The reference architecture shows how running the Llama ecosystem on the company’s AI servers simplifies and streamlines AI development, testing, and deployment in enterprise data centers.

Benefits of Using StoneFly AI Servers for Llama Ecosystem

StoneFly’s Nvidia GPU AI servers are designed to deliver high performance for AI and ML applications, including natural language processing (NLP) and large-scale data analytics. Integration with the Llama ecosystem extends this with compatibility with Llama’s AI tools and frameworks.

- High-Speed Data Processing: The built-in architecture is dual-controller and active/active, with multiple GPUs per node. It is designed to deliver faster data throughput and processing without bottlenecks, making it well suited to AI/ML applications that demand high compute power and real-time performance.

- Flexible Storage Options: StoneFly AI servers support optional multi-tiered hot and cold storage, either integrated or as disaggregated shared storage. Users can place storage in the same appliance or in a separate shared repository/appliance, tailoring their AI environment as needed.

- Optional Ransomware-Proof Security: StoneFly’s Air-Gapped Vault, always-on air-gapped backups, and immutable storage technology keep critical AI and ML workloads safe from cyber threats, providing robust ransomware-proof data protection for Llama workloads.

- Easy to Set Up and Manage: The AI servers are easy to set up and come with an intuitive real-time graphical interface that provides detailed insights into system resource usage, such as CPU and network performance, simplifying ongoing management.

- Cost-Effective AI Servers: StoneFly AI servers offer a lower initial purchase cost compared to other AI servers in the market with similar specs, reducing CapEx. Flexible storage options allow users to configure storage within the same appliance or extend it through modular repositories, helping minimize unnecessary hardware costs. Additionally, these servers scale without the need for forklift upgrades, ensuring that enterprises can grow as needed while maintaining a lower TCO.

Availability:

StoneFly’s AI servers with Llama ecosystem support are available.
