OCP Global Summit 2024: XConn Technologies and MemVerge Showcasing CXL Memory Sharing Solution for AI Workloads

Using Apollo CXL 2.0 switch supporting both CXL 2.0 and PCIe Gen 5 and Memory Machine X software with Global IO-free Shared Memory Objects (GISMO) capability

This is a Press Release edited by StorageNewsletter.com on October 21, 2024 at 2:01 pm

XConn Technologies and MemVerge, Inc. announced a joint demonstration of the industry’s 1st scalable Compute Express Link (CXL) memory sharing solution.

Specifically designed to supercharge AI workloads, this demo will highlight how CXL technology can enhance performance, scalability and efficiency of memory, while reducing TCO for AI applications, in-memory databases and large-scale data analytics workloads.

The 2024 OCP Global Summit, held October 15-17, 2024, at the San Jose Convention Center, San Jose, CA, serves as the premier global event for innovation in open hardware and software. At this event, the 2 companies demonstrated how their cutting-edge CXL memory sharing solution can unlock performance gains for AI-driven workloads, positioning it as a game-changing development for data centers and high-performance computing environments.

During the event, Jianping Jiang, SVP, business and product, XConn, also detailed the benefits of composable memory for AI workloads in his presentation, “Enabling Composable Scalable Memory for AI Inference with CXL Switch,” given at OCP on October 17.

“AI workloads are among the most resource-intensive applications, and our scalable CXL memory sharing solution addresses the growing need for increased memory bandwidth and capacity,” said Gerry Fan, CEO, XConn Technologies. “At the OCP Global Summit, we will show how our collaboration with MemVerge not only improves AI model inference performance but also enables more efficient memory usage, reducing infrastructure costs while maximizing compute power.”

Charles Fan, CEO and co-founder, MemVerge, added: “The rise of AI has transformed the demands on memory architectures. Our partnership with XConn brings a new class of solutions featuring fabric-attached CXL memory with sharing enabled by our Memory Machine X software. This innovation unlocks the performance potential of AI workloads by enabling seamless scalability, reducing latency, and dramatically improving system throughput.”

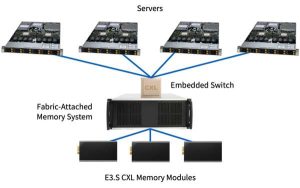

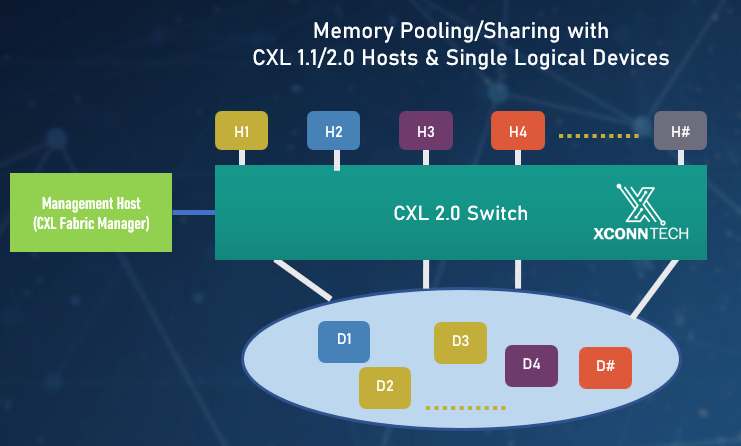

The focal point of the demonstration will be the XConn Apollo CXL 2.0 switch, a first-of-its-kind hybrid solution supporting both CXL 2.0 and PCIe Gen 5 in a single design. AI and HPC environments stand to benefit significantly from this technology, as it eliminates memory bottlenecks, boosts memory bandwidth, and enhances system efficiency. The Apollo switch is engineered to enable AI models to process larger datasets faster and more efficiently, driving improvements in applications such as deep learning (DL), AI model inference, real-time data analytics, and in-memory databases.

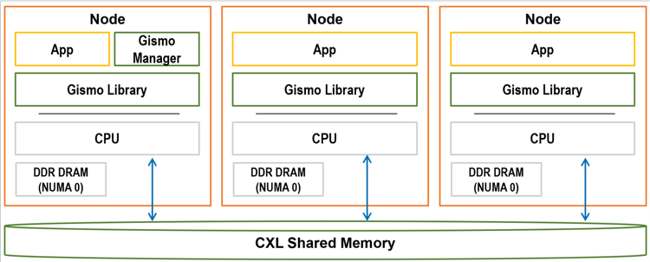

In tandem, MemVerge’s Memory Machine X software was highlighted during the demonstration. The software’s Global IO-free Shared Memory Objects (GISMO) capability allows multiple nodes to have direct access to the same CXL memory region while providing cache coherence.

GISMO (Global IO-free Shared Memory Object)

Demos of Composable Memory Systems powered by the XConn Apollo switch were shown on the exhibit floor at Innovation Village locations FN-125 and FN-126. Separately, a full-rack computing system with 2 dual-socket servers and up to 12TB of CXL memory running MemVerge’s GISMO was shown at booth B31, Innovation Village, hosted by Credo Technologies.

For customer samples and/or Apollo reference boards, contact XConn. If you’re ready to test drive a shared memory system, PoCs are available from MemVerge upon request.
