OCP Global Summit 2024: Panmnesia CXL-Enabled AI Cluster Including CXL 3.1 Switches

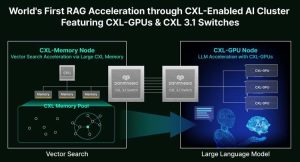

The CXL-enabled AI cluster comprises a ‘CXL-Memory Node’ equipped with CXL memory expanders and a ‘CXL-GPU Node’ equipped with CXL-GPUs.

This is a press release edited by StorageNewsletter.com on October 21, 2024 at 2:00 pm.

At OCP Global Summit 2024, Panmnesia, Inc., a Korean fabless startup that develops CXL switch SoCs and CXL IP, presented the first CXL-enabled AI cluster featuring CXL 3.1 switches.

The OCP Global Summit, which Panmnesia is attending this year, is hosted by the OCP (Open Compute Project), the world’s largest data center hardware development community. At the event, global companies discuss solutions for building cost-effective and sustainable data center IT infrastructure. At this year’s summit, which focuses particularly on AI, Panmnesia sought to expand its global customer base by demonstrating its CXL-enabled AI cluster accelerating RAG (retrieval-augmented generation), a next-generation AI application used in services such as ChatGPT.

Intensifying AI competition highlights necessity of CXL

As AI services grow in importance, companies are striving to improve their quality. In recent years, they have repeatedly sought higher accuracy by increasing the size of AI models or utilizing more data, which has driven up enterprises’ demand for memory. Traditionally, enterprises increased memory capacity by adding more servers. However, this approach incurs unnecessary expense, because each additional server requires purchasing a range of components beyond the memory itself. This is where CXL (Compute Express Link), a next-generation interconnect, comes in. Unlike the server-based approach, CXL offers a new way to expand memory: companies only need to purchase the memory and the CXL device, rather than paying for additional server components. As a result, CXL is attracting interest from major IT companies burdened by the cost of inefficient memory expansion.

CXL solutions for AI

While CXL is gaining global attention, Panmnesia has drawn industry interest for its leadership in CXL technology development. The company first garnered attention by introducing ‘DirectCXL’, the world’s first full-system framework with CXL 2.0 switches, at the 2022 USENIX Annual Technical Conference. It further solidified its position by unveiling the world’s first system that includes all types of CXL 3.0/3.1 components at the 2023 SC (Supercomputing) exhibition. The company has also announced CXL solutions that accelerate AI in response to industry demand. First, at the Flash Memory Summit (FMS) 2023, it demonstrated accelerating recommendation systems, one of the most commercially used AI applications, on its CXL 3.0/3.1 framework. Subsequently, at CES 2024, Panmnesia won a CES Innovation Award for a CXL-enabled AI accelerator that reduces data movement overhead by processing data close to memory. Most recently, at this year’s OCP/OpenInfra Summit, the company introduced CXL-GPU, a solution that expands GPU system memory through CXL technology, further solidifying Panmnesia’s position as a leader in CXL solutions for AI.

CXL-Enabled AI Cluster

At OCP Global Summit 2024, Panmnesia showcased the CXL-enabled AI Cluster, which represents the cutting edge of the company’s AI-focused CXL technology.

The CXL-enabled AI cluster comprises a ‘CXL-Memory Node’ equipped with CXL memory expanders, and a ‘CXL-GPU Node’ equipped with CXL-GPUs. The CXL-Memory Node provides large memory capacity through multiple CXL memory expanders, while the CXL-GPU Node accelerates AI model inference/training via numerous CXL-GPUs.

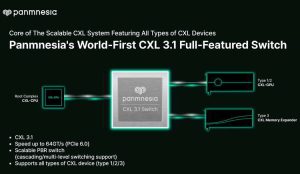

To construct this AI cluster, Panmnesia employed its two main products: the CXL IP and the CXL switch. First, the firm’s CXL IP is embedded in each device in the system to enable CXL functionality. Because the CXL IP optimizes communication among the devices, it allows memory expansion without sacrificing performance while remaining cost-effective.

Next, Panmnesia’s CXL 3.1 switch interconnects the various types of devices described above. This involves more than physical connections. CXL classifies accelerators such as GPUs as Type 2 devices and memory expanders as Type 3 devices, and to interconnect these different device types, the switch must support the features each type requires. Because the company’s CXL 3.1 switches meet these requirements, they can be used to build CXL-enabled AI clusters that combine different types of devices. The switches also play a crucial role in scaling across multiple nodes (servers) through support for CXL 3.1 features designed for high scalability, such as multi-level switching and port-based routing.
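The switch requirements described above can be illustrated with a toy model. The following Python sketch is not Panmnesia’s implementation or the CXL wire protocol; the class names, the `port_id` field, and the routing-table layout are hypothetical. It records which CXL protocols (CXL.io, CXL.cache, CXL.mem) each device type uses, rejects a device that a switch cannot serve, and resolves a destination port ID through cascaded switches, loosely mirroring multi-level switching with port-based routing.

```python
from dataclasses import dataclass, field
from enum import Enum


class Protocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# Protocols used by each CXL device type.
DEVICE_PROTOCOLS = {
    1: {Protocol.IO, Protocol.CACHE},                # Type 1: caching accelerator without exposed memory
    2: {Protocol.IO, Protocol.CACHE, Protocol.MEM},  # Type 2: accelerator with memory, e.g. a GPU
    3: {Protocol.IO, Protocol.MEM},                  # Type 3: memory expander
}


@dataclass
class Device:
    name: str
    device_type: int  # 1, 2, or 3
    port_id: int      # hypothetical fabric-wide destination ID used for routing


@dataclass
class Switch:
    name: str
    supported: set                                  # protocols this (toy) switch can carry
    devices: dict = field(default_factory=dict)     # port_id -> directly attached Device
    downstream: dict = field(default_factory=dict)  # port_id -> child Switch that reaches it

    def attach(self, dev: Device) -> None:
        # Reject a device whose type needs protocols this switch does not support.
        missing = DEVICE_PROTOCOLS[dev.device_type] - self.supported
        if missing:
            raise ValueError(f"{self.name} cannot host {dev.name}: missing "
                             f"{sorted(p.value for p in missing)}")
        self.devices[dev.port_id] = dev

    def reachable(self) -> set:
        return set(self.devices) | set(self.downstream)

    def cascade(self, child: "Switch") -> None:
        # Multi-level switching: record every port ID reachable through the child.
        # (Call after the child's devices are attached; later changes are not propagated.)
        for pid in child.reachable():
            self.downstream[pid] = child

    def route(self, dest: int) -> list:
        # One table lookup per hop until the destination port is directly attached.
        if dest in self.devices:
            return [self.name]
        if dest in self.downstream:
            return [self.name] + self.downstream[dest].route(dest)
        raise KeyError(f"port {dest} unreachable from {self.name}")


ALL = set(Protocol)
gpu_node = Switch("gpu-node-switch", ALL)
mem_node = Switch("memory-node-switch", ALL)
gpu_node.attach(Device("cxl-gpu-0", 2, port_id=1))
mem_node.attach(Device("expander-0", 3, port_id=2))

spine = Switch("spine", ALL)  # second-level switch joining the two nodes
spine.cascade(gpu_node)
spine.cascade(mem_node)
print(spine.route(2))  # ['spine', 'memory-node-switch']
```

In this sketch each switch learns the IDs reachable below it when it is cascaded, so a route is a per-hop lookup rather than a path search. A real CXL fabric involves fabric management and many details this model omits.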

“This is the world’s first AI cluster featuring CXL 3.1 switches and also the world’s first complete system with CXL-GPU, an AI acceleration solution powered by CXL,” a company representative stated.

The CXL 3.1 switch chips will also be available to customers in the second half of 2025.

OCP Global Summit demonstration: Accelerating RAG, a cutting-edge AI application, on the CXL-enabled AI cluster

At the OCP Global Summit, Panmnesia is presenting the world’s first demonstration of RAG acceleration on its CXL-enabled AI cluster. RAG (retrieval-augmented generation) is a next-generation technique for LLMs (large language models) that companies such as OpenAI and Microsoft are currently developing and using. It could become a major application in the industry because it mitigates an inherent limitation of existing LLMs: hallucination.

In brief, RAG searches a database containing a vast amount of data for information related to the user’s input, then uses the search results to improve the accuracy of the LLM’s response.
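The two RAG stages described above (search, then generation with the retrieved context) can be sketched in a few lines. This minimal Python example is an illustration under stated assumptions, not the system Panmnesia demonstrated: it uses a toy bag-of-words “embedding” and brute-force cosine similarity in place of a learned embedding model and vector database, and it stops at building the augmented prompt rather than calling a real LLM. Because the brute-force search scans every stored document, it also hints at why keeping a large corpus resident in memory matters for the search stage.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": bag-of-words term counts (real RAG systems use learned dense vectors).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Search stage: score every document against the query and keep the top k.
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Generation-stage input: retrieved passages are prepended as context for the LLM.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "CXL memory expanders add capacity without new servers",
    "GPUs accelerate LLM inference",
    "The summit took place in San Jose",
]
query = "how do CXL memory expanders add capacity"
print(build_prompt(query, docs, k=1))
```

The resulting prompt would then be passed to an LLM; that call is deliberately left out because it depends on the model and serving stack in use.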

“Our demonstration will show that Panmnesia’s CXL-enabled AI cluster can accelerate all stages of the RAG application, by utilizing various types of CXL devices,” said a Panmnesia representative.

According to the company, the search stage is accelerated by the large memory capacity that CXL provides, while CXL-GPUs accelerate the LLM.

“Our CXL 3.1 switches and high-performance CXL IP allow us to connect dozens or even hundreds of devices, effectively accelerating the latest AI applications like RAG at the data center level,” said a Panmnesia official. “Through our participation in the OCP Global Summit, we aim to strengthen our existing global partnerships and expand our customer base.”

The company will be showcasing its CXL-enabled AI cluster and demonstrations at the 2024 OCP Global Summit, taking place from October 15 to 17 in San Jose, CA, USA.
