Alluxio Enterprise AI V.3.2 Accelerates GPUs Anywhere with 97%+ GPU Utilization

Features new native integration with Python ecosystem and expanded cache management.

This is a Press Release edited by StorageNewsletter.com on July 18, 2024 at 2:01 pm.

Alluxio, Inc. announced the availability of the latest enhancements to its Enterprise AI platform.

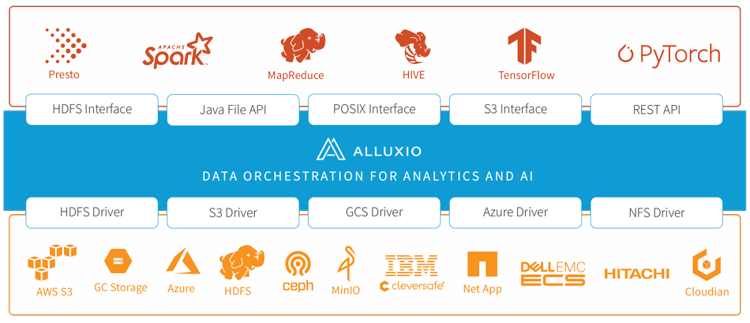

Version 3.2 demonstrates the platform's ability to use GPU resources wherever they are located, delivers improved I/O performance, and achieves end-to-end performance competitive with HPC storage. It also introduces a new Python interface and more sophisticated cache management features. These advancements empower organizations to fully exploit their AI infrastructure, ensuring peak performance, cost-effectiveness, flexibility and manageability.

AI workloads face several challenges. The first is a mismatch between data access speed and GPU computation: slow data loading in frameworks such as Ray, PyTorch and TensorFlow leaves GPUs underutilized. Enterprise AI 3.2 addresses this by improving I/O performance, achieving over 97% GPU utilization. Second, while HPC storage provides good performance, it demands significant infrastructure investment. Enterprise AI 3.2 offers comparable performance using existing data lakes, eliminating the need for additional HPC storage. Finally, managing complex integrations between compute and storage is challenging. The new release simplifies this with a Pythonic filesystem interface, and because it supports POSIX, S3 and Python interfaces, it is easy for different teams to adopt.
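To make the data-loading bottleneck concrete, the sketch below is a deliberately simplified, hypothetical model, not Alluxio's methodology. It assumes prefetching fully overlaps I/O with compute, so in steady state a GPU can only be as busy as the fraction of its input demand that storage delivers. The only figures taken from the release are up to 10 GB/s from a single client and 8 A100 GPUs per node; the per-GPU demand values are invented for illustration, and the function names are hypothetical.

```python
def per_gpu_bandwidth(client_gb_per_s: float, gpus_per_node: int) -> float:
    """Even share of one client's read throughput per GPU, in GB/s."""
    return client_gb_per_s / gpus_per_node


def estimated_utilization(supply_gb_per_s: float, demand_gb_per_s: float) -> float:
    """Steady-state GPU utilization in a simplified, hypothetical model.

    Assumes prefetching fully overlaps I/O with compute, so a GPU is busy
    for min(1, supply / demand) of the time and idle otherwise.
    """
    if demand_gb_per_s <= 0:
        raise ValueError("demand must be positive")
    return min(1.0, supply_gb_per_s / demand_gb_per_s)


# Figures from the release: up to 10 GB/s from one client, 8 A100 GPUs per node.
share = per_gpu_bandwidth(10.0, 8)  # 1.25 GB/s per GPU

# Hypothetical per-GPU input demands in GB/s, for illustration only.
for demand in (0.5, 1.25, 2.5):
    util = estimated_utilization(share, demand)
    print(f"demand {demand:.2f} GB/s -> utilization {util:.0%}")
```

With these inputs the loop reports 100%, 100% and 50%: once per-GPU demand exceeds the 1.25 GB/s share, the GPUs wait on data. Real training also loses time to startup, synchronization and checkpoint pauses, so a measured figure such as the release's 97%+ cannot be derived from this model alone.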

“At Alluxio, our vision is to serve data to all data-driven applications, including the most cutting-edge AI applications,” said Haoyuan Li, founder and CEO. “With our latest Enterprise AI product, we take a significant leap forward in empowering organizations to harness the full potential of their data and AI investments. We are committed to providing cutting-edge solutions that address the evolving challenges in the AI landscape, ensuring our customers stay ahead of the curve and unlock the true value of their data.”

Enterprise AI 3.2 includes the following key features:

- Leverage GPUs anywhere for speed and agility – Enterprise AI 3.2 empowers organizations to run AI workloads wherever GPUs are available, ideal for hybrid and multi-cloud environments. Its intelligent caching and data management bring data closer to GPUs, ensuring efficient utilization even with remote data. The unified namespace simplifies access across storage systems, enabling seamless AI execution in diverse and distributed environments, allowing for scalable AI platforms without data locality constraints.

- Comparable performance to HPC storage – MLPerf benchmarks show Enterprise AI 3.2 matching HPC storage performance while using existing data lake resources. In tests such as BERT and 3D U-Net, Alluxio delivers comparable model training performance across various A100 GPU configurations, demonstrating its scalability and efficiency in real production environments without additional HPC storage infrastructure.

- Higher I/O performance and 97%+ GPU utilization – Enterprise AI 3.2 enhances I/O performance, achieving up to 10 GB/s of throughput and 200,000 IOPS with a single client, and scaling to hundreds of clients. This performance fully saturates 8 A100 GPUs on a single node, with over 97% GPU utilization in large language model training benchmarks. New checkpoint read/write support optimizes the training of recommendation engines and large language models, preventing GPU idle time.

- New filesystem API for Python applications – Version 3.2 introduces the Alluxio Python FileSystem API, an FSSpec implementation, enabling seamless integration with Python applications. This expands Alluxio's interoperability within the Python ecosystem, allowing frameworks like Ray to easily access local and remote storage systems.

- Advanced cache management for efficiency and control – The 3.2 release offers advanced cache management features, providing administrators precise control over data. A new RESTful API facilitates seamless cache management, while an intelligent cache filter optimizes disk usage by caching hot data selectively. The cache free command offers granular control, improving cache efficiency, reducing costs, and enhancing data management flexibility.
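The unified namespace described under "Leverage GPUs anywhere" can be pictured as a mount table that maps logical path prefixes onto underlying storage locations. The sketch below resolves a logical path by longest-prefix match. It illustrates the concept only and is not Alluxio's implementation; the `MountTable` class, the mount points and the bucket and HDFS URIs are all hypothetical.

```python
from typing import Dict, Optional


class MountTable:
    """Toy unified namespace: maps logical path prefixes to storage URIs (hypothetical)."""

    def __init__(self) -> None:
        self._mounts: Dict[str, str] = {}

    def mount(self, logical_prefix: str, storage_uri: str) -> None:
        # Normalize trailing slashes so "/data" and "/data/" are the same mount.
        self._mounts[logical_prefix.rstrip("/") or "/"] = storage_uri.rstrip("/")

    def resolve(self, path: str) -> str:
        """Translate a logical path to a storage URI using the longest matching mount."""
        best: Optional[str] = None
        for prefix in self._mounts:
            # Match whole path components only, so "/trainingX" does not match "/training".
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                if best is None or len(prefix) > len(best):
                    best = prefix
        if best is None:
            raise FileNotFoundError(f"no mount covers {path}")
        suffix = path[len(best):].lstrip("/")
        base = self._mounts[best]
        return f"{base}/{suffix}" if suffix else base


# Hypothetical mounts: datasets in object storage, checkpoints in HDFS.
ns = MountTable()
ns.mount("/training", "s3://example-bucket/datasets")
ns.mount("/training/checkpoints", "hdfs://namenode:8020/ckpt")
print(ns.resolve("/training/imagenet/train-0001.tar"))
# s3://example-bucket/datasets/imagenet/train-0001.tar
print(ns.resolve("/training/checkpoints/step-100.pt"))
# hdfs://namenode:8020/ckpt/step-100.pt
```

Longest-prefix matching lets a more specific mount, here the checkpoint directory, override a broader one, so applications see one path tree even when the bytes live in different storage systems.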
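The cache filter and cache free command in the last feature can be illustrated with a toy cache. An admission filter decides per path whether data is worth keeping on disk, and a free operation releases everything cached under a path prefix. The policy used here is a hypothetical one chosen for illustration: paths matching configured patterns are never cached, and other files are admitted only after a minimum number of reads, with least-recently-used eviction when capacity is exceeded. It is not Alluxio's cache filter configuration, RESTful API or CLI; `ToyCache`, `read`, `free` and `fake_load` are invented names.

```python
from collections import Counter, OrderedDict
from fnmatch import fnmatch
from typing import Callable, Iterable


class ToyCache:
    """LRU cache with a hypothetical admission filter and prefix-based free."""

    def __init__(self, capacity_bytes: int, skip_patterns: Iterable[str] = (), min_hits: int = 2) -> None:
        self.capacity = capacity_bytes
        self.skip_patterns = list(skip_patterns)  # paths never worth caching
        self.min_hits = min_hits                  # reads required before a path counts as hot
        self.hits: Counter = Counter()
        self.entries: "OrderedDict[str, bytes]" = OrderedDict()
        self.used = 0

    def _admit(self, path: str) -> bool:
        # Filter out excluded paths; otherwise cache only paths read often enough.
        if any(fnmatch(path, p) for p in self.skip_patterns):
            return False
        return self.hits[path] >= self.min_hits

    def read(self, path: str, load: Callable[[str], bytes]) -> bytes:
        """Return data for path, serving from cache when possible, else calling load(path)."""
        self.hits[path] += 1
        if path in self.entries:
            self.entries.move_to_end(path)  # mark as most recently used
            return self.entries[path]
        data = load(path)
        if self._admit(path) and len(data) <= self.capacity:
            while self.used + len(data) > self.capacity:
                _, evicted = self.entries.popitem(last=False)  # evict least recently used
                self.used -= len(evicted)
            self.entries[path] = data
            self.used += len(data)
        return data

    def free(self, prefix: str) -> int:
        """Drop cached entries under prefix and return the number of bytes released."""
        base = prefix.rstrip("/")
        victims = [p for p in self.entries if p == base or p.startswith(base + "/")]
        released = sum(len(self.entries.pop(p)) for p in victims)
        self.used -= released
        return released


def fake_load(path: str) -> bytes:
    return b"x" * 100  # stand-in for a 100-byte read from remote storage


cache = ToyCache(capacity_bytes=1000, skip_patterns=["/tmp/*"])
cache.read("/data/a", fake_load)  # first read: not yet hot, not cached
cache.read("/data/a", fake_load)  # second read: admitted
cache.read("/tmp/x", fake_load)   # excluded by the filter
cache.read("/tmp/x", fake_load)   # still excluded
print(sorted(cache.entries))      # ['/data/a']
print(cache.free("/data"))        # 100
```

Requiring repeated reads before admission keeps one-off scans from pushing hot training data out of the disk cache, which is the kind of disk-usage saving a selective cache filter aims for.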

“The latest release of Enterprise AI is a game-changer for our customers, delivering unparalleled performance, flexibility, and ease of use,” said Adit Madan, director, product. “By achieving comparable performance to HPC storage and enabling GPU utilization anywhere, we’re not just solving today’s challenges – we’re future-proofing AI workloads for the next generation of innovations. With the introduction of our Python FileSystem API, Alluxio empowers data scientists and AI engineers to focus on building groundbreaking models without worrying about data access bottlenecks or resource constraints.”

“We have successfully deployed a secure and efficient data lake architecture built on Alluxio. This strategic initiative has significantly enhanced the performance of our compute engines and simplified data engineering workflows, making data processing and analysis seamless and more efficient,” said Hu Zhicheng, data architect, Geely (parent company of Volvo). “We are honored to collaborate with Alluxio in creating an industry-leading data and AI platform, driving the future of data-driven intelligent development.”

Alluxio Enterprise AI version 3.2 is available for download.

Resources:

- Trial version
- Documentation
- GPU utilization rate testing tool
- Blog: What's New In Alluxio Enterprise AI 3.2: GPU Acceleration, Pythonic Filesystem API, Write Checkpointing and More
- Webinar registration link
