Optimal RAID Solution with Xinnor xiRAID and High Density Solidigm QLC SSDs

Solidigm D5-P5336 61.44TB drives were paired with Xinnor xiRAID, a software-based RAID solution for data centers; this solution brief reports the findings in a RAID-5 configuration.

This is a Press Release edited by StorageNewsletter.com on July 15, 2024 at 2:01 pm

By Daniel Landau, senior solutions architect, Xinnor Ltd., and Sarika Mehta, senior storage solutions architect, Solidigm

Data is the fuel that feeds AI engines to generate models. Accessing this data in a fashion that’s both power-efficient and fast enough to keep power-hungry AI deployments running is one of the top challenges that the storage industry is tackling today.

AI deployments are struggling to keep up with the power demands of the processing needed to generate AI models. Any component that can offer relief in the power budget is a key contributor to accelerating model generation and production.

Solidigm QLC SSDs offer read performance equivalent to TLC NAND-based drives while offering higher density and, consequently, a power efficiency advantage. But customers also want added data protection promised by high reliability and the ability to recover in case of a drive failure. To address these concerns, we paired Solidigm D5-P5336 (1) 61.44TB drives with Xinnor xiRAID, (2) a software-based RAID solution for data centers. We are publishing our findings in a RAID-5 configuration in this solution brief.

High-density QLC advantage

The Solidigm D5-P5336 is the industry’s largest-capacity solid-state PCIe drive available today. With capacities up to 61.44TB, these drives enable some customers to compact their hard drive-based data centers by over 3x. Even though hard drives are available in capacities above 20TB, many deployments have to short-stroke the HDDs to achieve the desired performance. That, combined with a superior annual failure rate (AFR), gives Solidigm QLC technology great advantages.

With read speeds that saturate PCIe 4.0 bandwidth, just like their TLC counterparts, and write speeds far beyond what hard drives can deliver, QLC SSDs offer unique advantages for expensive AI data pipelines that need to be kept busy with data. A typical hard drive delivers a few hundred MB/s of read/write bandwidth, and its random IO/s are in the low hundreds. Upgrading infrastructure from hard drives to all flash is a win, especially if you are at risk of starving your AI infrastructure of data due to slower storage speeds. Idling power-hungry GPUs that are waiting on data from hard drives is a cost that deployments should account for.

In addition to performance, QLC offers physical space savings that are unparalleled. A 2U rack server can accommodate anywhere from 24 to 64 of the 61.44TB Solidigm D5-P5336 SSDs, depending on form factor, essentially offering anywhere between 1 and 3.5PBs of storage. In locations where data center real estate is precious and regulations have been put in place to restrict their expansion, the Solidigm D5-P5336 can offer capacity scaling without changing the physical footprint.

xiRAID advantage

Designed to handle the high level of parallelism of NVMe SSDs, xiRAID is a software RAID engine that leverages the AVX (Advanced Vector Extensions) technology available in all modern x86 CPUs and combines it with Xinnor’s innovative lockless data path to provide fast parity calculation with minimal system resources.

The innovative architecture of xiRAID makes it possible to exploit the full performance of NVMe SSDs even in compute-intensive parity RAID implementations like RAID-5, 6 and 7.3 (3 drives for parity). Hardware RAID cards are bound to the 16 lanes of the PCIe bus they are connected to. As such, they can effectively address only up to 4 NVMe SSDs (each with 4 lanes). When more drives are added to the RAID, performance is limited by design. Being a software-only solution, xiRAID is not subject to this limit and, at the same time, it frees up a precious PCIe slot in the server that can be used to connect more network or accelerator cards.
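
As a rough illustration of the lane limit described above, the following sketch compares the theoretical bandwidth of a x16 slot with the combined read bandwidth of several drives. It assumes a PCIe 4.0 slot (16 GT/s per lane with 128b/130b encoding) and ignores protocol overhead, so the numbers are upper bounds; the 7GB/s per-drive figure is the read bandwidth quoted in this brief, and the function names are ours.

```python
# Rough upper bounds only: packet and flow-control overhead is ignored.
PCIE4_GT_PER_S_PER_LANE = 16.0      # PCIe 4.0 raw signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130     # 128b/130b line encoding


def slot_bandwidth_gbs(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 4.0 link, in GB/s."""
    return lanes * PCIE4_GT_PER_S_PER_LANE * ENCODING_EFFICIENCY / 8


def drives_bandwidth_gbs(drives: int, per_drive_gbs: float = 7.0) -> float:
    """Combined sequential read bandwidth of the drives, in GB/s."""
    return drives * per_drive_gbs


card_limit = slot_bandwidth_gbs(16)
for n in (4, 5, 8):
    total = drives_bandwidth_gbs(n)
    verdict = "fits within" if total <= card_limit else "exceeds"
    print(f"{n} drives: {total:.1f} GB/s {verdict} a x16 slot ({card_limit:.1f} GB/s)")
```

Under these assumptions, four drives fit within a single x16 link while five or more do not, which is the bottleneck the paragraph above describes for card-based RAID.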

Unlike other software RAID implementations, xiRAID distributes the load evenly across all the available CPU cores, allowing maximum performance in both normal and degraded mode, with minimal CPU load. xiRAID is also extremely flexible, as it supports any RAID level, any drive capacity and any PCIe generation; additionally, the user can select the optimal strip size to achieve the best performance and minimize write amplification, based on expected workload, RAID level, and RAID size.

Our RAID-5 test results with xiRAID reflect its design goal of making the maximum raw NVMe performance available to the host.

D5-P5336 61.44TB SSD

Performance results

Each Solidigm D5-P5336 61.44TB SSD provides read bandwidth of 7GB/s and write bandwidth of 3GB/s. Conventional RAID deployments with RAID cards would strip away a large portion of this performance. When paired with xiRAID in RAID 5, up to 100% of the SSD’s raw read performance and up to 80% of the raw write performance of 5 drives remains available to the host. In addition to the performance, the host doesn’t have to compromise on reliability or the ability to recover the data in case of a failure.

Our configuration consists of 5 Solidigm D5-P5336 61.44TB drives in a RAID 5 xiRAID volume. We used a strip size of 128KB. For the detailed hardware and test configuration, see Appendix.

| Metric – RAID-5 (4+1) 61.44TB D5-P5336 | Result |
|---|---|
| Read bandwidth (GB/s) | Up to 36.8 |
| Write bandwidth (GB/s) | Up to 12.3 |
| Read KIO/s | Up to 5,175 |
| Write KIO/s | Up to 131 |

Table 1. RAID-5 (4+1) performance

What is remarkable is that xiRAID incurred only minor read-modify-write cycles on these large-indirection-unit SSDs and therefore kept write amplification tightly under control for sequential workloads. This makes it a great fit for write workloads that are sequential in nature and reads that are either random or sequential.

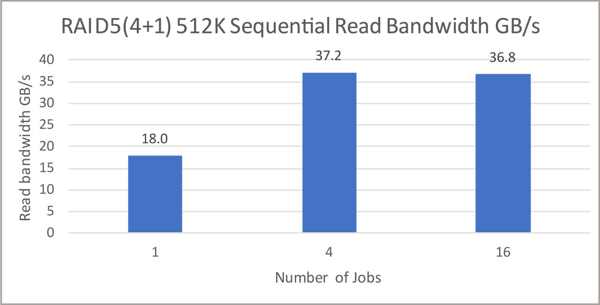

Figure 1. RAID 5 (4+1) sequential read bandwidth

For the sequential read performance with RAID-5 shown in Figure 1, we measured read performance equivalent to that of the raw drive performance for 5×61.44TB Solidigm D5-P5336 drives; essentially a 0% loss with the RAID-5 volume. We used 512KiB block size to capture the read bandwidth. RAID 5 strip size was set to 128KiB.

In AI deployments, having high read bandwidth can help with reading in the data during the data ingest phase to prep it for model development. It can also speed up supplying additional data in a Retrieval Augmented Generation (RAG) model to generate an output using the new data as its context.

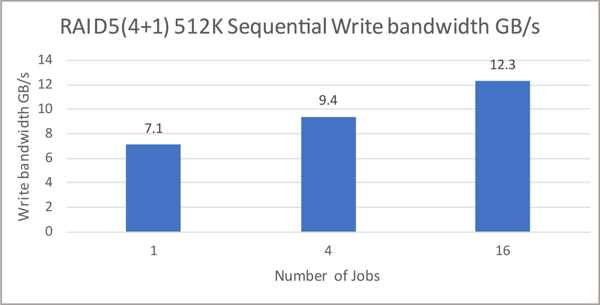

Figure 2. RAID-5 (4+1) sequential write bandwidth (IO size = 512KB)

Sequential write performance for large sequential writes, shown in Figure 2, reached up to 80% of raw drive performance. We used 512KB block size to capture the write bandwidth. RAID-5 strip size was set to 128KB. High write bandwidth can speed up checkpointing during the AI model development phase.

It is critical to select the correct strip size for the workload to obtain maximum performance out of the RAID volume and incur the least amount of write amplification. A poorly matched strip size would significantly reduce the write performance that the host sees.

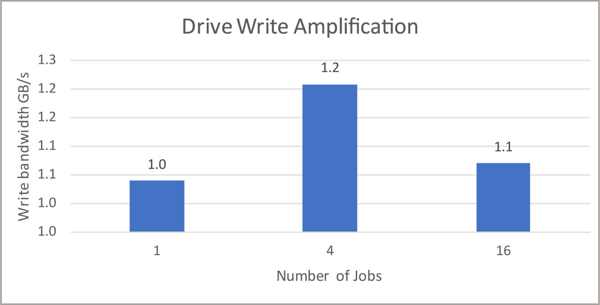

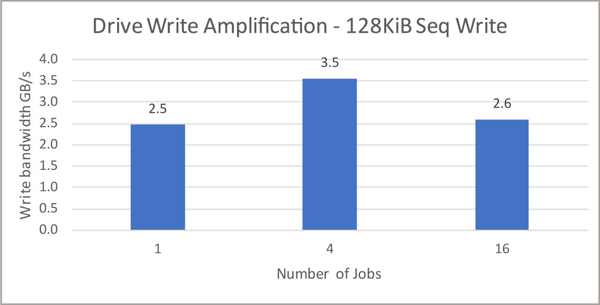

Figure 3. RAID 5 (4+1) write amplification for sequential workload (IO size = 512KB)

For example, selecting a 128KiB strip size for a workload with an IO size of 128KiB would incur a read-modify-write penalty for each write striped across the RAID volume. The penalty is paid in two places: first, when the RAID has to read the old strip and checksum values, modify them with the new values, and write them back; and second, when the IO is unaligned on the drive, causing the drive itself to incur read-modify-writes.
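
The RAID-level read-modify-write can be illustrated with generic RAID-5 XOR parity. This is a minimal sketch of the textbook parity update, not xiRAID’s AVX-based implementation; the 4-byte strips and the helper name `xor_bytes` are purely illustrative.

```python
from functools import reduce


def xor_bytes(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Toy stripe: 4 data strips + 1 parity strip (real strips are 128KiB, not 4 bytes).
data = [bytes([value] * 4) for value in (0x11, 0x22, 0x33, 0x44)]
parity = xor_bytes(*data)

# Partial-stripe update of strip 2: the old strip and the old parity must be
# read first, then the new strip and the new parity are written back.
old_strip = data[2]
new_strip = bytes([0xAB] * 4)
new_parity = xor_bytes(parity, old_strip, new_strip)
data[2] = new_strip

# The incrementally updated parity matches a full recomputation over the stripe.
assert new_parity == xor_bytes(*data)
```

A full-stripe write avoids the two reads entirely, because the parity can be computed from the new data alone.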

An SSD’s write amplification is calculated by capturing its SMART information log before and after the workload. The SMART log provides NAND write and host write info. After calculating NAND write and host write for the workload by subtracting before values from after values, the following formula provides write amplification for the workload.

Write Amplification Factor (WAF) = NAND writes for the workload / Host writes for the workload
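
The calculation above can be sketched as a small helper. The counter names and the sample readings are hypothetical; the actual SMART log fields and their units vary by drive and tool, and both counters must be expressed in the same unit before dividing.

```python
def write_amplification(nand_before: float, nand_after: float,
                        host_before: float, host_after: float) -> float:
    """WAF = NAND writes during the workload / host writes during the workload."""
    nand_written = nand_after - nand_before
    host_written = host_after - host_before
    if host_written <= 0:
        raise ValueError("no host writes recorded between the two snapshots")
    return nand_written / host_written


# Hypothetical readings (same unit for all four values), taken before and
# after a workload run.
waf = write_amplification(nand_before=1000, nand_after=1550,
                          host_before=2000, host_after=2500)
print(f"WAF = {waf:.2f}")
```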

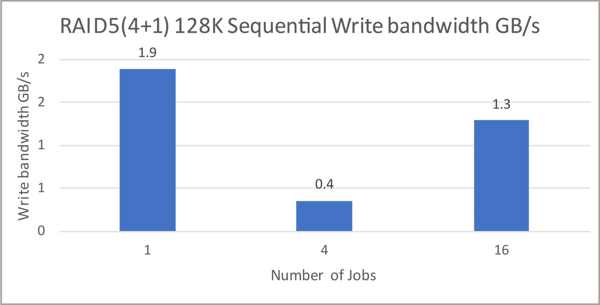

Figure 4 shows the performance impact of an IO size that is not a multiple of the strip size times the number of data drives in the RAID volume. To showcase the effects of unaligned IO, 128KB sequential writes were issued to the 5-drive RAID-5 volume with a strip size of 128KB.

In this scenario, each 128KB IO gets written to one of the strips within a stripe via RAID’s read-modify-write procedure. Along with the data, the checksum strip within the same stripe is rewritten as well, so two of the five NVMe drives receive writes for one 128KB IO: one for the data and one for the checksum. For each subsequent 128KB IO, one strip within the stripe gets updated and the same checksum strip gets rewritten. To fill one stripe, 4 strips are written with 128KB of data each, and the checksum strip within that stripe is updated four times.

In contrast, for IO size of 512 KB being issued to a 5 drive RAID-5 volume with strip size of 128KB, each checksum strip is written exactly once. This reduces computational resources required and write amplification.

During this process, if either strip or checksum is unaligned with the SSD’s page size, the SSD may need to perform a read-modify-write internally. This would cause further degradation in performance and would increase the write amplification.
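
The difference between the two IO sizes can be put into numbers with a simplified model. It assumes aligned sequential IOs that tile the stripe in whole strips and no write merging by the RAID engine, and it ignores any amplification inside the SSD; the function and field names are ours.

```python
def stripe_write_model(io_kib: int, strip_kib: int = 128, data_drives: int = 4) -> dict:
    """Count strip writes needed to fill one stripe with sequential IOs of io_kib."""
    stripe_kib = strip_kib * data_drives
    if io_kib % strip_kib or stripe_kib % io_kib:
        raise ValueError("model only covers IO sizes that tile the stripe in whole strips")
    ios_per_stripe = stripe_kib // io_kib
    parity_writes = ios_per_stripe        # checksum strip rewritten once per IO
    data_writes = data_drives             # every data strip written once
    device_kib = (data_writes + parity_writes) * strip_kib
    return {
        "ios_per_stripe": ios_per_stripe,
        "parity_writes": parity_writes,
        "raid_level_write_ratio": device_kib / stripe_kib,
    }


for io_kib in (128, 512):
    print(io_kib, stripe_write_model(io_kib))
```

In this model, 128KB IOs write the checksum strip four times per stripe and send twice as much data to the drives as the host wrote, while 512KB IOs write it once for a ratio of 1.25.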

Figure 4. RAID-5 (4+1) sequential write bandwidth (IO size = 128KB)

Figure 4 shows only a fraction of the performance equivalent to a single drive’s raw performance, in contrast to the full performance capability seen earlier.

Write amplification captured for the test results from Figure 4 is shown in Figure 5 below and is much higher than that seen in Figure 3. This shows the cascading impact of not optimizing for IO size, strip size, and the number of drives in the RAID volume.

Figure 5. RAID-5 (4+1) write amplification for sequential workload (IO size = 128KB)

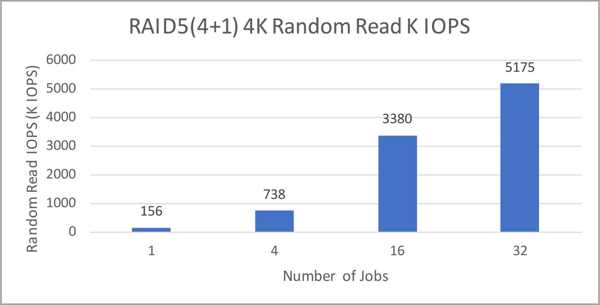

The same concepts apply to random performance. Random read performance with Solidigm D5-P5336 and xiRAID matches 100% of the raw read performance of the SSDs, as shown in Figure 6.

Figure 6. RAID-5 (4+1) random read IO/s

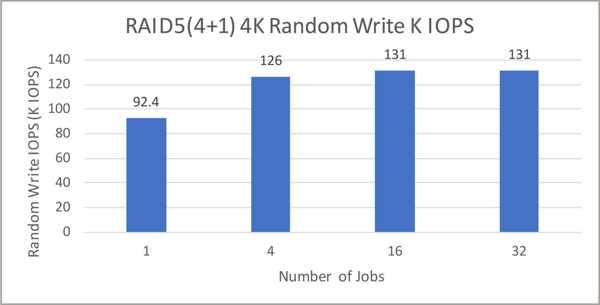

Up to 80% of the raw random write performance of the 5 SSDs is available to the host through the RAID volume. The 20% loss is equivalent to one drive in the volume: one drive’s worth of capacity holds parity, so the host effectively has only four drives to write data to.
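
The 80% figure follows directly from the RAID geometry. A small worked example, using the per-drive write bandwidth of 3GB/s quoted earlier in this brief (a rounded figure, so the result is an estimate rather than a measurement):

```python
def raid5_data_fraction(total_drives: int) -> float:
    """Fraction of a RAID-5 volume that carries host data rather than parity."""
    if total_drives < 3:
        raise ValueError("RAID-5 needs at least 3 drives")
    return (total_drives - 1) / total_drives


drives = 5
per_drive_write_gbs = 3.0                     # rounded figure from this brief
raw_write_gbs = drives * per_drive_write_gbs  # aggregate raw write bandwidth
expected_host_gbs = raw_write_gbs * raid5_data_fraction(drives)

print(f"data fraction: {raid5_data_fraction(drives):.0%}")
print(f"expected host write bandwidth: about {expected_host_gbs:.0f} GB/s")
```

The estimate is close to the measured sequential write bandwidth of up to 12.3GB/s reported in Table 1.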

Figure 7. RAID 5 (4+1) random write IO/s

Conclusion

The Solidigm D5-P5336 with xiRAID offers customers the ability to take advantage of the infrastructure efficiency benefits of the D5-P5336 without compromising on reliability. This solution is an excellent fit for applications that require high sequential bandwidth and excellent random or sequential read performance.

It is critical to apply the strip size for the RAID volume that best fits your workload. Customers with big data analytics, content delivery networks, high performance computing, and AI deployments can leverage these drives in their infrastructure to improve overall efficiency while providing best-in-class density per watt.

Appendix

RAID volume preconditioning steps and configuration

Table 2. System configuration

| Icelake system configuration | |
|---|---|
| System | Manufacturer: Supermicro Product name: SYS-120U-TNR |
| BIOS | Vendor: American Megatrends International, LLC. Version: 1.4 |
| CPU | Intel Xeon Gold 6354 2 x sockets @3GHz, 18 cores/per socket |
| NUMA nodes | 2 |
| DRAM | Total 320G DDR4@3200 MHz |
| OS | Ubuntu 20.04.6 LTS (Focal Fossa) |
| Kernel | 5.4.0-162-generic |
| NAND SSD | 5 x Solidigm D5-P5336 61.44TB, FW Rev: 5CV10081 |
| fio version | 3.36 |

xiRAID Configuration

xiRAID Classic Version 4.0.4 (3)

We used 5×61.44TB P5336 drives in a RAID-5 configuration. All the drives were assigned to the same NUMA node.

Drive Preparation and FIO Configuration

For consistently reproducible results, we preconditioned the RAID volume with fio, (4) following standard SSD preconditioning principles. The following steps were performed to precondition the RAID volume prior to the sequential and random workloads, respectively.

1. Format each drive.

2. Sequentially fill the drives 2 times. See sample fio script to fill the drives.

```ini
[global]
rw=write
bs=512K
iodepth=64
direct=1
ioengine=libaio
group_reporting

[job1]
filename=/dev/nvme0n1

[job2]
filename=/dev/nvme1n1

[job3]
filename=/dev/nvme2n1

[job4]
filename=/dev/nvme3n1

[job5]
filename=/dev/nvme4n1
```

3. Create RAID-5 volume on 5 drives with strip size of 128K.

```shell
xicli raid create -n raid_numa0 -l 5 -d /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1 /dev/nvme4n1 -ss 128
```

4. The RAID initialization step can take up to a few hours to complete, depending on the size of the RAID volume. To check the status, use the following command.

```shell
xicli raid show
```

See sample output in the figure below. The ‘state’ column shows that the volume is initialized, which indicates that initialization is complete. You can still perform IO to the RAID volume before it is fully initialized, but performance is degraded on a partially initialized volume.

5. Run sequential workloads. A sample fio workload config is shown below. For our testing, we varied the number of jobs and the queue depth to sweep through different configurations.

Sequential Write

```ini
[global]
rw=write
bs=512K
iodepth=64
direct=1
ioengine=libaio
runtime=4500
ramp_time=1500
numjobs=16
offset_increment=1%
group_reporting
numa_mem_policy=local

[job1]
numa_cpu_nodes=0
filename=/dev/xi_raid_numa0
```

Sequential Read

```ini
[global]
rw=read
bs=512K
iodepth=64
direct=1
ioengine=libaio
runtime=4500
ramp_time=1500
numjobs=16
offset_increment=1%
group_reporting
numa_mem_policy=local

[job1]
numa_cpu_nodes=0
filename=/dev/xi_raid_numa0
```

6. Random preconditioning is executed prior to running random workloads.

```ini
[global]
rw=randwrite
bs=4K
iodepth=64
direct=1
ioengine=libaio
runtime=57600
numjobs=16
group_reporting

[job1]
numa_cpu_nodes=0
filename=/dev/xi_raid_numa0
```

7. Run random workloads. A sample fio workload config is shown below. For our testing, we varied the number of jobs and the queue depth to sweep through different configurations.

Random Write

```ini
[global]
rw=randwrite
bs=4K
iodepth=64
direct=1
ioengine=libaio
runtime=4500
ramp_time=1500
numjobs=16
group_reporting
numa_mem_policy=local

[job1]
numa_cpu_nodes=0
filename=/dev/xi_raid_numa0
```

Random Read

```ini
[global]
rw=randread
bs=4K
iodepth=64
direct=1
ioengine=libaio
runtime=4500
ramp_time=1500
```
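
The sweep over job count and queue depth mentioned in steps 5 and 7 could be scripted rather than edited by hand. The sketch below generates one fio job file per combination, using a template modeled on the Random Write sample above. The sweep points and file names are hypothetical; the brief does not list the values actually used.

```python
from itertools import product

# Template modeled on the Random Write sample config in this appendix.
TEMPLATE = """[global]
rw={rw}
bs={bs}
iodepth={iodepth}
direct=1
ioengine=libaio
runtime=4500
ramp_time=1500
numjobs={numjobs}
group_reporting
numa_mem_policy=local

[job1]
numa_cpu_nodes=0
filename=/dev/xi_raid_numa0
"""


def sweep_jobfiles(rw, bs, numjobs_values, iodepth_values):
    """Yield (file name, fio job text) for every numjobs x iodepth combination."""
    for numjobs, iodepth in product(numjobs_values, iodepth_values):
        name = f"{rw}_{bs}_j{numjobs}_qd{iodepth}.fio"
        yield name, TEMPLATE.format(rw=rw, bs=bs, numjobs=numjobs, iodepth=iodepth)


# Hypothetical sweep points, chosen only for illustration.
jobs = dict(sweep_jobfiles("randread", "4K",
                           numjobs_values=(4, 16), iodepth_values=(16, 64)))
for name in jobs:
    print(name)
```

Each generated text can be written to a file and passed to fio. For sequential sweeps, the template would also need the `offset_increment=1%` line used in the sequential samples.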

(1) Solidigm D5-P5336 Specification: Solidigm D5-P5336 QLC SSD

(2) Xinnor xiRAID link

(3) Xinnor xiRAID Classic Documentation

(4) FIO https://github.com/axboe/fio
