Radar for Enterprise Object Storage

Outperformers Scality, MinIO and Weka, and 15 more companies analyzed

This is a Press Release edited by StorageNewsletter.com on July 5, 2024 at 2:03 pm.

Published on June 7, 2024, this market report was written by Kirk Ryan and Whitney Walters, analysts at GigaOm.

GigaOm Radar for Enterprise Object Storage v5.0

Executive Summary

As the volume and variety of data continue to grow exponentially, organizations are facing unprecedented challenges in managing and storing their data efficiently. Amid this influx, object storage has emerged as a critical solution for unstructured data, which can include images, videos, and files.

Object storage is a cloud-based system that allows users to store and retrieve data of any size in the form of objects. Unlike traditional block-based storage systems, object storage is optimized for large-scale data repositories, making it ideal for big data, IoT, and cloud-native applications.

Applications today need to store data safely and access it from anywhere, from multiple applications and devices concurrently, while next-gen microservices-based applications often use object storage as their primary data repository. Moreover, HPC applications – like big data analytics and AI – are also big consumers of object storage, and the new flash media types introduced in high-performance object storage systems strengthen the case for the use of object storage in high-performance, high-value workloads. Thus, the key characteristics of enterprise object stores have changed, with much more attention paid to performance, ease of deployment, security, federation capabilities, and multitenancy than in the past. In addition, edge use cases are becoming more prevalent. Tiny object storage installations at the edge, serving small Kubernetes clusters and IoT infrastructure, are surfacing.

As the technology continues to evolve, it will play a pivotal role in shaping the future of enterprise data management. This is especially true in the context of modern object storage platforms that offer additional capabilities for file data via support for NFS, SMB, and other file protocols. This evolution looks set to continue as organizations seek to build their data pipelines on unified storage platforms that simplify the overall management and governance of data at scale.

In today’s digital age, data is the lifeblood of business. Object storage is essential for organizations to unlock the true value of their data, drive innovation, and stay competitive.

CxOs will want to leverage object storage to:

- Enhance customer experiences through personalized analytics and recommendations.

- Drive business innovation through data-driven insights and AI applications.

- Ensure business continuity (BC) and compliance through robust and scalable data storage solutions.

Object storage has revolutionized the way enterprises store, manage, and retrieve their data. As the demands for data storage continue to grow, it has become an essential component of any modern IT infrastructure. Object storage matters to C-level executives and IT professionals alike. C-level executives will appreciate its strategic benefits – increased agility, reduced costs, and improved business resilience. IT professionals will appreciate the technical capabilities and innovations that make object storage a robust and scalable solution for their organizations.

This is our 5th year evaluating the object storage space in the context of our Key Criteria and Radar reports. This report builds on our previous analysis and considers how the market has evolved over the last year.

This Radar report examines 18 of the top object storage solutions and compares offerings vs. the capabilities (table stakes, key features, and emerging features) and nonfunctional requirements (business criteria) outlined in the companion Key Criteria report. Together, these reports provide an overview of the market, identify leading enterprise object storage offerings, and help decision-makers evaluate these solutions so they can make a more informed investment decision.

Market Categories and Deployment Types

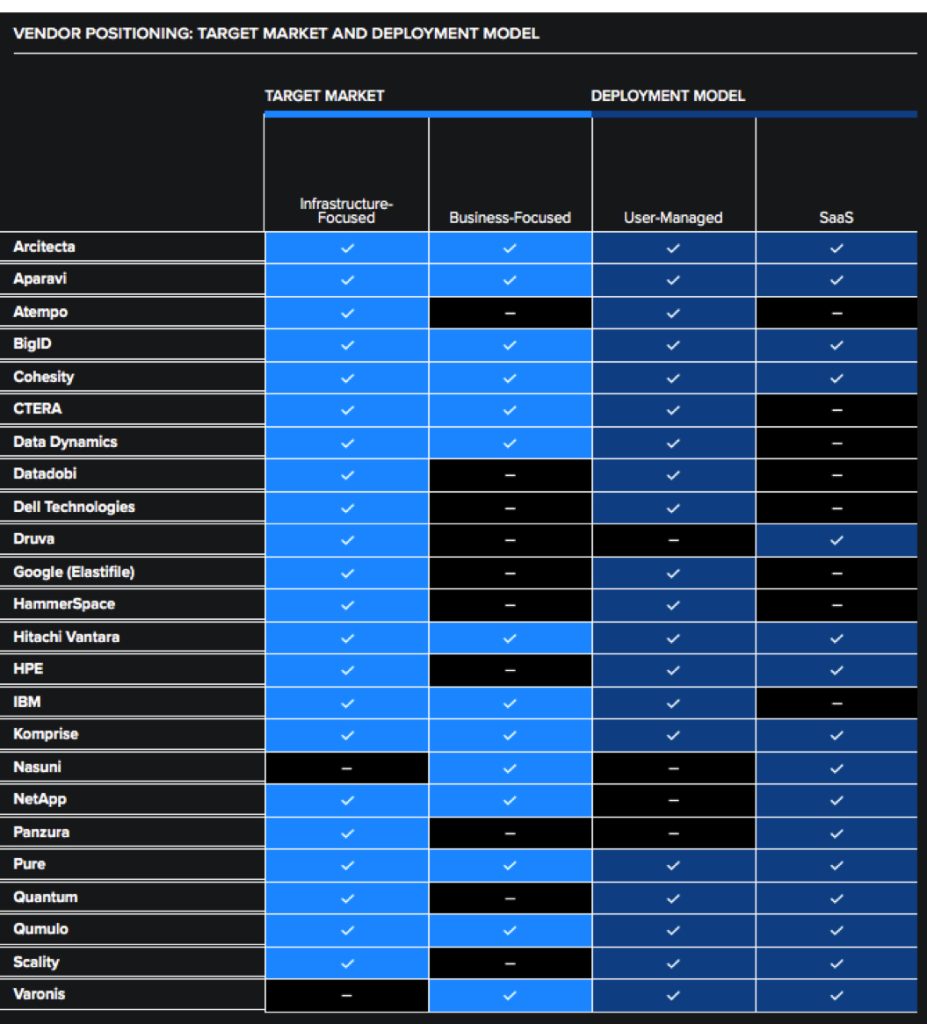

To help prospective customers find the best fit for their use case and business requirements, we assess how well enterprise object storage solutions are designed to serve specific target markets and deployment models (Table 1).

For this report, we recognize the following market segments:

- SMB: In this category, we assess solutions on their ability to meet the needs of organizations ranging from small businesses to medium-sized companies. Also assessed are departmental use cases in large enterprises where ease of use and deployment are more important than extensive management functionality, data mobility, and feature set. Characteristics like ease of use, simple deployment options like preconfigured appliances, and low entry point for basic configurations are among the most important here.

- Large enterprise: Here, offerings are assessed on their ability to support large and business-critical environments. Optimal solutions in this category have a strong focus on flexibility, scalability, ecosystem, multitenancy, and features that improve security.

- CSP: These solutions are designed for multitenancy, integration with third-party solutions, ease of management at scale, and monitoring and chargeback capabilities. In this segment, automation and advanced monitoring are also important because the business needs of the CSP should be taken into account.

- MSP: These solutions are also designed for multitenancy, integration with third-party solutions, ease of management at scale, and monitoring and chargeback capabilities. In this segment, flexibility in the deployment and licensing models is also important because the business needs of the local MSP should be taken into account.

In addition, we recognize the following deployment models:

- Physical appliance: In this category, we include solutions that are sold as fully integrated hardware and software stacks with a simplified deployment process and support.

- Virtual appliance: This refers to a virtualized appliance, either a VM or container that provides the capabilities of a dedicated physical appliance.

- Public cloud image: Similar to a virtualized appliance, a public cloud image is a cloud-native, optimized image that is available from the cloud provider’s marketplace for ease of deployment and commercial consumption, typically under the customer’s existing cloud subscription agreement.

- Software only: This refers to a software solution installed on top of 3rd-party hardware and OS. In this category, we include solutions with hardware compatibility matrices and precertified stacks sold by resellers or directly by hardware vendors.

- SaaS: This describes a deployment where the provider is responsible for managing everything. This includes the underlying storage infrastructure, typically hardware, software, security, and maintenance.

- Self-managed: The customer is responsible and has full control over the storage hardware and software. This could involve on-premises storage or cloud storage options that the customer IT team(s) manage.

Table 1. Vendor Positioning: Target Markets and Deployment Models

Table 1 components are evaluated in a binary yes/no manner and do not factor into a vendor’s designation as a Leader, Challenger, or Entrant on the Radar chart (Figure 1).

“Target market” reflects which use cases each solution is recommended for, not simply whether that group can use it. For example, if an SMB could use a solution but doing so would be cost-prohibitive, that solution would be rated “no” for SMBs.

Decision Criteria Comparison

All solutions included in this Radar report meet the following table stakes – capabilities widely adopted and well implemented in the sector:

- Access controls

- Indexing and search

- Data protection and optimization

- Encryption and security

- Remote and geo-replication

- Scale-out architecture

- S3 and other protocols

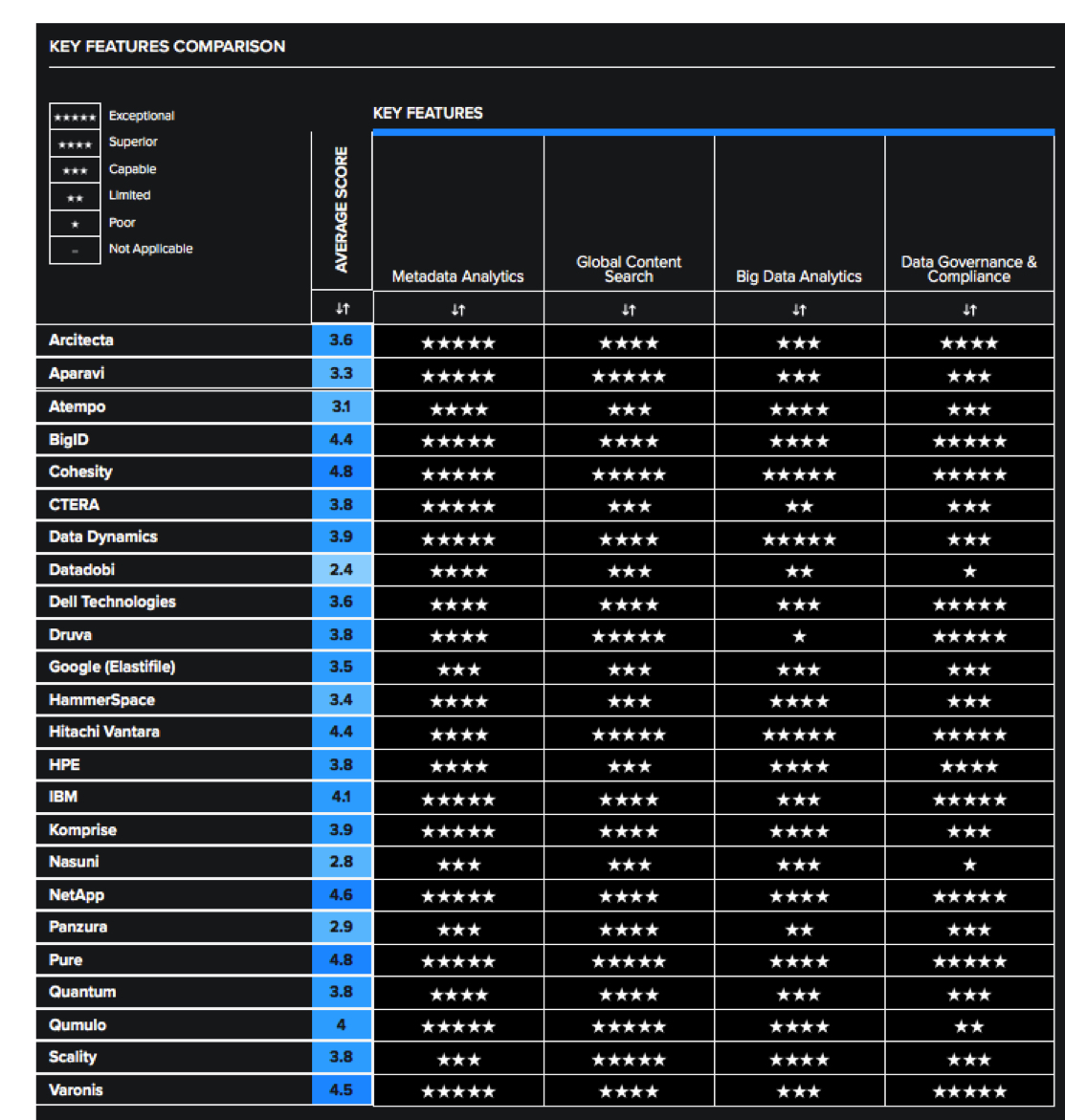

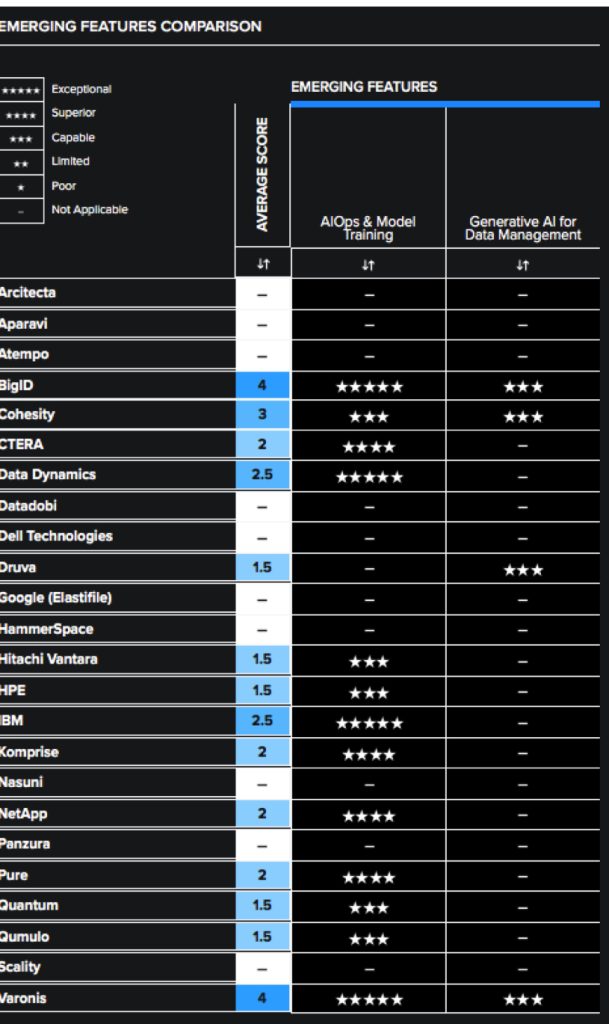

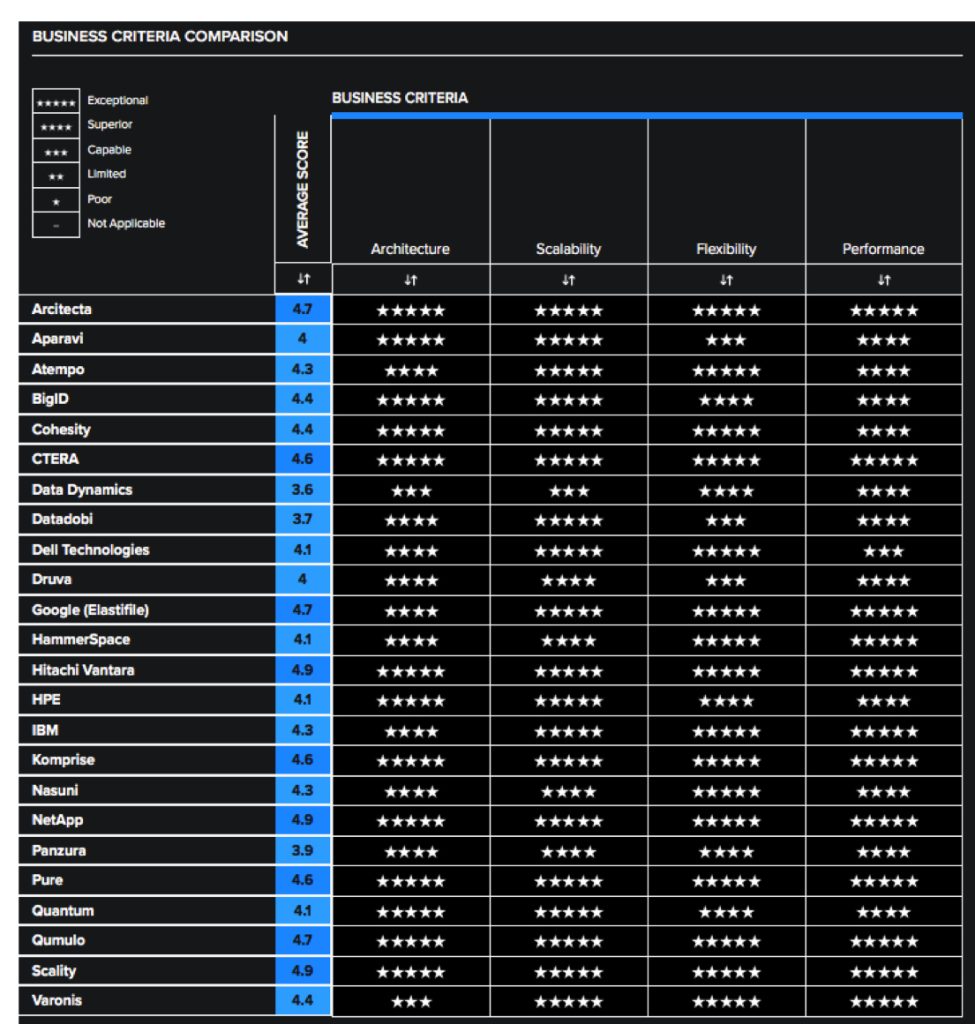

Tables 2, 3, and 4 summarize how each vendor included in this research performs in the areas we consider differentiating and critical in this sector. The objective is to give the reader a snapshot of the technical capabilities of available solutions, define the perimeter of the relevant market space, and gauge the potential impact on the business.

- Key features differentiate solutions, highlighting the primary criteria to be considered when evaluating an enterprise object storage solution.

- Emerging features show how well each vendor is implementing capabilities that are not yet mainstream but are expected to become more widespread and compelling within the next 12 to 18 months.

- Business criteria provide insight into the nonfunctional requirements that factor into a purchase decision and determine a solution’s impact on an organization.

These decision criteria are summarized below. More detailed descriptions can be found in the corresponding report, GigaOm Key Criteria for Evaluating Enterprise Object Storage Solutions.

Key Features

- Kubernetes support: In large-scale storage deployments, containerization with Kubernetes helps manage and orchestrate storage resources efficiently across a distributed environment. This ensures scalability and simplifies storage provisioning.

- Workload optimization: Optimizing storage workloads distributes data across storage tiers based on performance and access needs. This ensures frequently accessed data resides on faster storage, while less critical data can be stored on more economical tiers.

- Auditing: Auditing tracks all data access and storage activities. This provides transparency for regulatory compliance and helps identify suspicious activity to mitigate security risks.

- Versioning: Versioning creates historical snapshots of your data. This allows you to recover previous versions in case of accidental deletion or corruption, ensuring data integrity and facilitating rollbacks.

- Ransomware protection: Storage systems are vulnerable to ransomware attacks. Features like encryption and immutable backups ensure data remains secure and recoverable even if infected by malware. In addition, object lock is available on more advanced platforms. Leaders in this space offer additional protection features such as real-time detection of data exfiltration and anomalies.

- Reporting and analytics: Storage analytics provide insights into storage usage patterns, capacity trends, and performance metrics. This helps identify bottlenecks, optimize resource allocation, and plan for future storage needs.

- Storage optimization: Storing data efficiently reduces costs and improves manageability. Optimization minimizes storage requirements by using techniques like deduplication and compression, allowing more data to be stored without adding more hardware. This translates to lower storage expenses and easier management of the data footprint.

- Public cloud integration: Hybrid cloud storage environments are increasingly common. The integration of storage systems with public clouds allows seamless data storage and management between on-premises and public cloud resources, providing flexibility and scalability.
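The versioning and ransomware protection features listed above can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: `VersionedBucket`, `ObjectVersion`, and `RetentionError` are hypothetical names invented for illustration, not any vendor's API, and real platforms enforce retention durably on the storage nodes rather than in application memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


class RetentionError(Exception):
    """Raised when a delete targets a version that is still locked."""


@dataclass
class ObjectVersion:
    version_id: int
    data: bytes
    retain_until: Optional[datetime] = None  # None means the version is not locked


@dataclass
class VersionedBucket:
    # Hypothetical in-memory model: key -> list of versions, oldest first.
    versions: dict = field(default_factory=dict)
    next_id: int = 1

    def put(self, key, data, retain_days=0, now=None):
        """Store a new version; never overwrite an existing one."""
        now = now or datetime.now(timezone.utc)
        retain_until = now + timedelta(days=retain_days) if retain_days else None
        version = ObjectVersion(self.next_id, data, retain_until)
        self.next_id += 1
        self.versions.setdefault(key, []).append(version)
        return version.version_id

    def get(self, key, version_id=None):
        """Return the latest version, or a specific earlier one for rollback."""
        history = self.versions[key]
        if version_id is None:
            return history[-1].data
        for version in history:
            if version.version_id == version_id:
                return version.data
        raise KeyError(version_id)

    def delete_version(self, key, version_id, now=None):
        """Delete one version unless its retention period has not yet expired."""
        now = now or datetime.now(timezone.utc)
        history = self.versions[key]
        for version in history:
            if version.version_id == version_id:
                if version.retain_until and now < version.retain_until:
                    raise RetentionError(f"{key} v{version_id} is locked")
                history.remove(version)
                return
        raise KeyError(version_id)
```

In this model, an overwrite adds a version instead of destroying the previous one, and a locked version cannot be deleted until its retention date passes, which is the property that keeps a backup recoverable after a malicious or accidental delete.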

Table 2. Key Features Comparison

Emerging Features

- Object content indexing: Indexing helps users to quickly find specific data within objects stored in their system. It lets them search the content inside (text, data) to find the exact object needed instead of forcing them to browse object names or tags, reducing the need to rely on custom metadata and third-party solutions.

- Container Object Storage Interface (COSI): It was cited in previous GigaOm releases as an emerging technology for Kubernetes; however, it has remained in alpha status for many years. It is included in this report for reference only, as using the Kubernetes CSI provider is generally preferred.
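Object content indexing, as described in the first item above, is commonly built on an inverted index that maps each term to the objects containing it. The following is a minimal sketch of that general technique, not a description of how any vendor in this report implements it; `ContentIndex` and `tokenize` are hypothetical names, and the object keys are made up.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ContentIndex:
    """Inverted index: term -> set of object keys whose content has that term."""

    def __init__(self):
        self._postings = defaultdict(set)

    def add(self, key, text):
        """Index the text content of one object under its key."""
        for term in tokenize(text):
            self._postings[term].add(key)

    def search(self, query):
        """Return keys of objects whose content contains every query term."""
        terms = tokenize(query)
        if not terms:
            return set()
        matches = [self._postings.get(term, set()) for term in terms]
        return set.intersection(*matches)
```

A search then looks up terms in the index rather than reading each object, so the user finds an object by what it contains instead of by its name or tags.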

Table 3. Emerging Features Comparison

Business Criteria

- Cost: Costs grow along with storage needs. It’s essential to find a solution that balances upfront costs, ongoing expenses, and operational efficiency to optimize total storage expenditure.

- Performance: Fast data access and retrieval times are vital for business operations. Performance impacts user productivity, application responsiveness, and overall business agility.

- Flexibility: Storage needs can evolve. A flexible solution adapts to changing data types, growth requirements, and integration with new technologies, ensuring the storage system can accommodate future demands.

- Manageability: Easy administration reduces IT workload and costs. Look for features that automate tasks, simplify provisioning, and offer intuitive management tools to streamline storage operations.

- Scalability: Businesses can require more or less storage as they grow or change, and storage solutions should be able to seamlessly scale capacity up or down as needed. Scalability ensures there will be enough storage for growth while avoiding over-provisioning and unnecessary expenses.

- Ecosystem: A strong storage ecosystem provides valuable integrations with other IT tools and services. This fosters data mobility, simplifies workflows, and enhances the overall functionality of your storage environment.

Table 4. Business Criteria Comparison

GigaOm Radar

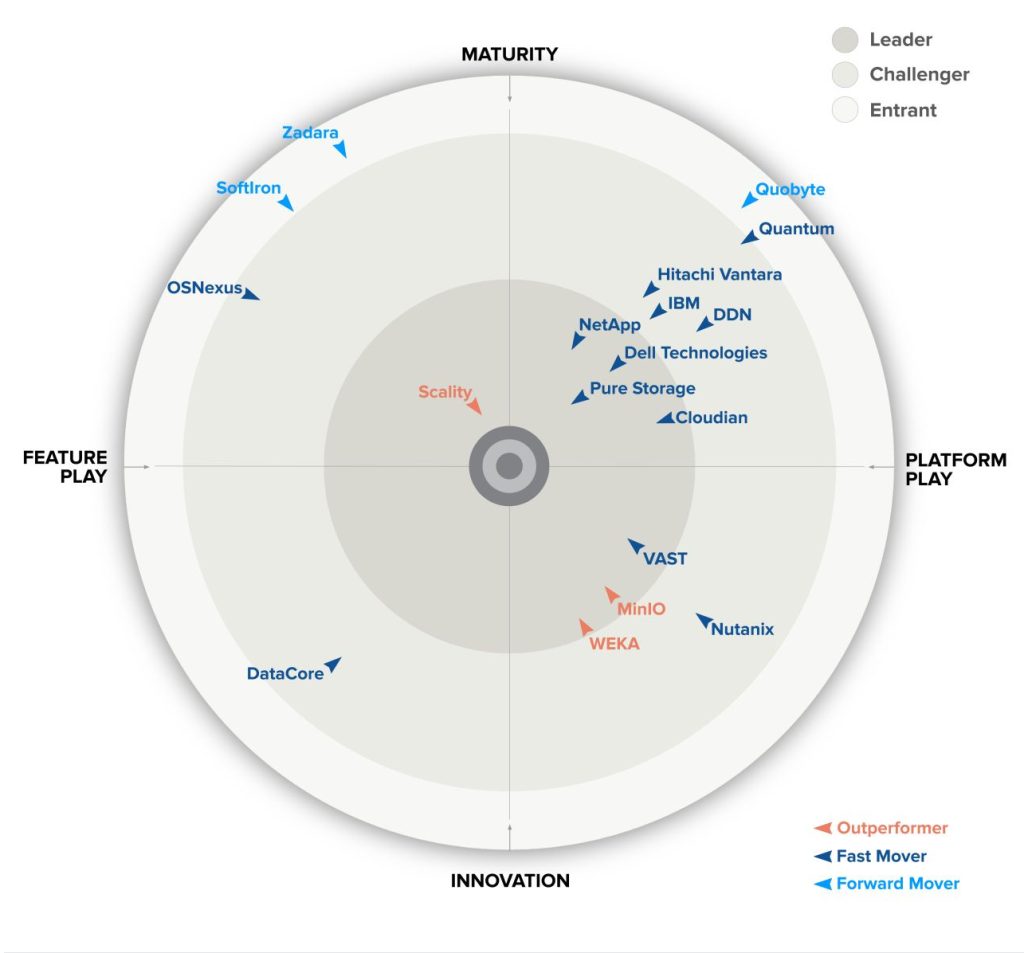

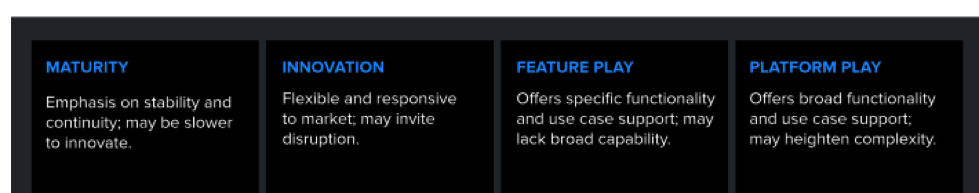

The GigaOm Radar plots vendor solutions across a series of concentric rings, with those set closer to the center judged to be of higher overall value. The chart characterizes each vendor on 2 axes (balancing Maturity vs. Innovation and Feature Play vs. Platform Play) while providing an arrowhead that projects each solution’s evolution over the coming 12 to 18 months.

Figure 1. Radar for Enterprise Object Storage

Vendors have reacted to the growing demand for higher performance and lower latency flash-based solutions by developing support for new flash-based scale-out architectures. Most of these are on the platform side of the Radar chart in Figure 1, with most falling into the Maturity area, although there are a few innovative vendors whose solutions are based on newer architectures such as NVMe-oF.

Leaders and Outperformers in this space are those who have been able to adapt to significant changes in data type and data size, solving the problems of new applications that use lots of small files. This has been a challenge for archive and backup solutions that were optimized for larger files and sequential streams of data, and it is a capability sorely needed by customers looking to consolidate data workflows and pipelines into single storage systems. Mature players have reacted in part by investing heavily into newer platforms, and continue to move toward software-defined deployments and greater support of public cloud resource management. This year’s report sees the entry of WEKA to the Radar as a Challenger in the Innovation/Platform Play quadrant.

Last year’s report noted that AI/ML workloads were becoming more commonplace on object storage. That trend continues this year, with vendors offering integration into common AI/ML applications such as PyTorch and TensorFlow, and many now providing multiprotocol support, including GPUDirect, to simplify and enhance the performance of storage when combined with Nvidia GPUs. The vendors whose solutions have such capabilities are firmly defined as Fast Movers or Outperformers in this year’s Radar.

In reviewing solutions, it’s important to keep in mind that there are no universal “best” or “worst” offerings; there are aspects of every solution that might make it a better or worse fit for specific customer requirements. Prospective customers should consider their current and future needs when comparing solutions and vendor roadmaps.

Solution Insights

Cloudian: HyperStore Object Storage

Solution Overview

Cloudian HyperStore offers a proven and comprehensive object storage platform capable of addressing a growing variety of workloads. The solution offers flash-optimized, HDD-optimized, and hybrid flash deployments. NVMe flash is used for metadata, and an NVMe-based AFA is also available for analytical workloads. Administrators can preconfigure storage policies on a per-bucket basis, with the ability to choose between replication and erasure coding. These policies can be configured based on the criticality of the data.
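The choice between replication and erasure coding is largely a trade-off between usable capacity and the number of failures tolerated. As a rough sketch of the arithmetic (the scheme parameters in the usage note are illustrative assumptions, not Cloudian defaults, and the function names are hypothetical):

```python
def replication_efficiency(copies):
    """Usable fraction of raw capacity when every object is stored `copies` times."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return 1 / copies


def erasure_coding_efficiency(data_fragments, parity_fragments):
    """Usable fraction of raw capacity for a k+m erasure coding scheme."""
    if data_fragments < 1 or parity_fragments < 0:
        raise ValueError("need at least one data fragment and no negative parity")
    return data_fragments / (data_fragments + parity_fragments)


def raw_capacity_needed(usable_tb, efficiency):
    """Raw terabytes required to deliver `usable_tb` at the given efficiency."""
    return usable_tb / efficiency
```

For example, three-way replication yields one third of raw capacity as usable space and survives the loss of two copies, while a 4+2 erasure coding layout yields two thirds and survives the loss of any two fragments. A per-bucket policy lets an administrator pick the scheme that matches the criticality and access pattern of the data.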

HyperStore integrates with Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure to deliver seamless data management across public cloud and hybrid cloud environments, using native data storage formats on each cloud to avoid lock-in. HyperStore is part of the AWS Hybrid Edge program as an Outposts Ready solution. It offers broad deployment options (VMware VCF with Tanzu, Red Hat OpenShift) and supports S3-compatible endpoints as a custom target for object storage on tape, including Spectra Logic BlackPearl and FujiFilm Object Archive (see the GigaOm Sonar Report for Object Storage on Tape).

The product’s usability has always been one of HyperStore’s major advantages. The UI is among the most complete on the market, offering a very user-friendly experience that includes strong multitenancy support, granular management of system resources, advanced security controls, role-based access control (RBAC), and chargeback functionality. Moreover, with Cloudian HyperIQ, it offers observability and analytics that help detect access anomalies through user behavior monitoring.

Cloudian supports a range of security certifications and provides a secure shell for protection against intrusion. HyperIQ is an advanced analytics tool that brings improved system monitoring and observability with better visibility of user and application access patterns, performance, and capacity consumption trends. Cloudian HyperStore also offers good ransomware protection with S3 Object Lock, which is integrated with backup solution providers like Veeam, Rubrik, and Veritas data protection software.

Strengths

The company provides a robust, secure, and proven platform with a large partner ecosystem of certified solutions. It’s easy to deploy and use, with many features designed to simplify system management and reduce system TCO, and it features regular updates. In addition, it has delivered substantial enhancements to existing features since the last Radar report, such as unified object and file management, and support for multifactor authentication (MFA) and external KMIP key management.

Key strengths include bimodal file/S3 object access, application awareness, comprehensive support for public cloud targets such as AWS, GCP, and Azure, including their tiering layers, and a broad solutions ecosystem. Unique across all vendors is Cloudian’s support for AWS Outposts Server and AWS Local Zones, available via AWS Marketplace.

Challenges

HyperStore offers storage optimization in the form of compression, but it lacks the de-dupe capabilities that are now readily available from other vendors. Cloudian also lacks QLC flash support that could help reduce the TCO of flash-based use cases. It is also worth noting that while the solution is positioned as a unified file system, two distinct node types are required: object nodes and file nodes. Object nodes do not provide NFS or SMB access, and conversely, file nodes do not provide S3 access.

Purchase Considerations

The product is available as software-only (with options to run on bare metal or virtual servers) and as a preconfigured appliance. Enterprises usually choose the latter for simplified deployment and support. In addition, an AFA recently joined the list of configurations available to customers. Cloudian offers several subscription-based models licensed per usable gigabyte, in inflexible 1-, 3-, and 5-year commitments.

Cloudian is suited for the following use cases:

- Private cloud storage with sovereign control: It is for organizations such as those in healthcare and finance that need strict data residency and compliance due to regulations or security concerns.

- Hybrid cloud storage: HyperStore enables storage that spans on-premises and public clouds, offering flexibility and scalability.

- Large-scale storage and archive: The solution is suitable for storing massive datasets like backups, archives, and medical records due to its exabyte scalability and manageability features.

- AI and data analytics workloads: HyperStore is compatible with frameworks like PyTorch, TensorFlow, Keras, and R to support AI, and with Splunk, Cribl, and Dremio to support analytics.

- File storage and application integration: The company’s recent focus on file storage functionality makes it applicable for file-based workloads, and it integrates with tools like Veeam for backups.

Radar Chart Overview

Cloudian is a Fast Mover and Leader in this year’s Radar with a mature and stable platform offering robust support for the S3 protocol and a clear emphasis on security.

The breadth of its features enables a range of use cases and applications, and puts the firm in the chart as a platform. The pace of its innovation and feature releases positions the vendor as a fast mover, as it adds features at a rate above its competitors but in a mature manner that ensures the supportability of its solution as a critical enterprise storage platform.

DataCore: Swarm

Solution Overview

Swarm is a scalable, on-premises object storage software solution that enables content archive, protection, and delivery. Its customers can easily scale from a few hundred terabytes to exabytes. With parallel scale-out architecture, metadata-aware search and query capabilities, self-healing automation, and multilayered data protection and resiliency functions, Swarm offers high levels of flexibility, performance, and reliability to store growing datasets. The solution can also write to tape via the S3 Glacier API or via third-party applications like FujiFilm Object Archive.

It has many security and ransomware features, including versioning, immutability via S3 object locking, WORM, legal hold, content integrity seals, audit logs, retention schedules, activity logging and hashing, encryption in flight and at rest, and air-gapping. Its security is even tighter, as there is no traditional file system or login shell to exploit. And with no Linux administration required for storage nodes, the threat of social engineering attacks is reduced. Swarm also provides single sign-on (SSO) and MFA with 3rd-party packages.

The solution can handle small objects and provides good performance, thanks to the efficiency of its internal architecture and its data placement algorithms. It includes active-active replication, erasure coding, and strong consistency for the most demanding workloads. It also provides integration with cloud computing platforms, allowing on-premises datasets to be connected to cloud workflows and enabling backup and DR use cases with other S3-compatible object stores.

Swarm offers a unified management and reporting web console that provides multitenant and content management. Prometheus and Grafana are supported for analytics, and system alerting enhances management.

Strengths

Swarm customers can easily scale from a few hundred terabytes to exabytes. With parallel scale-out architecture, metadata-aware search and query capabilities, self-healing automation, and multilayered data protection and resiliency functions, Swarm offers high levels of flexibility, performance, and reliability to store growing datasets.

Challenges

As the object storage industry widens to encompass high-performance AI-driven workloads, Swarm lags behind other vendors in implementing data efficiencies that help reduce the cost of using the higher-performance flash media that these newer workloads demand. DataCore clearly recognizes the value of these storage optimizations, as they are available on its SANsymphony platform as a combination of adaptive data placement and in-line de-dupe and compression for block-based workloads.

Purchase Considerations

The solution is highly flexible; it can be deployed in bare metal, VMs, or containers, even with small capacities. It claims to achieve 95% usable storage capacity, which can help drive costs down. DataCore provides automated storage and infrastructure management that allows a single administrator to handle a vast amount of petabyte-scale storage. Licensing is based on usable storage capacity across all of a customer’s Swarm instances and includes premier support (24/7) and free product updates. DataCore’s cost model can reduce the cost per terabyte as consumption increases. In addition, there is also a dedicated pricing model for cloud service providers.

Swarm is suited for the following use cases:

- Active archive: Swarm offloads less frequently accessed data from primary storage like NAS to a more cost-effective archive while ensuring on-demand retrieval.

- Immutable backups: Users can create secure, tamper-proof backups that comply with regulations or for disaster recovery purposes. This is especially valuable for industries like healthcare or finance.

- Media archiving: The solution enables the efficient storing and managing of large video and image files with easy access for editing, streaming, or collaboration.

- Long-term data preservation: Data can be preserved for extended periods without the risk of data loss or the need for media migration (future-proof storage).

- Data analytics and delivery: The platform enables storing and managing data for analytics purposes while providing on-demand access for data exploration or delivery.

Radar Chart Overview

DataCore is positioned in the Innovation/Feature Play quadrant. It has added many new features since the last report to better meet customer needs and modern object workloads. The rate at which it has done so over the last year has earned it Fast Mover status. It has added innovative new features such as AI for content management and operations management, as well as fleet management and content caching. These capabilities make it easier to manage the solution at scale and also derive new business insights from greater metadata enrichment (driven by the integration of AI technology).

DDN: Infinia

Solution Overview

Infinia is a software-defined storage platform designed for multicloud and enterprise needs. It offers a modern approach to data management, allowing users to store and manage unstructured data across on-premises, edge, and hybrid cloud environments. Challenges like scalability, multitenancy, and real-time data access are tackled through the platform, which can be deployed on dedicated appliances or in containerized or virtualized environments. With more than 20 years of experience in the storage market, DDN is known for its AI and HPC solutions at scale via its parallel file system offerings. It is worth noting that, within its broad profile of products, the company also offers a specific point solution for AI workloads (A³I), but Infinia is the object storage solution reviewed for this Radar.

Strengths

It includes strong support for Kubernetes and OpenStack environments via the CSI framework, and it offers flexible deployment options. Core to the offering is native multitenancy with QoS and security, including defining fault domains to insulate tenants from hardware failures. In addition, the underlying architecture is built on a scalable KV store, above which DDN has built a SQL structure. This allows complex content metadata queries, including POSIX and S3 tags, to be run across the cluster without the need to open the underlying files themselves.
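The idea of answering metadata queries from a catalog, without opening the objects themselves, can be sketched with a small SQL table of tags. This uses Python's built-in `sqlite3` purely as a stand-in; it is not DDN's engine, and the table name, column names, and sample keys are assumptions made for illustration.

```python
import sqlite3


def build_catalog(rows):
    """Create an in-memory tag catalog from (object_key, tag_name, tag_value) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE object_tags (object_key TEXT, tag_name TEXT, tag_value TEXT)"
    )
    conn.executemany("INSERT INTO object_tags VALUES (?, ?, ?)", rows)
    return conn


def find_objects(conn, required_tags):
    """Return sorted keys of objects carrying every name/value pair in required_tags."""
    if not required_tags:
        return []
    # One OR clause per required tag; an object qualifies only if all of them match.
    clauses = " OR ".join("(tag_name = ? AND tag_value = ?)" for _ in required_tags)
    params = [item for pair in required_tags.items() for item in pair]
    sql = (
        f"SELECT object_key FROM object_tags WHERE {clauses} "
        "GROUP BY object_key HAVING COUNT(DISTINCT tag_name) = ? "
        "ORDER BY object_key"
    )
    return [row[0] for row in conn.execute(sql, params + [len(required_tags)])]
```

The query touches only the tag catalog, so its cost depends on the amount of metadata rather than on the size of the stored objects.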

Challenges

Infinia does not support Azure environments at this time (although additional cloud vendor support is coming in the future). In addition, it lacks native de-dupe capabilities for increased data efficiency when using higher-cost flash-based media, which is becoming a more common feature across the industry.

Purchase Considerations

It is offered with pay-as-you-go consumption-based pricing. However, as it is positioned primarily as a migration target for the public cloud, it may not be suitable where continued usage as a hybrid object storage across public cloud providers is desired as a single namespace.

The solution is licensed on a consumption-based pricing model, where users only pay for the capacity they use. DDN offers global support and a range of partners to support and assist in the deployment of the solution.

Infinia is suited to the following use cases:

- Data management for AI: A key focus of DDN is to help manage data created at the edge and migrate this data to on-premises in order to centralize data for AI use cases.

- Data analytics and delivery: The platform can be used to store and manage data for analytics purposes while providing on-demand access for data exploration or delivery.

Radar Chart Overview

The vendor was not covered in last year’s Radar, but its continued innovation and the release of its new all-flash platform secured its inclusion in this year’s evaluation. Its current feature set, which includes S3, OpenStack, and Kubernetes support, ensures broad suitability for the majority of enterprise workloads, and it is recognized as a Fast Mover in the object storage space.

The firm’s portfolio is built to specialize in servicing specific workloads, and the Infinia platform specifically targets distributed enterprise data use cases that can have a broad range of requirements such as multitenancy, workload optimization, and automation. Because it offers a solution to such a range of requirements, it is recognized as a Platform Play.

Dell Technologies: Dell Object Storage

Solution Overview

Dell Object Storage is an enterprise-grade, high-performance, secure, scale-out object storage solution. It comprises several products, including ECS, PowerScale, and ObjectScale.

ECS is offered in 2 deployment options: a turnkey HDD appliance and a software-only solution known as ObjectScale that allows users to leverage Kubernetes for software-defined and all-flash appliance deployment models. Aimed at enterprises, the solution excels at managing massive volumes of unstructured data like backups, archives, and medical records. In addition, PowerScale all-flash NAS storage offers the performance and capacity to handle modern AI and analytics workloads, although this is typically for customers with primarily file workloads with some S3 workloads.

Object Storage offers cloud-like scalability reaching the exabyte level, strong security with cyber-resilience measures, and global namespace management for easy data access across geographically distributed sites. It integrates with various applications through S3 compatibility and offers multiprotocol support for flexibility. This solution caters to organizations seeking secure, scalable object storage for managing large datasets on-premises.

Strengths

Object Storage solutions benefit from a global distribution and support network that is often a critical requirement for large enterprises with a global footprint. It is important to recognize that since last year’s Radar, ECS has been rewritten (as ObjectScale) to remove the reliance on dedicated appliances and allow a wider range of deployment options such as virtualized and containerized environments. This has been achieved by the containerization of the OS and is offered as an in-place upgrade to existing customers.

Challenges

Dell’s flexibility comes via 3 different product platforms. While this can be considered a strength from a Feature Play perspective for specific use cases, it can complicate the purchasing process for customers, as there is overlap among these offerings. The overall trend in enterprise object storage is an increase in newer workloads primarily driven by the explosive use of generative AI solutions; therefore, customers are seeking flexible tools that can manage all of these workloads under a single namespace. One other consideration is that deployment to smaller edge environments or native clouds continues to be challenging for customers, although enhancements to this process are planned for delivery this year.

Purchase Considerations

Dell offers 3 object storage solutions, each with its own target use cases. PowerScale offers multiprotocol access and is targeted toward AI use cases. ECS is the most mature offering and targets on-premises S3-based long-term archive and big data analytics workloads. Finally, ObjectScale is a software-defined offering targeting S3 and Kubernetes environments. ObjectScale is also offered as an all-flash NVMe appliance with more appliance models and configurations planned for the future.

Object Storage is suited for the following use cases:

- Analytics: Dell ECS offers scalable, high-performance storage ideal for storing and analyzing large datasets.

- S3 service provider: It provides a compatible S3 interface, allowing easy integration for public or private cloud S3 object storage services and extensive automation for service provider usage.

- Backup and archive: With the EX5000 and its focus on data protection and scalability, it is a good fit for efficiently storing and managing backups and archives.

- AI/ML: The new ECS EXF900 and ObjectScale XF960 systems offer all-flash performance.

- Video surveillance: ECS scales to accommodate the high storage capacity and data throughput needed for video surveillance footage.

Radar Chart Overview

The existing features in the vendor’s solution have matured, and the object storage offering has been developed to provide more deployment flexibility (through containerization). The company’s broad range of functionality ensures its suitability for a range of workloads, making it a Leader in the Maturity/Platform Play quadrant. The firm also maintains a Fast Mover status, as it ships a major release regularly (every four months) and also offers minor enhancements and fixes in the interim periods.

Hitachi Vantara: HCP

Solution Overview

The company is a global technology provider that delivers a broad portfolio of data solutions. Hitachi Content Platform (HCP) Object Storage is its enterprise-grade object storage solution and is the focus of this report. It is a single product that provides scalable, secure, and self-healing object storage for a wide range of data workloads.

It offers a secure and scalable solution for managing massive datasets on-premises or in a hybrid cloud environment. It caters to various needs, from storing backups and archives to supporting AI and data analytics workloads. The platform prioritizes data protection with features like encryption and redundancy. It also boasts high-performance access for fast data retrieval and integration with existing applications through S3 object access.

Strengths

HCP is a robust and secure solution with an outstanding management ecosystem and flexible commercial models. The focus on hybrid cloud allows customers to manage their data across on-premises and public cloud storage with the HCP platform natively. In addition to the above, HCP also offers strong data management capabilities in the form of automation, tiering, and analytics services. These combine to help customers better manage the storage processes and also help to extract additional value from their data.

Security and compliance are particularly important, and HCP prioritizes meeting these requirements with features such as encryption, access control, and data immutability that can also help mitigate the risks associated with ransomware attacks and other cyber threats. Finally, HCP integrates with various applications and serverless services, including native integration with public clouds.

Over the last 12 months, it has expanded its implementation of the S3 API to include Object Lock, Overwrite, and additional S3 Select data formats. Object Lock is useful in regulatory use cases, and the extended S3 Select data format support (JSON, Parquet) makes data on HCP more accessible to AI and ML use cases.
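To make the regulatory value of Object Lock concrete, the following is a minimal sketch of the retention rule as it is documented for the Amazon S3 API: a locked object version cannot be deleted before its retain-until date, except that governance mode allows specially permitted users to bypass the lock. It is a plain Python model, not HCP code; the names `RetentionMode` and `can_delete_version` are hypothetical, and how any particular vendor enforces these modes is not covered here.

```python
from datetime import datetime, timezone
from enum import Enum


class RetentionMode(Enum):
    """The two retention modes defined by S3 Object Lock."""
    GOVERNANCE = "GOVERNANCE"
    COMPLIANCE = "COMPLIANCE"


def can_delete_version(mode, retain_until, now, bypass_governance=False):
    """Return True if a locked object version may be deleted at `now`.

    Hypothetical helper that models the documented S3 semantics only.
    """
    # Once the retention period has passed, the lock no longer applies.
    if now >= retain_until:
        return True
    # Governance mode can be overridden by a caller holding bypass permission.
    if mode is RetentionMode.GOVERNANCE and bypass_governance:
        return True
    # Compliance mode cannot be overridden by anyone before expiry.
    return False


# Illustrative dates only.
retain_until = datetime(2030, 1, 1, tzinfo=timezone.utc)
during_retention = datetime(2025, 6, 1, tzinfo=timezone.utc)
after_retention = datetime(2030, 6, 1, tzinfo=timezone.utc)
```

In this model, a compliance-mode version stays undeletable until the date passes regardless of who asks, which is the property that regulated archives typically rely on.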

Challenges

HCP currently offers less deployment flexibility compared to its peers due to the absence of a containerized software-defined deployment option, instead offering either an appliance or virtualized IaaS options. Hitachi has recognized the demand for this by allowing customers to use their own preferred hardware appliances with HCP. HCP can have a higher up-front cost compared to some competing options. This may be a concern for budget-conscious customers or for smaller edge-based deployments.

It is worth noting that the vendor has one major and one minor release for HCP per year, which is a slower cadence than its peers. This could be important for those types of early adopters who like to benefit from the latest innovations.

Purchase Considerations

Advanced features, such as real-time data classification or moving data based on value and workflow, are typically reliant on the use of Hitachi Content Intelligence (HCI). They also require additional management overhead and resources. If these features are important to your scenario, these are additional costs to keep in mind.

HCP offers a variety of commercial purchase options: it can be purchased directly, as a managed service (EverFlex) from the company or its partners, or as STaaS via 15 partner cloud service providers, which allows the solution to be adopted by a wide variety of organizations and budgets.

HCP is well suited to the following use cases:

- Private hybrid cloud: HCP excels in hybrid cloud environments due to its ability to seamlessly manage data across on-premises and public cloud storage. This provides flexibility and cost optimization as data storage needs evolve.

- Cloud storage gateway: HCP can integrate with cloud storage gateways to provide secure and scalable object storage for data offloaded to the cloud.

- Analytics: HCP’s scalability and performance make it suitable for storing and analyzing large datasets used in analytics workflows. Additionally, built-in data management features can help automate data tiering for optimized analytics performance.

- Edge and IoT: HCP can be deployed at the edge to collect and store data from IoT devices. Its scalability allows for handling potential influxes of data, and its ability to tier data to central storage as needed ensures efficient use of storage resources.

Radar Chart Overview

The vendor is positioned in the Maturity/Platform Play quadrant. It continues to enhance the features and capabilities of its platforms with an emphasis on easing the management of hybrid cloud scenarios. These enhancements benefit multitenant deployments.

IBM: Storage Ceph

Solution Overview

Storage Ceph is an enterprise-grade object, block, and file storage solution built on the open source Ceph platform. This translates to software-defined flexibility and lower costs compared to proprietary solutions. IBM enhances Ceph with its own management tools and enterprise support, ensuring scalability, reliability, and data security. It caters to various storage needs, from traditional file storage to modern workloads like AI and analytics, all within a single platform. This unified approach simplifies data management and allows organizations to leverage Ceph’s inherent scalability to handle massive datasets. Ceph itself is an open source SDS platform, providing the core building blocks for Storage Ceph. The solution offers the key enterprise features of encryption and access control, which provide control and protection of the data, while data immutability adds protection against ransomware attacks that seek to encrypt or maliciously modify customer data.

Strengths

Storage Ceph is a robust solution with massive scalability, strong integration with Kubernetes and Red Hat OpenShift, and a compelling edge and serverless feature set. Integration with OpenStack also makes Ceph appealing to MSPs and CSPs. In addition, it offers native support for IBM Object Cloud. The company has invested heavily in enhancing the manageability and reporting with several improvements to the Ceph Dashboard and provides web-based UIs and APIs for provisioning, monitoring, and automation.

The solution offers high availability through a self-healing architecture that is able to cope with hardware failures and maintain continuous operations, which is an important consideration when scaling to larger capacities. The firm offers the ability to use Storage Ceph as a supported architecture to build a lakehouse for IBM watsonx.data. Finally, additional data protection features such as Object Lock, WORM, FIPS 140-2, key management, and server-side encryption ensure the solution is suitable for environments with more stringent security requirements.

Challenges

Although support for Azure and Amazon S3 has been added as part of lifecycle management, this is unidirectional and data can’t be transitioned back from the remote zone. This limitation results in a recommendation to use this feature only for archiving. A Ceph Object Gateway is required for this scenario, adding further architectural overhead. Note that NFS capabilities on top of CephFS volumes were temporarily removed, but these were reintroduced in the latest release, Ceph 7. In addition, the vendor offers a large portfolio of solutions, such as IBM Cloud Object Storage and IBM Storage Scale, which also offer object storage capabilities for on-premises or AI and big data workloads.
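As an illustration of what an archive-only transition looks like in practice, the sketch below builds a lifecycle configuration in the shape used by the S3 lifecycle API (`Rules`, `Filter`, `Transitions`). The helper name, the `logs/` prefix, the 90-day threshold, and the `CLOUDTIER` storage class are all hypothetical; how the remote tier is defined on the storage side is not shown, and this is not taken from IBM documentation.

```python
import json


def archive_transition_rule(prefix, days, storage_class):
    """Build an S3-style lifecycle configuration with one transition rule.

    Hypothetical helper: objects under `prefix` move to `storage_class`
    once they are `days` old. There is no rule to bring them back, which
    mirrors the one-way, archive-only behavior described above.
    """
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/') or 'all'}",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": days, "StorageClass": storage_class},
                ],
            }
        ]
    }


# "CLOUDTIER" is a placeholder name for a remote (cloud) storage class.
config = archive_transition_rule("logs/", 90, "CLOUDTIER")
print(json.dumps(config, indent=2))
```

Because the rule only ever moves data outward, it suits data that is unlikely to be read again from the primary cluster.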

Purchase Considerations

It offers a variety of commercial models, but a higher level of management expertise is required to operate Ceph in comparison to other vendors in this report, which may increase reliance on a partner or professional services. Storage Ceph may be purchased via its partner network or directly with IBM via Global Financing to fit specific Opex or Capex models. The solution is optimized for large-scale on-premises deployments, and therefore, customers seeking smaller edge or native cloud deployments may want to explore the other solutions in the IBM portfolio.

IBM Storage Ceph is for:

- Large-scale data lakes for AI/ML, with massive scalability, support for billions of objects, and native integration as a lakehouse for watsonx.data.

- Containerization, supported via tight integration with Red Hat OpenShift.

- Disaster recovery, backup, and archive, due to the cost-effective design and concurrent multitenant architecture that allow service providers to build out object-as-a-service offerings.

- Cloud native applications: The comprehensive S3 support ensures modern applications are compatible with the solution, with features such as AWS Security Token Service (STS) support, SSE, and D3N query acceleration, plus native support for Parquet files.

Radar Chart Overview

IBM offers a mature and stable platform with global support, putting it in the Maturity/Platform Play quadrant. It continues to keep pace with industry innovation and releases product updates quarterly. Due to the reliance on Ceph, more innovative features such as deduplication are not available, but it is expected that IBM will continue to move forward, earning it Fast Mover status.

MinIO

Solution Overview

MinIO stands out as a high-performance, open source object storage server designed for private cloud infrastructure. Built for scalability and cost-efficiency, it offers an alternative to cloud-based storage solutions. It prioritizes compatibility with Amazon S3 APIs, allowing seamless integration with existing tools and applications built for S3. This familiar interface simplifies adoption and minimizes disruption to existing workflows. Designed for deployment flexibility, MinIO is offered as a single software-defined storage platform that runs on industry-standard hardware as a lightweight container or VM, making it easy to manage and integrate into existing infrastructure. It caters to organizations seeking a secure, scalable, and cost-effective object storage solution for private cloud deployments.

Strengths

MinIO is widely used as the basis of many commercial object stores, offering simple deployment and comprehensive Kubernetes integration. It is also capable of extremely high performance. Integration and published use cases with leading in-demand systems such as Dremio, Kubernetes, LangServe, SingleStore, and Weaviate show that customers can quickly and easily learn best practices to ensure successful deployments of these fast-moving technologies.

One key strength of MinIO is that it offers a community version of its storage platform, which helps customers with limited or POC-sized budgets and allows them to progress to enterprise support as the projects mature and grow.

One notable difference between MinIO and the AWS S3 implementation is that the vendor is able to support larger file sizes of up to 50TB per object in comparison to the AWS limit of 5TB. This may provide an advantage with larger video, backup, or container images.

Challenges

The firm doesn’t offer de-dupe capabilities, a feature that could help increase efficiency when using more costly high-performance flash-based media and is increasingly adopted by its peers as customers seek to decrease the cost of flash for high-performance workloads such as AI and ML. MinIO is designed for distributed object workloads offering robust S3 compatibility; however, unlike its competing peers, it does not offer additional protocols such as file protocols. This is important for organizations that would prefer to consolidate all storage to a single vendor platform.

Purchase Considerations

There are 2 enterprise licenses available: Lite is targeted at deployments of less than 1PB, and Plus is for those greater than 1PB. Lite is mainly aimed at customer-managed deployments, whereas Plus offers more robust support with strict SLAs and access to expert support. This also includes a unique Panic Button concept where users can request immediate 24/7 support from MinIO engineers directly from the console itself. Finally, MinIO offers all Plus Enterprise Object Store customers an annual review of their architecture, performance, and security to ensure best practices are implemented for a consistent experience. MinIO does not operate a hosted object storage service.

MinIO is well suited for the following use cases:

- Hybrid cloud storage: It is supported in public cloud (via EKS, AKS, or GKE), multicloud edge deployments on MicroK8s or K3s, and private cloud via VMware Tanzu, Red Hat OpenShift, HPE Ezmeral, and Rancher.

- Backup and archive: Partnerships with Veeam and Commvault ensure compatibility and support for these mission-critical enterprise backup and restore use cases.

- Data lake and HDFS migrations: MinIO’s performance and ease of scale and manageability make it an ideal solution for customers looking to migrate from Hadoop HDFS.

- AI/ML: Through tight integration with TensorFlow and Kubeflow, the vendor can deliver linear performance scaling for training and inference workloads.

- Analytics: It has partnered with Snowflake and Splunk to offer supported and validated deployments using MinIO as the scalable object storage for these solutions. Performance is also published with Spark, Presto, Flink, and other modern analytic workloads.

Radar Chart Overview

MinIO maintains its position as an Outperformer in this year’s Radar due to continued innovation and release of features that cater to a broad range of user requirements, including high-performance-demanding GenAI and comprehensive integration into AI frameworks, enabling out-of-the-box usage of the solution. This combination of factors places MinIO as a Leader in the Innovation/Platform quadrant, as it continues to add innovative value-add features and maintains broad compatibility for the ever-growing use case landscape of enterprise object storage.

NetApp: StorageGRID

Solution Overview

StorageGRID is the company’s primary distributed object storage solution designed for scalability and geographic flexibility. It excels at handling various data types, including archives, backups, and rich media files. It boasts features like self-healing replication for data redundancy, policy-driven automation for simplified management, and integration with cloud storage for a hybrid approach. This solution caters to organizations with geographically distributed data centers as well as those requiring a scalable object storage solution for diverse data workloads.

Strengths

StorageGRID is a balanced and secure product that delivers its best when deployed with its integrated load balancer for added multitenancy and QoS features. It’s easy to use, with granular information lifecycle management policies, outstanding implementation of the S3 Select API, and support for serverless workloads. In addition, support for QLC flash addresses the need for dense flash storage in the data center. The product integrates with a comprehensive range of validated solutions, such as Dremio, Snowflake, Veeam, Commvault, Splunk, and Kafka.

A major strength of StorageGRID is comprehensive support for several encryption techniques, including KMS, encrypted drives, grid-wide encryption, S3 bucket encryption, S3 SSE, and SSE-C (per-object encryption), which helps it meet federal information processing standards.

Challenges

In the rare event of a catastrophic reconstruction event, such as the loss of an entire node or volume, a cluster-wide rebuild may be slower due to the localized hardware RAID (which, conversely, increases performance for more common disk failures local to a node). A new architecture for cluster-wide rebuilds is expected later this year. In addition, the lack of a CSI provider for StorageGRID may be off-putting for those considering Kubernetes-based scenarios.

Purchase Considerations

Note that NetApp also provides S3 object capabilities via its unified ONTAP platform, which offers multiprotocol access and is able to use StorageGRID as a storage tier. StorageGRID can be purchased directly or via the firm’s partner network and is licensed on provisioned capacity. A variety of finance options are available to meet specific Opex or Capex customer requirements.

StorageGRID excels at handling mixed-use workloads, including large amounts of deletions, which can cause issues for other solutions.

Examples of ideal workloads for the product are:

- Analytics and data lakes: The performance, durability, and scalability of the solution ensure that data lake management is simplified and future requirements can be met.

- Data protection: It has been certified for use with several leading backup partners such as Commvault, Veeam, Veritas, and Rubrik. In addition, it offers ransomware protection through the use of native immutable snapshots and S3 Object Lock.

- Media workflows: The scalability and performance of StorageGRID, combined with simplified management, make it ideal for media and entertainment use cases that require throughput and comprehensive metadata management.

Radar Chart Overview

NetApp continues to be a Leader Y/Y, offering a robust feature set that has benefitted from notable enhancements over the last 12 months. It is worth noting that, compared to last year, the company has moved toward the Feature Play side of the Radar due to the limited availability of non-object protocols (SMB/NFS) relative to other vendors in this space. Instead, these capabilities are offered in conjunction with the ONTAP platforms.

Nutanix: Objects Storage (as part of Nutanix Unified Storage – NUS)

Solution Overview

The company offers a scalable and simple-to-manage software-defined object storage service that supports various data workloads, from backup and archiving to object-based applications, all within a unified cloud platform. This centralized approach streamlines data management and caters to organizations seeking a scalable and secure object storage solution integrated with their existing Nutanix environment.

Objects Storage delivers extremely fast, secure, S3-compatible object storage at massive scale to hybrid multicloud environments, enabling the use of the object store as a data repository for workloads ranging from backups and archives to newer data-intensive, high-performance applications such as analytics and AI/ML.

It prioritizes performance, scalability, and cloud-native support with the addition of new capabilities and feature sets. The Nutanix object store integrates with analytics platforms and query engines at the edge, core, and cloud.

Strengths

The firm has developed several key features over the last 12 months, including S3 replication to cloud, which helps use public cloud storage to lower the overall TCO, object browser enhancements, and support for dense NVMe drives, providing higher performance workloads at a higher density footprint. Since the last Radar report, Objects Storage has also added ransomware protection capabilities. A native browser, called Objects Browser, enables direct interaction with object contents, including the ability to run SQL queries against semistructured object data.

Challenges

When scaling Nutanix, it’s important to take into consideration additional caching and locality when planning the system expansion. The architecture relies on the cache to provide the best performance, and this can be a limiting factor that increases the number of nodes required to reach certain performance workloads. In addition, all-flash storage density is an area that Nutanix should focus continued development on to address increasing customer data center efficiency requirements.

Purchase Considerations

Objects Storage can start smaller than many competing solutions (10TB) and grow to more than 2PB, allowing smaller organizations or budget-restricted business units to benefit from enterprise-class storage. This is offered as part of the Nutanix unified license and is priced per usable terabyte. The storage license also allows customers to use the SMB, NFS, and iSCSI protocols. Nutanix offers 2 licenses: Starter for low-cost capacity drive workloads such as backup, archive, and video surveillance, and Pro for higher performance, multicloud, and advanced replication use cases. Nutanix Objects integrates well with the company’s other products, but its integration with 3rd-party applications is less comprehensive than its peers (for example, TensorFlow and AI/ML workloads).

Objects Storage supports data-intensive workloads that require high performance, such as big data analytics, data lakes, cloud-native applications, AI, ML, and deep learning (DL). It works with popular big data applications such as Vertica, Snowflake, Splunk SmartStore, and Confluent Kafka. In addition, it is suited for backup and archive use cases due to the built-in object versioning capabilities.

Radar Chart Overview

Nutanix has innovated this year with a global namespace, which provides federation capabilities, and has made further enhancements to analytics, reporting, auditing, and ransomware protection. This established the company as a Fast Mover that is innovating by combining compute, storage, and management into a full-stack solution for a broad range of applications.

OSNexus: QuantaStor

Solution Overview

OSNexus QuantaStor offers software-defined object storage designed for scalability and security on-premises. It tackles massive datasets efficiently using erasure coding for data redundancy. Security features like encryption and access controls ensure data privacy. This cost-effective solution positions itself as an alternative to public cloud storage for organizations managing large archives or media libraries, or requiring long-term data preservation. OSNexus also offers physical appliances through its partnership with Seagate for customers that require dedicated hardware deployments.

It is built upon Ceph and simplifies the management of larger-scale deployments through the use of Storage Grid (up to 100 appliances). It also therefore benefits from OpenStack Swift integration.

Designed for scalability and security, it caters to organizations with large archives, media libraries, or requirements for future-proof data storage. QuantaStor uses erasure coding for efficient storage and data protection, ensuring redundancy and guarding against hardware failures. The vendor prioritizes security with features like encryption and access controls to maintain data privacy.
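The efficiency argument for erasure coding can be shown with simple arithmetic. In a k+m scheme, each object is split into k data chunks plus m coding chunks, so the cluster tolerates the loss of any m chunks while storing (k+m)/k bytes of raw capacity per byte of data. The sketch below is a generic calculation under that assumption; the 4+2 layout and the 600TB raw figure are illustrative only and are not QuantaStor defaults.

```python
def erasure_coding_profile(k, m, raw_tb):
    """Summarize a k+m erasure-coded layout (generic arithmetic only).

    k: number of data chunks, m: number of coding (parity) chunks,
    raw_tb: raw capacity of the pool in terabytes.
    """
    if k < 1 or m < 1:
        raise ValueError("k and m must both be at least 1")
    return {
        # Any m chunks can be lost without losing data.
        "tolerated_failures": m,
        # Raw bytes stored per byte of user data.
        "overhead_factor": (k + m) / k,
        # Capacity left for user data after parity is accounted for.
        "usable_tb": raw_tb * k / (k + m),
    }


# Hypothetical 4+2 layout on 600TB of raw capacity.
profile = erasure_coding_profile(k=4, m=2, raw_tb=600)
print(profile)

# For comparison, 3-way replication also survives two losses but
# leaves only a third of the raw capacity usable.
replicated_usable_tb = 600 / 3
```

With these illustrative numbers, the erasure-coded pool yields twice the usable capacity of 3-way replication for the same two-failure tolerance.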

Since last year’s Radar, QuantaStor has added enhancements to its multizone and multicluster replication and expanded its storage optimization features by introducing automated tiering of objects, which allocates them to the most appropriate storage class within the cluster.

Strengths

QuantaStor benefits from Storage Grid management, which helps reduce operational complexity. OSNexus has added bidirectional replication between zones, auto-tiering based on rules and policies, and expanded object context awareness. Its software-defined architecture offers flexibility and cost-efficiency as it enables customers to deploy to their preferred hardware choices to meet workload performance and fault tolerance requirements. Security is a priority, with features such as encryption and access controls safeguarding data privacy and strong auditing capabilities.

QuantaStor offers support for multiple storage protocols including object (S3, Swift) and block (RBD and iSCSI) at the same time. In addition, as the solution is built upon Ceph, it integrates natively with OpenShift as an OpenStack Swift endpoint to the Keystone OpenStack Identity Service.

It offers several advanced security features, including 256-bit XTS encryption, HIPAA and NIST 800-53-171 compliance, audit logging, FIPS 140-2 for generation of encryption keys, MFA, RBAC, and enhancements to support S3 object locking for data governance and compliance policy enforcement.

Challenges

Although OSNexus offers advanced ransomware protection on its NAS solutions, this hasn’t yet been developed for its object offerings. Instead, it provides industry-standard immutable data protection and a choice of governance modes. Moreover, data de-dupe is not available (though it’s on the roadmap for 2025 via Ceph). QuantaStor is primarily deployed as a bare metal deployment, and although it can be deployed to a virtualized environment, it does not offer a Kubernetes-native containerized deployment option. Customers with extensive auditing requirements should take note that this is not enabled by default, and enablement could have performance impacts.

Purchase Considerations

QuantaStor is positioned as a cost-effective alternative to public cloud storage for managing large datasets on-premises. It is available to buy through global distributors, OEMs, and VARs, and is also available as a turnkey solution via MSPs and CSPs. A cloud licensing portal offers monthly billing based on provisioned storage licensing capacities. There is a minimum license key size of 32TB per appliance.

QuantaStor is well suited for the following use cases:

- Archive and backup/disaster recovery: Its scalability and focus on data protection with features like replication make it suitable for long-term data archiving and DR backups.

- M&E: It offers high-performance storage ideal for storing and managing large media files efficiently.

- Big data and analytics: Its scalability and integration with analytics platforms make it a good fit for storing and processing big data for analytics workloads.

Radar Chart Overview

OSNexus has continued to develop and enhance its offering, maintaining its status as a Fast Mover with regular updates (new features every four months as well as monthly maintenance releases) and focusing on enabling high-performance object storage for AI/ML use cases since the last report. It continues to focus on greater integration with its hardware partners to simplify management between the Storage Grid management and the underlying hardware management. Due to its reliance on the underlying Ceph platform, OSNexus is placed toward the Maturity quadrant, as many storage-level innovations are dependent on development from the upstream project.

Pure Storage: FlashBlade

Solution Overview

Pure Storage develops and sells all-flash storage hardware and software, focusing on high-performance flash storage solutions for data centers. It offers a portfolio of solutions that cover unified block and file, unstructured data storage, and integrated platforms, and also offers advanced solutions for the enablement of container storage as a service for hybrid cloud via the acquisition of Portworx.

For the purposes of this Radar, we’re focusing on the Pure Storage FlashBlade, which offers unified file and object storage that leverages Pure’s all-flash storage technology for high-performance use cases.

The architecture enables independent scaling of capacity and performance, allowing customers to meet changing needs. It supports both object and file protocols (but not block; instead, Pure offers FlashArray for unified block and file storage). FlashBlade comes in 2 versions: FlashBlade//E, designed to be more cost-effective for large data repositories, and FlashBlade//S, which is designed for high-performance workloads.

Since last year’s report, FlashBlade has delivered enhanced security with TLS 1.3 support through the latest release of Purity//FB, improved DR and data protection with the introduction of fan-out and fan-in replication, and 3-site object replication, which enables DR with multisite writeable buckets for increased data resiliency. In addition, Object Lock and Object Bucket quotas provide greater control and data immutability for object storage on FlashBlade.

Strengths

FlashBlade is firmly targeted at high-performance unstructured data and provides a scalable solution with advanced data management built in. Support for QLC helps the economics of having an all-flash system. For users with Kubernetes-based workloads, Pure’s acquisition of Portworx is notable for providing enhanced Kubernetes management and automation capabilities when combined with FlashBlade, allowing automation and advanced management and monitoring of container-provisioned storage.

Challenges

Pure’s all-flash technology might come at a premium compared to some object storage competitors that are focused on delivering dense capacity and cost efficiency for non-high-performance backup and archive use cases. In addition, Pure lacks the public cloud integration capabilities with Azure, GCP, and AWS that are increasingly available from its peers. For smaller deployments, customers should consider the minimum deployment requirements for FlashBlade, as these may not be suitable for tight budgets or where smaller edge deployments are required.

Purchase Considerations

Pure offers multiple commercial models called Evergreen One, Flex, and Forever, which cater to consumption, STaaS, and traditional ownership methods of operating and owning the solution. For larger deployments (minimum 4PB), the FlashBlade//E offers better economies of scale than FlashBlade//S.

FlashBlade is well suited for the following use cases:

- Imaging: It excels in handling large medical image files due to its high performance and scalability, enabling fast access and manipulation for critical tasks.

- AI and analytics: Its speed and low latency benefit AI/analytics workloads by accelerating data processing and training pipelines for faster insights.

- High-performance computing: Its parallel processing architecture and NVMe support provide the high throughput and low latency needed for demanding HPC workloads.

Radar Chart Overview

Pure has moved toward the Maturity half by focusing on delivering many enhancements to its existing capabilities since the last report. This includes the refresh of its FlashBlade product line in order to take advantage of hardware advancements, security enhancements, and improved disaster recovery and data protection.

Quantum: ActiveScale

Solution Overview

The company is a provider of both primary and secondary data storage solutions with a strong focus on archive and secondary storage for massive datasets. Its ActiveScale object storage platform caters to organizations that need to manage large amounts of online data that will be continually used on a periodic basis, such as sensor data.

ActiveScale is a scalable object storage solution designed for managing massive datasets and enabling efficient data access. It excels at storing and managing cold data, which is data that is infrequently accessed but needs to be retained for long periods. ActiveScale uses a scale-out architecture for scalability and redundancy and leverages Quantum’s expertise in object and tape storage to deliver a reliable and cost-effective solution.

Quantum recently (April 2024) released its first all-flash ActiveScale Object Storage appliance to address customer demand for enterprise object storage for AI, ML, and data analytics use cases that require higher performance and lower latencies.

Strengths

Customers can start from just 3 nodes and scale to hundreds in a single namespace cluster. In addition to S3, the platform supports the NFS protocol. SMB support is available via a file gateway. Moreover, the firm offers integrated tape library functionality for backup and archive use cases. With the introduction of its All-Flash Z200 appliance, it is entering the high-performance object storage market with an emphasis on AI/HPC and advanced analytics, content delivery and streaming, high-speed ingest and processing, NoSQL databases, and high-density cold storage archives.

Challenges

With ActiveScale, all metadata is stored on NVMe. This constrains HDD deployment density on non-all-flash nodes and increases the number of nodes required, potentially leading to increased cost and complexity.

Purchase Considerations

Licensing is based on usable capacity, per terabyte. Customers can start at less than 100TB, which allows them to start at a lower entry point than some other competitive solutions, and grow over time.

Note that the manufacturer also offers a separate product, Myriad, an all-flash scale-out file and object storage solution with built-in inline de-dupe and compression data services that is ideal for modern microservices built on Kubernetes as well as specialized applications such as data science, AI, VFX, and animation. Prospective buyers may want to consider which would better suit their organizations.

ActiveScale is well suited for the following use cases:

- Unstructured data management for genomic and earth sciences data sets.

- Surveillance: Its scalability and cost-efficiency are ideal for storing vast amounts of video surveillance footage that needs long-term retention.

- M&E: It can archive large media files efficiently, making it suitable for storing inactive content like film libraries or historical archives.

- Scalar tape: It integrates seamlessly with Scalar tape libraries, providing a tiered storage solution with cost-effective tape storage for long-term data archiving.

- Data lake and analytics: In non-flash configurations, it can be used as a cost-effective tier within a data lake for storing less frequently accessed data. Alternatively, the Z200 All-Flash appliance is well suited to higher-performance use cases.

- Backup and archive: It is designed to handle massive data growth, making it ideal for storing large backups and archives efficiently. Additionally, its focus on cold storage offers a cost-effective solution compared to storing inactive data on high-performance storage tiers.

Radar Chart Overview

Quantum is a Fast Mover in this year’s Radar due to performance enhancements and an all-new flash-based solution that addresses high-performance, low-latency workloads. It is well suited for unstructured data management, backup, and archive use cases. Offering a mix of maturity and broad capabilities, it is positioned in the Maturity/Platform Play quadrant.

Quobyte

Solution Overview

Quobyte offers a software-defined object storage solution designed for globally distributed data. It excels at performance through a distributed parallel file system, making it suitable for geographically dispersed deployments or real-time data access needs. It prioritizes data mobility, allowing easy data movement between on-premises, cloud, and edge locations. This flexibility caters to organizations with geographically distributed data or hybrid cloud strategies.

Security features like encryption and access controls safeguard data privacy. Additionally, the company integrates with existing workflows through S3 object access, enabling seamless use with familiar data management tools. This scalable and distributed object storage solution positions itself as a secure and performant option for organizations requiring data mobility and geographically dispersed data access.

A new feature, Quobyte’s File Query Engine, provides an advanced metadata and query capability without the need for an additional database layer. This replaces the need for file system tree walks, which delivers faster query results and simplifies the management of larger datasets.

Strengths

Quobyte excels in multitenant environments, providing secure isolation down to the underlying hardware layer and simplifying resource management for administrators. In addition, its access control capabilities support NFSv4, AWS S3, Windows, and Mac ACLs, making it a flexible solution. Objects are mapped directly onto the file system layer, helping to support strong consistency within single clusters. Kubernetes is supported as a deployment option, and there is also a native CSI provisioner available. In addition, the firm offers multiprotocol access to its storage via SMB, NFSv3/v4, S3, HDFS, and MPI-IO. A native REST API is available for automation of management operations.

Challenges

The vendor is focusing on delivering performance enhancements for read-intensive workloads with lots of small files. Support for Azure Blob and AWS S3 storage tiering is the key focus of upcoming versions but is not yet available, nor is support for advanced object versioning.

Purchase Considerations

The solution can be deployed in a variety of configurations, including on any x86 server, public cloud providers, and Kubernetes. Pricing is based on usable capacity as an annual subscription. The company does not offer hardware appliances, so customers are responsible for selecting, configuring, and managing the hardware for the solution.

Quobyte is well suited for the following use cases:

- HPC and analytics: Its parallel file access and scalability enable efficient data sharing and accommodate large datasets for these compute-intensive workloads.

- Kubernetes: It offers persistent storage for containerized applications, facilitating data management in Kubernetes environments.

- Financial, M&E, life sciences, and bio IT: It handles large datasets effectively and offers multiprotocol access for various applications, potentially aiding in regulatory compliance.

- AI/ML: It offers the scalability and linear performance scaling needed to meet AI and ML demands while also ensuring that multiple systems can access the data at any stage of the AI/ML pipeline via its comprehensive multiprotocol support.

Radar Chart Overview

The company is a Forward Mover and has delivered continued enhancement of its current product offering while targeting a wider range of use cases. This blended approach places the vendor in the Maturity/Platform Play quadrant, as it seeks to ensure stability and continuity while also offering broader functionality for its customers.

Scality: RING

Solution Overview

Scality RING is a software-defined object storage solution designed for massive data management on-premises or in hybrid cloud environments. It prioritizes scalability, boasting exabyte-level capacity to handle large datasets efficiently. It excels at cost-effectiveness compared to public cloud storage, making it suitable for organizations managing large archives, backups, or media libraries. It provides very high levels of data durability and resiliency through local and geo-distributed erasure coding and replication for user data, with services for continuous self-healing to resolve expected failures in platform components such as servers and disk drives. High availability is provided through a distributed system that includes no single points of failure, the ability to route around failure conditions, online capacity and performance scaling, online rolling upgrades, and the ability to tolerate data center (site) failures while preserving service availability in synchronous geo-stretched deployments across multiple data centers.

The platform offers multiprotocol support, allowing access through industry-standard file system protocols (NFS, SMB) alongside native S3 object access. Data protection and security features like erasure coding, data replication, and access controls are natively provided by the platform.

Scality also offers ARTESCA, a simple S3 solution designed for SMBs, which targets immutable backup storage and ransomware protection use cases.

Strengths

RING supports a range of deployment configurations from all-flash (QLC, TLC SSD) to hybrid (SSD/HDD), with easy scale-out to hundreds of nodes. In addition to object protocols, it offers integrated scale-out file support without the need for additional gateways. This helps to support more demanding use cases such as medical imaging (Picture Archiving and Communication System, or PACS) and video streaming. It is notable that the company is investing in quantum-safe encryption, reflecting the market’s early recognition of the need to secure data against cryptanalytic attacks by quantum computers. Since the last report, the firm added a multitude of management and observability enhancements, including a new cloud-native monitoring stack built upon Prometheus and AlertManager, dashboard enhancements, and expanded utilization of APIs.

Challenges

S3 Express API support is currently being developed, and unlike some of its peers, RING doesn’t yet have an open source PyTorch-to-S3 connector. The vendor has also recognized that it must enhance data migration from foreign or external data sources, which is a common requirement for financial services and government agencies, and this is being addressed over the next 12 months. Finally, RING does not offer de-dupe as a storage optimization, which is present in other vendors’ offerings and valuable for reducing the overall cost when using higher-performance media.

Purchase Considerations

RING software is licensed through an Opex model based on usable capacity (a $ per terabyte per year subscription model). Subscriptions are all-inclusive of features (file and object storage) and include baseline support (premium Scale Care Services are optional and available at extra cost). As is standard practice, discounts can be negotiated for higher capacity commitments and purchases.

RING is well suited for the following use cases:

- VMware Cloud Director (VCD): It integrates with VCD, offering scalable object storage for VMs and workloads deployed within the VCD environment.

- Backup and archive: Its focus on scalability and data protection makes it suitable for efficiently storing and managing backups and archives. Additionally, its tiering capabilities can help move older data to cost-effective storage options.

- Analytics and AI data lake: Its scalability and ability to handle large datasets make it a good fit for storing and accessing data used in analytics and AI workloads. Features like multitenancy can further isolate data for different analytics projects.

- Private/hybrid cloud: It excels in hybrid cloud environments. With Scality ZEN, customers can manage data across on-premises and public cloud storage, providing flexibility and cost optimization as data storage needs evolve.

- M&E: Its scalability caters to the massive storage requirements of media and entertainment companies. Additionally, its focus on data durability ensures the integrity of valuable media assets.

- Healthcare: Its scalability and security features make it suitable for storing large medical datasets while adhering to healthcare data privacy regulations.

Radar Chart Overview

Previously, the vendor offered a large number of enhancements and product innovations, including notable capabilities for managing native S3 objects under a single namespace. This year, it is a Leader, having outperformed many vendors with more enhancements and new features released over the last 12 months. The roadmap continues this theme, and we expect to see the continued development and maturity of these new features.

SoftIron: HyperCloud

Solution Overview

SoftIron’s primary offering is HyperCloud, which delivers a software-defined storage solution designed for flexibility and scalability. It caters to various deployment options, including private cloud, hybrid cloud, and multicloud environments. This versatility allows organizations to choose the deployment model that best suits their needs.

HyperCloud offers unified block, file, and object storage, simplifying data management by eliminating the need for separate storage systems. It boasts strong performance for all data types, making it suitable for demanding workloads like VMs, media files, or real-time analytics. Additionally, it integrates seamlessly with existing infrastructure through industry-standard protocols and supports popular cloud platforms for hybrid cloud deployments.

Strengths

It offers more than simply object storage, including VMs, apps, and containers. It also enables the management of public cloud resources, which is notable for edge use cases. In addition, it is a single-vendor solution that, according to the vendor, enables a secure chain of software and hardware auditability and traceability, which is key for more secure deployments. This may be advantageous for customers with specific supply chain requirements.

Challenges