Recap of the 56th IT Press Tour in Silicon Valley, California

With 9 companies: Arc Compute, DDN, Hammerspace, Index Engines, Juicedata, MaxLinear, Oxide Computer, Tobiko Data and Viking Enterprise Solutions

By Philippe Nicolas | June 28, 2024 at 2:02 pm

This article has been written by Philippe Nicolas, initiator, conceptor and co-organizer of the event launched in 2009.

This 56th edition of The IT Press Tour took place in Silicon Valley a few weeks ago and it was, once again, a good tour and a great opportunity to meet and visit 9 companies, all playing in IT infrastructure, cloud, networking, security, data management and storage, plus AI. The executives, leaders, innovators and disruptors we met shared their company and product strategies and future directions. In alphabetical order, these 9 companies are Arc Compute, DDN, Hammerspace, Index Engines, Juicedata, MaxLinear, Oxide Computer, Tobiko Data and Viking Enterprise Solutions.

Arc Compute

Beyond HPC, AI is hot, no surprise here, and it is finally helping high-performance technologies penetrate even more enterprises. Arc Compute, founded in 2020 in Toronto, Canada, and having raised a few million dollars in its Series A, targets the optimization of GPU usage and consumption. The small team has developed ArcHPC, a software suite designed to maximize performance and reduce energy consumption, a key dimension in such deployments.

The idea is to limit purchases of hardware, GPUs and associated resources, and therefore environmental impact and rising costs, while optimizing how these scarce resources are used. Alternatives rely on ineffective software or manual, ad hoc routines; so far they haven’t brought any significant value and appear to be stopgap methods.

To deliver its mission, the firm has developed ArcHPC, a software suite composed of 3 components: Nexus, Oracle and Mercury.

First, Nexus is a management solution for advanced GPUs and other accelerated hardware. The goal is to maximize user/task density and GPU performance. It comes with a GUI and a CLI, allowing integration with job schedulers like SLURM. Its flexibility gives users a real choice among various accelerators. Nexus intercepts machine code during the loading phase of the GPU portion of a workload. It is currently at version 3.
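Arc Compute did not detail how Nexus raises task density. As a generic illustration only, and not ArcHPC's algorithm, packing several jobs onto each GPU by memory footprint with first-fit decreasing shows the basic idea of density: larger tasks are placed first, each on the first GPU that still has room. The function name, task names and memory sizes are hypothetical.

```python
def pack_tasks(task_mem_gb, gpu_mem_gb, n_gpus):
    """First-fit decreasing: place larger tasks first, each on the first GPU with room.

    Returns (placement, unplaced) where placement maps task -> GPU index.
    Generic bin-packing sketch, not Arc Compute's scheduler.
    """
    free = [gpu_mem_gb] * n_gpus  # remaining memory per GPU
    placement = {}
    unplaced = []
    # Sort tasks by memory need, largest first (ties keep input order)
    for task, need in sorted(task_mem_gb.items(), key=lambda kv: kv[1], reverse=True):
        for gpu, avail in enumerate(free):
            if need <= avail:
                free[gpu] -= need
                placement[task] = gpu
                break
        else:
            # No GPU had enough free memory for this task
            unplaced.append(task)
    return placement, unplaced


# Five hypothetical jobs sharing two 80GB GPUs instead of needing five GPUs
placement, unplaced = pack_tasks({"a": 40, "b": 30, "c": 20, "d": 10, "e": 30}, 80, 2)
print(placement, unplaced)
```

Real schedulers also weigh compute contention and interference between co-located jobs, which this memory-only sketch ignores.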

Second, Oracle automates task matching and deployment, a process that is time-consuming and often done manually. This is paramount for dynamic environments and also brings governance. It focuses on GPU heat and energy alignment and distributes tasks across clusters.

Third, Mercury, also focused on task matching, selects hardware for maximum throughput. Arc Compute has been selected by several users, LAMMPS being a good example. The company relies on a direct sales model targeting large AI/ML companies and supercomputer sites, but the sales team has started talks with key OEMs and channel players. Partners are AMD, Dell, HPE, Nvidia and Supermicro.

ArcHPC is priced per GPU per year for on-premises deployments and per hour for cloud instances.

DDN

DDN is an established leader in HPC storage and an obvious reference in AI storage. The session covered the company's strategy and its answers to new challenges in these domains, with the evolution of ExaScaler and Infinia, and was also extended to Tintri.

ExaScaler, a DDN flagship product for years and a recognized parallel file system, continues to dominate HPC storage with new features beyond what its foundation, Lustre, offers. It is also widely used for AI storage and supports some of the most demanding training environments thanks to a very deep Nvidia partnership and integration. Among other things, we noticed an optimized I/O stack delivering genuinely high bandwidth and IOPS, advanced data integrity, encryption and data reduction with sophisticated compression, Nvidia BlueField-3 acceleration, checkpointing of course, and real scalability. It can be adopted for small, super-fast configurations as well as for very large capacity ones.

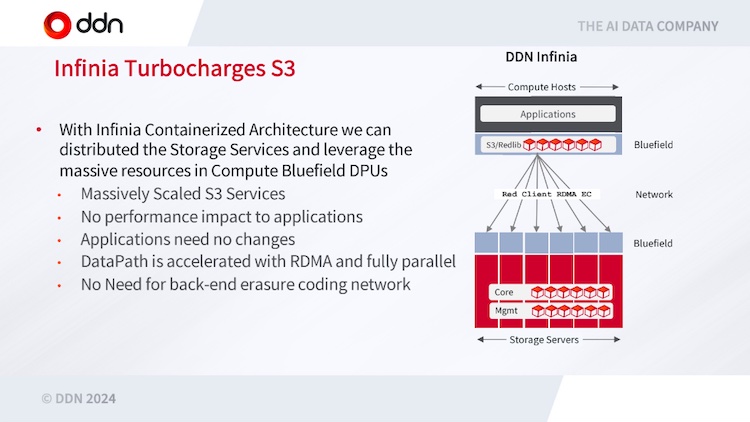

The second product, released a few months ago, is Infinia, high-performance object storage software, or rather a distributed data platform, resulting from 8 years of R&D at DDN and designed to address modern data challenges at scale. It is pure SDS, available as a cluster of 1U appliances, with a minimum of 6 required to deliver performance and resiliency. Its data plane is a transactional key/value store, topped by an access layer exposing S3, CSI and soon industry-standard file sharing protocols, probably even a POSIX client, plus some vertically integrated methods such as a SQL engine for native, deeply connected analytics. Each appliance supports 12 U.2 NVMe SSDs and protects data with an advanced dynamic erasure coding mechanism. The platform is meant to be configurable for a wide variety of use cases and workload patterns.

It is multi-tenant by design, inspired by the cloud model, as the key itself includes a tenant field. The keyspace is therefore global and shared by all tenants, filtered by that field, and leverages the entire storage layer. This is better than storage shards, which are difficult to size correctly. And it is fast, thanks to a parallel S3 implementation coupled with Nvidia BlueField DPUs.
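DDN did not detail Infinia's key layout beyond the tenant field. As a toy illustration of why a tenant prefix lets one global, sorted keyspace serve many tenants without pre-sized shards, here is a sketch assuming keys of the form (tenant, bucket, object). The class and method names are hypothetical; a real transactional store would add versioning, transactions and distribution across appliances.

```python
from bisect import bisect_left, insort


class TenantKeyspace:
    """Toy global keyspace where every key starts with a tenant field (hypothetical layout)."""

    def __init__(self):
        self._keys = []    # one sorted list of (tenant, bucket, object) tuples for all tenants
        self._values = {}  # key tuple -> value

    def put(self, tenant, bucket, obj, value):
        key = (tenant, bucket, obj)
        if key not in self._values:
            insort(self._keys, key)  # keep the shared keyspace ordered
        self._values[key] = value

    def get(self, tenant, bucket, obj):
        return self._values.get((tenant, bucket, obj))

    def list_tenant(self, tenant):
        """Prefix scan: (tenant,) sorts just before every key of that tenant."""
        i = bisect_left(self._keys, (tenant,))
        out = []
        while i < len(self._keys) and self._keys[i][0] == tenant:
            out.append(self._keys[i])
            i += 1
        return out
```

Because tenants only differ by key prefix, a small tenant and a huge one draw from the same pool of capacity, and a per-tenant listing is a contiguous range scan rather than a lookup in a dedicated shard.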

Hammerspace

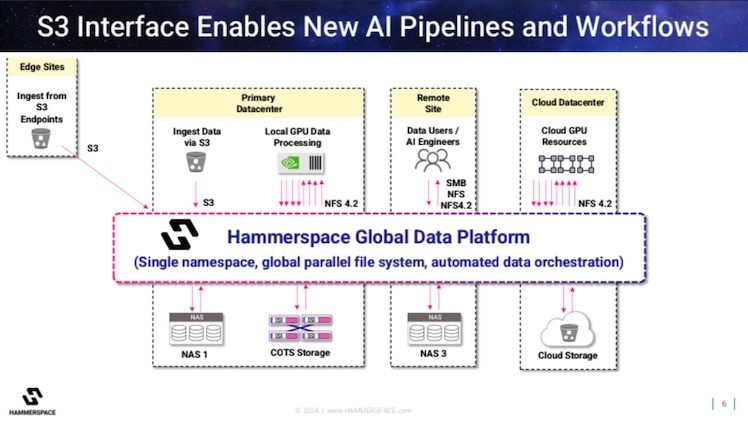

Hammerspace, a highly visible data management player, continues to occupy the space with several announcements over the last few months and participation at key shows. They promoted GDE, their global data orchestration service, and more recently their Hyperscale NAS architecture leveraging pNFS, the industry-standard parallel file system defined as an extension of NFS. The team also added data management for secondary storage, with data archived and migrated to tape. Following the acquisition of Rozo Systems and its innovative erasure coding technique named Mojette Transform, their solutions have been extended with this advanced data protection mechanism. The company is moving fast, recruiting lots of new talent and penetrating significant accounts. The latest product iteration is the S3 service that finally gives GDE the capability to be exposed as an S3 access layer, whatever the back-end. This move confirms users' and the market's interest in a U3 (Universal, Unified and Ubiquitous) storage solution, a concept we introduced more than 10 years ago.
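Hammerspace's actual encoder was not described. As a hedged illustration of the idea behind the Mojette Transform, the forward transform projects a grid of data values along a few discrete, coprime directions (p, q), summing every value that falls on the same line into a "bin". This sketch follows the common textbook convention where value (k, l) lands in bin b = p*l - q*k, shifted to start at 0; the function name is hypothetical. Each projection becomes one redundant encoded piece, and with enough projections (the Katz criterion) the grid can be rebuilt; decoding is omitted here.

```python
from math import gcd


def mojette_projection(grid, p, q):
    """Forward Mojette projection of a P x Q grid along direction (p, q).

    Value grid[k][l] is added to bin b = p*l - q*k, shifted so the first bin is 0.
    Textbook sketch, not Hammerspace/Rozo production code.
    """
    if gcd(p, q) != 1:
        raise ValueError("direction (p, q) must be coprime")
    P, Q = len(grid), len(grid[0])
    # A P x Q grid yields (P-1)|q| + (Q-1)|p| + 1 bins for direction (p, q)
    n_bins = (P - 1) * abs(q) + (Q - 1) * abs(p) + 1
    offset = -min(p * l - q * k for k in range(P) for l in range(Q))
    bins = [0] * n_bins
    for k in range(P):
        for l in range(Q):
            bins[p * l - q * k + offset] += grid[k][l]
    return bins


data = [[1, 2], [3, 4]]
print(mojette_projection(data, 1, 1))  # [3, 5, 2]
```

Each projection holds only slightly more bins than a grid row or column, which is why the technique keeps storage overhead low compared with full replication.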

The company, like several others, has recognized the need to promote a data platform rather than a tool, product, solution or even an orchestrator, since orchestration is ultimately just a function, a key one, but a function. A platform is different for enterprises: it is an entity through which many, if not all, data flows pass, offering services to the business and potentially to IT itself. This platform plays a central role, both physically and logically, with various added services that enrich data and its usage.

It also confirms the take-off of edge computing and the need for some sort of bridge to access data wherever it resides, locally in the edge zone, but also potentially in other zones, data center sites or even the cloud itself.

This new S3 service is offered free of charge to Hammerspace customers as part of the standard license; it should be GA in Q4 2024, with demonstrations at SC24 in Atlanta.

Index Engines

Index Engines was founded by senior executives with strong expertise in disk file systems and associated file storage technologies: they started Programmed Logic Corp., which became CrosStor with its innovative HTFS and StackFS and was later acquired by EMC. The company was born around the idea of a deep content indexing technology. The team first applied it to backup images on tape, with the capability to reindex their content, leveraging its expertise in backup catalogs and tape formats. It quickly extended its use cases to cybersecurity, recognizing the growing pressure from ransomware.

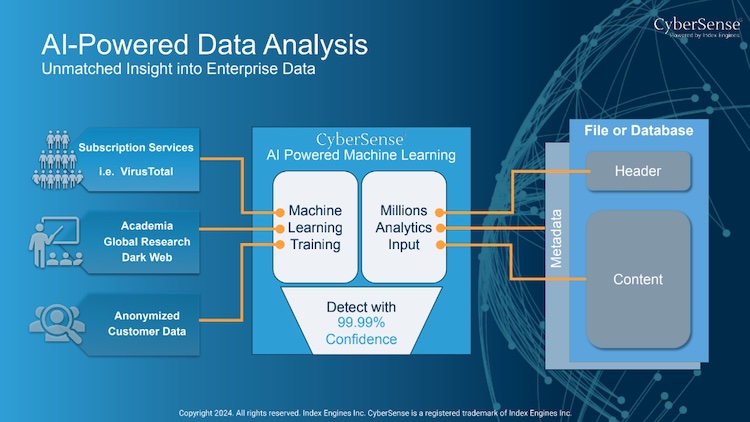

The result is CyberSense, software deployed at more than 1,400 sites across various verticals, in environments ranging from 1TB to 40+PB, scanning more than 5EB every day. We all know the impact of ransomware on the business, and obviously backup is not enough, even if it is one of the key components of a comprehensive solution to protect data and prevent its manipulation. CyberSense works as an extension to backup software, exploring backup images deeply to detect patterns, divergences and other traces of change, and therefore potential attacks. Index Engines proudly states that CyberSense reaches 99.99% confidence in detecting corruption; again, it doesn't prevent attacks, but it really helps detect an ongoing attack and even its damage. This impressive figure was obtained on 125,000 data samples, 94,100 of them infected by ransomware: CyberSense detected 94,097 infected samples, with 3 false negatives.
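The reported figures can be checked with simple arithmetic. We assume here that the quoted "confidence" corresponds to recall, that is detected infected samples divided by all infected samples; the vendor's exact definition was not given. False positives on the 30,900 clean samples were not reported, so precision cannot be computed from these numbers.

```python
# Figures as reported by Index Engines
total_samples = 125_000
infected = 94_100
detected = 94_097

clean = total_samples - infected           # samples without ransomware
false_negatives = infected - detected      # infected samples that were missed
recall = detected / infected               # assumed meaning of the "confidence" figure

print(f"clean samples: {clean}")
print(f"false negatives: {false_negatives}")
print(f"detection rate (recall): {recall:.4%}")
```

The result, about 99.997%, is consistent with the 99.99% claim.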

Leveraging its strong content indexing engine coupled with AI-based models, CyberSense offers a very high level of SLA in that domain and has proved its results against famous ransomware variants like AlphaLocker, WhiteRose, Xorist, Chaos, LockFile or BianLian. This is one of the reasons why Dell with PowerProtect, IBM with its Safeguarded FlashSystem and Infinidat with its InfiniSafe cyber storage approach chose to bundle it with their own offerings.

And the results are there: Index Engines' Tim Williams mentioned an 8-digit revenue range, hoping to reach 9 digits soon.

Juicedata

Juicedata develops JuiceFS under the Apache 2.0 license. The team founded the company in 2017 after experiences at giant internet companies where they addressed and solved scalability challenges. They spent time solidifying the code and iterating on 2 flavors, a community edition and an enterprise edition, which offer the same experience from a client perspective but at different scale, performance and pricing levels. They admit they have kept a very low profile until now, prioritizing the product over visibility.

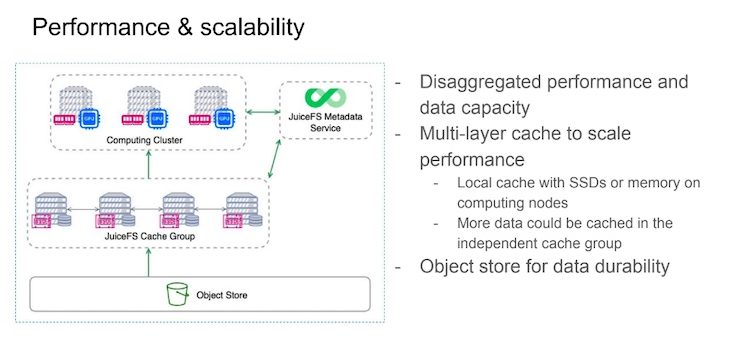

They address the challenge of a universal datastore with wide and flexible access methods such as S3, NFS, SMB and even CSI for Kubernetes deployments. They recognized that S3 is widely used and appreciated but has limitations, especially regarding security and data-intensive workloads. At the same time, the cloud took off without any really dedicated or well-designed file system. What do users need in terms of gateways? Huge numbers of small files also pose a problem, combined with a real need to be cloud-agnostic.

JuiceFS is POSIX-compliant, delivers high performance, is elastic and is multi-cloud. On paper, it is the kind of beast many users dream about.

The product separates metadata and data, as these 2 entities require different repositories and are processed differently: metadata is very small, while data can be very large, especially in big data, AI and other analytics. Metadata is stored in various databases and data models, and data is stored on any back-end such as S3, Ceph, MinIO, cloud service providers… To reach the desired performance levels, clients cache metadata and data at different tiers with a strong consistency model. The resulting performance numbers are pretty impressive, as illustrated by several key references. JuiceFS supports Linux, macOS and Windows clients, deployed on-premises or in the cloud.
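As a toy model of the metadata/data split described above, and not JuiceFS's actual format, the sketch below keeps small file records in one store (standing in for the metadata database) and fixed-size data blocks in another (standing in for S3, Ceph or MinIO). The 4-byte block size and all names are hypothetical; real systems use far larger blocks and add the client caching and consistency mechanisms omitted here.

```python
BLOCK_SIZE = 4  # bytes; deliberately tiny for the demo (hypothetical value)


class ToyFS:
    """Toy file store that keeps metadata and data in separate back-ends."""

    def __init__(self):
        self.metadata = {}      # stands in for the metadata database: path -> file record
        self.object_store = {}  # stands in for S3/Ceph/MinIO: object key -> bytes
        self._next_block = 0

    def write(self, path, data: bytes):
        # Note: overwriting a path leaves old blocks orphaned (no garbage collection here)
        block_keys = []
        for i in range(0, len(data), BLOCK_SIZE):
            key = f"blocks/{self._next_block}"
            self._next_block += 1
            self.object_store[key] = data[i:i + BLOCK_SIZE]  # bulk payload -> object store
            block_keys.append(key)
        # Small record -> metadata store: size plus the ordered list of block keys
        self.metadata[path] = {"size": len(data), "blocks": block_keys}

    def stat(self, path):
        # Answered from metadata alone, without touching the object store
        return {"size": self.metadata[path]["size"]}

    def read(self, path) -> bytes:
        record = self.metadata[path]
        return b"".join(self.object_store[k] for k in record["blocks"])


fs = ToyFS()
fs.write("/data/a.txt", b"hello world")
print(fs.stat("/data/a.txt"), fs.read("/data/a.txt"))
```

Operations such as `stat` or directory listings only hit the small, fast metadata store, which is why separating the two pays off with many small files.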

JuiceFS is another good example of the U3 Storage approach.

MaxLinear

Launched more than two decades ago, MaxLinear is a reference in the semiconductor market, with recognized leadership positions in infrastructure networks and connectivity. The company, which went public in 2010 and has more than 1,300 employees today, generated almost $700 million in revenue in 2023.

The firm also grew through acquisitions, 6 of them, and the 2017 acquisition of Exar for $687 million brought the offload data processing capabilities delivered today as the Panther III Storage Accelerator. This technology came from Hifn, and probably even earlier from Siafu, a company founded by John Matze that designed and built compression and encryption appliances.

Panther III is pretty unique as it provides all its services (advanced data security, reduction, protection and integrity) in a single pass. Performance is very high and scales linearly as cards are added to the same server: one card delivers 200Gbps, and up to 16 of them can be combined for a total of 3.2Tbps. Latency is very low, 40µs for encoding and 25µs for decoding, and the reduction ratio is significant, much higher than with “classic” approaches. The solution comes with software, provided as source code for Linux and FreeBSD and optimized for Intel and AMD CPUs, that delivers exactly the same result if, for any reason, the server with the card is not available. Beyond performance, the card is also very energy frugal. Dell embeds Panther III in PowerMax to maximize storage capacity and PB per watt. Today it is a PCIe Gen 4 card, and we expect a Gen 5 version in the coming months that should deliver a new level of performance in a consolidated way.

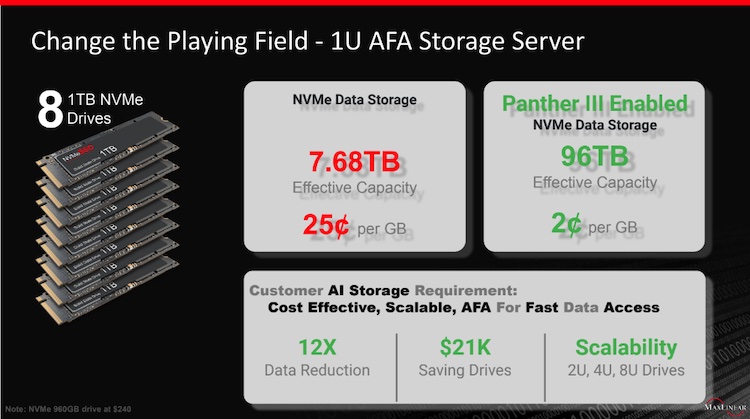

With Panther III, a 1U storage server with 8 1TB NVMe SSDs appears as a 96TB storage server, at about 2 cents per GB instead of 25 cents per GB, which corresponds to a 12x data reduction ratio.
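A quick sanity check of the Panther III figures quoted in this section: 8 x 1TB at a 12x reduction ratio gives 96TB of effective capacity, the 25 cents per GB raw cost divided by 12 lands at roughly 2 cents per GB, and 3.2Tbps of aggregate throughput at 200Gbps per card corresponds to 16 cards. The 12x ratio is the vendor's quoted figure; actual reduction depends on how compressible the data is.

```python
raw_capacity_tb = 8 * 1          # 8 NVMe SSDs of 1TB each
reduction_ratio = 12             # 12x data reduction quoted for Panther III
effective_capacity_tb = raw_capacity_tb * reduction_ratio

raw_cost_cents_per_gb = 25       # quoted cost per GB without reduction
effective_cost_cents_per_gb = raw_cost_cents_per_gb / reduction_ratio

card_throughput_gbps = 200       # one Panther III card
aggregate_target_gbps = 3_200    # 3.2Tbps quoted for a multi-card server
cards_needed = aggregate_target_gbps // card_throughput_gbps

print(f"effective capacity: {effective_capacity_tb} TB")
print(f"effective cost: {effective_cost_cents_per_gb:.2f} cents/GB")
print(f"cards needed for 3.2Tbps: {cards_needed}")
```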

This approach differs from a DPU model, where data clearly passes through the card to land on other devices, as is especially the case in networking implementations.

Oxide Computer

Founded by a few believers in alternative IT infrastructure, the company, started in 2019 and having raised almost $100 million so far, is on a mission: to define and build a new computing model for modern data centers. Unlike the model cloud service providers have used, and kept evolving, for a few decades now, Oxide thinks that everything starts at the data center level, not with a single computer followed by integration and an accumulation of products.

The team is betting heavily on open source, promoting a single point of purchase and support, with a very comprehensive API. This is what they call a cloud computer, with compute provisioning services, a virtual block storage service, integrated network services, holistic security and a highly efficient power model. The rack has an integrated DC bus bar that makes power distribution and consumption efficient, and the same approach is applied to airflow.

For storage, they developed distributed block storage based on OpenZFS with high-performance NVMe SSDs, also leveraging some other advanced ZFS features. One open question is how they implement distributed erasure coding with a flexible, dynamic parity model, as ZFS is really a server-centric model that couples file system and volume management. Internally, the ZFS file system is available, potentially exposed via NFS, SMB and S3, in addition to the block layer. Everything is managed via a high-level console that masks all the internals and provides full abstraction for administrators and users. Obviously the approach is multi-tenant, ready to be deployed on-premises and in the cloud. Deployments leverage Terraform, a widely adopted tool, to ease configuration management. The configuration is monitored with a fine-grained telemetry model to check convergence with SLAs.

The difficulty will be convincing companies, mostly large ones with strict SLAs, to run their IT on a platform from a small company that still represents a risk. And where there is risk, doubt arises about a danger to this IT foundation and therefore to the client company's business.

We’ll see whether this approach really takes off. So far it seems rather anecdotal, limited to a satisfied happy few, but that is probably enough to stay motivated in trying to convince “classic” users.

Tobiko Data

Tobiko Data is a young company launched in 2022 by the Mao brothers, Tyson and Toby. It recently raised approximately $18 million, for a total of $21 million. Leveraging its experience at Airbnb, Apple, Google and Netflix, the team develops SQLMesh, an open source data transformation platform well adopted by companies wishing to build efficient data pipelines.

At these companies, the team, and in particular the 2 brothers, encountered a series of challenges in the application development phase, with real difficulties obtaining deep visibility into data pipelines, optimizing them and handling large dataset formats. At the same time, they realized that the reference in the domain, dbt, doesn’t scale, which validated the need for a serious alternative, and the Tobiko Data project got started.

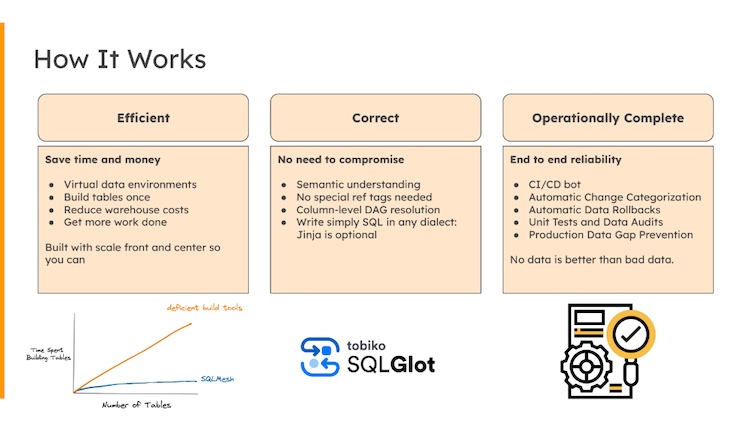

The Tobiko approach works in 3 phases, with the goal of reducing time, storage space and associated costs, and thus overall complexity. It starts with the creation of a virtual data environment, a copy of production; this copy then becomes the development area where all changes are made, producing different versions that production can point to. Database tables are built only once, which is key in the Tobiko model; semantics are then integrated, with column-level DAG resolution, and the process concludes with simple SQL commands. It is coupled with CI/CD logic and automation at different levels, including automatic rollbacks, tests and audits, with control of potential data divergence from production.
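The virtual data environment idea can be illustrated with a toy model, assuming each model version is identified by a fingerprint of its SQL text: a physical table is built only once per fingerprint, environments are just maps from model names to physical tables, and promoting dev to production copies pointers rather than data. This is a hypothetical sketch, not SQLMesh's code or API; in particular, a real implementation must also re-version downstream models when an upstream model changes, which this toy ignores.

```python
import hashlib


def fingerprint(model_sql: str) -> str:
    """Identify a model version by a hash of its SQL text (naive whitespace normalization)."""
    normalized = " ".join(model_sql.split())
    return hashlib.sha256(normalized.encode()).hexdigest()[:12]


class ToyWarehouse:
    def __init__(self):
        self.physical_tables = {}  # "model__fingerprint" -> SQL used to build it
        self.environments = {}     # environment name -> {model name: physical table}
        self.builds = 0            # number of physical tables actually computed

    def apply(self, env, models):
        """Point `env` at one physical table per model, building only unseen versions."""
        pointers = {}
        for name, sql in models.items():
            table = f"{name}__{fingerprint(sql)}"
            if table not in self.physical_tables:
                self.physical_tables[table] = sql  # the expensive build happens only here
                self.builds += 1
            pointers[name] = table
        self.environments[env] = pointers

    def promote(self, src_env, dst_env):
        """Promotion swaps pointers; no table is rebuilt."""
        self.environments[dst_env] = dict(self.environments[src_env])


wh = ToyWarehouse()
prod = {"orders": "SELECT * FROM raw.orders",
        "revenue": "SELECT SUM(amount) FROM orders"}
wh.apply("prod", prod)                 # builds 2 tables
dev = dict(prod, revenue="SELECT SUM(amount) AS total FROM orders")
wh.apply("dev", dev)                   # builds only the changed model
wh.promote("dev", "prod")              # no new build
```

The unchanged `orders` table is shared by both environments, which is where the time and storage savings come from.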

To deliver on this promise, Tobiko Data has also developed SQLGlot. As the README on GitHub says, SQLGlot is a no-dependency SQL parser, transpiler, optimizer, and engine. It can be used to format SQL or translate between 21 different dialects like DuckDB, Presto / Trino, Spark / Databricks, Snowflake, and BigQuery. It aims to read a wide variety of SQL inputs and output syntactically and semantically correct SQL in the targeted dialects.

The company is very young, with brilliant engineers; we’ll see how things go and where they land…

Viking Enterprise Solutions

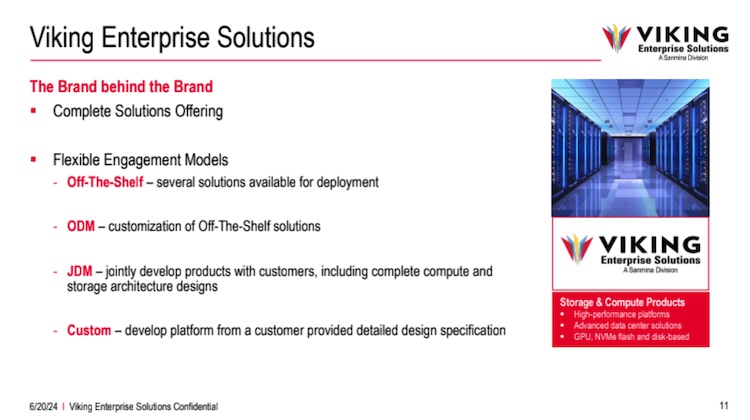

Viking Enterprise Solutions, aka VES, a division of Sanmina, partners with other vendors to design, develop and build their compute and storage systems. Sanmina was founded in 1980, has 34,000 employees today and generated close to $9 billion in revenue in 2023. The firm launches 3,000 products per year, illustrating its production capacity and its wide list of clients and partners. In short, they are the manufacturer behind the brand. The product team focuses on data center solutions: Software Defined Storage, racks and enclosures, compute and AI/ML solutions, liquid cooling, server and storage systems, plus associated services.

VES came from the acquisitions of Newisys in 2003 and of a division of Adaptec in 2006. VES partners with a wide list of technology and product leaders for CPUs, GPUs, networking, connectivity and storage.

On the hardware side, performance and resiliency are 2 fundamental elements for the company. The products are known for high density, high capacity, very good performance and low power in 1U or 2U sizes, in media and entertainment, enterprise AI or on-premises cloud storage. Among the vast line of storage server products, we noticed the NSS2562R, with an active/passive HA design and dual-port PCIe Gen 3/4 for 56 U.2 NVMe SSDs; the VSS1240P, a 1U dual-node storage server with 24 EDSFF E1.S drives and full PCIe Gen 4; and the NDS41020 with 102 SATA/SAS HDDs, among a long list.

On the AI/ML side, VES offers the VSS3041 with up to 3 or 5 GPUs, 240TB of storage, PCIe Gen 5 and AMD Genoa CPUs. The company sources GPUs from Nvidia and AMD and develops an edge-oriented AI framework named Kirana, illustrated by the AMD-based CNO3120 appliance.

On the software side, with CNO (Cloud Native Orchestrator), VES is very active in open source, with deep expertise in Ceph and other OSS. The idea is to build global solutions that combine OSS with its own hardware platforms. Global here also means a multi-site dimension with geo-redundancy, high availability, data durability and security.

Companies like VAST Data, DDN, Infinidat, Wasabi and others have selected VES for years and rely on this partnership to deliver leading solutions.
