NVIDIA GTC: SK hynix Unveiled PCB01 PCIe 5.0 Performing SSD for AI PCs

Up to 14GB/s and up to 12GB/s sequential RW speeds

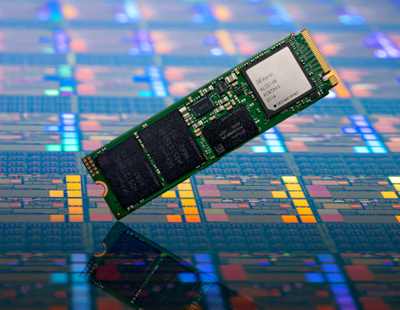

This is a Press Release edited by StorageNewsletter.com on March 25, 2024 at 2:02 pm

At Nvidia GTC 2024, SK hynix, Inc. unveiled a new consumer product based on its latest SSD, PCB01.

Intended for on-device AI (1) PCs, PCB01 is a fifth-generation PCIe (2) SSD whose performance and reliability were recently verified by a major global customer. After completing product development in 1H24, the company plans to launch 2 versions of PCB01 by the end of the year, targeting both major technology companies and general consumers.

Optimized for AI PCs, capable of loading LLMs within 1 second

Offering a sequential read speed of 14GB/s and a sequential write speed of 12GB/s, PCB01 doubles the speed specs of the previous generation. This enables LLMs (3) required for AI learning and inference to be loaded in less than 1s.
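The sub-second figure can be sanity-checked with simple arithmetic: ideal load time is model size divided by sequential read throughput. The sketch below is only an illustration, not SK hynix's methodology. The 14GB/s figure comes from the release, the previous-generation speed is inferred from the "doubles" claim, and the model sizes and precisions are hypothetical examples. Real-world loading would also be slower than this ideal bound because of file-system and software overhead.

```python
GB = 1e9  # decimal gigabyte, as used in SSD speed specs

PCB01_READ = 14 * GB            # sequential read speed stated in the release
PREV_GEN_READ = PCB01_READ / 2  # assumption: inferred from "doubles the speed specs"


def model_size_bytes(params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights, ignoring metadata and padding."""
    return params * bits_per_param / 8


def load_time_seconds(size_bytes: float, read_bytes_per_s: float) -> float:
    """Ideal lower bound on load time: size divided by sequential throughput."""
    if read_bytes_per_s <= 0:
        raise ValueError("read throughput must be positive")
    return size_bytes / read_bytes_per_s


# Hypothetical models: (parameter count, bits per parameter)
examples = {
    "7B params, 4-bit": (7e9, 4),
    "7B params, 16-bit": (7e9, 16),
    "13B params, 16-bit": (13e9, 16),
}

for name, (params, bits) in examples.items():
    size = model_size_bytes(params, bits)
    t_new = load_time_seconds(size, PCB01_READ)
    t_old = load_time_seconds(size, PREV_GEN_READ)
    print(f"{name}: {size / GB:.1f} GB, "
          f"{t_new:.2f}s at 14GB/s vs {t_old:.2f}s at 7GB/s")
```

Under these assumptions, any model whose weights total less than 14GB fits within the one-second bound at peak sequential read speed, while larger models would take proportionally longer.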

To make on-device AI operational, PC manufacturers create a structure that stores an LLM in the PC’s internal storage and quickly transfers the data to DRAM for AI tasks. In this process, the PCB01 inside the PC supports the loading of LLMs. The company expects these characteristics of its latest SSD to increase the speed and quality of on-device AI.

PCB01 also offers a 30% improvement in power efficiency over the previous generation, which helps it manage the power demands of large-scale AI computations. In addition, the company has applied single-level cell (SLC) (4) caching technology to the product, which enables some of the cells – the storage areas of NAND – to operate as SLCs with fast processing speeds. The technology allows selected data to be quickly read and written, speeding up the operation of AI services and general PC operations.

Jae-yeun Yun, head, NAND product planning and enablement, stated: “PCB01 will not only be highlighted for its use in AI PCs, but this high-performing product will also receive significant attention in the gaming and high-end PC markets. Our much-anticipated product will allow us to solidify our position as the global No. 1 AI memory provider not only in the HBM field but also in the on-device AI area.”

Alongside its PCB01-based product, the firm also introduced its next-gen technologies and solutions at GTC 2024, including its 36GB 12-layer HBM3E, CXL (5), and GDDR7 (6). The display of HBM3E, the industry’s first fifth-generation HBM solution, follows the company’s announcement that it has begun volume production of the product. In addition, GDDR7 attracted attention for doubling bandwidth and improving power efficiency by 40% compared to its predecessor, GDDR6.

In addition to presenting its world-class portfolio, the firm also held presentation sessions at GTC 2024. Seoyeong Jeong, technical leader, HBM marketing, gave a talk titled “The Outlook of the HBM Market,” in which she shared insights on the importance of AI memory and its core technologies.

(1) On-device AI: The performance of AI computation and inference directly within devices such as smartphones or PCs, unlike cloud-based AI services that require a remote cloud server.

(2) PCIe: A high-speed IO interface with a serialization format used on the motherboard of digital devices.

(3) Large language model (LLM): As language models trained on vast amounts of data, LLMs are essential for performing generative AI tasks as they create, summarize, and translate texts.

(4) Single-level cell (SLC): A type of memory cell used in NAND flash that stores one bit of data in a single cell. As the number of bits stored per cell increases, the memory cell becomes an MLC, a TLC, and so on. Even though more data can be stored in the same area, speed and stability decrease. SLC speeds up the processing of selected data.

(5) Compute Express Link (CXL): An integrated interface that connects multiple devices such as memory, CPUs, GPUs, and accelerators all at once.

(6) GDDR7: The seventh generation of graphics memory, which helps GPUs work faster and more efficiently.
