Hammerspace Hyperscale NAS Achieves GPUDirect Storage Self-Certification from Nvidia

To accelerate AI and deep learning pipelines

This is a Press Release edited by StorageNewsletter.com on February 26, 2024 at 2:01 pm

Hammerspace, Inc. announced that its Hyperscale NAS is now available with NVIDIA GPUDirect Storage support.

Training large language models (LLMs), generative AI models, and other forms of deep learning requires access to huge volumes of data and HPC levels of performance to feed GPU clusters. Delivering consistent performance at this scale has previously only been possible with HPC parallel file systems that don’t meet enterprise standards, adding complexity and costs.

Hammerspace software makes data available to different foundational models, remote applications, decentralized compute clusters, and remote workers to automate and streamline data-driven development programs, data insights and business decision-making. Organizations can source data from existing file and object storage systems to unify large unstructured data sets for AI training.

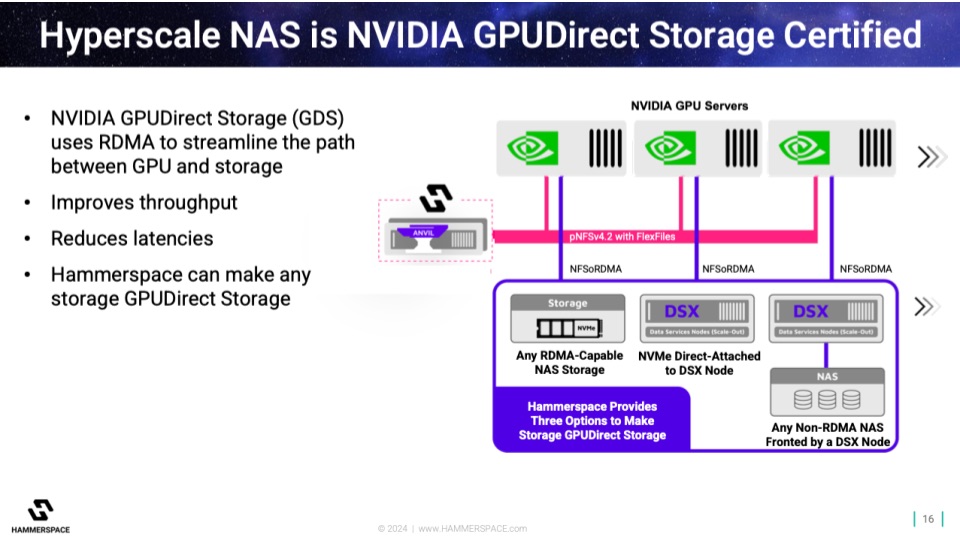

The company introduced high-performance storage capabilities to the Global Data Environment by embracing the Hyperscale NAS storage architecture, the first to combine the performance and scale of parallel HPC file systems with the simplicity of enterprise NAS, bringing new levels of speed and efficiency to storage in order to address emerging AI and GPU computing applications. Now the firm’s NAS is supported by NVIDIA GPUDirect Storage, further bolstering these capabilities.

Magnum IO GPUDirect Storage

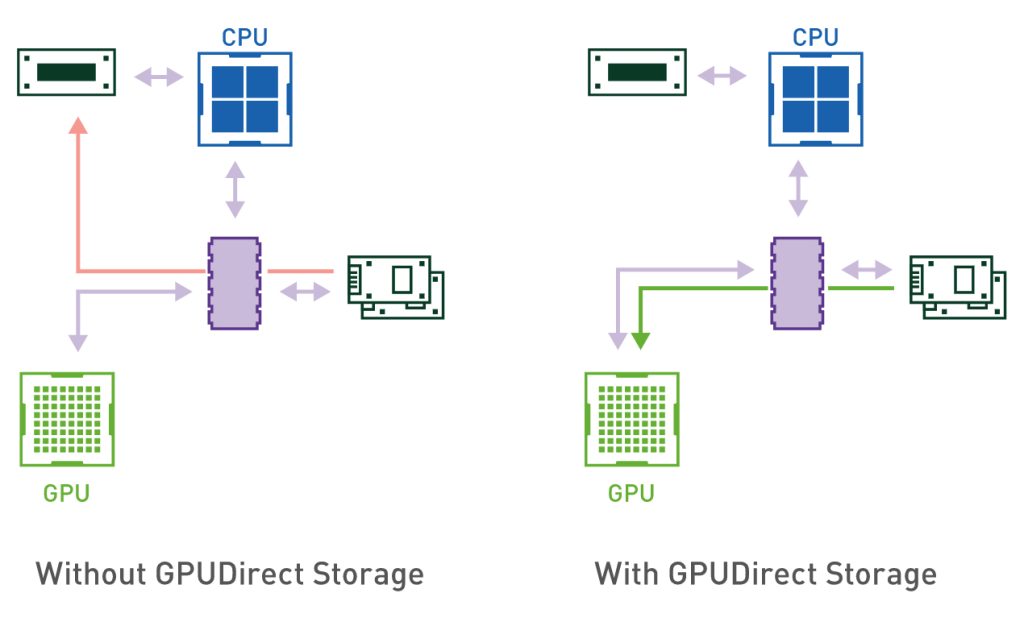

The added support will allow enterprise and public sector organizations to leverage the company’s software to unify unstructured data and accelerate data pipelines with NVIDIA’s Magnum IO GPUDirect family of technologies, which enable a direct path for data exchange between NVIDIA GPUs and third-party storage for faster data access. By deploying the product in front of existing storage systems, any storage system can now be presented as GPUDirect Storage via Hammerspace.

Hyperscale NAS architecture can scale out without compromise to saturate the performance of even the most demanding network and storage infrastructures.

“Data no longer has a singular relationship with the applications and compute environment from which it originated. It needs to be used, analyzed and repurposed with different AI models and alternate workloads across a remote, collaborative environment,” said David Flynn, founder and CEO. “There is an urgent need to remove latency from the data path in order to get the full value out of very expensive GPU environments. Organizations need to be able to realize the full value of their investment in compute without impacts on performance. The more direct the path is from compute to applications and AI models, the faster results can be realized and the more efficiently infrastructure can be leveraged.”

“The rapid shift to accommodate AI and deep learning workloads has created challenges that exacerbate the silo problems that IT organizations have faced for years,” he added. “NVIDIA will be a key collaborator in furthering our mission to enable global access to unstructured data, helping allow users to take full advantage of the performance capabilities of any server, storage system, and network – anywhere in the world.”

Hammerspace joins NVIDIA Inception

Additionally, the company has joined NVIDIA Inception, a program that nurtures start-ups revolutionizing industries with technological advancements.

NVIDIA Inception helps start-ups during critical stages of product development, prototyping, and deployment. Every NVIDIA Inception member gets a custom set of ongoing benefits, such as NVIDIA Training credits, preferred pricing on NVIDIA hardware and software, and technological assistance, which provides start-ups with the fundamental tools to help them grow. The program also offers members such as Hammerspace the opportunity to collaborate with industry-leading experts and other AI-driven organizations.

Hammerspace at NVIDIA GTC 2024

The company will participate in the NVIDIA GTC AI conference on March 18-21, 2024, in San Jose, CA.

Learn More

Data Sheet: Hammerspace and NVIDIA Joint Solution

White Paper: Revolutionize Your AI Workloads with Hammerspace

Podcast: Best Practices in Building AI Pipelines with David Flynn

Solutions Page: Analytics, AI and ML

Comments

This is a new validation for GDS and NFS. It proves that this is possible outside of the classic parallel file storage approach: first with Vast Data for NFS, and now with NFS in its parallel flavor. This is good, as it reflects the power of parallelism needed in highly demanding environments such as AI, but also the strength of the standard model, and of NFS in particular.

It gives users more choice, and we'll see the adoption of pNFS in AI. We estimate that a lot of education, use cases, validations and feedback from experience will be needed to make a clear breakthrough in the domain. We'll see in 2024, but again it will be all about sales execution.
