Recap of the 53rd IT Press Tour in California

With 9 companies: AirMettle, Cerabyte, CloudFabrix, Graid Technology, Groq, Hammerspace, HYCU, Rimage and StorageX

By Philippe Nicolas | February 12, 2024 at 2:01 pm

This article has been written by Philippe Nicolas, initiator, conceptor and co-organizer of the event launched in 2009.

The 53rd edition of The IT Press Tour took place in San Francisco, CA, and the Bay Area at the end of January. It was the right opportunity to meet and visit 9 companies, all active in IT infrastructure, cloud, networking, data management and storage, plus AI. These inspiring executives, leaders and innovators shared their views on the current business climate, technology directions and market trends. The 9 companies are AirMettle, Cerabyte, CloudFabrix, Graid Technology, Groq, Hammerspace, HYCU, Rimage and StorageX.

AirMettle

Founded in 2018 by Donpaul Stephens, CEO, Matt Youill, who leads analytics, and Chia-Lin Wu, who leads the object storage part, the company is on a mission to introduce a new data analytics platform called Analytical Storage and shake up the big data landscape.

Some of our readers will remember that Stephens is one of the founders of Violin Memory, a pioneer in high-performance flash arrays, later renamed Violin Systems and then acquired by StorCentric after several episodes and leadership failures. As a consequence of Covid, StorCentric was dismantled and ceased operations, and Violin disappeared with it.

Building on the founding team’s strong experience in creating key products for the industry and anticipating several waves and needs, the firm wants to combine an SDS approach and parallel processing with AI to deliver a new generation of analytics.

Even though the product is not GA yet, the project is supported by key government bodies, universities and research entities such as NOAA, Los Alamos National Labs and Carnegie Mellon, to name a few.

But all of this starts with a series of challenges: performance limitations of data warehouses, but also memory, compute and networking costs. The team chose to tackle them with a radically new solution that is still based on the S3 API.

To avoid silos, partial data sets and limited indexing, Analytical Storage claims to offer a horizontal model with an order-of-magnitude acceleration for big data analytics. The “divide and conquer” principle, a classic approach used in other products, is applied here and illustrates once again that parallelism is the way to solve this kind of challenge. For each data object, the firm’s engine generates and distributes specific metadata to speed up processing. The company calls this parallel in-storage analytics and promotes it as an alternative to data warehouses, many of which appear old, heavy and not aligned with modern processing and analytics goals.
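To make the “divide and conquer” idea concrete, here is a minimal sketch of metadata-driven parallel in-storage filtering. It is not AirMettle’s engine: the per-object min/max timestamp metadata, the `DataObject`, `scan` and `query` names, and the use of Python threads as stand-ins for storage nodes are all assumptions made for illustration. Objects whose metadata proves they cannot match are skipped, and the remaining ones are filtered in parallel before the partial results are merged.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class DataObject:
    """A stored object plus metadata computed once at write time (hypothetical layout)."""
    key: str
    rows: list  # non-empty list of (timestamp, value) tuples
    min_ts: int = field(init=False)
    max_ts: int = field(init=False)

    def __post_init__(self):
        # Per-object metadata: the timestamp range covered by this object.
        timestamps = [ts for ts, _ in self.rows]
        self.min_ts, self.max_ts = min(timestamps), max(timestamps)


def scan(obj, lo, hi):
    """Filter one object where it lives; only matching rows leave the node."""
    return [row for row in obj.rows if lo <= row[0] <= hi]


def query(objects, lo, hi, workers=4):
    # Prune: skip objects whose metadata proves they hold no matching rows.
    candidates = [o for o in objects if o.max_ts >= lo and o.min_ts <= hi]
    # Divide and conquer: scan surviving objects in parallel (threads stand in
    # for storage nodes here), then merge the partial results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda o: scan(o, lo, hi), candidates))
    return sorted(row for part in parts for row in part), len(candidates)


objects = [
    DataObject("a", [(1, 10), (5, 20)]),
    DataObject("b", [(10, 30), (15, 40)]),
    DataObject("c", [(20, 50)]),
]
rows, scanned = query(objects, 9, 16)
print(rows, scanned)  # [(10, 30), (15, 40)] 1
```

Only one of the three objects is actually scanned; in a real system the pruning metadata is what avoids reading, moving and decoding most of the data set.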

The S3 Select API can be used to query tabular data stored on the AirMettle analytical storage platform. Typical use cases are SIEM, IoT or NLP, for instance to scan for specific historical events or keywords. The product supports Parquet as a file format and significantly accelerates Presto, Spark and Arrow processing.
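As an illustration of what such an S3 Select call looks like from a client, the sketch below builds the keyword arguments for the standard boto3 S3 `select_object_content` operation, here for a SIEM-style keyword scan over a Parquet object. The bucket, key, column names (`ts`, `host`, `message`) and the endpoint URL are hypothetical, and whether a given S3-compatible platform accepts exactly these serialization options is an assumption to verify against its documentation.

```python
def build_s3_select_request(bucket: str, key: str, keyword: str) -> dict:
    """Keyword arguments for boto3's S3 `select_object_content` call.

    Bucket, key and column names (ts, host, message) are hypothetical.
    """
    # Double any single quote so the keyword stays a valid SQL string literal.
    safe = keyword.replace("'", "''")
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        # S3 Select SQL always reads FROM S3Object; columns come from the Parquet schema.
        "Expression": (
            "SELECT s.ts, s.host, s.message FROM S3Object s "
            f"WHERE s.message LIKE '%{safe}%'"
        ),
        "InputSerialization": {"Parquet": {}},
        "OutputSerialization": {"JSON": {"RecordDelimiter": "\n"}},
    }


params = build_s3_select_request("siem-logs", "2023/10/events.parquet", "failed login")

# Against a real S3-compatible endpoint (requires boto3 and credentials):
# s3 = boto3.client("s3", endpoint_url="https://analytics.example.internal")
# for event in s3.select_object_content(**params)["Payload"]:
#     if "Records" in event:
#         print(event["Records"]["Payload"].decode())
```

The point of the pattern is that filtering happens server-side: only matching records travel back to the client.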

Competition is significant, with highly visible leaders, innovators and more recent players such as Oracle, Cloudera, Databricks, Snowflake or Yellowbrick, and potentially even the discreet Ocient. Some storage vendors try to build this kind of connection with the upper layers, while players from the top try to leverage and optimize the storage foundation, but these clearly remain 2 distinct entities, and this gap is exactly what AirMettle targets with its analytical storage.

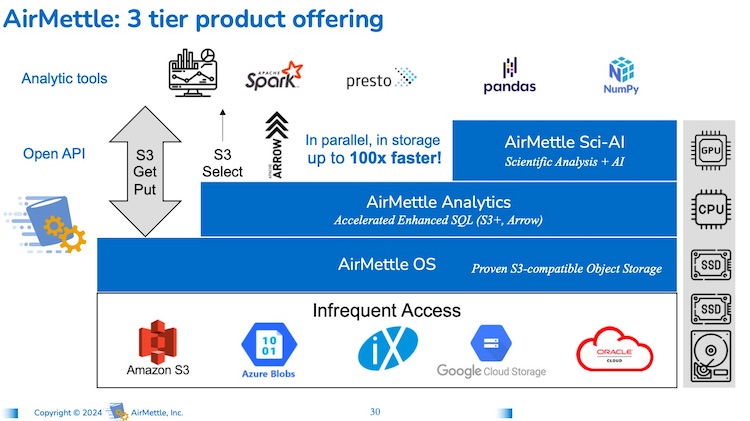

The company wants to fill the middle layer between analytics/data warehouses and object storage with a platform that couples both worlds in one entity, running on-premises or in the cloud. The object storage layer provides an S3-compatible interface and relies on large-scale clusters, with data protected by erasure coding and replication. These clusters are federated and tied together by an analytical layer queryable via S3 Select, Presto, Pandas, Spark or Arrow, supporting various formats like Parquet, JSON… and on top sits another layer with AI.
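The article does not say which protection schemes AirMettle uses, so the following is only a worked comparison of the two techniques it names, with 3-way replication and an 8+3 erasure code picked as illustrative assumptions. It shows why large-scale clusters tend to favor erasure coding for capacity efficiency.

```python
from fractions import Fraction


def replication_overhead(copies: int) -> Fraction:
    """Raw-to-usable capacity ratio for N full copies; survives copies - 1 losses."""
    return Fraction(copies)


def erasure_coding_overhead(k: int, m: int) -> Fraction:
    """Raw-to-usable ratio for k data + m parity fragments; survives any m losses."""
    return Fraction(k + m, k)


usable_pb = 10
print("3-way replication:", usable_pb * replication_overhead(3), "PB raw")  # 30 PB
print("8+3 erasure coding:", float(usable_pb * erasure_coding_overhead(8, 3)), "PB raw")  # 13.75 PB
```

Both schemes here tolerate losses (2 for replication, 3 for 8+3), but erasure coding needs less than half the raw capacity, at the price of more computation on writes and rebuilds.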

Gary Grider, HPC division leader at LANL and a partner of many companies, has already joined the AirMettle wave as the first public early reference, announced in October 2023, even though the product is not yet available, GA being planned for 2Q24.

The company will use direct and indirect sales models, with system integrators like iXsystems listed as an early partner, government facilitators, and cloud services like AWS S3, Azure Blob, Google Cloud Storage or Oracle Cloud. Pricing will be based on a subscription plus per-API-call charges for fully managed services.

Cerabyte

Cold storage is hot, I should say once again, as we have seen in the past with several attempts to develop and promote new media. This Austrian company, founded in 2022, has the mission to develop a new ceramic-based medium along with the associated technologies for write and read operations and the data format.

As pressure continues to place cold data on the right technology, it makes sense to develop cold storage. But be careful: not all cold data requires cold storage, even if both belong to the secondary storage category. Cold data comes in multiple flavors: a backup image, a file no longer accessed after 30, 60 or 90 days, or of course an archive data set that is cold by nature. Each should be stored on the right storage subsystem, and the market offers various solutions dedicated to each of them. This is important to understand, as some confusion exists on the market with vendors overplaying the cold data effect. Keep in mind that only a fraction of cold data is kept on cold storage.
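The distinction between cold data and cold storage can be sketched as a placement policy. This is a hypothetical illustration, not any vendor’s product: the data set kinds, tier names and the 90-day default threshold (one of the 30/60/90-day examples above) are assumptions.

```python
def placement(kind: str, idle_days: int, idle_threshold: int = 90) -> str:
    """Suggest a storage subsystem for one data set (illustrative policy only)."""
    if kind == "archive":
        # Archives are cold by nature and need long retention: the cold storage case.
        return "cold storage"
    if kind == "backup":
        # Backup images are cold too, but usually land on a dedicated backup target.
        return "backup storage"
    if idle_days >= idle_threshold:
        # Inactive files may still be recalled, so a capacity tier fits better.
        return "capacity tier"
    return "primary storage"


datasets = [("file", 10), ("file", 120), ("backup", 200), ("archive", 400)]
targets = [placement(kind, days) for kind, days in datasets]
# Three of the four data sets are cold, but only the archive goes to cold storage.
print(targets)  # ['primary storage', 'capacity tier', 'backup storage', 'cold storage']
```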

So what exactly is cold storage? A first, quick definition relates to energy or electricity consumption and the passive nature of the media. Examples are tape, optical, or any medium or process with that property. We have seen many DNA storage articles recently, showing lots of potential. The list also includes spun-down or powered-down HDDs: we remember MAID (Massive Array of Idle Disks) solutions from Copan Systems, Nexsan, NEC, Fujitsu or Disk Archive, or the very recent Leil Storage from Estonia. Another key dimension is the required retention time and, for some media, the need to refresh and migrate data to newer media. Durability is an essential property, as the media has to resist temperature, radiation, UV or EMP exposure.

Cerabyte is developing a new medium built on several key principles: it must be durable, able to span multiple decades or even centuries without content migration, alteration-resistant, WORM by nature and fully passive. The medium is based on glass sheets with a ceramic coating in which very small data structures are laser-engraved, which eases scalability. The other fundamental element of the solution is the writing and reading process. Data is written to the medium with a femtosecond laser whose parallel beams deliver up to 2 million bits in one shot. Reading couples light and a microscope, so, as you guessed, there is no contact with the medium in either operation. Density is a key metric to store exabytes of data in a reasonable number of data center racks. Other critical metrics are, of course, speed and cost. The reference here is tape, today’s preferred and widely adopted medium for cold storage. The goal is best summarized by the following statement: storing data at near-zero energy cost over the lifetime of the media.
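A quick back-of-the-envelope calculation puts the “2 million bits in one shot” figure in perspective. The sketch assumes every shot writes the full 2 million bits and ignores error correction and format overhead, so real shot counts would be higher; no shot rate is given in the article, so none is assumed.

```python
BITS_PER_SHOT = 2_000_000  # "up to 2 million bits in one shot", per the description above


def shots_needed(payload_bytes: int, bits_per_shot: int = BITS_PER_SHOT) -> int:
    """Minimum laser shots to write a payload, ignoring ECC and format overhead."""
    bits = payload_bytes * 8
    return -(-bits // bits_per_shot)  # ceiling division


print(BITS_PER_SHOT // 8)    # 250000 bytes (250 KB) per shot at most
print(shots_needed(10**12))  # 4000000 shots for one (decimal) terabyte
```

At exabyte scale this becomes billions of shots, which is why write parallelism matters as much as density for competing with tape.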

So far, we had the opportunity to see a prototype in Aachen, Germany, and we expect new iterations in the coming months.

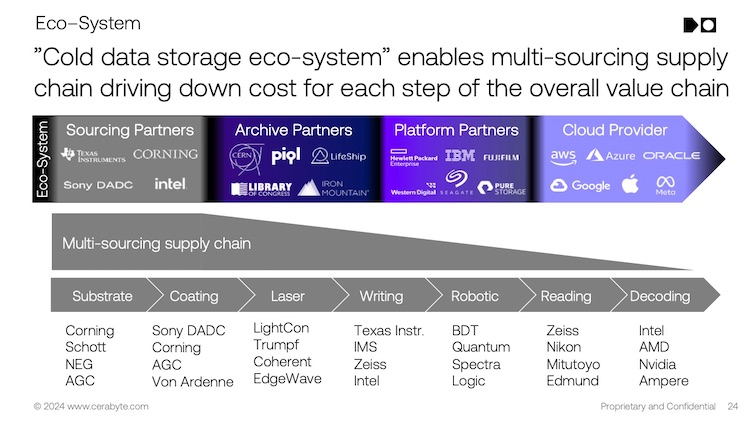

Cerabyte’s ultimate goal is to replace tape, as the solution would address and solve tape’s limiting factors. Looking at market numbers, the opportunity is huge. The real questions are the time needed to develop and release a mature solution and how potential competitors will react. Industrial partners are also paramount here, as the glass, for instance, won’t be produced by Cerabyte, of course, nor the laser, microscope, media library, robotics… so the partner ecosystem and sourcing supply chain are already a serious aspect of this project.

We invite you to listen to the interview with Christian Pflaum, CEO of Cerabyte, available here.

CloudFabrix

We met the company exactly one year ago, at the same time of year, and could measure its progress. The team has continued to develop its observability engine, its integrations, especially with AI, and its partnerships.

For a few years now, the reality of IT has been hybrid, with varying proportions of cloud and on-premises deployments, including SaaS and edge. This certainly adds complexity, as users commonly want to manage all these configurations the same way and improve the customer experience. In this complex IT world, that experience has become the new real metric, what some people call a KPI, with all the control and measurement requirements around it. How can such complex deployments deliver a strong, sustainable level of service and, at the end of the day, keep their promises and support the business?

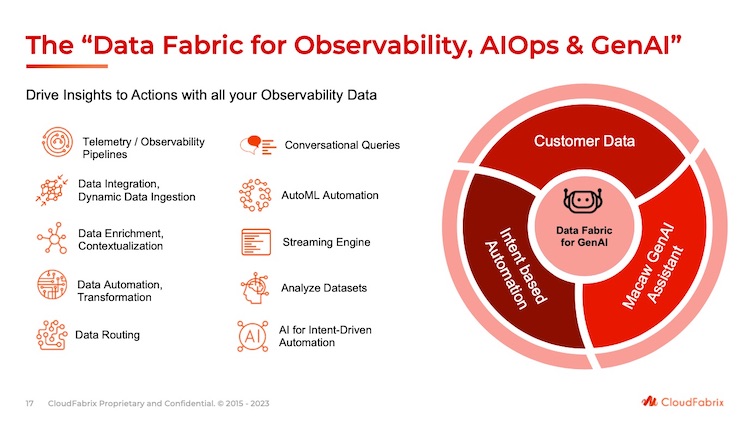

This is exactly where CloudFabrix’s mission lies: building a Data Fabric to unify independent silos and connect elements that are not connected, or, as some people say, connect the dots. This Data Fabric works as a horizontal data bus across all tiers and applications to collect, measure, observe, control and automate the behavior of the IT environment.

This approach confirms the need for this middleware layer and for IT data pipelines in a rich and comprehensive observability strategy. The industry started with AIOps, covering event correlation, predictive insights, incident management, auto-remediation with bots and specific FinOps modules, then added intelligent log engines, deep visibility and telemetry, to finally land on Full Stack Observability.
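Event correlation, the first AIOps building block mentioned above, can be illustrated with a deliberately simple rule. This is not CloudFabrix’s algorithm; production engines also use topology, service dependencies and learned patterns. The `Event` type, the source names and the 60-second window are assumptions: events from the same source arriving within the window of the previous event are folded into one incident, so operators see a few incidents instead of a flood of alerts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    ts: int        # seconds since epoch
    source: str    # emitting entity, e.g. a host or device name
    message: str


def correlate(events, window=60):
    """Group events from the same source that arrive within `window` seconds of the
    previous event in that source's open incident (a deliberately simple rule)."""
    incidents = []
    open_by_source = {}  # source -> index of its currently open incident
    for ev in sorted(events, key=lambda e: e.ts):
        idx = open_by_source.get(ev.source)
        if idx is not None and ev.ts - incidents[idx][-1].ts <= window:
            incidents[idx].append(ev)
        else:
            incidents.append([ev])
            open_by_source[ev.source] = len(incidents) - 1
    return incidents


events = [
    Event(0, "db-1", "disk latency high"),
    Event(30, "db-1", "query timeout"),
    Event(45, "web-2", "5xx spike"),
    Event(200, "db-1", "disk latency high"),
]
print([len(incident) for incident in correlate(events)])  # [2, 1, 1]
```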

This is where CloudFabrix plays, with the recent addition of generative AI through Macaw. The state of the art reaches a new level with Full Stack Observability, instantiated among others by Cisco and its FSO solution. The FSO model seems to be the right approach for an experience KPI that goes beyond availability and performance KPIs. The market is huge, evaluated at $34 billion according to some analysts.

The Macaw generative AI assistant adds real value to incident management, giving the ability to run rapid queries and generate charts and pipelines via a conversational interface.

The company has signed significant deals, among them one with a very large telco operator covering edge, cloud and core, a really good example of the horizontal flavor of its approach. IBM also collaborates with the vendor through its consulting group. Cisco being a close partner, the integration is very deep, with dedicated CloudFabrix modules and OTEL data enrichment and ingestion features. We see modules for vSphere and SAP, but also campus analytics, asset and operational intelligence, and infrastructure observability. In addition, the go-to-market model includes global system integrators such as WWT, DXC Technology or Wipro.

As a close partner of Cisco, the company has seen AppDynamics and ThousandEyes in use and follows the pending acquisition of Splunk alongside the FSO – Full Stack Observability – platform. The team sees real market traction and, having already sold 2 companies to Cisco, the management team may well expect a similar destiny this time; we’ll see.

Graid Technology

The team chose The IT Press Tour to launch its new RAID card, the SupremeRAID SR-1001, now adopting a desktop-to-cloud strategy.

The company, founded in 2020 by Leander Yu, has raised around $22 million so far, including a small mezzanine round last year, and does not appear to need a new round. With around 60 employees, it is doing very well, with many certifications, validations, partnerships and OEMs supporting strong market penetration. To illustrate this, the company posted +475% Y/Y growth between 2022 and 2023.

As a reminder for our readers, Graid designed and developed a PCIe Gen 4 card with a GPU on it that handles all RAID processing for the NVMe SSDs connected to the system. The idea is to offload the intensive parity calculations and rebuild processing to a dedicated entity, freeing CPU cycles. This approach, already chosen in other domains, has delivered significant gains, and the Graid model illustrates it perfectly for online data protection needs.
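To show what “parity calculation and rebuild” means, here is a minimal sketch of single-parity (RAID 5 style) XOR math, the kind of byte-crunching such a card offloads from the CPU. It is not Graid’s implementation, whose GPU kernels are not public, and dual-parity RAID 6 adds a second, Reed-Solomon-style parity that is not shown; the function names are illustrative.

```python
def xor_blocks(blocks: list) -> bytes:
    """Byte-wise XOR of equally sized blocks: the single-parity function."""
    out = bytearray(len(blocks[0]))
    for blk in blocks:
        for i, b in enumerate(blk):
            out[i] ^= b
    return bytes(out)


def write_stripe(data_blocks: list) -> list:
    # The parity block is stored next to the data blocks, on another drive.
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe: list, lost: int) -> bytes:
    # XOR of all surviving blocks (data and parity) regenerates the missing one.
    survivors = [blk for i, blk in enumerate(stripe) if i != lost]
    return xor_blocks(survivors)


stripe = write_stripe([b"AAAA", b"BBBB", b"CCCC"])
print(rebuild(stripe, 1))  # b'BBBB'
```

Every write recomputes parity and every rebuild reads and XORs all surviving drives, which is why this work becomes a CPU bottleneck with fast NVMe SSDs and is a natural candidate for offload.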

Classic RAID implementations, whether hardware or software, seem to be reaching their limits, especially with high-capacity HDDs and SSDs, and users have realized it, particularly with standalone software. The main issue is that this kind of software is not a product but rather a feature or a tool that must be embedded in a more global solution. The other usual model of course remains the dedicated controller, as Adaptec built many, many years ago, a segment essentially covered today by Broadcom cards. The offload idea is established, but its ultimate form is realized with a GPU.

That’s why I’m always very surprised to see comparisons with software RAID, as the 2 have nothing in common except the data protection goal, which they deliver for really different use cases and performance levels. Graid has published results demonstrating significant performance differences between the 2 approaches.

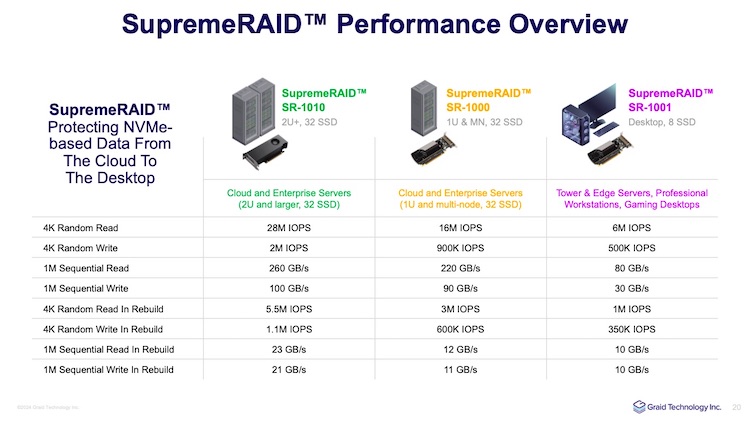

Already available for 1U and 2U servers in data centers and cloud infrastructure with the SupremeRAID SR-1000 and SR-1010, supporting up to 32 SSDs, the company just announced a new iteration dedicated to edge servers and workstations, the SR-1001. Supporting up to 8 SSDs, this one is more limited but largely sufficient for these environments.

The engineering team has also evolved the SR-1010, sometimes considered too large a board: the idea is to reduce its width to a single slot. They released the fanless kit for the SR-BUN-1010 and removed some elements from the card.

The table below displays characteristics and performance benefits for each SupremeRAID card.

Dual-controller configurations are also supported in active-active or active-passive mode. In the active-active case, each card handles 16 SSDs, splitting between the 2 cards the 32-SSD maximum of a single card.

We mostly mention direct NVMe connections here, but the SupremeRAID card supports NVMe-oF as well. This opens the door to new topologies across compute and storage servers, as both target and initiator modes can be activated.

The development team has built a UEFI boot utility, available for Linux today, that helps create mirrored system disks. With updated BIOS settings and the Linux OS installer, the tool activates RAID-1 during the boot process. Today the utility supports only Linux, with CentOS, Rocky, Alma, RHEL, Oracle, SUSE and openSUSE, a Windows version being under evaluation. The utility is available now, and OEM partners will receive the source code for integration under a similar ELA license model.

We learned that a big OEM and big distributors should be announced soon, which illustrates Graid’s adoption rate in the industry. We wouldn’t be surprised to see M&A discussions with some players: they could come from Intel, AMD, Nvidia, specialized board manufacturers or even server vendors, for whom it would be a real differentiator.

Groq

As an education effort, the event organizer secured a meeting with Jonathan Ross, CEO of Groq, to understand the company’s AI approach with its own processor, architecture and software, and also to learn more about market perspectives, business needs and user expectations. When we came across the firm at SC23 it was a curiosity, the idea of meeting the company was born there, and we now better understand what they do.

Ross has a true founder’s DNA with a single mission. He spent time at Google, where he invented the Tensor Processing Unit, or TPU. He co-founded Groq in 2016, and the company has raised more than $360 million so far. The session we had was amazing, spectacular even, illustrating what they develop with their chat solution. Ross started with the genesis and justification of generative AI, saying that tokens are to this age what oil was to the transportation age or bytes to the information age. All market indicators and predictions are fully green, with massive hype and growth potential.

AI has essentially 2 phases, training and inference. The GPU is good at training, with long run times and high parallelism over tons of data, and is today’s preferred choice. But the GPU, as its first letter confirms, was not designed for AI and does not appear to be the right choice for inference. Inference is another story, as “low latency inference is sequential”, and Groq has chosen a dedicated approach, which still requires multi-rack scale.
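Why is “low latency inference sequential”? In autoregressive generation, each new token depends on all the tokens produced before it, so the steps of one response cannot be parallelized against each other, and per-token latency multiplies by the output length. The sketch below uses a toy next-token function in place of a real model, and the 20 ms and 2 ms per-token figures are hypothetical, not Groq or GPU benchmarks.

```python
def generate(next_token, prompt, n_new):
    """Greedy autoregressive decoding: step i needs every token from steps < i,
    so the steps of one response cannot run in parallel with each other."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(next_token(tokens))
    return tokens


def response_latency_ms(n_new: int, per_token_ms: float, prefill_ms: float = 0.0) -> float:
    # Decode latency scales linearly with output length, so per-token latency dominates.
    return prefill_ms + n_new * per_token_ms


# Toy "model": the next token is the sum of the last two, modulo 10.
print(generate(lambda t: (t[-1] + t[-2]) % 10, [1, 1], 5))  # [1, 1, 2, 3, 5, 8, 3]
print(response_latency_ms(500, 20.0))  # 10000.0 ms at 20 ms/token
print(response_latency_ms(500, 2.0))   # 1000.0 ms at 2 ms/token
```

Throughput can be raised by batching many users together, but the latency seen by a single user is bounded by how fast one token follows another, which is the dimension a dedicated inference architecture targets.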

For LLMs, and to support their rapid growth, the vendor has built the entire stack: the architecture, the processing unit called the LPU or Language Processing Unit, the card, the rack… and the GroqWare software, considered the company’s key focus despite all the other developments. This translates into development power given to the developers and users of such generative AI.

Groq’s mission is simple: drive the cost of compute to zero.

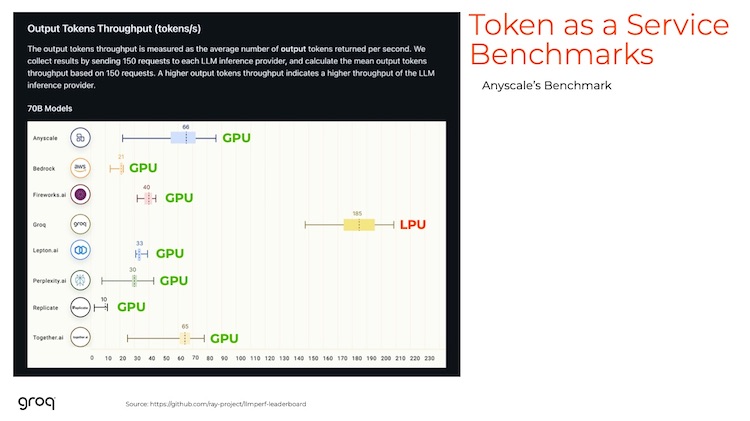

Benchmarks show clear results in favor of the LPU compared to the GPU across various dimensions: latency and development time, token throughput, energy consumption and cost.

The concept relies on a massive-scale architecture starting with the GroqCard, a PCIe Gen 4 x16 card, with 8 LPUs installed in a 2U compute server equipped with AMD EPYC CPUs, running Ubuntu Linux and coupled with the GroqWare software. An LPU supports large memory with high bandwidth and is connected to other LPUs via direct network links, so there is no extra hop, which contributes to the high performance of the global system.

This server is connected to a 2U storage server with 24 NVMe SSDs, and together they form a 4U GroqNode. A GroqRack is configured with 9 GroqNodes, 8 active and 1 on standby, and you can of course add more racks, all interconnected. The company has signed key partners like BittWare, Samsung and GlobalFoundries to produce the final products and deliver them to market.

With the current first silicon generation, a GroqNode embeds 8 LPUs, for a total of 264 LPUs over 4 racks. The roadmap shows a next iteration with double density, reaching 4,128 LPUs over 33 racks.

Hammerspace

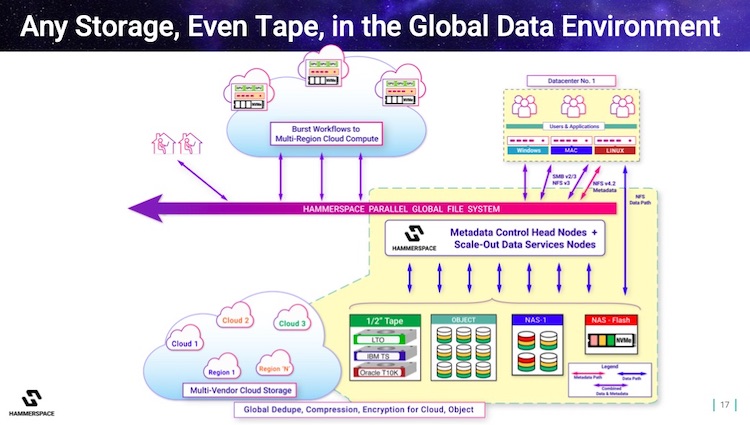

The company has clearly accelerated since our last meeting, with a variety of strategic events such as the Rozo Systems acquisition, a pNFS reference architecture for AI workloads, key recruitments like Mark Cree as SVP of strategic partnerships, and the addition of tape environments to its global file landscape via an S3-to-tape extension.

The team now counts around 120 people and is growing fast.

On GDE – Global Data Environment – the message is clear, with new successes validating a pretty unique data sharing model. The firm arrived at the right time to deliver its NFV – Network File Virtualization – and NFM – Network File Management – solution, what the team calls data orchestration. It addresses what enterprises know as a real nightmare: disk file systems, network file systems and distributed ones, all independent and not linked at all. Sitting above all these silos, the approach allows easy and transparent access to data wherever it resides, locally or remotely, on-premises or in the cloud. GDE decouples files from their physical location on disk file systems, exposes them via industry-standard file sharing protocols like NFS and SMB, and presents a large, ubiquitous virtual file system. The technology is now in its fifth generation.

One core element is the central real-time database, let’s say catalog, residing on the metadata server and built on the RocksDB key-value store, an engine contributed to the open source community by Meta.

Beyond this global access, this solution offers advanced data services such as migration, replication, tiering and specific data placement.
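The decoupling of a global namespace from physical placement can be sketched with a toy catalog. This is not Hammerspace’s schema: a Python dict stands in for the key-value store, and the class names, paths and backend names are hypothetical. The point it illustrates is that replication and migration only change placement metadata, while clients keep using the same virtual path.

```python
from dataclasses import dataclass, field


@dataclass
class FileRecord:
    size: int
    locations: list  # backends currently holding a copy


@dataclass
class GlobalCatalog:
    """Toy global namespace mapping virtual paths to physical placement.
    A dict stands in for the key-value store; backend names are hypothetical."""
    entries: dict = field(default_factory=dict)

    def create(self, path, size, backend):
        self.entries[path] = FileRecord(size, [backend])

    def locate(self, path):
        # Clients always use the same virtual path, wherever the bytes live.
        return list(self.entries[path].locations)

    def replicate(self, path, backend):
        rec = self.entries[path]
        if backend not in rec.locations:
            rec.locations.append(backend)

    def migrate(self, path, src, dst):
        # Tiering/migration moves bytes behind the namespace: the path never
        # changes, only the placement metadata does.
        rec = self.entries[path]
        rec.locations.remove(src)
        if dst not in rec.locations:
            rec.locations.append(dst)


cat = GlobalCatalog()
cat.create("/projects/sim/run1.h5", 10**9, "nas-onprem")
cat.replicate("/projects/sim/run1.h5", "s3-cloud")
cat.migrate("/projects/sim/run1.h5", "nas-onprem", "tape-archive")
print(cat.locate("/projects/sim/run1.h5"))  # ['s3-cloud', 'tape-archive']
```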

Hammerspace is a very good example of the U3 – Universal, Unified and Ubiquitous – storage introduced 10 years ago.

The second key topic beyond GDE is the parallel file system angle, with pNFS and the active role Hammerspace plays in NFS standard development and promotion. An important aspect is RDMA support with NFSv4.2, which puts all the NFS-based solutions on the market in perspective. The team continues to insist on the value of the Flex Files layout, invented more than 10 years ago. The company has published a reference architecture leveraging pNFS with large numbers of client machines, CPUs and GPUs, and data servers with NVMe SSDs coupled with disk file systems and exposed via NFSv3. We expect feedback from users on this design, which couples both worlds: the power of a standard like NFS and the beauty of parallel access. And again, Los Alamos National Labs, with Gary Grider, is in the loop here, helping Hammerspace beyond LANL’s MarFS approach and its need for horizontal file access.
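The core of parallel access in pNFS is that the metadata server hands the client a layout, and the client then talks to the data servers directly. The sketch below shows only the generic striping arithmetic such a layout implies, turning one byte range into per-data-server requests that can be issued in parallel. The `Layout` fields are illustrative, not the RFC 8435 Flex Files XDR structures; mirroring and the data-server-side offset mapping are deliberately omitted, and this is not Hammerspace’s code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """Simplified striped layout handed to a client (illustrative fields only)."""
    stripe_unit: int     # bytes per stripe unit
    data_servers: tuple  # e.g. ("ds0", "ds1", "ds2")


def plan_read(layout, offset, length):
    """Split a file byte range into (data_server, file_offset, length) requests
    that the client can issue in parallel, without going through the MDS."""
    requests = []
    end = offset + length
    while offset < end:
        unit = offset // layout.stripe_unit
        ds = layout.data_servers[unit % len(layout.data_servers)]
        unit_end = (unit + 1) * layout.stripe_unit
        chunk = min(end, unit_end) - offset
        requests.append((ds, offset, chunk))
        offset += chunk
    return requests


print(plan_read(Layout(100, ("a", "b")), 150, 200))
# [('b', 150, 50), ('a', 200, 100), ('b', 300, 50)]
```

Because consecutive stripe units land on different data servers, aggregate bandwidth grows with the number of servers, which is the “beauty of parallel access” on top of standard NFS.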

Another unique effect when users adopt Hammerspace is the combination of GDE with pNFS, potentially extending pNFS to other and new client machines.

Introduced during SC23 and extended more recently, the tape extension comes as an S3-to-tape feature. The team didn’t reinvent the wheel and selected 3 key established partners, QStar Technologies, Grau Data and PoINT Software and Systems, among the 10 active ones. This is strategic, as the company targets high performance computing and research centers, which are historical tape users with huge configurations. We’ll see if HPSS, well established at these customers, will be added, and the same goes for Versity.

Business results are visible, with very large clusters deployed, 8-figure annual software licensing revenue and an active deal pipeline. 2023 will remain the year the business really took off.

The company was selected by Coldago Research and is listed as a specialist and a challenger in the recent Map 2023 for File Storage, in the high performance and cloud file storage maps respectively.

HYCU

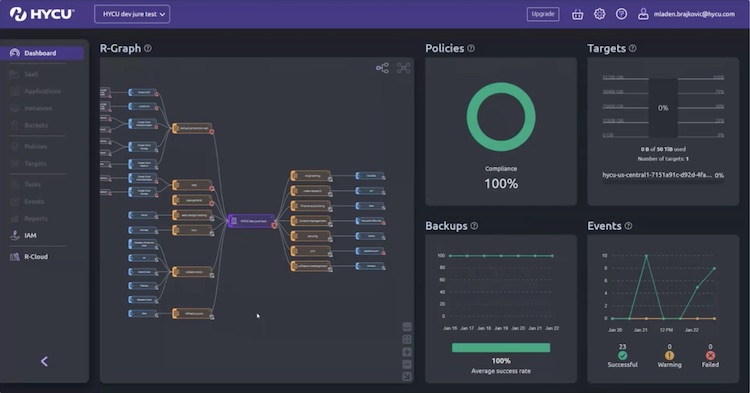

This was the ninth participation for HYCU, which once again chose The IT Press Tour to launch a new product iteration. We all remember the R-Cloud launch in January 2023 in San Jose, CA, which really set the pace in the SaaS application data protection market. We recognize the company’s pioneering role in this domain, having identified a key market trend with cloud and the hype and real boom around SaaS.

This radically new backup model offers the capability to develop a dedicated, tailored module integrated with and controlled by the HYCU platform. It comes with R-Graph and the marketplace, which show all the successful integrations available, approaching 100 today as we understand it.

Now the team has raised the bar once again by integrating generative AI into module development, significantly accelerating this phase.

The firm picked the Claude AI service from Anthropic, and we have to admit that the demonstration made during the session was pretty impressive. Launched here as part of R-Cloud, this AI-powered assistant drastically eases the development of application modules and lets users protect applications that were not covered before. The first 2 examples of such protection are Pinecone and Redis, 2 very famous vector databases used in AI environments. This initiative joins the low-code wave and illustrates the new need for rapid development in this domain, now possible with generative AI integrations.

With all the hype around generative AI, seeing a real application of such technology represents a major step forward.

One of the battles in the SaaS application data protection domain remains application coverage, as users aim to limit the number of solutions to reduce cost and complexity and improve SLAs.

Beyond bare metal, VMs, containers and cloud, this model has contributed to redefining the domain as a new category named Modern Data Protection.

We can measure all the progress made by HYCU through several key iterations, especially around AWS, Azure and Google Cloud and their associated data services like EC2, EBS, S3, Lambda, RDS, DynamoDB, Aurora, Azure Compute, GCE, GCS, GKE, AlloyDB, BigQuery, CloudSQL, but also VMware and Nutanix, and other applications relying on these public clouds such as Asana, Atlassian Confluence and Jira, ClickUp, Trello, Miro, Typeform, Terraform or Notion, and of course Google Workspace, Office365 or Salesforce. HYCU also added Backblaze B2 as an alternative S3 target. All protection performed on AWS can benefit from HYCU R-Cloud, especially the capability to restore granular items.

What HYCU has built over the last few years confirms the fast-growing need for data protection, as SaaS applications continue to explode with more and more users and data, and therefore high risk and loss exposure. To support active development, marketing efforts, partner development and market presence in the coming months, the company has recruited Angela Heindl-Schober as its new marketing executive.

The big news announced last week between Cohesity and Veritas Technologies around NetBackup confirms that old players, and even more recent ones (Cohesity was founded 10 years ago), have difficulties addressing SaaS application data protection challenges. We’ll see if this move triggers reactions from the competition, such as new M&As.

Rimage

A reference in CD, DVD and Blu-ray duplication for years, the company has transformed its business. It has secured more than 3,000 clients, with names like Cisco, Audi, HP, GE, IBM, Boeing, Siemens, Wells Fargo, Walmart or Airbus, through an indirect channel strategy with 44 partners in the Americas, 94 in EMEA and 56 in APAC.

Founded in 1978 and traded on the Nasdaq for several years, Rimage was acquired by Equus Holdings in a private equity move.

Back to its roots: as mentioned, the company was one of the leaders in optical-based secondary storage, but the market pushed the firm to evolve rapidly, essentially due to cloud pressure, the drastic reduction of on-premises solutions and ubiquitous software. This led the company to reinvent itself and add data-oriented software solutions to optimize data management, the data lifecycle and the associated compliance and governance, and to provide ways to leverage this data as information, with a constant focus on energy savings and cost reduction.

This is why Rimage started its data stewardship initiative with essentially 2 solutions: Enterprise Laser Storage (ELS) and Data Lifecycle Management (DLM).

First, ELS is an optical-based storage solution developed to store data on immutable media for centuries. This is mandatory in various industries, requested by government bodies and required by laws and regulations. At the same time, with the AI tsunami coming, the need to secure data in a WORM format has never been so urgent. AI engines and AI-based software could potentially modify, alter or change any data without notice or trace. This is a real threat to reference data, and ELS and similar solutions are one of the key answers to this potential erosion of trust. The approach is pretty similar to the ransomware case, where an air-gap strategy is needed.
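The two properties at stake, refusing any overwrite and detecting silent alteration, can be sketched in software. This toy `WormStore` class is hypothetical and only emulates WORM behavior: code like this can itself be bypassed, which is precisely why physically immutable media such as optical discs enforce the guarantee in the medium rather than in software. The SHA-256 digest recorded at write time is what exposes any later change.

```python
import hashlib


class WormStore:
    """Toy write-once-read-many store: objects can be added but never overwritten,
    and a digest recorded at write time exposes silent alteration."""

    def __init__(self):
        self._data = {}
        self._digests = {}

    def write(self, key: str, payload: bytes) -> str:
        if key in self._data:
            raise PermissionError(f"{key!r} is immutable (WORM)")
        digest = hashlib.sha256(payload).hexdigest()
        self._data[key] = payload
        self._digests[key] = digest
        return digest

    def read(self, key: str) -> bytes:
        payload = self._data[key]
        # Re-hash on every read so any change to the stored bytes is detected.
        if hashlib.sha256(payload).hexdigest() != self._digests[key]:
            raise ValueError(f"{key!r} failed integrity check")
        return payload


store = WormStore()
store.write("reference/contract-2024.pdf", b"original terms")
print(store.read("reference/contract-2024.pdf"))  # b'original terms'
```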

As a key player, Rimage has also chosen to partner with another reference in the domain, PoINT Software and Systems, famous for its jukebox management software.

Second, DLM is a software-based, fully agnostic solution covering the entire lifecycle of unstructured data.

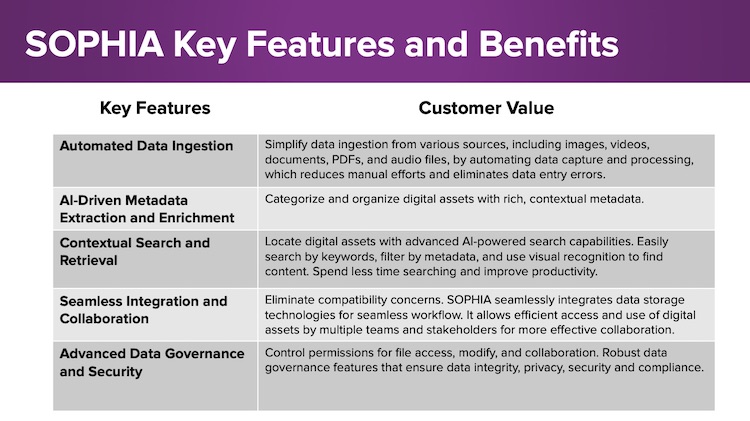

The company took advantage of the tour to launch Sophia, its AI-powered digital asset management solution and a key element of a data lifecycle management approach. The product captures data from any source via NFS, SMB, local file systems, S3, tape or optical, extracts metadata and other specific data, and builds a comprehensive catalog whatever the format and nature of the unstructured digital information. Extraction starts almost immediately and feeds the rich internal data catalog with tags. AI provides additional capabilities, adding contextual information and easy search. The solution supports any back-end storage, runs on-premises, in the cloud or as a hybrid configuration, and is very flexible in the ingestion process. Users interact through a simple web GUI, and the product can also be integrated with other solutions via a rich API. The solution also provides a global sync feature able to guarantee the presence of data in various places, which obviously adds some redundancy.
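The catalog-with-tags principle behind such a product can be sketched as an inverted index from tags to items. This is not Sophia’s design or API: the `Catalog` class, the URIs and the tagging rule are hypothetical, and deriving a tag from the file extension is a trivial stand-in for the AI-based metadata extraction described above.

```python
from collections import defaultdict
from pathlib import PurePosixPath


class Catalog:
    """Toy metadata catalog: per-item metadata plus an inverted index tag -> items.
    The extension-based tag is a stand-in for AI enrichment (assumption)."""

    def __init__(self):
        self.items = {}
        self.index = defaultdict(set)

    def ingest(self, uri: str, size: int, extra_tags=()):
        # Strip the scheme (s3://, nfs://, smb://...) so any source is handled alike.
        path = PurePosixPath(uri.split("://", 1)[-1])
        tags = {path.suffix.lstrip(".").lower(), *(t.lower() for t in extra_tags)}
        tags.discard("")
        self.items[uri] = {"name": path.name, "size": size, "tags": tags}
        for tag in tags:
            self.index[tag].add(uri)

    def search(self, *tags):
        # Items carrying all requested tags: intersection of the index entries.
        sets = [self.index.get(t.lower(), set()) for t in tags]
        return sorted(set.intersection(*sets)) if sets else []


cat = Catalog()
cat.ingest("s3://media/2023/launch.MP4", 5_000_000, ["product launch"])
cat.ingest("nfs://share/docs/launch-plan.pdf", 20_000, ["product launch"])
cat.ingest("smb://hr/policy.pdf", 10_000)
print(cat.search("product launch", "mp4"))  # ['s3://media/2023/launch.MP4']
```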

The team has designed an interesting pricing model combining data volume and concurrent users.

To maximize market penetration, Rimage is looking for new partners in vertical industries and in the MSP domain.

It may also make sense for the company to gain visibility by joining the Active Archive Alliance and to extend the partnerships that are key for DLM/DAM adoption.

We also heard that the company will introduce a new ELS solution in a few months, which really makes sense, as most users didn’t anticipate that AI could become the core engine of silent data alteration. This implicit threat could trigger a big tsunami, and WORM media is obviously one answer to this danger.

Listen to the interview with Christopher Rence, CEO of Rimage, available here.

StorageX

A recent player founded in 2020 by Steven Yuan, StorageX addresses in-place computing with a specific PCIe card. The idea is to process data where it resides, without moving it, thus avoiding runaway costs in time, network, power, resources in general and pure bottom line. Even with compute clusters, the classic model appears not to be aligned with new workloads and the explosion of data, as observed by StorageX management.
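The benefit of processing data where it resides can be quantified with simple arithmetic on bytes moved. In this hypothetical sketch (fixed-size records, a filter query, no result-encoding overhead), the host-side model ships every record across the link before filtering, while the pushed-down model ships only the matches, so the saving equals the query’s selectivity. The record count, size and 1% selectivity are illustrative assumptions, not StorageX figures.

```python
def bytes_moved(records, record_size, predicate, push_down):
    """Bytes crossing the network/PCIe link for one filter query.
    Host-side: every record is shipped, then filtered on the CPU.
    Pushed down: the filter runs next to the data; only matches are shipped."""
    if push_down:
        return sum(record_size for r in records if predicate(r))
    return len(records) * record_size


records = range(1_000_000)            # one million 1 KiB records (hypothetical)
one_percent = lambda r: r % 100 == 0  # a filter matching 1% of the records

host = bytes_moved(records, 1024, one_percent, push_down=False)
in_place = bytes_moved(records, 1024, one_percent, push_down=True)
print(host, in_place, host // in_place)  # 1024000000 10240000 100
```

The more selective the query, the larger the saving, which is the economic argument behind computational storage processors.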

The company designs and develops a board, embedded in x86 servers, to accelerate results in a disaggregated approach. This computational storage processor, or CSP, differs from computational storage devices in that the computing resides outside the device itself. It supports PCIe Gen 4 with 2 ports at 100Gb/s, is ready for RDMA and NVMe-oF, including over TCP, and includes an AI engine. There is no GPU but an FPGA on the board, and Yuan told us that an ASIC should be available in 2025.

Deployment is flexible, with the card installed in compute or storage servers and the option to network and couple boards together.

Yuan promotes StorageX’s approach as an XPU, aligned with various market initiatives around GPUs, DPUs, IPUs, TPUs… confirming that acceleration is extending beyond the CPU domain. At the same time, DPUs didn’t really take off, except for a few models. Annapurna Labs was acquired by AWS in 2015, Fungible by Microsoft in 2023 for just $190 million, and Nebulon or Pliops continue to develop their model a bit discreetly. The most visible example remains Nvidia’s SmartNIC with its BlueField product line, following the Mellanox acquisition in 2019. CSDs also had difficulties: we all remember NGD Systems, which disappeared, and only ScaleFlux appears to have a viable product.

The StorageX product seems to have some early traction with OEMs, as this product and similar ones are definitely targeting this business path…

On the company side, funding appears undisclosed but small, in a domain where investment is a must.

But we’re surprised that neither Data Dynamics, which owns StorageX, a data management solution on the market for approximately 2 decades, nor StorageX itself paid attention to this name conflict. They apparently did not anticipate it at all, seeing it as a very low risk. And even if the domain name has a .ai extension, we’re pretty sure Yuan will have to change the company name, given the prior use of the name. We expect news on that point in the coming months.
