Supermicro Launches Full Stack Optimized Storage Solution for AI and ML Data Pipelines

Scaling to hundreds of petabytes, the multi-tier solution provides the massive data capacity and high-performance data bandwidth that scalable AI workloads require.

This is a Press Release edited by StorageNewsletter.com on January 30, 2024 at 2:01 pm.

Supermicro, Inc. is launching a full-stack optimized storage solution for AI and ML data pipelines, covering everything from data collection to high-performance data delivery.

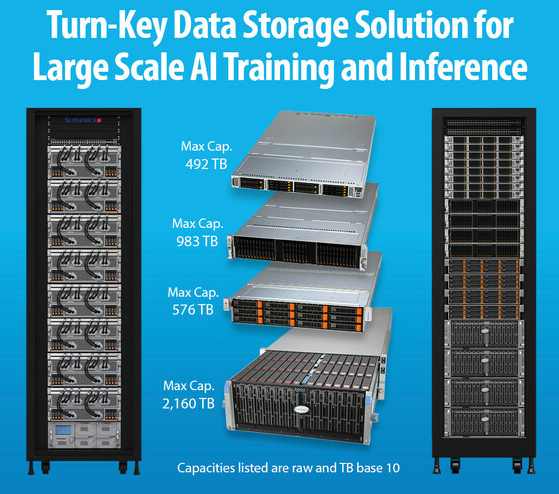

Turnkey storage solution for large-scale AI training and inference

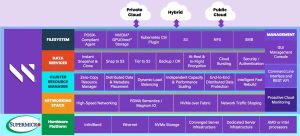

This solution maximizes AI time-to-value by keeping GPU data pipelines fully saturated. For AI training, massive amounts of raw data at petascale capacities can be collected, transformed, and loaded into an organization’s AI workflow pipeline. This multi-tiered solution from the company has been proven to deliver multi-petabyte data for AIOps and MLOps in production environments. The entire multi-rack-scale solution is designed to reduce implementation risks, enable organizations to train models faster, and put the resulting data to use for AI inference.

“With 20PB per rack of high-performance flash storage driving 4 application-optimized NVIDIA HGX H100 8-GPU based air-cooled servers or 8 NVIDIA HGX H100 8-GPU based liquid-cooled servers, customers can accelerate their AI and ML applications running at rack scale,” said Charles Liang, president and CEO, Supermicro. “This solution can deliver 270GB/s of read throughput and 3.9 million IO/s per storage cluster as a minimum deployment and can easily scale up to hundreds of petabytes. Using the latest Supermicro systems with PCIe 5.0 and E3.S storage devices and Weka Data Platform software, users will see significant increases in the performance of AI applications with this field-tested rack scale solution. Our new storage solution for AI training enables customers to maximize the usage of our most advanced rack scale solutions of GPU servers, reducing their TCO and increasing AI performance.”

Petabytes of unstructured data used in large-scale AI training must be available to the GPU servers with low latency and high bandwidth to keep the GPUs productive. The company’s portfolio of Intel- and AMD-based storage servers is a crucial element of the AI pipeline. It includes the firm’s Petascale All-Flash storage servers, which offer 983.04TB (*) of NVMe Gen 5 flash capacity per server and deliver up to 230GB/s of read bandwidth and 30 million IO/s. The solution also includes the 90-drive-bay SuperServer storage servers for the capacity object tier. This complete, tested solution is available worldwide for customers running ML, GenAI, and other computationally complex workloads.

Storage solution consists of:

- All-Flash tier: Petascale storage servers
- Application tier: 8U GPU servers AS -8125GS-TNHR and SYS-821GE-TNHR
- Object tier: Supermicro 90 drive bay 4U SuperStorage server running Quantum ActiveScale object storage
- Software: Weka Data Platform and Quantum ActiveScale object storage
- Switches: Supermicro IB and Ethernet switches

[Images: All-flash server SSG-121E-NE316R; SYS-821GE-TNHR appliance; Weka Data Platform object storage]

“The high performance and large flash capacity of Supermicro’s All-Flash Petascale Storage Servers perfectly complement Weka’s AI-native data platform software. Together, they provide the unparalleled speed, scale, and simplicity demanded by today’s enterprise AI customers,” said Jonathan Martin, president, Weka.IO Ltd.

Resource:

The company will present the optimized storage architecture in more detail in a webinar. Attend the Supermicro and Weka webinar live on February 1, 2024, or view it on demand (registration required).

(*) The raw value is based on a vendor raw base capacity of 30.72TB per E3.S SSD, where TB is base-10 (decimal). Availability of 30.72TB E3.S SSDs depends on the vendor.
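The footnote's figures can be cross-checked with a short calculation. The Python sketch below assumes that the 983.04TB per-server figure is simply the sum of identical 30.72TB drives. The release does not state a drive count, so the 32 drives derived here is an inference rather than a published specification, and the helper names are hypothetical. Because the footnote specifies base-10 TB, the sketch also converts the figure to binary TiB for comparison. Both values are raw capacity, before any data-protection overhead.

```python
# Hypothetical helpers for sanity-checking the press release's raw-capacity figures.
# Assumption: per-server capacity = drive count x per-drive raw capacity.

TB = 10**12   # base-10 terabyte, as the footnote specifies
TIB = 2**40   # base-2 tebibyte, for comparison


def implied_drive_count(server_raw_tb: float, drive_raw_tb: float) -> int:
    """Return how many identical drives add up to server_raw_tb."""
    # Convert to whole gigabytes so floating-point rounding cannot skew the division.
    server_gb = round(server_raw_tb * 1000)
    drive_gb = round(drive_raw_tb * 1000)
    count, remainder = divmod(server_gb, drive_gb)
    if remainder:
        raise ValueError("capacity is not a whole multiple of the drive size")
    return count


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


if __name__ == "__main__":
    drives = implied_drive_count(983.04, 30.72)
    print(f"Implied drives per server: {drives}")
    print(f"Raw capacity in TiB: {tb_to_tib(983.04):.2f}")
```

Under these assumptions, 983.04TB corresponds to 32 drives of 30.72TB each, or roughly 894 TiB in binary units.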
