NetApp Cloud Volumes Ontap Flash Cache Improves Cloud EDA Workflows in Google Cloud

A closer look at some Cloud Volumes Ontap features that use Google Cloud infrastructure to address application performance challenges while providing a solution for file storage in cloud computing

This is a Press Release edited by StorageNewsletter.com on December 28, 2023 at 2:00 pm

By Alec Shnapir, principal architect, Google Cloud, and Guy Rinkevich, principal architect, Google Cloud

Storage systems are a fundamental resource for developing technology solutions, essential for running applications and retaining information over time.

Google Cloud and NetApp, Inc. are partners in the cloud data services market, and we have collaborated on a number of solutions, such as bursting EDA workloads and animation production pipelines, that help customers migrate, modernize, and manage their data in the cloud. Google Cloud storage offerings provide a variety of benefits over traditional on-premises storage solutions, including simpler maintenance, scalability, reliability, and cost-effectiveness.

In this blog post, we’ll take a closer look at some of the NetApp Cloud Volumes Ontap (NetApp CVO) features that use Google Cloud infrastructure to address application performance challenges while providing the best solution for file storage in cloud computing.

Instance configuration types

Google Cloud offers a variety of compute instance configuration types, each optimized for different workloads. The instance types vary by the number of virtual CPUs (vCPUs), disk types, and memory size. These configurations determine the instance's IO/s and bandwidth limits.

CVO is a customer-managed software-defined storage (SDS) offering that delivers advanced data management for file and block workloads in Google Cloud.

Recently, NetApp introduced two more VM types to support CVO single-node and HA deployments: n2-standard-48 and n2-standard-64.

Choosing the right Google Cloud VM configuration for CVO deployment can affect the performance of your application in a number of ways.

For example:

- Using VMs with a larger vCPU core count enables concurrent task execution and improves overall system performance for certain types of applications.

- Workloads with high file counts and deep directory structures can benefit from a CVO VM configured with a large amount of system RAM, which lets it store and process larger amounts of data at once.

- The type of disk storage that your CVO VM uses will affect its I/O performance. The disk type you choose (e.g., Balanced PD vs. SSD PD) dictates the disk performance limits. Persistent disk performance scales with disk size and with the number of vCPUs on your VM instance, so the instance configuration determines the VM instance performance limits.

- The network bandwidth available to your CVO VM determines how quickly it can communicate with other resources in the cloud. Google Cloud accounts for bandwidth per VM, and a VM’s machine type defines its maximum possible egress rate.
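To make the interplay between disk limits and VM limits concrete, here is a minimal sketch of how the reachable IO/s could be estimated as the smaller of the two. The class names, the `effective_iops` helper, and every number below are hypothetical and chosen for illustration; they are not published Google Cloud or NetApp limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskProfile:
    """Hypothetical per-disk-type scaling figures (not published limits)."""
    iops_per_gb: float  # IO/s gained per GB of provisioned capacity
    max_iops: int       # per-disk ceiling regardless of size


@dataclass(frozen=True)
class VmProfile:
    """Hypothetical per-VM ceiling, which grows with the vCPU count."""
    vcpus: int
    max_iops: int


def effective_iops(disk: DiskProfile, size_gb: int, vm: VmProfile) -> int:
    """Return the IO/s the workload can actually reach.

    The disk contributes IO/s in proportion to its size, capped by the
    disk type's own ceiling; the VM limit then caps the result again.
    """
    disk_limit = min(size_gb * disk.iops_per_gb, disk.max_iops)
    return int(min(disk_limit, vm.max_iops))


# Illustrative numbers only; look up real limits for your disk and VM types.
balanced = DiskProfile(iops_per_gb=6.0, max_iops=80_000)
small_vm = VmProfile(vcpus=8, max_iops=15_000)
large_vm = VmProfile(vcpus=64, max_iops=80_000)

print(effective_iops(balanced, 1_000, large_vm))   # disk size is the bottleneck
print(effective_iops(balanced, 10_000, small_vm))  # VM limit is the bottleneck
```

In this model, adding disk capacity stops helping once the VM ceiling is reached, which is one reason a larger instance type can raise storage performance.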

Introducing NetApp Flash Cache

NetApp has also introduced Flash Cache for CVO, which reduces latency for accessing frequently used data and improves performance for random read-intensive workloads. Flash Cache uses high-performance local SSDs to cache frequently accessed data and augments the persistent disks used by the CVO VMs.

Flash Cache speeds access to data through real-time intelligent caching of recently read user data and NetApp metadata. When a user requests data that is cached in local SSD, it is served directly from the NVMe storage, which is faster than reading the data from a persistent disk.
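The read path described above can be sketched as a read-through cache placed in front of a slower backing store. This is only a conceptual illustration: the `ReadCache` class, the least-recently-used eviction policy, and the `fake_persistent_disk` function are assumptions made for the example, not a description of how Flash Cache is implemented.

```python
from collections import OrderedDict
from typing import Callable


class ReadCache:
    """A small LRU read cache standing in for the local SSD tier."""

    def __init__(self, capacity: int, read_from_disk: Callable[[int], bytes]):
        self.capacity = capacity
        self.read_from_disk = read_from_disk  # slow path: persistent disk
        self.blocks = OrderedDict()           # block_id -> cached data
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> bytes:
        if block_id in self.blocks:
            # Hit: serve from the cache and mark the block as recently used.
            self.blocks.move_to_end(block_id)
            self.hits += 1
            return self.blocks[block_id]
        # Miss: read from persistent disk, then keep a copy for next time.
        data = self.read_from_disk(block_id)
        self.misses += 1
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used
        return data

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def fake_persistent_disk(block_id: int) -> bytes:
    """Hypothetical stand-in for a read from persistent disk."""
    return f"block-{block_id}".encode()


cache = ReadCache(capacity=2, read_from_disk=fake_persistent_disk)
for block_id in [1, 2, 1, 3, 1, 2]:
    cache.read(block_id)
print(cache.hits, cache.misses, round(cache.hit_ratio, 2))
```

Only repeated reads of blocks that are still cached count as hits, so the benefit depends on how much of the working set fits in the cache.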

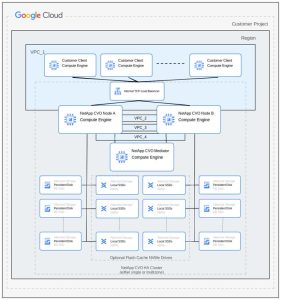

Figure 1: NetApp Cloud Volumes Ontap Flash Cache architecture

Storage efficiencies

Recently, NetApp introduced a temperature-sensitive storage efficiency capability (enabled by default), which allows CVO to apply inline block-level efficiencies, including compression and compaction.

- Inline compression. Compresses data blocks to reduce the amount of physical storage required.

- Inline data compaction. Packs smaller I/O operations and files into each physical block.

Inline storage efficiencies can mitigate the impact of Google Cloud infrastructure performance limitations by reducing the amount of data that needs to be written to disk, allowing CVO to handle higher application IO/s and throughput.
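As a rough illustration of why inline compression and compaction reduce the amount of data written, the sketch below compresses logical blocks and then packs the resulting small chunks into physical blocks. The 4 KiB block size, the use of `zlib`, and the first-fit packing strategy are all assumptions made for the example; the algorithms CVO actually uses are not described here.

```python
import zlib

PHYSICAL_BLOCK = 4096  # assumed physical block size in bytes


def compress_blocks(logical_blocks: list[bytes]) -> list[bytes]:
    """Inline compression: shrink each logical block before it is stored."""
    return [zlib.compress(block) for block in logical_blocks]


def compact(chunks: list[bytes], block_size: int = PHYSICAL_BLOCK) -> list[list[bytes]]:
    """Inline compaction: pack several small chunks into one physical block.

    Uses first-fit packing for brevity; a chunk larger than the block
    size is placed in a bin of its own.
    """
    bins: list[list[bytes]] = []
    free: list[int] = []  # remaining bytes in each bin
    for chunk in chunks:
        for i, room in enumerate(free):
            if len(chunk) <= room:
                bins[i].append(chunk)
                free[i] -= len(chunk)
                break
        else:
            # No existing bin has room, so open a new physical block.
            bins.append([chunk])
            free.append(max(block_size - len(chunk), 0))
    return bins


# Eight highly compressible 4 KiB logical blocks (illustrative data only).
logical = [bytes([i]) * PHYSICAL_BLOCK for i in range(8)]
packed = compact(compress_blocks(logical))
print(f"{len(logical)} logical blocks -> {len(packed)} physical block(s)")
```

Real data compresses far less uniformly than this synthetic input, so the savings shown here are an upper-end illustration rather than an expected result.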

Performance comparison

Following our previous 2022 blog, in which we discussed bursting EDA workloads to Google Cloud with NetApp CVO FlexCache, we reran the same tests using a synthetic EDA workload benchmark suite, this time with both high write speed and Flash Cache enabled. The benchmark was developed to simulate real application behavior and real data processing in file system environments; each workload has a set of pass/fail criteria that must be met to pass the benchmark.

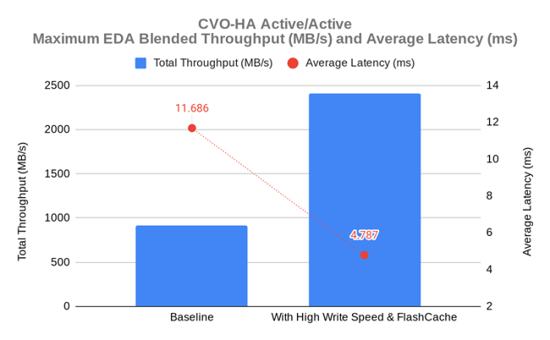

The results in Figure 2 show the performance of the top valid (successful) run using a CVO HA active/active configuration. Using high write speed mode in conjunction with Flash Cache yields a ~3x improvement in performance.

Figure 2: NetApp CVO performance testing

In addition, to present the potential performance benefits of the new CVO features, we worked with the NetApp team and used a synthetic load tool to simulate widely deployed application workloads and compare different CVO configurations, each loaded with a unique I/O mixture and access pattern.

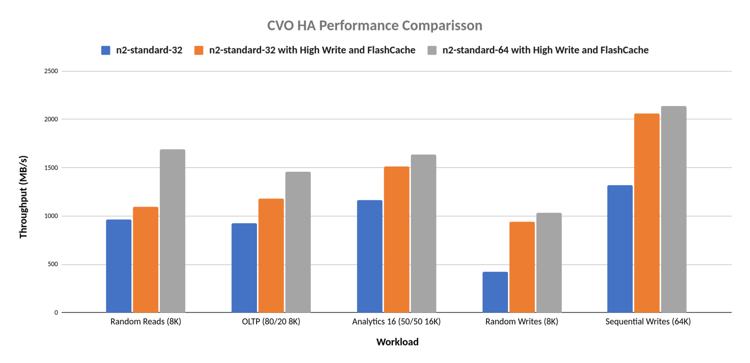

Figure 3 compares the performance of the default n2-standard-32 and the n2-standard-64 CVO HA (active/active) deployments with and without high write speed and Flash Cache enabled. CVO high write speed is a feature that improves write performance by buffering data in memory before it is written to a persistent disk. Because the application receives the write acknowledgement before the data is persisted to storage, data that has not yet been persisted can be lost if a failure occurs. High write speed can be a good option, but you must verify that your application can tolerate data loss, and you should ensure write protection at the application level.

Figure 3: CVO performance testing comparison for various workloads
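The trade-off behind high write speed, acknowledging a write before it is durable, can be shown with a minimal write-back buffer. The `WriteBackBuffer` class and its `crash` method are hypothetical and exist only to demonstrate the behavior; they do not model CVO internals.

```python
class WriteBackBuffer:
    """Acknowledges writes from memory and persists them later."""

    def __init__(self):
        self.pending = {}    # in memory only: lost if the node fails
        self.persisted = {}  # stands in for the persistent disk

    def write(self, key: str, data: bytes) -> str:
        self.pending[key] = data
        return "ack"         # acknowledged before the data is durable

    def flush(self) -> int:
        """Persist all buffered writes and return how many were flushed."""
        count = len(self.pending)
        self.persisted.update(self.pending)
        self.pending.clear()
        return count

    def crash(self) -> list:
        """Simulate an outage: unflushed writes are gone."""
        lost = sorted(self.pending)
        self.pending.clear()
        return lost


buf = WriteBackBuffer()
buf.write("a", b"1")
buf.flush()
buf.write("b", b"2")  # acknowledged, but not yet flushed
lost = buf.crash()
print("persisted:", sorted(buf.persisted), "lost:", lost)
```

The write to `"b"` was acknowledged yet never reached the persistent store, which is the case an application must be able to tolerate or protect against when this mode is enabled.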

Assuming a high compression rate (>=50) and a modest Flash Cache hit ratio (25-30%), we can see:

- Deployments on more powerful instances with Flash Cache perform better when running random read-intensive workloads, which benefit from more memory and higher infrastructure performance limits. HPC and batch applications can benefit from Flash Cache. A higher cache hit ratio yields higher overall system performance, which can be 3-4x that of the default deployment without Flash Cache.

- Write-intensive workloads, typically generated by backup and archiving, imaging and media applications (for sequential access), and databases (for more random writes), can benefit from high write speed.

- Transactional workloads are characterized by numerous concurrent data reads and writes from multiple users and are typically generated by transactional database applications such as SAP, Oracle, SQL Server, and MySQL. These kinds of workloads can benefit from moving to stronger instances and using Flash Cache.

- Mixed workloads present a high degree of randomness and typically require high throughput and low latency. In certain scenarios, they can benefit from running on stronger instances with Flash Cache. For example, virtual desktop infrastructure (VDI) environments typically have mixed workloads.
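The link between cache hit ratio and read performance noted in the list above can be approximated with a weighted average of cache and disk latency. The function name and both latency values below are assumptions picked to show the shape of the relationship; they are not measurements from the tests described in this post.

```python
def average_read_latency(hit_ratio: float, cache_ms: float, disk_ms: float) -> float:
    """Expected latency of one read given the share served from cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * disk_ms


# Hypothetical latencies, chosen only to show the shape of the curve.
CACHE_MS = 0.1  # assumed local SSD read latency
DISK_MS = 1.0   # assumed persistent disk read latency

for hit_ratio in (0.0, 0.25, 0.5, 0.9):
    latency = average_read_latency(hit_ratio, CACHE_MS, DISK_MS)
    print(f"hit ratio {hit_ratio:.0%}: {latency:.3f} ms, "
          f"{DISK_MS / latency:.2f}x vs. no cache")
```

This simple model covers read latency only. The measured gains reported above also reflect larger instances, storage efficiencies, and high write speed, so it should not be expected to reproduce them.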

Resources:

Try the Google Cloud and NetApp CVO Solution.

Learn more and get started with Cloud Volumes ONTAP for Google Cloud.
