All-Flash Storage All the Time?

When it comes to price/performance, high-density SSDs can't fully replace HDDs.

This is a Press Release edited by StorageNewsletter.com on June 22, 2023 at 2:02 pm. This article, published on June 1, 2023, was written by Paul Speciale, CMO, Scality.

Scality, Inc. has delivered storage solutions for more than a dozen years since it first launched RING in 2010. Its goal has always been to provide customers with solutions for multi-petabyte-scale data problems that offer easy application integration, simplified systems management and reliable long-term data protection – all at an affordable price.

Through 9 major software releases, RING has embraced both flash-based SSDs and HDD media in storage servers for what they each do well:

• Flash SSDs provide low-latency access for small IO-intensive metadata and index operations.

• High-density HDDs are for storing high volumes of customer data in the form of files and objects for most workloads (including those requiring high throughput).

• For latency-sensitive, read-intensive workloads, we do recommend all-flash for primary storage.

Combined use of flash and HDD, leveraging the strengths of each for specific circumstances, provides an optimal balance of performance, capacity, long-term durability and cost. In essence, the firm’s software can use the appropriate storage media to provide the best fit for each workload, since this is ultimately about delivering value to customers.

Are high-density flash SSDs ideal for high-capacity unstructured storage?

Recently, high-density flash SSDs have been touted as a superior alternative to HDDs for high-capacity unstructured storage. Industry news and several storage solution vendors have presented the latest generations of flash as effectively “on par” with HDD in terms of capacity cost, claiming it enables petabyte-scale storage along with several other advantages of flash. Some have even predicted the imminent death of HDD, arguing that high-density flash SSDs can do it all and better.

But does all-flash all the time really make sense for large volumes of data? Are HDDs going the way of the dodo? No, and no. Here’s why: When it comes to price/performance, high-density SSDs can’t fully replace HDDs, especially with respect to petabyte-scale unstructured storage across the spectrum of application workloads.

A fast history of fast flash storage

In the last decade, flash has received extraordinary attention and investment from the enterprise storage industry. While a decade can be considered an eon in our space, consider that flash SSDs have now been implemented in enterprise storage arrays for about 15 years, since EMC began delivering them in 2008. To help accelerate access to this new lower-latency media, we’ve seen rapid advancements in interface protocols and speeds as the industry evolved from FC to SATA and SAS, and then to PCI bus-based interfaces, culminating in the NVMe specification, first published in 2011 by an Intel-led industry working group.

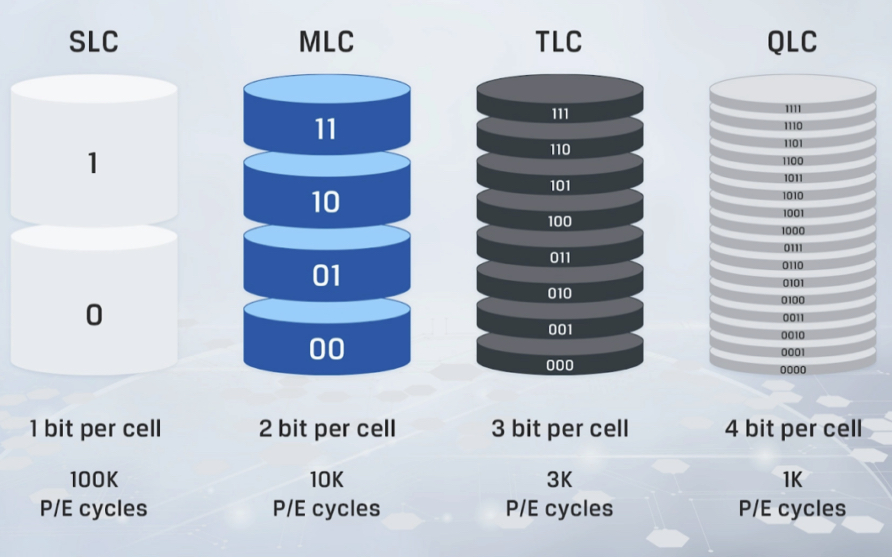

Simultaneously, incredible advances were made in the underlying NAND flash, the type of non-volatile memory that first emerged on the market in the late 1980s. The original NAND flash technology was SLC – one bit per cell – making it limited in capacity and expensive. The transition to 2 bits per cell (MLC) provided a 100% increase in density from SLC. TLC offered a 50% increase over MLC, going from 2 to 3 bits per cell. This was a major inflection point. Flash memory was then adopted by mainstream IT since it was high-performance, reliable and more affordable.
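The density steps quoted above follow directly from the number of bits stored per cell. A minimal sketch of that arithmetic, where the function name `density_gain_percent` is a hypothetical helper introduced here for illustration:

```python
# Bits stored per NAND cell for the flash types named in the paragraph above.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3}


def density_gain_percent(old: str, new: str) -> float:
    """Percentage increase in bits per cell when moving from `old` to `new`."""
    old_bits = BITS_PER_CELL[old]
    new_bits = BITS_PER_CELL[new]
    return (new_bits - old_bits) / old_bits * 100.0


for old, new in [("SLC", "MLC"), ("MLC", "TLC")]:
    print(f"{old} -> {new}: +{density_gain_percent(old, new):.0f}%")
# SLC -> MLC: +100%
# MLC -> TLC: +50%
```

The same relative calculation applies to any later step in bits per cell: each added bit yields a smaller percentage gain than the one before it.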

Quad-level cell (QLC) is the latest big step in flash storage, storing 4 bits per cell, or about 33% more than TLC. But it’s also extremely important to understand that, due to its higher bit density (more bits per cell), the durability of QLC SSDs is inherently several orders of magnitude lower than that of HDDs, and lower even than that of lower-density flash SSDs. We see this demonstrated through the well-known “P/E cycle” ratings of the various flash types, a measure of the number of times the flash media itself can be programmed and erased: ratings fall from about 100,000 cycles on SLC to just 1,000 cycles on QLC.

Before we discuss the impact of these QLC flash durability properties in storage systems, let’s establish what QLC flash SSDs claim to provide:

• High density (just over 30TB per drive in the latest 2.5″ NVMe-based drives)

• Low-latency access (microseconds compared to millisecond level access times on HDD)

• Improved cost curves (trending better than HDDs annually on a per gigabyte basis)

• Better “green” characteristics than HDDs (specifically from a power consumption perspective)

But do these claims match reality? Let’s address performance first.

The performance reality of high-density QLC flash SSDs

Storage systems optimized for petabyte-scale unstructured data (mainly file and object storage) have primarily leveraged HDDs for their high density, reliability and performance characteristics and, importantly, for their cost-effectiveness in terms of low dollar per gigabyte of capacity.

For organizations (such as financial services, healthcare, government agencies and media) that require dozens of petabytes or more, HDD-based storage solutions still offer the best blend of price/performance and affordability for storing and protecting data over long retention periods. Their absolute raw performance is also exceptional for these data workloads. As evidence of their proven nature at massive scale, 90% of storage capacity in cloud data centers is still HDD-based today.

In recent years, a few vendors have started to embrace newer QLC flash SSDs as a basis to deliver a trifecta of high density, high performance and low cost for storing massive capacities of unstructured data.

The argument for these solutions hinges on the ability of these vendors to deliver the aforementioned combination of advantages:

1. Performance that is meaningfully better to the application

2. Reduction in the effective cost to near parity with HDD on a cost-per-GB basis

3. Sufficient durability to provide enterprise data reliability for the long-term

Flash storage systems can optimize performance for workloads that require random access to small data payloads. A classic example is a transactional system that performs random queries against a product ordering system, looking up customer records based on a key (such as the customer name or phone number). Newer workloads with similar patterns are those involving small IoT or device sensor event streams, with small event data (kilobytes or less per record). In these scenarios, flash SSDs can offer gains in terms of lower latency and higher operations per second compared to HDDs.

In general, though, enterprise customers achieve sufficient latency and operations per second on HDD-based systems, even for latency-sensitive workloads. If the difference between microsecond and millisecond-level latencies isn’t visible or important (again, the key word being meaningful) to the end user, then other factors, including the pricing of the solution, will become the primary buying criteria.

Most storage people know that performance requirements depend on the data (type, size, volume) and the application’s access requirements, so we have to understand a diverse range of workload demands on storage. When an application requires sequential access to larger payloads (files in the megabyte and higher range), the performance equation changes dramatically from small file workloads.

Many modern applications fit this characterization – anything that deals with video, high-resolution photos and other large binary data types (such as video content delivery, surveillance, imaging in healthcare, and telemetry) will likely have sequential write and read patterns.

Backup applications within the broad context of data protection also fit this profile. With cybercriminals targeting backup data in 93% of attacks, solutions from vendors like Veeam, Commvault, Veritas, IBM, and Rubrik have gained importance as a mission-critical line of defense against ransomware threats and go hand-in-hand with immutable storage solutions as the targets for storing backups.

These applications tend to read and write larger file payloads to storage, with object storage rising thanks to intrinsic immutability advantages.

In terms of their performance demands on the storage system, these applications are just about the exact opposite of random IO, latency-sensitive workloads:

• Backup: Store (write) large backup payloads into the storage system sequentially

• Restore: Critical to rapid business recovery, the application sequentially reads these large stored backup payloads back to the user application.

The important performance metric for the backup application is throughput in gigabytes per second (or terabytes per hour) for fast sequential access to large backup files. Additionally, in today’s enterprises with hundreds of mission critical applications, there will be a need to process multiple backup and restore jobs simultaneously and in parallel. Economically, it makes sense to leverage shared storage systems and avoid the classic storage silo explosion.

For these sequential IO workloads, the difference between a QLC-flash and HDD-based solution is insignificant. HDD-based object storage solutions can be configured to achieve dozens of Gb/s (tens of terabytes per hour), with sufficient throughput to saturate the network to the application. This is key, since the storage system isn’t the limiting factor in terms of performance.

Moreover, the application itself can be the limiting factor in overall solution performance, with application processing, de-dupe/compression and data reassembly times surfacing as dominant time factors for backup and restore. This means that the incremental difference between HDD and flash SSD throughput can often be rendered meaningless, especially when we consider the factor of cost (as we do in the next section).
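The bottleneck argument can be illustrated with a small calculation. This is only a sketch: the dataset size and every throughput figure below are hypothetical values chosen for illustration, not measurements from any product, and the function names are introduced here. It uses decimal units (1 TB = 1,000 GB).

```python
def gb_per_s_to_tb_per_hour(gb_per_s: float) -> float:
    """Convert gigabytes per second to terabytes per hour (1 TB = 1000 GB)."""
    return gb_per_s * 3600.0 / 1000.0


def restore_hours(dataset_tb: float, storage_gb_s: float,
                  network_gb_s: float, app_gb_s: float) -> float:
    """Hours needed to restore `dataset_tb`.

    The slowest stage (storage, network or application) sets the rate.
    """
    bottleneck_gb_s = min(storage_gb_s, network_gb_s, app_gb_s)
    return dataset_tb / gb_per_s_to_tb_per_hour(bottleneck_gb_s)


# Hypothetical scenario: a 360 TB restore over a network that carries
# 5 GB/s, with a backup application that can reassemble data at 4 GB/s.
DATASET_TB = 360.0
NETWORK_GB_S = 5.0
APP_GB_S = 4.0

for label, storage_gb_s in [("HDD-based", 6.0), ("QLC flash", 20.0)]:
    hours = restore_hours(DATASET_TB, storage_gb_s, NETWORK_GB_S, APP_GB_S)
    print(f"{label}: {hours:.1f} hours")
```

Under these assumed numbers both systems finish in the same time, because the application stage, not the storage media, sets the restore rate. Different assumptions would of course give different results.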

Before we analyze cost, there is one remaining major performance characteristic of flash to be aware of. Performance decays as the workload “churns” (overwrites and deletes) the flash media and as capacity approaches maximum utilization. When a drive is factory-fresh, new data is written to the drive in a linear pattern until the usable capacity has been filled. In this scenario, the drive hasn’t had to perform any reorganization or reclamation of its internal blocks and pages (termed garbage collection) resulting from data being deleted or overwritten.

For many application workloads, including backup, data is frequently overwritten and deleted. This means that the drives will have to perform garbage collection, and the pattern in which data is written will begin to impact performance. Entire studies are dedicated to utilization and garbage collection dynamics of flash SSD as well as more esoteric aspects related to data reduction techniques and data entropy.

We can summarize that in practice – and especially for workloads that are not purely sequential – an SSD’s performance begins to decline after it reaches about 50% full, and capacity should be maintained under 70% for performance.

What does this mean for end users? The initial performance of flash storage solutions when first deployed represents absolute peak capabilities, with performance degrading over time as the system is filled. Some savvy admins even resort to custom “over-provisioning” levels on the SSD to reserve additional internal capacity to boost performance – but this effectively reduces the available storage capacity of the drive and further increases the real cost.
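A rough way to see the cost effect of keeping drives below a fill ceiling is to compute how much of the raw capacity actually holds data. In this sketch the 70% ceiling and the 30TB drive size come from the text, while the 10% over-provisioning figure and the function names are hypothetical.

```python
def usable_tb(raw_tb: float, max_fill: float, over_provision: float = 0.0) -> float:
    """Capacity (TB) that can hold data while staying under the fill ceiling.

    `over_provision` is the fraction of raw capacity reserved inside the drive.
    """
    if not (0.0 < max_fill <= 1.0) or not (0.0 <= over_provision < 1.0):
        raise ValueError("max_fill must be in (0, 1], over_provision in [0, 1)")
    return raw_tb * (1.0 - over_provision) * max_fill


def cost_multiplier(max_fill: float, over_provision: float = 0.0) -> float:
    """Factor by which raw $/GB grows once only the usable share is counted."""
    return 1.0 / ((1.0 - over_provision) * max_fill)


# 30 TB drive held under the 70% fill guideline, no extra over-provisioning.
print(f"usable: {usable_tb(30.0, 0.70):.1f} TB, "
      f"cost factor: {cost_multiplier(0.70):.2f}x")

# Same drive with a hypothetical additional 10% over-provisioning.
print(f"usable: {usable_tb(30.0, 0.70, 0.10):.1f} TB, "
      f"cost factor: {cost_multiplier(0.70, 0.10):.2f}x")
```

The multiplier applies to whatever raw media price is being compared, so it stacks on top of any existing price gap between media types.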

The real cost of QLC flash SSDs

As an SDS vendor, Scality partners with nearly all industry-leading server platform providers, giving us insights into the true prices of storage media across the HDD and flash SSD spectrum. To fairly assess the current claims of price parity between flash SSDs and HDDs, you first have to clarify what is really being compared.

For high-capacity storage, the comparison we need to make is between the following flash SSD and HDD drive types:

• High-density, large form factor (3.5″ LFF) HDDs: Up to 22TB per drive (as of today’s writing)

• High-density, QLC based flash SSDs: Up to 30TB per drive (today)

Today, the reality is that media costs of QLC flash drives are 5 to 7x higher than LFF HDDs on a dollar per gigabyte basis.

Since both media technologies have active development roadmaps, this gap will narrow, but HDDs are still forecast to hold a 4 to 5x advantage on a $/GB basis by the end of the decade. One important note: vendors can make a much more favorable price comparison for SSDs by comparing them with small form factor (2.5″ SFF) HDDs.

Ask your vendor the tough questions about what is being compared when they make cost claims about flash SSDs vs. HDDs, since SFF HDDs don’t offer the same advantages as LFF HDDs.

Given the 5 to 7x higher price of capacity for flash SSDs, vendors must rely on data reduction techniques to make the effective cost of flash lower. Techniques including data compression and de-dupe are implemented in flash storage systems to provide this level of reduction (and also to preserve flash media durability), with claims ranging from nominal 2:1 up to 20:1 reduction factors in required storage capacity.

These claims assume that the application does not implement data reduction techniques itself. While this may be true in some specialized application domains, we can once again refer to enterprise backup applications, which do indeed perform data reduction functions, dramatically reducing the ability of the storage system to achieve the reduction factors claimed above. This is also true for domains such as video content and genomics, where data is already extremely optimized and reduced in some form.

Here, as with backup data, the underlying flash system cannot be expected to gain the same levels of data reduction as with non-optimized data. This ends up minimizing and eroding the third leg of the claimed flash SSD trifecta: cost per gigabyte.
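The relationship between the media price gap and data reduction can be written as a one-line formula. In the sketch below the 5x and 7x media multiples and the 2:1 reduction factor come from the text; the 1.2:1 ratio for already-reduced data is a hypothetical figure, and `effective_price_multiple` is a name introduced here for illustration.

```python
def effective_price_multiple(media_multiple: float,
                             flash_reduction: float,
                             hdd_reduction: float = 1.0) -> float:
    """Flash cost per stored GB relative to HDD after data reduction.

    media_multiple  : raw flash $/GB divided by raw HDD $/GB
    flash_reduction : reduction ratio achieved on the flash system (2.0 = 2:1)
    hdd_reduction   : reduction ratio achieved on the HDD system (1.0 = none)
    """
    if flash_reduction <= 0 or hdd_reduction <= 0:
        raise ValueError("reduction ratios must be positive")
    return media_multiple * hdd_reduction / flash_reduction


for media_multiple in (5.0, 7.0):
    # Nominal 2:1 reduction on data that was not reduced beforehand.
    nominal = effective_price_multiple(media_multiple, 2.0)
    # Hypothetical 1.2:1 on data the application already compressed.
    pre_reduced = effective_price_multiple(media_multiple, 1.2)
    print(f"{media_multiple:.0f}x media gap: {nominal:.1f}x at 2:1, "
          f"{pre_reduced:.1f}x at 1.2:1")
```

Parity (a multiple of 1.0) requires the flash system's reduction ratio to equal the media multiple, so 5:1 to 7:1 at the price gap quoted above, assuming the HDD system applies no reduction of its own.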

In summary:

• The promise of cost parity for flash SSDs hasn’t yet arrived.

• Flash vendors won’t be able to eliminate this media price disadvantage through data reduction techniques for backup and other unstructured data workloads.

• While there may be a relatively small advantage in flash SSD performance for sequential access workloads, it comes with a significant penalty in price vs. HDD-based solutions.

Takeaway:

QLC flash-based storage is not needed for all large-scale, mission-critical workloads. While we recommend and use it for latency-sensitive, read-intensive workloads that justify its higher cost, QLC flash is not an ideal fit today for most other workloads, including the backups that sit at the center of modern data and ransomware protection strategies.

The long-term question: Durability of QLC flash for “high-churn” workloads

There are two other critical considerations we will explore in forthcoming blogs. The first relates to the power efficiency of flash SSD vs. HDD, and whether the claimed advantage is reality or hype. The second relates to the critical issue of long-term durability of QLC-based flash SSDs for workloads that are not storing data statically, and instead require a high rate of data churn.
