Korean Start-Up Panmnesia Shatters Memory Limits with CXL Technology for Large-Scale AI Applications Like ChatGPT

Developed AI acceleration system called TrainingCXL to address challenges of processing large-scale AI using CXL-based disaggregated memory pool.

This is a Press Release edited by StorageNewsletter.com on April 24, 2023 at 2:02 pm.

Panmnesia, Inc., a KAIST (Korea Advanced Institute of Science and Technology) semiconductor start-up, has introduced an AI acceleration system powered by Compute Express Link (CXL) technology.

The system scales both memory capacity and performance.

The company's innovative solution connects a GPU to multiple next-generation memory expanders through the CXL interconnect.

CEO Myoungsoo Jung explains that the system can scale memory capacity up to a staggering 4PB. Additionally, it reduces training execution time by 5.3x compared with leading systems that use the existing PCIe interconnect.

As the AI landscape becomes increasingly competitive, tech giants like Meta and Microsoft have been pushed to expand the size of their models to maintain their market positions. This has driven surging demand for computing systems capable of handling large-scale AI. However, current AI accelerators like GPUs fall short of these demands because their internal memory is limited to a few tens of gigabytes, owing to the scaling constraints of DRAM technology.

The company's CXL-based system aims to tackle this challenge head-on, offering a robust solution for memory-intensive AI applications. Several earlier approaches instead exploit SSDs, expanding host memory by using SSDs as backend storage media. While this SSD-integrated memory expansion technique can handle large input data, it suffers from severe performance degradation due to SSDs' poor random read performance and the data movement overhead between SSDs and GPUs.

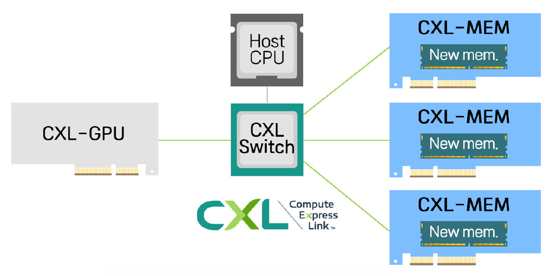

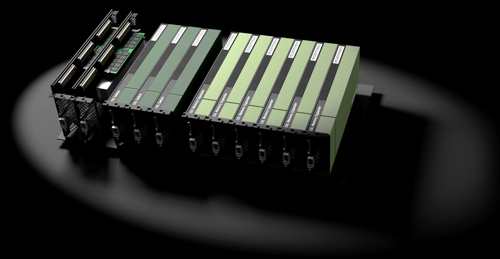

The company has developed an AI acceleration system called TrainingCXL to address the challenges of processing large-scale AI using a CXL-based disaggregated memory pool. TrainingCXL features a composable architecture that connects memory expander devices equipped with high-capacity emerging memory to GPUs via CXL, providing GPUs with scalable memory space.

Central to this system is CXL, a memory interface that facilitates a fully disaggregated system. It allows CPUs, GPUs, and memory expanders to communicate with one another through a high-speed interconnect. Cloud providers and hyperscalers are increasingly embracing CXL to meet their growing memory demands.

Overview of TrainingCXL

TrainingCXL offers scalable memory space for GPUs and also minimizes data movement overhead between connected devices. Specifically, it employs the CXL.cache sub-protocol, one of several sub-protocols offered by CXL. This sub-protocol lets the GPU and memory expanders exchange data rapidly and actively, without any software intervention. By overlapping data transfer latency with essential computation, TrainingCXL takes the data transfer overhead off the critical path, improving overall performance.
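To see why overlapping matters, here is a minimal timing sketch, assuming a simple two-stage pipeline in which the transfer for the next batch runs while the current batch computes. The function names and per-batch costs are hypothetical illustrations, not figures or code from TrainingCXL.

```python
def serial_time(transfer: float, compute: float, batches: int) -> float:
    """Each batch waits for its data, then computes; nothing overlaps."""
    return batches * (transfer + compute)


def overlapped_time(transfer: float, compute: float, batches: int) -> float:
    """Two-stage pipeline: batch i+1's transfer runs during batch i's compute.

    Only the first transfer and the last compute are exposed; every other
    step costs the slower of the two stages.
    """
    if batches == 0:
        return 0.0
    return transfer + (batches - 1) * max(transfer, compute) + compute


# Hypothetical costs in arbitrary time units.
print(serial_time(2.0, 3.0, 10))      # 50.0
print(overlapped_time(2.0, 3.0, 10))  # 32.0
```

With these hypothetical costs, the serial schedule takes 50 units and the overlapped one 32, because transfer time is hidden behind computation everywhere except at the start of the pipeline.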

The Panmnesia research team has further optimized AI model training execution time by incorporating domain-specific computation capabilities into the memory expander device. The team focused on deep learning-based recommendation models, which are used to personalize content for users in services like Amazon, YouTube, and Instagram. Modern recommendation systems often require dozens of terabytes of memory, as they store information for each user and content item, known as embedding vectors, in their models. This memory requirement is even larger than that of popular large generative AI models like ChatGPT.
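The scale of these embedding tables follows from simple arithmetic: each table stores one fixed-width vector per row. The sketch below assumes a hypothetical model with 40 tables of one billion 128-dimensional FP32 vectors each, and a hypothetical 80 GB GPU; these sizes are illustrative, not taken from the press release.

```python
def embedding_table_bytes(rows: int, dim: int, bytes_per_elem: int = 4) -> int:
    """Footprint of one embedding table: `rows` vectors of `dim` elements each."""
    return rows * dim * bytes_per_elem


# Hypothetical model size (illustrative only).
NUM_TABLES = 40
ROWS_PER_TABLE = 1_000_000_000  # e.g., one row per user or content item
DIM = 128                       # elements per embedding vector (FP32 = 4 bytes)
GPU_MEMORY = 80e9               # bytes; hypothetical single-GPU capacity

total = NUM_TABLES * embedding_table_bytes(ROWS_PER_TABLE, DIM)
print(f"total: {total / 1e12:.2f} TB")             # total: 20.48 TB
print(f"GPUs needed: {total / GPU_MEMORY:.0f}")  # GPUs needed: 256
```

Even this modest hypothetical configuration would need the memory of hundreds of GPUs just to hold the embeddings, which is the gap that pooled memory expanders are meant to fill.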

TrainingCXL addresses this challenge by storing embedding vectors in memory expanders and using a computing module that processes them directly within the memory expander. This approach processes embedding vectors rapidly and transfers a reduced data set to the GPU instead of the original vectors, mitigating data movement overhead.
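The press release does not say exactly how the computing module reduces the vectors. A common reduction in deep learning recommendation models is sum pooling, where all embedding rows looked up for one sample are added into a single vector. The sketch below assumes that choice; `gather_and_pool` and `bytes_moved` are hypothetical helpers, not Panmnesia's interface.

```python
from typing import List, Sequence

Vector = List[float]


def gather_and_pool(table: Sequence[Vector], indices: Sequence[int]) -> Vector:
    """Look up the rows named by `indices` and sum them element-wise.

    Done near memory, only the single pooled vector has to leave the
    device instead of len(indices) separate vectors.
    """
    dim = len(table[0])
    pooled = [0.0] * dim
    for idx in indices:
        row = table[idx]
        for j in range(dim):
            pooled[j] += row[j]
    return pooled


def bytes_moved(num_vectors: int, dim: int, bytes_per_elem: int = 4) -> int:
    """Bytes needed to ship `num_vectors` vectors of `dim` FP32 elements."""
    return num_vectors * dim * bytes_per_elem


# Toy table: 8 rows of 4 elements; row r holds [10r, 10r+1, 10r+2, 10r+3].
table = [[float(r * 10 + c) for c in range(4)] for r in range(8)]
lookups = [1, 3, 6]
print(gather_and_pool(table, lookups))  # [100.0, 103.0, 106.0, 109.0]
print(bytes_moved(len(lookups), 4), "->", bytes_moved(1, 4))  # 48 -> 16
```

For three lookups the transfer shrinks from 48 bytes to 16; the savings grow with the number of lookups per sample.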

In their research, the team demonstrated that TrainingCXL trains various recommendation system models 5.3x faster than modern systems that connect emerging memory through the traditional PCIe interconnect.

Jung asserts: “TrainingCXL points to a revolutionary direction for next-generation AI acceleration solutions that fully harness the potential of CXL technology. We will continue to champion CXL technology and contribute to the CXL ecosystem by presenting our groundbreaking research at top-tier conferences and journals.”

The research team's work was published in the March-April 2023 issue of IEEE Micro magazine, in an article titled “Failure Tolerant Training With Persistent Memory Disaggregation Over CXL.” The team also delivered an opening invited talk at the HCM workshop, co-located with the IEEE International Symposium on High-Performance Computer Architecture (HPCA) in Montreal, Canada. Furthermore, they will present an invited talk at the International Parallel and Distributed Processing Symposium (IPDPS) in Florida this May.

Research details:

TrainingCXL is designed to efficiently train large recommendation models using a CXL-based disaggregated memory pool. By connecting GPUs and expanders equipped with high-capacity emerging memory through a CXL switch, GPUs can access the vast memory space provided by the expanders. CXL unifies the memory spaces of hardware components such as CPUs, GPUs, and memory expanders into a single host physical address (HPA) space. This setup allows users to access data through its address, regardless of where it is physically stored.
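Conceptually, a unified host physical address space means every address falls into a range owned by exactly one device, so resolving an access is a range lookup. The sketch below assumes a hypothetical layout of host DRAM plus two expanders; the `Region` and `AddressMap` names are illustrative. Real CXL systems program this mapping into HDM (host-managed device memory) decoders and may interleave addresses across devices, which this sketch omits.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple

GiB = 1 << 30


@dataclass(frozen=True)
class Region:
    base: int    # first host physical address of the range
    size: int    # range length in bytes
    device: str  # hypothetical device name


class AddressMap:
    """Maps a host physical address to (device, device-local offset)."""

    def __init__(self, regions: List[Region]) -> None:
        self._regions = sorted(regions, key=lambda r: r.base)
        self._bases = [r.base for r in self._regions]
        for prev, nxt in zip(self._regions, self._regions[1:]):
            if prev.base + prev.size > nxt.base:
                raise ValueError(f"{prev.device} overlaps {nxt.device}")

    def resolve(self, hpa: int) -> Tuple[str, int]:
        # Find the last region whose base is <= hpa, then check it covers hpa.
        i = bisect_right(self._bases, hpa) - 1
        if i >= 0:
            region = self._regions[i]
            if hpa < region.base + region.size:
                return region.device, hpa - region.base
        raise LookupError(f"HPA {hpa:#x} is not mapped")


amap = AddressMap([
    Region(0, 64 * GiB, "host-dram"),
    Region(64 * GiB, 512 * GiB, "expander0"),
    Region(576 * GiB, 512 * GiB, "expander1"),
])
print(amap.resolve(64 * GiB + 4096))  # ('expander0', 4096)
```

The application only ever sees the flat address; which device serves it is decided by the map.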

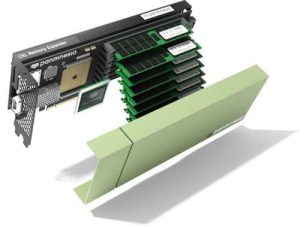

Panmnesia boards

TrainingCXL further improves efficiency with two additional components:

- Automated data movement. To reduce data movement overhead, TrainingCXL introduces a hardware automation approach that relies on CXL hardware components. The researchers designed the devices as Type 2, one of the three CXL device types (Type 1/2/3), to enable active data exchange using internal memory. Type 2 devices are unique because they possess their own memory and support active data access through the CXL.cache protocol. The other device types are unsuitable for this approach because they lack either internal memory (Type 1) or active data access capabilities (Type 3). TrainingCXL's Type 2 devices feature a device coherency engine (DCOH) that manages CXL.cache-based memory access, allowing data transfer without software intervention.

- Near data processing. To make training recommendation models more efficient, TrainingCXL places a specialized computing module, designed for recommendation models, close to the data. This module is located within the memory expander's controller. It retrieves the data (i.e., embedding vectors) associated with users and content from the emerging memory in the expander and transforms them into the smaller pieces of data required for the next stage of the recommendation model. The module then transfers the data to the GPU using the hardware-automated process. Because the data is processed near its source, less of it has to be moved, reducing the time and energy spent on data movement. To further improve performance, TrainingCXL employs a hardware accelerator designed for embedding vector processing in the computing module. This module comprises multiple vector processing units that process many numerical elements of the embedding vectors simultaneously.
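The device-type argument in the automated data movement item can be written as a capability check. The protocol mix per type below follows the CXL specification (Type 1: CXL.io and CXL.cache; Type 2: all three; Type 3: CXL.io and CXL.mem). Treating "own memory" as memory exposed over CXL.mem is a simplification made for this sketch, and `supports_active_transfer` is a hypothetical helper.

```python
from enum import Flag, auto


class Proto(Flag):
    IO = auto()     # CXL.io: discovery, configuration, DMA
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host accesses device-attached memory


# Protocol mix per CXL device type.
DEVICE_TYPES = {
    "Type 1": Proto.IO | Proto.CACHE,              # caching device, no exposed memory
    "Type 2": Proto.IO | Proto.CACHE | Proto.MEM,  # accelerator with its own memory
    "Type 3": Proto.IO | Proto.MEM,                # memory expander
}


def supports_active_transfer(protos: Proto) -> bool:
    """Hypothetical check: the device needs its own memory (CXL.mem) and
    the ability to initiate coherent accesses itself (CXL.cache)."""
    return Proto.CACHE in protos and Proto.MEM in protos


print([name for name, p in DEVICE_TYPES.items() if supports_active_transfer(p)])
# ['Type 2']
```

Only Type 2 satisfies both requirements, which matches the design choice described above.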

Example of composed TrainingCXL

Broader Impact:

CXL is an emerging technology with the potential to address the growing memory demands in the industry, particularly for large-scale AI applications. TrainingCXL offers a promising and efficient way to fully harness the benefits of CXL, transforming the landscape of AI acceleration. By implementing TrainingCXL, businesses can reduce Capex by replacing expensive GPUs with cost-effective memory expanders without compromising the system's ability to run large-scale AI models. Furthermore, TrainingCXL's near data processing approach lowers Opex by minimizing excessive data movement and by using energy-efficient emerging memory technologies. TrainingCXL represents a solution with the potential to change the AI industry, driving advancements in heterogeneous computing and paving the way for more shareable, efficient, and intelligent systems.

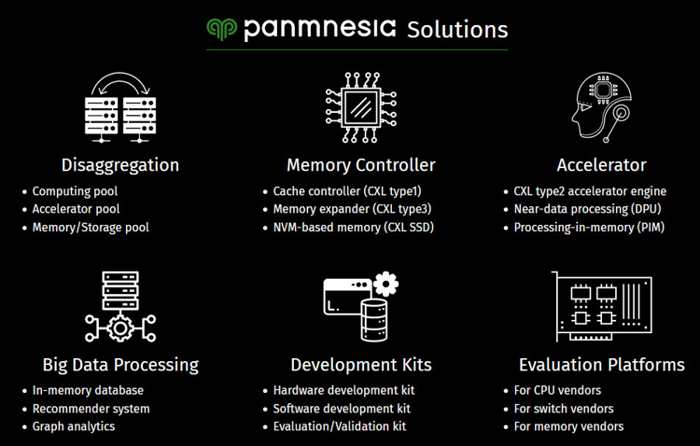

About Panmnesia

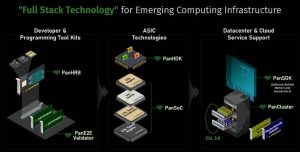

Panmnesia, Inc., a KAIST start-up, envisions a future where perfect memory systems eliminate data movement bottlenecks, enabling devices like CPUs, GPUs, TPUs, and AI/ML accelerators to operate at peak performance without resource limitations. The firm's solutions empower all forms of heterogeneous computing to break free from memory constraints, making systems more shareable and intelligent than ever before. The company has developed the first fully functional CXL 2.0 system, which can run real user applications such as AI training and inference, and has advanced to CXL 3.0 with a diverse range of composable computing resources. Leveraging this expertise, the company has secured multiple IP rights and collaborates with organizations and companies worldwide.

Article: Failure Tolerant Training With Persistent Memory Disaggregation Over CXL

IEEE Micro has published an article written by Miryeong Kwon, Junhyeok Jang, Hanjin Choi, Sangwon Lee, and Myoungsoo Jung of KAIST and Panmnesia, Daejeon, South Korea.

Abstract: “This article proposes TrainingCXL that can efficiently process large-scale recommendation datasets in the pool of disaggregated memory while making training fault tolerant with low overhead. To this end, we integrate persistent memory (PMEM) and graphics processing unit (GPU) into a cache-coherent domain as type 2. Enabling Compute Express Link (CXL) allows PMEM to be directly placed in GPU’s memory hierarchy, such that GPU can access PMEM without software intervention. TrainingCXL introduces computing and checkpointing logic near the CXL controller, thereby training data and managing persistency in an active manner. Considering PMEM’s vulnerability, we utilize the unique characteristics of recommendation models and take the checkpointing overhead off the critical path of their training. Finally, TrainingCXL employs an advanced checkpointing technique that relaxes the updating sequence of model parameters and embeddings across training batches. The evaluation shows that TrainingCXL achieves 5.2× training performance improvement and 76% energy savings, compared to the modern PMEM-based recommendation systems.”
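The general idea of taking checkpointing off the critical path can be illustrated with a generic asynchronous checkpointer: the training loop takes a quick in-memory snapshot and hands it to a background thread that performs the slow persistence step. This is a minimal sketch of a common technique, not TrainingCXL's relaxed-ordering scheme; the `AsyncCheckpointer` class and the list standing in for persistent memory are hypothetical.

```python
import copy
import queue
import threading
from typing import Any, Dict, List, Tuple


class AsyncCheckpointer:
    """Persists snapshots on a background thread so training need not wait."""

    def __init__(self) -> None:
        self._queue: "queue.Queue[Any]" = queue.Queue()
        # Stand-in for persistent memory; a real system would write to PMEM here.
        self.saved: List[Tuple[int, Dict[str, float]]] = []
        self._worker = threading.Thread(target=self._persist_loop, daemon=True)
        self._worker.start()

    def _persist_loop(self) -> None:
        while True:
            item = self._queue.get()
            if item is None:  # sentinel sent by close()
                break
            self.saved.append(item)  # slow persist step, off the training thread

    def checkpoint(self, step: int, state: Dict[str, float]) -> None:
        # The snapshot copy still runs on the training thread; only
        # persistence is deferred to the background worker.
        self._queue.put((step, copy.deepcopy(state)))

    def close(self) -> None:
        self._queue.put(None)
        self._worker.join()


ckpt = AsyncCheckpointer()
state = {"w": 0.0}
for step in range(5):
    state["w"] += 1.0  # stand-in for one training step
    if step % 2 == 1:
        ckpt.checkpoint(step, state)
ckpt.close()
print(ckpt.saved)  # [(1, {'w': 2.0}), (3, {'w': 4.0})]
```

Because each checkpoint is a deep copy, later training steps cannot corrupt a snapshot that is still waiting to be persisted.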
