Recap of 49th IT Press Tour in Israel

With 8 companies: Ctera, Equalum, Finout, Pliops, Rookout, StorONE, Treeverse and UnifabriX

By Philippe Nicolas | April 12, 2023 at 2:01 pm

This article was written by Philippe Nicolas, organizer of the event.

The 49th edition of The IT Press Tour took place recently in Israel. It was an opportunity to meet and visit a few innovators that lead some market segments or shake up established positions with new approaches in cloud, IT infrastructure, data management and storage: Ctera, Equalum, Finout, Pliops, Rookout, StorONE, Treeverse and UnifabriX.

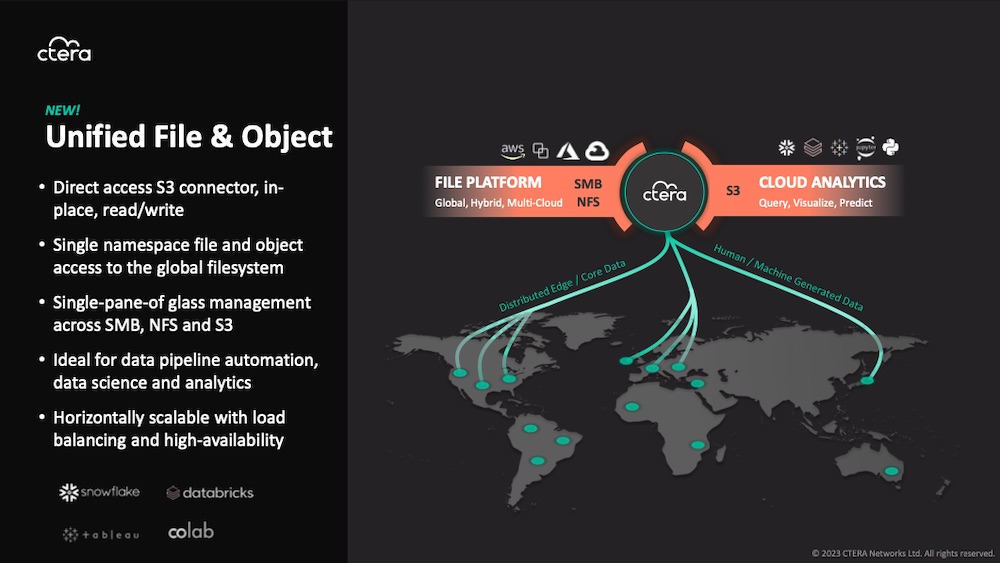

Ctera

Among the leaders in cloud file storage, Ctera is entering a new era. It took advantage of this session to make two major announcements.

The first one is related to promotions for several key executives. Oded Nagel is the new CEO; he joined the team in the early days of the company and was chief strategy officer for 5 years. Liran Eshel moves to executive chairman, Michael Amselem becomes CRO and Saimon Michelson is elevated to VP of alliances. During this session, we measured all the progress made by the team, with a significant market footprint increase illustrated by large deals in the US and globally. These changes arrive after a successful FY22 with 38% revenue growth, reaching 150PB under management.

The session started with Liran Eshel, who spoke about data democracy, a new level to reach that goes beyond data ownership, which is considered necessary but not sufficient.

The team also shared product news and reiterated some recent new features around ransomware protection, cloud storage routing, an impressive media use case with Publicis, and unified file and object, or UFO. At the same time, new partners are joining the ecosystem, such as Hitachi Vantara, Netwrix and Aparavi.

The ransomware module embeds AI-based technology developed at Ctera to detect and block attacks in under 30s. The idea is to identify behavior anomalies and then decide which action to take. This approach doesn’t rely on a signature database, which is considered insufficient for today’s threats.

The cloud storage routing introduced with the recent 7.5 release makes it possible to logically group buckets from various clouds. This subtle feature provides directory-level data placement to optimize data residency based on business, compliance, project or technical needs, or on quality of service with data placed close to users.
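To make the idea of directory-level placement concrete, here is a minimal sketch of a longest-prefix routing table that maps a directory to a logical group of buckets. It is only an illustration of the concept: the `ROUTES` table, the group names and the `route` function are hypothetical and do not reflect Ctera's actual configuration or API.

```python
from typing import Dict

# Hypothetical routing table: directory prefix -> logical bucket group.
# Prefixes and group names are invented for this illustration.
ROUTES: Dict[str, str] = {
    "/projects/eu": "eu-buckets",
    "/projects/eu/archive": "eu-cold-buckets",
    "/media": "us-media-buckets",
}
DEFAULT_GROUP = "default-buckets"


def route(path: str, routes: Dict[str, str] = ROUTES) -> str:
    """Return the bucket group for a path, using the longest matching directory prefix."""
    parts = path.strip("/").split("/")
    # Try the longest prefix first, then progressively shorter ones.
    for end in range(len(parts), 0, -1):
        prefix = "/" + "/".join(parts[:end])
        if prefix in routes:
            return routes[prefix]
    return DEFAULT_GROUP
```

With such a table, a file under `/projects/eu/archive` would land in the cold group while the rest of `/projects/eu` stays in the regular one, which is the kind of per-directory decision described above.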

The other new module is UFO, with S3 read-write access directly from the portal. It couples NFS, SMB and S3 under a single namespace to expose the same content via different access methods. This is required for data integration in data pipeline and analytics projects.

The ransomware and UFO capabilities will be available at the end of June 2023.

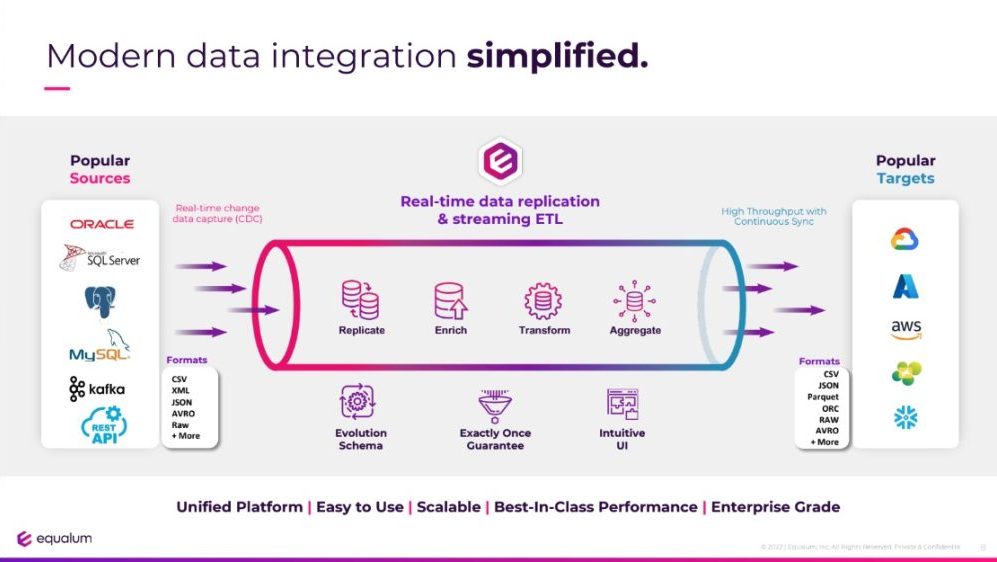

Equalum

Data integration is a challenge, and the current market climate, with its need for real-time analytics, increases the complexity.

The firm, founded in 2015 with $39 million raised in 5 VC rounds, is a software company developing an enterprise-grade real-time data integration and data streaming platform featuring industry-leading change data capture (CDC).

With the classic explosion in data volume, velocity and variety, this is a real mission for enterprises, as all of them recognize that they live in a data-driven world. Environments mix on-premises, cloud and various APIs, data lakes and data warehouses, but integrating all of that data is tough, delicate and time-consuming. And, as noted, adding the real-time requirement creates a real nightmare. This explains why several companies were founded to address this pain; the growing data pipeline ecosystem is now a real market segment. And clearly CDC and ETL functions represent two key friction points where users spend time.

The company offers a modern data integration platform based on CDC, able to connect, extract, replicate, enrich, transform and aggregate data from various sources and load it into popular targets. The solution relies on an advanced proprietary CDC and streaming ETL to reach a new level of real-time analytics without any code to write or integrate. CDC is a fundamental element of the solution and creates a real differentiator versus competitors. In terms of market adoption, customers include Warner Bros, T-Systems, GSK, Walmart, ISS and Siemens.
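For readers less familiar with CDC, the principle can be sketched in a few lines: instead of re-copying whole tables, the source emits an ordered stream of row-level changes that is replayed on the target. The event shape (`op`, `key`, `row`) and the `apply_changes` function below are assumptions made for illustration only; they are not Equalum's format or API.

```python
from typing import Any, Dict, List

# A change event as a plain dict: {"op": ..., "key": ..., "row": ...}.
# This shape is an assumption for the sketch, not a real product format.
ChangeEvent = Dict[str, Any]


def apply_changes(target: Dict[int, Dict[str, Any]], events: List[ChangeEvent]) -> None:
    """Replay an ordered list of change events onto a target table keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Upsert: merge the changed columns into the existing row, if any.
            target[key] = {**target.get(key, {}), **event["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")


replica: Dict[int, Dict[str, Any]] = {}
apply_changes(replica, [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "city": "Haifa"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "city": "Paris"}},
    {"op": "update", "key": 1, "row": {"city": "Tel Aviv"}},
    {"op": "delete", "key": 2},
])
```

Only the four small events travel to the target, and the replica ends up holding the single surviving row with its updated city.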

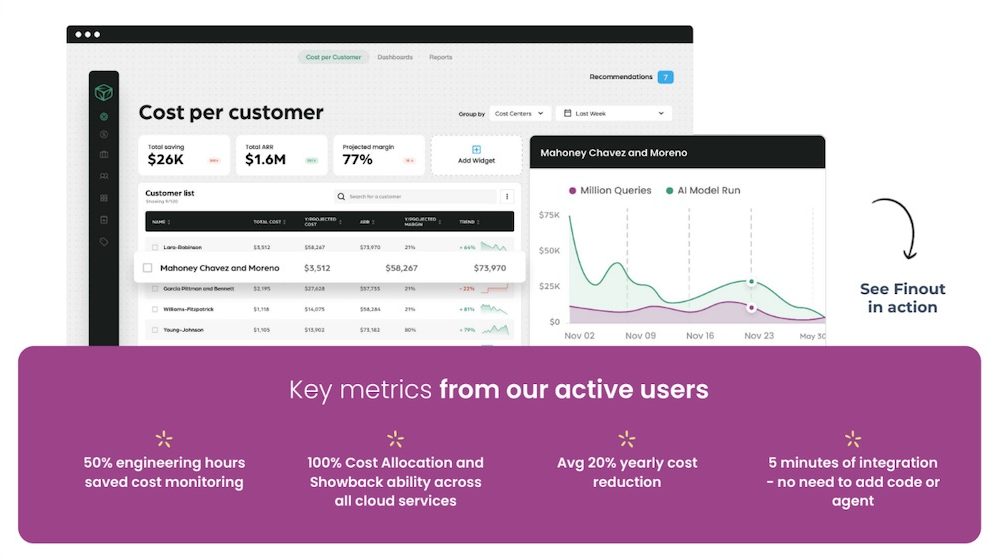

Finout

Founded in 2021, the company raised $18.5 million in 3 VC rounds on the idea of delivering advanced, comprehensive usage-based billing for cloud data services. The main idea is to view global costs beyond cloud service providers’ own services.

The market calls this solution segment FinOps, and we have to admit that several players understand the opportunity to optimize online service bills. The team considers that the cloud is inevitable and will remain a strong force on the market for the coming years, swallowing more and more workloads and associated data.

Finout’s approach relies on 4 major functions: Visualize, Allocate, Enrich and Reduce. Visualize is the first fundamental brick, as it offers a global view of all cloud expenses in a single bill named MegaBill. It helps cloud users, enterprises of any size, see in detail what they consume and the associated cost, and define alerts and customizable dashboards. Allocate, the second function, can then tag or attribute each cost item to characterize these elements and highlight trends. Next, Enrich provides connectors to external solutions such as Datadog, Prometheus or CloudWatch to show complete end-to-end service charges. And finally Reduce, represented by Finout CostGuard, shows cloud waste and spend anomalies and displays rightsizing recommendations to align cost with real usage. Across the installed base, the vendor reports an average 20% yearly cost reduction, which provides an almost immediate ROI. The solution is very granular, able to assign a cost line to various business or IT instances, applications or even sub-components.

In terms of implementation, there is no code to add or agent to deploy, just connectors to set up to support a service. The product supports all major cloud providers, Kubernetes, cloud data warehouses like Snowflake, applications like Salesforce or Looker, and CDNs.

It confirms once again that usage-based pricing is a major trend on the market, with a user-oriented approach with Finout and a vendor-oriented one with Amberflo.
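As a rough illustration of the Allocate step, the sketch below groups cost line items by a tag and sums them per owner, keeping untagged spend visible in its own bucket. The `line_items` data, the tag names and the `allocate` function are all invented for this example and are not Finout's data model or API.

```python
from collections import defaultdict
from typing import Any, Dict, List

# Invented cost line items from several services; amounts are arbitrary.
line_items: List[Dict[str, Any]] = [
    {"service": "compute", "cost": 120.0, "tags": {"team": "search"}},
    {"service": "warehouse", "cost": 80.0, "tags": {"team": "analytics"}},
    {"service": "monitoring", "cost": 30.0, "tags": {"team": "search"}},
    {"service": "cdn", "cost": 20.0, "tags": {}},
]


def allocate(items: List[Dict[str, Any]], tag_key: str,
             untagged: str = "unallocated") -> Dict[str, float]:
    """Sum cost per value of the given tag; items without the tag go to a separate bucket."""
    totals: Dict[str, float] = defaultdict(float)
    for item in items:
        owner = item["tags"].get(tag_key, untagged)
        totals[owner] += item["cost"]
    return dict(totals)


by_team = allocate(line_items, "team")
```

Keeping an explicit "unallocated" bucket is a deliberate choice in this sketch: untagged spend is usually the first thing a cost review wants to see.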

The coming KubeCon conference will be the place to discover the new product iteration Finout plans to display. In fact, it is a governance suite dedicated to Kubernetes with an agentless approach to manage, forecast and optimize multicloud operations relying on Kubernetes.

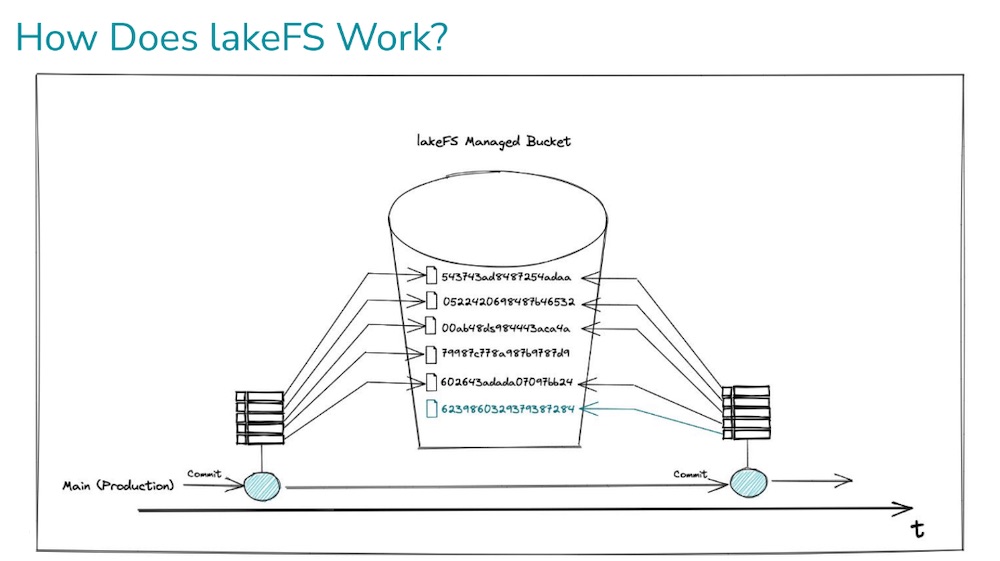

LakeFS by Treeverse

Treeverse is the company behind LakeFS. It was founded in 2020, has 28 employees globally, 15 developers among them, and has raised $23 million so far. The founders came from SimilarWeb, a famous Israeli web company specialized in website analytics and intelligence.

LakeFS is an open-source project that provides data engineers with versioning and branching capabilities on their data lakes, through a Git-like version control interface. As Git is a standard for code, the team imagined a similar approach to control data set versions, with the capability to isolate data for specific needs without copying it, and so to allow safe experiments on real production data. The beauty of this resides in its capacity to work on metadata without touching the data. The immediate gains are visible: no data duplication is needed, so the storage cost is reduced, data engineers’ productivity is boosted according to the vendor, and recovery is fast.
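The metadata-only principle can be illustrated with a toy model in which a branch is just a mapping from paths to object identifiers, so creating a branch copies pointers and never the data. The `TinyRepo` class below is a hypothetical sketch written for this article; it is not LakeFS code and does not use the LakeFS API.

```python
from typing import Dict


class TinyRepo:
    """Toy versioned store: objects are stored once, branches only hold pointers."""

    def __init__(self) -> None:
        self.objects: Dict[int, bytes] = {}  # object id -> data, never duplicated
        self.branches: Dict[str, Dict[str, int]] = {"main": {}}  # branch -> {path: object id}
        self._next_id = 0

    def put(self, branch: str, path: str, data: bytes) -> None:
        """Store a new object and point the path at it on one branch only."""
        oid = self._next_id
        self._next_id += 1
        self.objects[oid] = data
        self.branches[branch][path] = oid

    def branch(self, name: str, source: str = "main") -> None:
        """Create a branch by copying the pointer map, not the objects."""
        self.branches[name] = dict(self.branches[source])

    def get(self, branch: str, path: str) -> bytes:
        return self.objects[self.branches[branch][path]]


repo = TinyRepo()
repo.put("main", "sales.csv", b"v1")
repo.branch("experiment")                    # no data copied here
objects_after_branch = len(repo.objects)
repo.put("experiment", "sales.csv", b"v2")   # change is isolated to the branch
```

In this sketch the production view on `main` is untouched by the experiment, and discarding the branch would simply mean dropping its pointer map.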

The second key point for such a product is integration, to facilitate adoption. LakeFS splits this into 6 groups: storage, orchestration, compute, MLOps, ingest and analytics workflow. For each of them, the company has chosen widely used solutions, and for storage that means Amazon S3, Azure Blob Storage, Google Cloud Storage, Vast Data, Weka, MinIO and Ceph.

Adoption of LakeFS is already there, with more than 4,000 members in the community and companies such as Lockheed Martin, Shell, Walmart, Intel, Facebook, Wix, Volvo, NASA, Netflix and LinkedIn. Treeverse owns the code and the project doesn’t belong to any open source software (OSS) foundation, even though the code is delivered under the Apache 2.0 license.

Essentially the product is available as OSS for on-premises use and also in the cloud; by default the cloud flavor is the full version. In terms of pricing, the team offers an OSS flavor, limited but pretty comprehensive, and a cloud and full on-premises version at $2,500 per month.

The competition is not negligible, with both commercial and open source approaches coming from established companies as well as emerging ones.

The goal of the company is to become the standard for data version control, as Git is for code. We’ll see if they succeed.

Pliops

Plenty of IOPS, aka Pliops, founded in 2017, has raised more than $200 million so far in 5 rounds. The team recognized the need to provide a new way to accelerate application performance for large-scale and data center environments. It touches SaaS, IaaS, HPC and social media use cases, as all of them use large distributed configurations that require a new way to boost IO/s. But this new approach has to consider green aspects, resource density and, of course, cost.

The founding team recognized the growing gap between CPUs and NVMe-based storage, which will hit a wall if nothing is done to address it. SATA and SAS didn’t put the same pressure on CPUs, but NVMe changed the game, as did write amplification and RAID protection schemes not originally designed for NVMe SSDs.

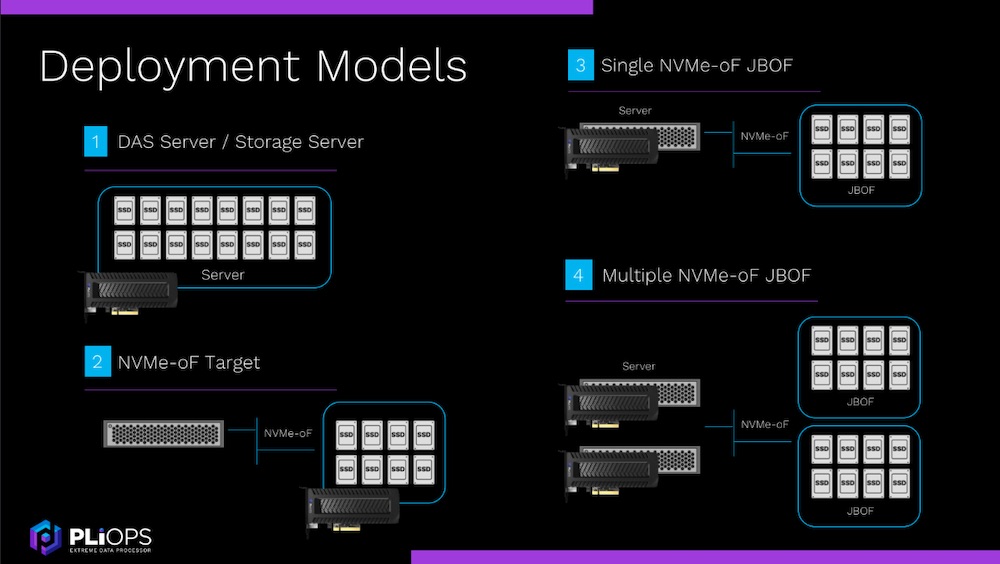

The company designs and builds XDP, the eXtreme Data Processor, a PCIe card based today on an FPGA, to be replaced in the future by an ASIC. This board is placed between the CPUs and the storage layer, exposing an NVMe block interface and a KV API. It supports any kind of SSD and NVMe, direct-attached or NVMe-oF based.

XDP continues to offer new key functions and today these data services cover essentially AccelKV, AccelDB and RAIDplus.

RAIDplus offloads the protection processing to XDP and delivers 12x faster performance in various configurations and modes, even during rebuilds. One of the effects of this approach is the positive impact on SSD endurance, whether TLC or QLC; it’s even spectacular for QLC. And the last gain is capacity expansion, thanks to an advanced compression mode for a better cost/TB.

AccelDB targets SQL engines like MySQL, MariaDB and PostgreSQL, but also NoSQL ones like MongoDB and even SDS solutions like Ceph. Benchmarks show high throughput, reduced latency, better CPU utilization and high capacity expansion in this domain, with interesting ratios. XDP also delivers 3.4x more transactions per second with MySQL and 2.3x with MongoDB.

AccelKV boosts storage engines such as WiredTiger or RocksDB, with higher multiples in all the categories listed above. It helps to build DB environments based on DRAM plus SSDs instead of full-DRAM ones, which drastically increase cost. A good example of that is the configuration of XDP coupled with Redis.

Of course there is no serious comparison with software RAID, and users of traditional RAID controllers will see multiple gains, as mentioned above, that go beyond just data protection.

XDP can be deployed in various configurations, giving flexibility: pure DAS within a server; with a networked JBOF via NVMe-oF and the board installed in the remote server; the same with the board in the client server; and finally with multiple servers, each with an XDP, connected to networked JBOFs.

Rookout

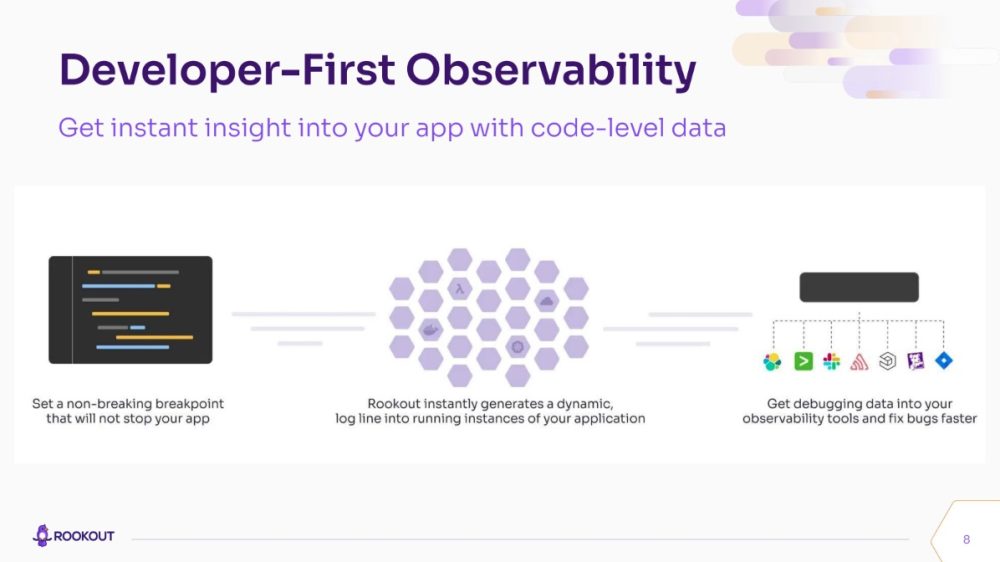

The company focuses on developers with an observability platform tailored to live code, on demand. The firm was founded in November 2017, has raised $28.4 million in 3 VC rounds and has 45 full-time employees today.

The idea came from the fact that development shifted to microservices, containers and orchestration in a DevOps mode several years ago, and the shift even accelerated, but tools for developers appear to be limited, especially for cloud-native applications.

Then came the observability approach across the whole software development lifecycle, which has become even more complex over the past few years. Rookout’s model is about connecting to the source code by adding non-breaking breakpoints in the application. The solution generates immediate information for the instrumented code thanks to a live debugger, a live logger, a live profiler and live metrics. The outcome is rapid, as it reduces the MTTR by up to 80% and the time to market, with a faster release process and improved QA.

The solution covers Kubernetes, microservices, serverless and service-mesh based applications, with multiple languages like Java, Python, .NET, Node.js, Ruby and Golang.

The firm has plenty of users like Amdocs, Cisco, Dynatrace, Booking.com, NetApp, Autodesk, Santander, Zoominfo, Backblaze or Mobileye, to name a few. And, of course, integration is key again here, with partner software, cloud providers and various online tools and services.

Beyond the 3 pillars of its observability model (logs, traces and metrics), Rookout just added a 4th one with Smart Snapshots. The platform becomes even more comprehensive with this last module, which delivers the next level in application understanding with its ability to capture application moments after incidents or unexpected events. It contributes to faster releases and time to market, with a new level of application quality. It goes beyond classic logs and traces, as snapshots contain much more information on the cause and the application context.

StorONE

This was a new meeting with the company, and we had the opportunity to see the progress made by the team.

The first item is related to team changes: Gal Naor, CEO, recruited Jeff Lamothe as chief product officer. The latter has spent time at various storage companies such as HP, EMC, Compellent and Pure Storage. This arrival is key for the company, which has a clear need to promote itself with a truly technically skilled executive.

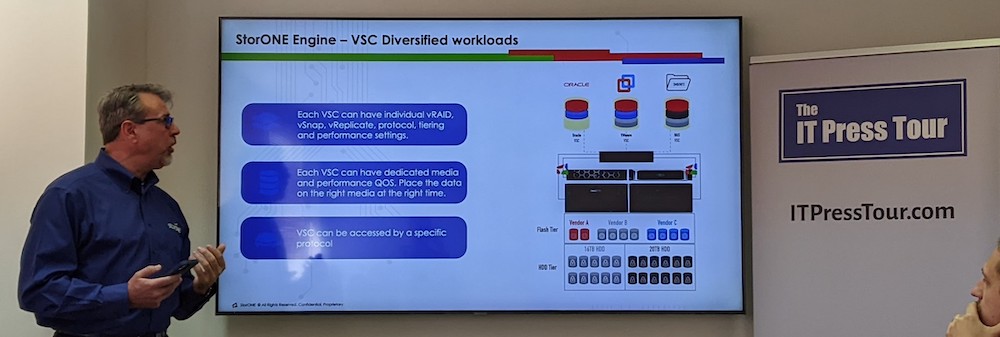

The team has started a new messaging angle around the Virtual Storage Container (VSC) concept. The company has made several iterations of product development and company positioning. Since inception, the ambition has been to shake up the industry with a new storage controller model, really in the wave of SDS, to maximize the value of storage media. They designed a cacheless solution with the idea of offering one of the best ROIs on the market. It turns out that the team has reached a new level in this mission.

VSC delivers a hypervisor-like controller, but dedicated to storage and coupled with storage volumes. This new entity runs on a single Linux server, doubled to offer high availability, and connected to disk arrays, either intelligent ones or just JBODs. Multiple VSCs are deployed on these 2 redundant nodes, and each controls its own volumes with their private attributes. These intelligent controllers provide multiple data services such as RAID levels, snapshots, replication or tiering. With its recovery model, a 20TB HDD recovers in less than 3 hours and an SSD in less than 5 minutes.

The engine is responsible for placing the data on the right volume, and the admin has to configure each VSC for its specific usage. A volume could be full flash, full HDD or a mix of the 2, with potentially automatic and transparent tiering between them. And finally a VSC exposes its data volumes through a block interface like iSCSI, a file one with NFS or SMB, or S3. This solution seems to be a good fit for the SMB+ segment for specific use cases, based on a pure software approach and COTS. StorONE software already runs in Azure, will soon run in AWS and will be referenced in the marketplace, according to the CEO.

In terms of pricing model, as always Naor tried to do things differently from the competition, and this time the team adopts a per-drive cost regardless of capacity. And of course, nobody reveals the MSRP.

The company, with more than 60 people, spent several years building its presence in and penetrating the USA. Now it is Europe’s turn, and the organization is actively recruiting in the region to build its European channel force.

Even if the company plans to be cash positive next quarter without any plan to raise a new round, we also understood that Gal Naor’s ambition is to make an IPO. We’ll see…

UnifabriX

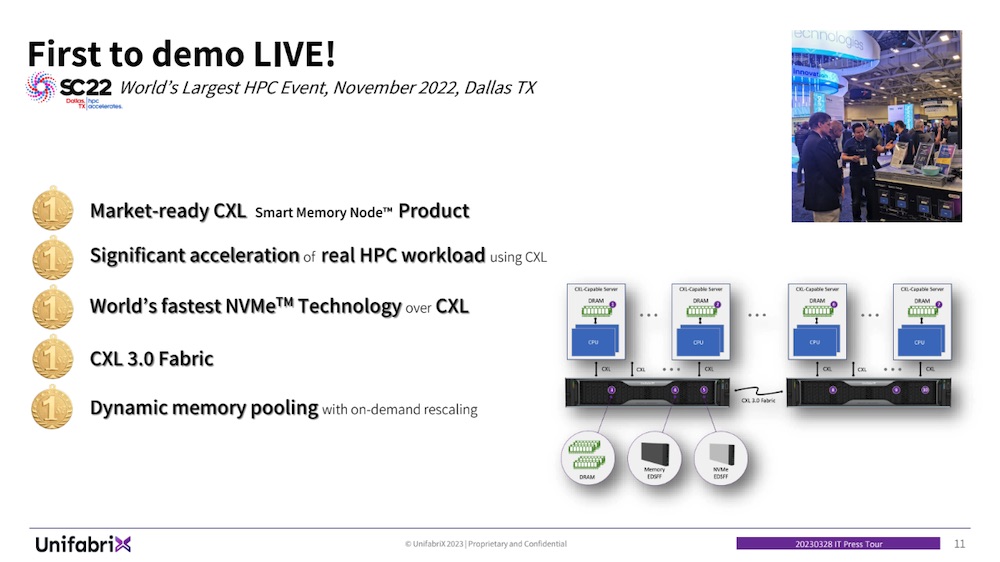

The company plays in one of the most promising IT segments for the coming years, CXL-based memory technology.

The firm was founded in 2020. We met the team at its HQ in Haifa, Israel, and realized that it was at the origin of several innovations such as the Intel IPU, FlexBus and IAL. They clearly are experts in data center architecture and high-performance data fabric design, with proven experience at industry leaders.

Addressing the next big challenge in IT, namely DRAM bandwidth, capacity and cost limitations, UnifabriX leverages CXL to solve these issues. CXL is a new standard promoted by the CXL Consortium which offers memory expansion, pooling and fabric, aligned with its various protocol iterations and versions. It helps large server pools access gigantic memory attached to them and coming from the rack members. Being key active participants in CXL design and evolution, the team has built and developed one of the most advanced CXL-based solutions today, named Smart Memory Node, which bridges servers and memory providers in a fast, intelligent and secure way.

This 2U chassis embeds memory modules and NVMe SSDs, all in the EDSFF format, plus its RPU, or Resource Processing Unit, for a total of 128TB. It already supports CXL 1.1, of course, but can work with CXL 2.0 and is ready for 3.0 plus PCIe Gen 5, as demonstrated at the recent SuperComputing show in Dallas in November. Several combinations are possible to connect and expand memory between servers. This event was a pivotal point for CXL and the company; organizers set up a dedicated CXL pavilion with plenty of vendors, all active participants in this landscape. UnifabriX was the most spectacular one, with a performance gain of 26% over saturated configurations for the HPCG benchmark. The numbers shown were impressive, with very high IO/s, very low latency and significant bandwidth.

In terms of market, the opportunity is huge; projections and expectations reach impressive numbers for the coming years, as soon as new CPUs flood the field. And it is expected to take off this year, as AMD already ships CXL-capable CPUs. Micron estimates a $20 billion market in FY30 and a memory pooling segment of between $14 and $17 billion in a global $115 billion server memory total addressable market.

Of course, this is not for everyone, and UnifabriX identifies HPC as the premier demand, followed by the cloud and on-premises markets. In other words, HPC will adopt CXL first due to its memory-demanding jobs, serving also as the proof, and then a more “classic” server market will be addressed, if we can say that. The conclusion is immediate: you have to be ready, and UnifabriX understands that.
