Hammerspace Software V.5 for High-Performance File Data Architectures

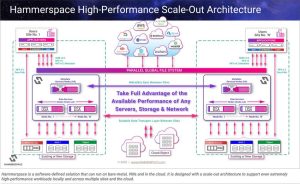

Allows organizations to take advantage of performance capabilities of any server, storage system and network anywhere.

This is a Press Release edited by StorageNewsletter.com on November 17, 2022 at 3:26 pm

- High-performance across data centers and to the cloud: saturate the available Internet or private links
- High-performance across interconnect within the data center: saturate Ethernet or IB networks within the data center
- High-performance server-local I/O: deliver to applications near-theoretical I/O subsystem maximum performance of cloud instances, VMs and bare metal servers

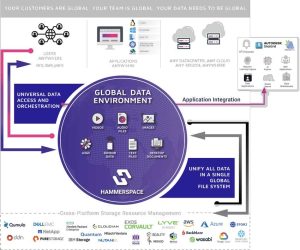

Hammerspace unveiled the performance capabilities that many of the most data-intensive organizations in the world depend on for high-performance data and storage in decentralized workflows.

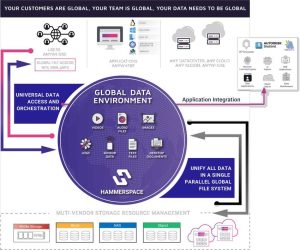

It completely changes previously held notions of how unstructured data architectures can work, delivering the performance needed to free workloads from data silos, eliminate copy proliferation, and provide direct data access to applications and users, no matter where the data is stored.

The firm’s software allows organizations to take advantage of the performance capabilities of any server, storage system and network anywhere in the world. This capability enables a unified, fast, and efficient global data environment for the entire workflow, from data creation to processing, collaboration, and archiving across edge devices, data centers, and public and private clouds.

High-performance across data centers and to cloud: Saturate available Internet or private links

Instruments, applications, compute clusters and the workforce are increasingly decentralized. With the company, all users and applications have globally shared, secured access to all data, no matter which storage platform or location it is on, as if it were all on a local NAS.

The firm overcomes data gravity to make remote data fast to use locally. Modern data architectures require data placement to be as local as possible to match the user or application’s latency and performance requirements. The company’s Parallel Global File System orchestrates data automatically and by policy in advance to make data present locally without wasting time waiting for data placement. Data placement is also fast: using dual 100GbE networks, the software can intelligently orchestrate data at 22.5GB/s to where it is needed. This performance level enables workflow automation to orchestrate data in the background on a file-granular basis, directly and by policy, making it possible to start working with the data as soon as the first file is transferred, without waiting for the entire data set to be moved locally.
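As a rough sanity check, the quoted 22.5GB/s can be compared with the raw line rate of two 100GbE links. The sketch below is illustrative arithmetic only: it assumes decimal units (1GB = 10^9 bytes) and ignores protocol overhead, and the function name is hypothetical rather than part of any Hammerspace tooling.

```python
def link_utilization(throughput_gbytes_per_s: float,
                     links: int,
                     link_gbits_per_s: float) -> float:
    """Return the fraction of raw aggregate line rate used by a throughput.

    Assumes decimal units and no protocol overhead (a simplification).
    """
    # Convert the aggregate line rate from gigabits/s to gigabytes/s.
    aggregate_gbytes_per_s = links * link_gbits_per_s / 8
    return throughput_gbytes_per_s / aggregate_gbytes_per_s


# Figures from the release: 22.5GB/s over dual 100GbE networks.
utilization = link_utilization(22.5, links=2, link_gbits_per_s=100.0)
print(f"{utilization:.0%} of raw line rate")
```

Under these assumptions, two 100GbE links carry at most 25GB/s, so 22.5GB/s corresponds to roughly 90% of the raw line rate.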

Unstructured data workloads in the cloud can take advantage of as many compute cores as are allocated and as much bandwidth as the job needs, even saturating the network within the cloud when desired to connect the compute environment with applications. A recent analysis of EDA workloads in Microsoft Azure showed that the company’s software scales performance linearly, taking advantage of the network configuration available in Azure. This high-performance cloud file access is necessary for compute-intensive use cases, including processing genomics data, rendering visual effects, training machine learning models and implementing HPC architectures in the cloud.

Performance across data centers and to cloud in Release 5 software include:

-

Backblaze, Zadara, and Wasabi support

-

Continual system-wide optimization to increase scalability, improve back-end performance, and improve resilience in very large, distributed environments

-

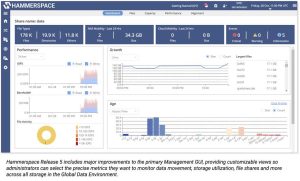

Hammerspace Management GUI, with user-customizable tiles, better administrator experience, and increased observability of activity within shares

-

Increased scale, increasing the number of Hammerspace clusters supported in a single global data environment from 8 to 16 locations

High-performance across interconnect within data center: Saturate Ethernet or IB networks within data center

Data centers need massive performance to ingest data from instruments and large compute clusters. Hammerspace makes it possible to reduce the friction between resources and get the most out of both the compute and storage environments, reducing idle time spent waiting for data to be ingested into storage.

Hammerspace supports a wide range of performance storage platforms that organizations have in place today. The power of the company’s software architecture is its ability to saturate even the fastest storage and network infrastructures, orchestrating direct I/O and scaling linearly across otherwise incompatible platforms to maximize aggregate throughput and IOPS. It does this while providing the performance of a parallel file system coupled with the ease of standards-based global NAS connectivity and out-of-band metadata updates.

In one recent test with moderately sized server configurations deploying just 16 DSX nodes, the firm’s file system took advantage of the full storage performance to hit 1.17Tb/s, which was the maximum throughput the NVMe storage could handle, with 32KB file sizes and low CPU utilization. The tests demonstrated that the performance would scale linearly to extreme levels if additional storage and networking were added.
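To put the test result in per-node terms, the arithmetic below divides the quoted 1.17Tb/s across the 16 DSX nodes and projects larger clusters under the release's linear-scaling claim. This is a hedged illustration, not a benchmark: it assumes decimal units and perfectly linear scaling, the node counts beyond 16 are hypothetical, and the function names are invented for this sketch.

```python
TOTAL_TBITS_PER_S = 1.17  # aggregate throughput quoted in the release
DSX_NODES = 16            # node count quoted in the release


def per_node_gbits_per_s(total_tbits_per_s: float, nodes: int) -> float:
    """Average throughput per node in Gb/s (decimal units)."""
    return total_tbits_per_s * 1000 / nodes


def projected_tbits_per_s(per_node_gbits: float, nodes: int) -> float:
    """Aggregate throughput in Tb/s, assuming perfectly linear scaling."""
    return per_node_gbits * nodes / 1000


per_node = per_node_gbits_per_s(TOTAL_TBITS_PER_S, DSX_NODES)
print(f"Per node: {per_node:.3f} Gb/s")

# Hypothetical larger clusters; only the 16-node case was reported as tested.
for nodes in (16, 32, 64):
    total = projected_tbits_per_s(per_node, nodes)
    print(f"{nodes} nodes: {total:.2f} Tb/s")
```

The per-node average works out to about 73Gb/s, which is in line with the 73.12Gb/s single-server figure quoted in this release. Any projection beyond 16 nodes depends on storage and networking being added in proportion.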

Management interface

Performance enhancements across interconnect within the data center in Release 5 software include:

- 20% increase in metadata performance to accelerate file creation in primary storage use cases
- Accelerated collaboration on shared files in high client count environments
- RDMA support for global data over NFS v4.2, providing high performance coupled with the simplicity and open standards of NAS protocols for all data in the global data environment, no matter where it is located

High-performance server-local I/O: Deliver to applications near-theoretical I/O subsystem maximum performance of cloud instances, VMs, and bare metal servers

High-performance use cases, edge environments and DevOps workloads all benefit from leveraging the full performance of the local server. The company’s solution takes full advantage of the underlying infrastructure, delivering 73.12Gb/s performance from a single NVMe-based server, providing nearly the same performance through the file system that would be achieved on the same server hardware with direct-to-kernel access. The Parallel Global File System architecture separates the metadata control plane from the data path and can use embedded parallel file system clients with NFS v4.2 in Linux, resulting in minimal overhead in the data path.

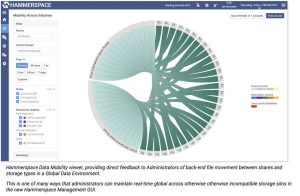

Mobility interface

For servers running at the edge, the software handles situations where edge or remote sites become disconnected. Since file metadata is global across all sites, local read/write continues until the site reconnects, at which time the metadata synchronizes with the rest of the global data environment.

David Flynn, founder and CEO, Hammerspace and previous co-founder and CEO, Fusion-IO, said: “Technology typically follows a continuum of incremental advancements over previous generations. But every once in a while, a quantum leap forward is taken with innovation that changes paradigms. This was the case at Fusion-IO when we invented the concept of highly-reliable high-performance SSDs that ultimately became the NVMe technology. Another paradigm shift is upon us to create high-performance global data architectures incorporating instruments and sensors, edge sites, data centers, and diverse cloud regions.”

Eyal Waldman, co-founder and previous CEO, Mellanox Technologies, and advisory board member, Hammerspace said: “The innovation at Mellanox was focused on increasing data center efficiency by providing the highest throughput and lowest latency possible in the data center and in the cloud to deliver data faster to applications and unlock system performance capability. I see high-performance access to global data as the next step in innovation for high-performance environments. The challenge of fast networks and fast computers has been well solved for years but making remote data available to these environments was a poorly solved problem until Hammerspace came into the market. Hammerspace makes it possible to take cloud and data utilization to the next level of decentralization, where data resides.”

Trond Myklebust, maintainer, Linux Kernel NFS Client and CTO, Hammerspace, said: “Hammerspace helped drive the IETF process and wrote enterprise quality code based on the standard, making NFS4.2 enterprise-grade parallel performance NAS a reality.”

Jeremy Smith, CTO, Jellyfish Pictures, said: “We wanted to see if the technology really stood up to all the hype about RDMA to NFS4.2 performance. The interconnectivity that RoCE/RDMA provides is really outstanding. When looking to get the maximum amount of performance for our clients, enabling this was an obvious choice.”

Mark Nossokoff, research director, Hyperion Research, said: “Data being consumed by both traditional HPC modeling and simulation workloads and modern AI and HPDA workloads is being generated, stored, and shared between a disparate range of resources, such as the edge, HPC data centers, and the cloud. Current HPC architectures are struggling to keep up with the challenges presented by such a distributed data environment. By addressing the key areas of collaboration at scale while supporting system performance capabilities and minimizing potential costly data movement in HPC cloud environments, Hammerspace aims to deliver a key missing ingredient that many HPC users and system architects are looking for.”

Resources:

Technical Brief

Hammerspace Technology

Data Unchained Podcast with Eyal Waldman, co-founder and previous CEO, Mellanox Technologies

Data Unchained Podcast with Jeremy Smith, CTO, Jellyfish Pictures
