Xinnor xiRAID: Efficient RAID for NVMe Drives

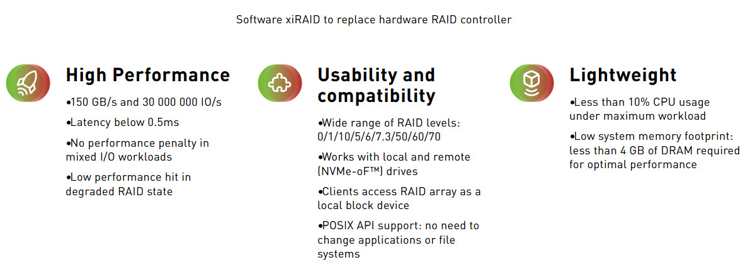

High-performance software module to replace any other hardware, hybrid, or software RAID

This is a press release edited by StorageNewsletter.com on October 21, 2022 at 2:02 pm, from Xinnor.

Since the introduction of NVMe SSDs, the question of RAID has been ever-present. Traditional technologies haven’t been able to scale in proportion to the speed increase of new devices, so most multi-drive installations relied on mirroring, sacrificing TCO benefits.

At Xinnor (Haifa, Israel), we applied our experience in mathematics and software architecture, along with our knowledge of modern CPUs, to create a new generation of RAID: a product designed to perform in modern compute and storage environments, a RAID product for NVMe.

The design goals for the product were simple:

-

Today the fastest enterprise storage devices in their raw form can deliver up to 2.5 million IO/s and 13GB/s of throughput. We want our RAID groups to deliver at least 90% of these, scaling to dozens of individual devices.

-

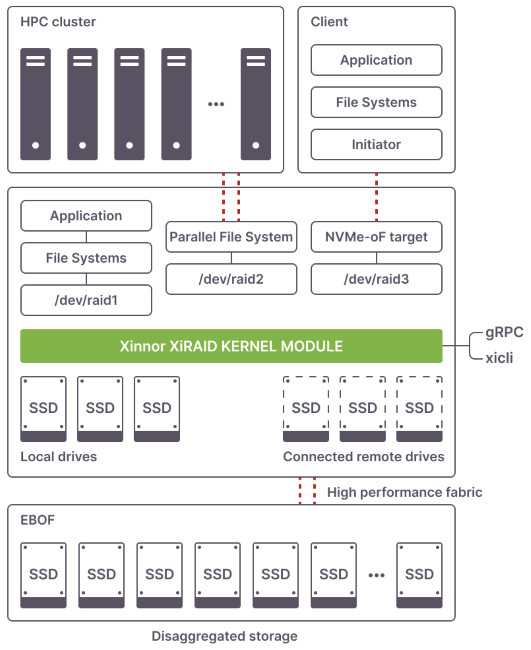

Our product need to be usable in disaggregated/composable (CDI) infrastructures with network-attached NVMe storage.

-

Installation and tuning need to be fast and simple.

-

We are a service part of a greater infrastructure, so there should be all the necessary APIs to easily integrate our product with the ecosystem.

-

Low CPU and memory footprint. Host resources are precious and they belong to the business application. As a service, we need to be as compact as possible.

The result of this vision is xiRAID: a simple, high-performance software module to replace any other hardware, hybrid, or software RAID.

xiRAID in relation to other system components

Before we show the solution, let’s take a closer look at the issue. We’ll run a simple test of 24 NVMe devices grouped together with mdraid which, together with its sibling Intel VROC, is a staple software RAID for Linux. We run a random 4k read workload on the bench configuration detailed below.

Performance hits a wall just below 2 million IO/s. Launching htop, we can see the issue: some CPU cores run at or close to 100% load, while others are idle.


What we have now is an unbalanced storage subsystem that is prone to bottlenecks. Add a business application on top of this, and you have a CPU-starved app competing with a CPU-hungry storage service, pushing each other down on the performance chart.
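htop is the quickest way to see this imbalance. You can also capture it in a script by computing per-core utilization from two snapshots of the Linux `/proc/stat` counters. Below is a minimal Python sketch; the function names are hypothetical. It treats idle and iowait time as idle and counts everything else in the first eight counter columns as busy.

```python
import time
from typing import Dict, Tuple


def parse_proc_stat(text: str) -> Dict[str, Tuple[int, int]]:
    """Map each per-core line ("cpu0", "cpu1", ...) to (busy_jiffies, total_jiffies)."""
    cores: Dict[str, Tuple[int, int]] = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip non-CPU lines and the aggregate "cpu" line; keep only per-core ones.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        # user nice system idle iowait irq softirq steal; guest time is already
        # included in user/nice, so the guest columns are not added again.
        values = [int(v) for v in fields[1:9]]
        total = sum(values)
        idle = values[3] + values[4]  # idle + iowait
        cores[fields[0]] = (total - idle, total)
    return cores


def per_core_busy(before: str, after: str) -> Dict[str, float]:
    """Busy percentage of each core between two /proc/stat snapshots."""
    start, end = parse_proc_stat(before), parse_proc_stat(after)
    busy: Dict[str, float] = {}
    for cpu, (busy_end, total_end) in end.items():
        busy_start, total_start = start.get(cpu, (0, 0))
        elapsed = total_end - total_start
        busy[cpu] = 100.0 * (busy_end - busy_start) / elapsed if elapsed else 0.0
    return busy


def sample(interval: float = 1.0) -> Dict[str, float]:
    """Take two /proc/stat snapshots `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = f.read()
    return per_core_busy(before, after)
```

Call `sample(5.0)` while the fio workload is running and compare `max(busy.values())` with `min(busy.values())`. A wide spread, with some cores near 100%, is the same imbalance htop shows.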

In xiRAID, we make it a priority to even out CPU core load and avoid costly locks during stripe refresh. Let’s see it in action.

In our lab, we’re running Oracle Linux 8.4, but the product is available on different Linux distributions and kernels. Our bench configuration is as follows:

System information:

Processor: AMD EPYC 7702P 64-Core @ 2.00GHz

Core Count: 64

Extensions: SSE 4.2 + AVX2 + AVX + RDRAND + FSGSBASE

Cache Size: 16MB

Microcode: 0x830104d

Core Family: Zen 2

Scaling Driver: acpi-cpufreq performance (Boost: Enabled)

Graphics: ASPEED

Screen: 800×600

Motherboard: Viking Enterprise Solutions RWH1LJ-11.18.00

BIOS Version: RWH1LJ-11.18.00

Chipset: AMD Starship/Matisse

Network: 2 x Mellanox MT2892 + 2 x Intel I350

Memory: 8 x 32 GB DDR4-3200MT/s Samsung M393A4K40DB3-CWE

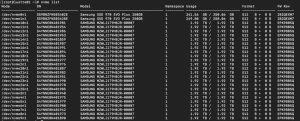

Disk: 2 x Samsung SSD 970 EVO Plus 250GB + 48 x 1,920GB SAMSUNG MZWLJ1T9HBJR-00007

File-System: ext4

Mount Options: relatime rw

Disk Scheduler: NONE

Disk Details: raid1 nvme0n1p3[0] nvme1n1p3[1] Block Size: 4096

OS: Oracle Linux 8.4

Kernel: 5.4.17-2102.203.6.el8uek.x86_64 (x86_64)

Compiler: GCC 8.5.0 20210514

Security: itlb_multihit: Not affected + l1tf: Not affected + mds: Not affected + meltdown: Not affected + spec_store_bypass: Vulnerable + spectre_v1: Vulnerable: __user pointer sanitization and usercopy barriers only; no swapgs barriers + spectre_v2: Vulnerable IBPB: disabled STIBP: disabled + srbds: Not affected + tsx_async_abort: Not affected

NVMe drives list


Installation steps:

- Install the DKMS dependencies. For Oracle Linux, we need the kernel-uek-devel package:

  dnf install kernel-uek-devel

- Install EPEL:

  dnf -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

- Add the Xinnor repository:

  dnf install https://pkg.xinnor.io/repositoryRepository/oracle/8.4/kver-5.4/xiraid-repo-1.0.0-29.kver.5.4.noarch.rpm

- Install the xiRAID packages:

  dnf install xiraid-release

At this point the product is ready, and you can use it with up to 4 NVMe devices. For larger installations, you’ll need a license key. Note that we provide temporary licenses for POCs and benchmarking.

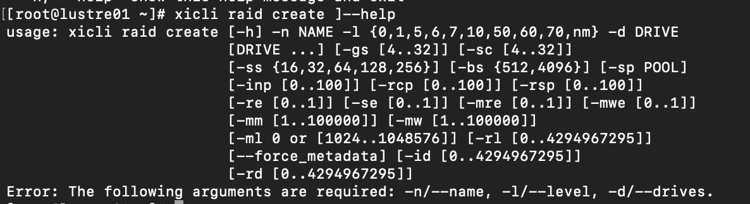

Array creation

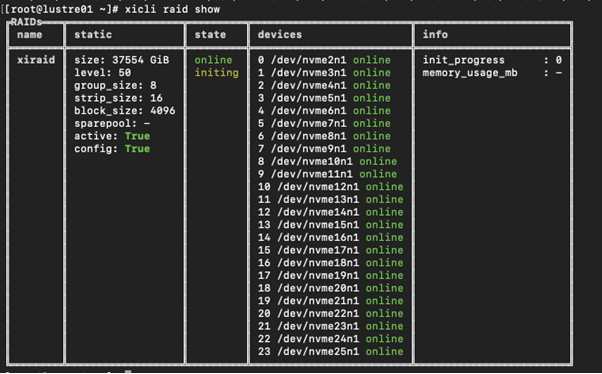

xicli raid create -n xiraid -l 50 -gs 8 -d /dev/nvme{2..25}n1

This will build a RAID-50 volume with 8 drives per group and a total of 24 devices. No extra tuning is applied.
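With 24 drives and 8 drives per group, the volume consists of three RAID-5 groups striped together. Each group gives up one drive's worth of capacity to parity. Below is a small Python sketch of that arithmetic, using the 1,920GB drives from the bench configuration. The helper is hypothetical, and it assumes no capacity is lost to metadata or spare drives.

```python
from typing import NamedTuple


class Raid50Layout(NamedTuple):
    groups: int
    data_drives: int
    usable_tb: float
    efficiency: float


def raid50_layout(total_drives: int, group_size: int, drive_tb: float) -> Raid50Layout:
    """Nominal RAID-50 capacity: each group of `group_size` drives is a RAID-5
    set that gives up one drive's worth of capacity to parity."""
    if group_size < 3 or total_drives % group_size:
        raise ValueError("drives must split evenly into RAID-5 groups of at least 3")
    groups = total_drives // group_size
    data_drives = groups * (group_size - 1)
    return Raid50Layout(groups, data_drives, data_drives * drive_tb,
                        data_drives / total_drives)


layout = raid50_layout(total_drives=24, group_size=8, drive_tb=1.92)
print(f"{layout.groups} groups, {layout.data_drives} data drives, "
      f"~{layout.usable_tb:.2f} TB usable ({layout.efficiency:.1%} of raw)")
```

For this configuration the sketch gives 3 groups and 21 data drives, about 40.3 TB usable, or 87.5% of raw capacity. Like any RAID-50, the volume tolerates one failed drive per group.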

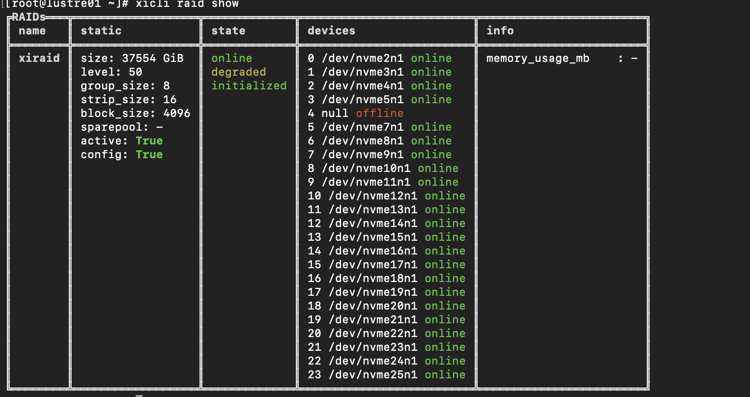

To check the result, run xicli raid show.

Note: pay attention to the "initing" state. Full volume initialization is necessary for correct results, so only start tests after it has finished.
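If your benchmarks are scripted, the script can wait for initialization before starting fio. This minimal Python sketch assumes only what the note above implies: `xicli raid show` prints the word "initing" while the volume is initializing. The helper names, polling interval, and timeout are hypothetical. Check the xiRAID Command Reference for the exact output format.

```python
import subprocess
import time
from typing import Callable


def xicli_raid_show() -> str:
    """Return the text output of `xicli raid show`."""
    result = subprocess.run(["xicli", "raid", "show"],
                            capture_output=True, text=True, check=True)
    return result.stdout


def wait_for_init(show: Callable[[], str] = xicli_raid_show,
                  poll_seconds: float = 60.0,
                  timeout_seconds: float = 24 * 3600,
                  sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll until "initing" no longer appears in the output; return the poll count."""
    polls = 0
    waited = 0.0
    while True:
        polls += 1
        if "initing" not in show():
            return polls
        if waited >= timeout_seconds:
            raise TimeoutError("volume is still initializing")
        sleep(poll_seconds)
        waited += poll_seconds
```

The `show` and `sleep` parameters exist so the loop can be exercised without a real array. In production, call `wait_for_init()` with the defaults.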

While our volume is initializing, let’s have an overview of some of xiRAID features:

-

Multiple supported RAID levels from 0 to 70

-

Customizable strip size

-

64 individual storage devices limit

-

Customizable group size for RAID-50, -60, -70

-

Tunable priority for system tasks: volume initialization and rebuild

-

-

List volumes and their properties

-

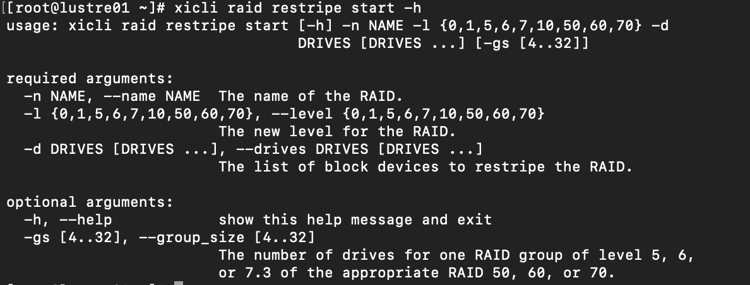

Grow volumes and change RAID levels

-

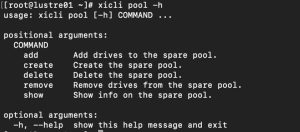

Create and manage spare pools

Now that our volume is ready, let’s get to the testing.

All our tests are easy to understand and repeat. We are using a reasonably “heavy” system without stretching into the realm of theoretical benchmarks, so the results should give you an idea of what our product can do on COTS hardware.

We are sharing all the details so that you can try this in your own environment.

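To create a baseline, we’ll first evaluate raw device performance using all 24 SSDs with a series of simple fio tests. The same job file is run once per workload: rw=randread, rw=randwrite, rw=randrw with rwmixread=50, and rw=randrw with rwmixread=70. The random read variant is shown:

[global]

rw=randread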

bs=4k

iodepth=64

direct=1

ioengine=libaio

runtime=600

numjobs=3

gtod_reduce=1

norandommap

randrepeat=0


buffered=0

size=100%

time_based

refill_buffers

group_reporting

[job1]

filename=/dev/nvme2n1

[job2]

filename=/dev/nvme3n1

[job3]

filename=/dev/nvme4n1

[job4]

filename=/dev/nvme5n1

[job5]

filename=/dev/nvme6n1

[job6]

filename=/dev/nvme7n1

[job7]

filename=/dev/nvme8n1

[job8]

filename=/dev/nvme9n1

[job9]

filename=/dev/nvme10n1

[job10]

filename=/dev/nvme11n1

[job11]

filename=/dev/nvme12n1

[job12]

filename=/dev/nvme13n1

[job13]

filename=/dev/nvme14n1

[job14]

filename=/dev/nvme15n1

[job15]

filename=/dev/nvme16n1

[job16]

filename=/dev/nvme17n1

[job17]

filename=/dev/nvme18n1

[job18]

filename=/dev/nvme19n1

[job19]

filename=/dev/nvme20n1

[job20]

filename=/dev/nvme21n1

[job21]

filename=/dev/nvme22n1

[job22]

filename=/dev/nvme23n1
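The job file repeats the same two lines for every drive, so it is easy to generate. The Python sketch below writes one job file per baseline workload with the same global options. The function and file names are hypothetical; they are not part of xiRAID or fio. By default it covers /dev/nvme2n1 through /dev/nvme25n1, the 24 devices used for the array; adjust `first` and `last` to match your system. Be aware that the write and mixed workloads overwrite the raw devices.

```python
from typing import List, Optional

GLOBAL_OPTIONS = [
    "bs=4k", "iodepth=64", "direct=1", "ioengine=libaio", "runtime=600",
    "numjobs=3", "gtod_reduce=1", "norandommap", "randrepeat=0",
    "buffered=0", "size=100%", "time_based", "refill_buffers", "group_reporting",
]

# The four baseline workloads: file suffix -> (rw mode, rwmixread or None).
WORKLOADS = {
    "randread": ("randread", None),
    "randwrite": ("randwrite", None),
    "mixed50": ("randrw", 50),
    "mixed70": ("randrw", 70),
}


def fio_jobfile(rw: str, first: int = 2, last: int = 25,
                rwmixread: Optional[int] = None) -> str:
    """Build a fio job file with one job per raw namespace /dev/nvme{N}n1."""
    lines = ["[global]", f"rw={rw}"]
    if rwmixread is not None:
        # fio expects a plain integer percentage here, e.g. rwmixread=70
        lines.append(f"rwmixread={rwmixread}")
    lines += GLOBAL_OPTIONS
    for job, dev in enumerate(range(first, last + 1), start=1):
        lines += ["", f"[job{job}]", f"filename=/dev/nvme{dev}n1"]
    return "\n".join(lines) + "\n"


def write_jobfiles(prefix: str = "baseline") -> List[str]:
    """Write one .fio file per workload and return their paths."""
    paths = []
    for name, (rw, mix) in WORKLOADS.items():
        path = f"{prefix}-{name}.fio"
        with open(path, "w") as f:
            f.write(fio_jobfile(rw, rwmixread=mix))
        paths.append(path)
    return paths
```

Each generated file is then run on its own, for example `fio baseline-randread.fio`.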

Baseline results for 4k block size:

- 17.3 million IO/s random read

- 2.3 million IO/s random write

- 4 million IO/s 50/50 mixed

- 5.7 million IO/s 70/30 mixed

An important note for write testing: due to the nature of NAND flash, the write performance of fresh drives differs greatly from sustained performance, which sets in once NAND garbage collection is running on the drives. To get results that are repeatable and closely match real-world production environments, the drives must be pre-conditioned as described in the SNIA methodology.

We are following this spec for all our benchmarks.

Proper pre-conditioning techniques are out of the scope of this publication. In general, though, a drive sanitize, followed by overwriting the entire drive capacity twice and then immediately by the test workload, is good enough for most simple benchmarks.
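The overwrite part of this recipe can be scripted with fio. The Python sketch below builds one fio command per device that writes the full capacity sequentially twice (`--rw=write`, `--size=100%`, `--loops=2`) and runs all devices in parallel. The helper names are hypothetical. The sanitize step is left out because how it is done depends on the drive. The 128k block size and queue depth of 32 are assumptions, not values from our tests. Running it destroys all data on the listed devices.

```python
import subprocess
from typing import List


def precondition_cmd(device: str, passes: int = 2, bs: str = "128k") -> List[str]:
    """fio command that sequentially overwrites the whole device `passes` times."""
    return [
        "fio",
        f"--name=precondition-{device.rsplit('/', 1)[-1]}",
        f"--filename={device}",
        "--rw=write",          # sequential writes across the full LBA range
        f"--bs={bs}",
        "--iodepth=32",
        "--direct=1",
        "--ioengine=libaio",
        "--size=100%",         # cover the entire device capacity
        f"--loops={passes}",   # repeat the full overwrite
    ]


def precondition_all(devices: List[str]) -> None:
    """Run the overwrite passes on all devices in parallel and wait for them."""
    procs = [subprocess.Popen(precondition_cmd(d)) for d in devices]
    for p in procs:
        if p.wait() != 0:
            raise RuntimeError(f"fio failed: {' '.join(p.args)}")
```

To keep the drives in a steady state, start the test workload right after `precondition_all(...)` returns.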

Now that we have our baseline, let’s test xiRAID:

xiRAID RAID-50 4k results:

- 16.8 million IO/s random reads

- 1.1 million IO/s random writes

- 3.7 million IO/s mixed rw

Compared to the baseline, xiRAID performs at:

- 97% for random reads, the fastest RAID performance in the industry

- 48% for random writes, close to the theoretical maximum of 50% imposed by the RAID read-modify-write penalty
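The 50% ceiling comes from simple arithmetic. In a RAID-5 group, each small random write must update both the data strip and the parity strip, so it costs two device writes. Reads of the old data and parity add further cost. Below is a Python sketch of that write-only bound, applied to the measured numbers. The function name is hypothetical, and the model deliberately ignores the extra reads.

```python
def rmw_write_ceiling(raw_write_iops: float, parity_strips: int = 1) -> float:
    """Upper bound on small random-write IOPS for a parity RAID.

    Each small host write rewrites one data strip plus `parity_strips`
    parity strips, i.e. (1 + parity_strips) device writes. The reads of old
    data and parity are ignored in this simple model.
    """
    return raw_write_iops / (1 + parity_strips)


baseline_write_iops = 2_300_000  # raw 4k random write, all drives
xiraid_write_iops = 1_100_000    # xiRAID RAID-50 4k random write

ceiling = rmw_write_ceiling(baseline_write_iops)  # RAID-5 groups: one parity strip
share_of_baseline = xiraid_write_iops / baseline_write_iops
share_of_ceiling = xiraid_write_iops / ceiling
print(f"ceiling {ceiling / 1e6:.2f}M IO/s; xiRAID = {share_of_baseline:.0%} of raw, "
      f"{share_of_ceiling:.0%} of the ceiling")
```

The bound is 1.15 million IO/s, so the measured 1.1 million IO/s is roughly 96% of it. With two parity strips (`parity_strips=2`, as in RAID-6), the same bound drops to a third of raw write IOPS.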

As an extra step, our benchmarks always include a test of system performance in degraded mode, with one failed device.

The simplest way to simulate a drive failure with the xiRAID CLI:

xicli raid replace -n xiraid -no 4 -d null

Re-running the tests gives the following results:

- 14.3 million IO/s random reads

- 1.1 million IO/s random writes

- 3.1 million IO/s mixed rw

With xiRAID, performance drops by at most 15% in degraded mode, compared to 50-60% for other solutions.
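Degraded mode is expensive because the data on the failed drive no longer exists on disk. In a RAID-5 group, and therefore in each group of a RAID-50 volume, a missing strip is recomputed by XOR-ing the corresponding strips of all surviving drives. With 8 drives per group, a read that lands on the failed drive turns into seven reads. The Python sketch below shows textbook XOR parity and rebuild. It is a generic illustration, not xiRAID's implementation.

```python
from typing import List


def xor_strips(strips: List[bytes]) -> bytes:
    """Byte-wise XOR of equally sized strips (RAID-5 parity)."""
    out = bytearray(len(strips[0]))
    for strip in strips:
        if len(strip) != len(out):
            raise ValueError("all strips must have the same size")
        for i, byte in enumerate(strip):
            out[i] ^= byte
    return bytes(out)


def reconstruct(stripe: List[bytes], failed: int) -> bytes:
    """Rebuild the strip held by the failed drive from all surviving strips."""
    survivors = [s for i, s in enumerate(stripe) if i != failed]
    return xor_strips(survivors)


# One stripe of an 8-drive group: 7 data strips followed by their parity strip.
data = [bytes([n] * 4) for n in range(1, 8)]
stripe = data + [xor_strips(data)]
rebuilt = reconstruct(stripe, failed=3)  # 7 reads to recover 1 strip
```

This works because XOR is its own inverse: the parity is the XOR of all data strips, so XOR-ing the parity with the surviving data strips leaves exactly the missing one.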

TL;DR summary of the xiRAID benchmark: we achieved an industry-leading result, a software RAID-50 volume operating at 97% of baseline in random read tests and dropping 15% during a simulated drive failure.

Summary table (for your convenience, we have rounded the results to hundreds of kIO/s):

| Configuration | Random read 4k, kIO/s | % of baseline |
|---|---|---|
| Baseline raw performance | 17,300 | 100.00% |
| xiRAID | 16,800 | 97.11% |
| xiRAID degraded | 14,300 | 82.66% |
| MDRAID | 1,900 | 10.98% |
| MDRAID degraded | 200 | 1.17% |
| ZFS | 200 | 1.17% |
| ZFS degraded | 200 | 1.17% |

| Configuration | Random write 4k, kIO/s | % of baseline |
|---|---|---|
| Baseline | 2,300 | 100.00% |
| xiRAID | 1,100 | 47.83% |
| MDRAID | 200 | 8.70% |
| ZFS | 20 | 0.87% |

We encourage you to run similar tests on your own infrastructure. Feel free to reach out to us at request@xinnor.io for detailed installation instructions and trial licenses.

About Xinnor

Xinnor develops xiRAID, a high-performance software RAID engine. It is the product of a decade of mathematical research, data protection algorithms, and in-depth knowledge of modern CPU operation. Although it works with all types of storage devices, it really shines when deployed with NVMe or NVMe-oF devices. It is the only software solution on the market capable of driving up to 97% of raw device performance in a computationally heavy RAID-5 configuration, while maintaining a very modest load on the host CPU and a low memory footprint.

Resources:

Xinnor xiRAID System Requirements.pdf

Xinnor xiRAID Installation Guide.pdf

Xinnor xiRAID Administrators Guide.pdf

Xinnor xiRAID Command Reference.pdf
