MinIO Object Storage Running on Google Cloud Platform

By Pete Hnath

This is a Press Release edited by StorageNewsletter.com on July 20, 2022 at 2:02 pm. Blog written on July 13, 2022 by Pete Hnath, focused on building customer education at MinIO, Inc.

As organizations reorganize around their data, they are becoming application-oriented. The modern application is cloud native and data-centric, and it benefits from decoupled, stateless, immutable services capable of exceptional performance and scale.

While MinIO is available on every cloud (public, private, and edge), this post focuses on Google Cloud Platform (GCP) and on why you need MinIO within GCP to achieve a consistent user experience and consistent application performance.

The post covers the following areas:

- Deploying MinIO within GCP

- Improving performance of data intensive workloads

- Providing portability across public clouds and Kubernetes

- Leveraging multiple storage tiers to improve application efficiency

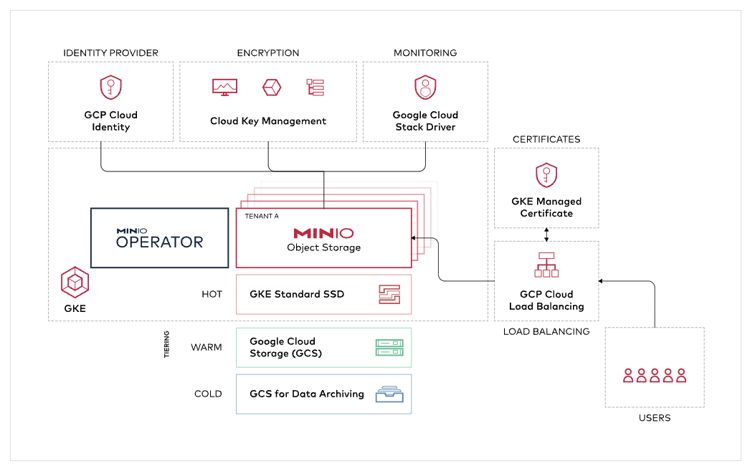

Deploying MinIO within GCP

When Kubernetes is deployed on Google Cloud, whether as Google Kubernetes Engine (GKE) or as a commercial distribution such as Red Hat OpenShift or VMware Tanzu, applications are largely abstracted from the underlying compute, network, and storage; each is an elastic cloud service that can be provisioned and managed independently of the cluster. That abstraction does not, however, stop any one of them from becoming the constraint on storage, retrieval, and processing.

MinIO is a data store, and there are two primary ways to deploy it on GCP. The first is the marketplace model. We looked at the full range of infrastructure options and delivered an opinionated implementation that covers all of the key areas. We evaluated dozens of instance types for their suitability for MinIO, taking into account factors such as CPU type, number of cores, storage type, and network performance. We then benchmarked MinIO on these instance types to determine the best price-performance ratio. The winner was four n2-standard-32 instances. With those, you can expect up to 2.4 GiB/s of PUT and 5.1 GiB/s of GET throughput.
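
As a rough sanity check on those figures, the sketch below divides the quoted aggregate throughput across the four nodes and estimates how long a 1 TiB transfer would take. It assumes the load spreads evenly across nodes and that the rates are sustained; the 1 TiB dataset size is an arbitrary illustration, not part of the benchmark.

```python
# Back-of-envelope math using the benchmark figures quoted above:
# 4 x n2-standard-32 nodes, 2.4 GiB/s aggregate PUT, 5.1 GiB/s aggregate GET.
NODES = 4
PUT_GIB_S = 2.4
GET_GIB_S = 5.1


def per_node(aggregate_gib_s: float, nodes: int = NODES) -> float:
    """Average throughput each node contributes, assuming an even spread."""
    return aggregate_gib_s / nodes


def seconds_to_transfer(dataset_gib: float, rate_gib_s: float) -> float:
    """Time to move a dataset at a sustained rate (ignores ramp-up and contention)."""
    return dataset_gib / rate_gib_s


if __name__ == "__main__":
    one_tib = 1024  # GiB; an illustrative dataset size
    print(f"PUT per node: {per_node(PUT_GIB_S):.3f} GiB/s")
    print(f"GET per node: {per_node(GET_GIB_S):.3f} GiB/s")
    print(f"Write 1 TiB: {seconds_to_transfer(one_tib, PUT_GIB_S) / 60:.1f} min")
    print(f"Read 1 TiB:  {seconds_to_transfer(one_tib, GET_GIB_S) / 60:.1f} min")
```

At the quoted rates, writing 1 TiB takes roughly 7 minutes and reading it back takes under 4 minutes; workloads dominated by small objects or mixed traffic will behave differently.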

Beyond the pre-vetting of infrastructure, the marketplace model has the value of consolidating MinIO data store costs within a customer’s overall Google purchase commitment.

The second way to deploy MinIO is through a “roll your own” approach. There are more than 150,000 of these deployments running on GCP. One way to make the “roll your own” even easier is to leverage the MinIO Operator. The MinIO Operator works with any flavor of Kubernetes to provision MinIO on top of GCP infrastructure.

We are comfortable with developers going in either direction because the end result is the same: S3-compatible object storage that reduces operational complexity, performs at scale, and remains portable across Kubernetes clusters.

Improving Performance of Data Intensive Workloads

Data-intensive workloads such as AI/ML, databases, cloud-native applications, streaming data services, and disaster recovery (DR) are particularly dependent on optimized software-defined storage (SDS) for application performance. MinIO optimizes data access, delivering high throughput, scalability, and portability. Given the throughput figures outlined above, MinIO delivers the performance needed to power demanding workloads such as Apache Spark, Starburst Presto/Trino, ClickHouse, and just about any other cloud-native database, analytics, or AI/ML workload.

What are the implications for GCP deployments? Choose the fastest network your budget and workload allow. With NVMe drives, MinIO will saturate the network; with HDDs, the drives are likely to saturate first. Either way, optimizing data-intensive workloads will generally max out the underlying infrastructure before it maxes out MinIO. Architect accordingly.
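
The rule of thumb above amounts to finding the slowest per-node limit. This minimal sketch compares network, drive, and software ceilings and reports which one binds. Every number in the example profiles is a hypothetical placeholder, not a GCP instance specification or a MinIO benchmark result; substitute measured values for your own instances.

```python
from dataclasses import dataclass
from typing import Tuple

# 1 GiB = 2**30 bytes = 8.589934592 gigabits
GBIT_PER_GIB = 2**30 * 8 / 1e9


@dataclass
class NodeProfile:
    """Hypothetical per-node limits; replace with measured values."""
    nic_gbps: float        # network bandwidth, gigabits per second
    drives: int            # drives attached to the node
    drive_gib_s: float     # sustained throughput per drive, GiB/s
    software_gib_s: float  # assumed ceiling of the storage software, GiB/s


def bottleneck(node: NodeProfile) -> Tuple[str, float]:
    """Return the name and throughput (GiB/s) of the tightest per-node limit."""
    limits = {
        "network": node.nic_gbps / GBIT_PER_GIB,
        "drives": node.drives * node.drive_gib_s,
        "software": node.software_gib_s,
    }
    name = min(limits, key=limits.get)
    return name, limits[name]


if __name__ == "__main__":
    # Placeholder profiles: same NIC, fast NVMe-class drives vs. slower HDD-class drives.
    nvme = NodeProfile(nic_gbps=32, drives=8, drive_gib_s=2.0, software_gib_s=20.0)
    hdd = NodeProfile(nic_gbps=32, drives=8, drive_gib_s=0.2, software_gib_s=20.0)
    for label, node in (("NVMe", nvme), ("HDD", hdd)):
        name, rate = bottleneck(node)
        print(f"{label}: limited by {name} at {rate:.2f} GiB/s")
```

With these placeholder inputs the NVMe profile is network-bound and the HDD profile is drive-bound, which mirrors the guidance in the paragraph above.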

Providing Portability Across Public Clouds and Kubernetes

One thing about the public clouds that is not readily evident is that they are incompatible with each other, particularly when it comes to storage. AWS has S3, which is the de facto standard. Azure has its Blob API. GCP has its own object storage API. An application built against the storage API of one will not run on another.

There is a way to address this storage incompatibility: run MinIO as the object store and use whatever infrastructure makes sense for the budget or workload. This works because MinIO is not only S3-compatible but also runs natively on all of the public clouds, courtesy of Kubernetes.
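
Portability rests on every S3 client speaking the same protocol, including AWS Signature Version 4 (SigV4) request signing, which MinIO implements. The stdlib-only sketch below signs a path-style GetObject request; pointing the same code at MinIO on GCP or at another S3-compatible endpoint changes only the endpoint URL. The endpoints, bucket, object key, and credentials are hypothetical, and `us-east-1` is assumed as the region (MinIO's default). In practice you would use an S3 SDK rather than hand-rolled signing.

```python
import datetime
import hashlib
import hmac
from typing import Dict, Optional
from urllib.parse import quote, urlsplit

# SHA-256 of an empty body; GET requests carry no payload.
EMPTY_SHA256 = hashlib.sha256(b"").hexdigest()


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_get_object(endpoint: str, bucket: str, key: str,
                    access_key: str, secret_key: str,
                    region: str = "us-east-1",
                    now: Optional[datetime.datetime] = None) -> Dict[str, str]:
    """Return headers for a SigV4-signed, path-style S3 GetObject request."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = urlsplit(endpoint).netloc  # includes a non-default port, if any
    canonical_uri = quote(f"/{bucket}/{key}", safe="/")

    # Signed headers: lowercase names, sorted, each line terminated by "\n".
    headers = {"host": host, "x-amz-content-sha256": EMPTY_SHA256, "x-amz-date": amz_date}
    signed_headers = ";".join(sorted(headers))
    canonical_headers = "".join(f"{k}:{headers[k]}\n" for k in sorted(headers))
    canonical_request = "\n".join(
        ["GET", canonical_uri, "", canonical_headers, signed_headers, EMPTY_SHA256])

    scope = f"{date_stamp}/{region}/s3/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()])

    # Derive the signing key: secret -> date -> region -> service -> "aws4_request".
    k = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        k = _hmac(k, part)
    signature = hmac.new(k, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}")
    return headers


if __name__ == "__main__":
    fixed = datetime.datetime(2022, 7, 13, tzinfo=datetime.timezone.utc)
    # Same code, two hypothetical S3-compatible endpoints; only the URL differs.
    for endpoint in ("https://minio-gcp.example.com", "https://minio-onprem.example.com:9000"):
        hdrs = sign_get_object(endpoint, "analytics", "events/2022-07-13.json",
                               "EXAMPLE_ACCESS_KEY", "EXAMPLE_SECRET_KEY", now=fixed)
        print(hdrs["host"], hdrs["authorization"])
```

The returned headers can be attached to a GET of `endpoint + "/" + bucket + "/" + key` with any HTTP client. Nothing in the signing logic is specific to one provider, which is the point the paragraph above makes.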

The net effect is to simplify application development and deliver homogeneous application access to data across clouds.

While we are fans of Google's cloud (it has strong infrastructure options and some amazing services, BigQuery among them), the GCS object storage API is the least adopted storage API among the major clouds. That can create problems for application development, application integration, and even developer familiarity. For example, customers have found application-breaking differences in Google's implementation of the S3 API, including in ACLs, CORS structure, and Object Lifecycle Policies.

Adopting an S3-centric application approach through MinIO provides access to the broadest range of applications and the largest ecosystem. It may be the most powerful argument for running MinIO inside GCP.

Leveraging Multiple Storage Tiers to Improve Application Efficiency

Switching gears slightly, MinIO also has some sophisticated built-in features that work inside GCP and further strengthen the case for running it there. One of these feature sets is data lifecycle management. With MinIO's native tools, available across its interface options (Operator, Console, mc, CLI), the user can configure MinIO to tier data across any of Google's storage options, from the TPC instances to infrequently accessed HDD. These policies can even tier data to other clouds or to on-prem instances. Your business needs are the guide; MinIO is the enabling software.

For example, we can configure MinIO for maximum hot-tier throughput using bare-metal local NVMe storage, with HDD as a warm tier and Google Cloud Storage as a third tier for scalability. Add the belt-and-suspenders option of multi-cloud, active-active replication, and you can centralize on Google while building out failover strategies to other clouds. For obvious reasons, the other public clouds do not make replication and failover to competing clouds easily available, but MinIO does.
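
The three-tier layout above can be modeled as age-based rules: new objects live on the hot tier and move to colder tiers as they age. This is a conceptual sketch only, not MinIO's lifecycle API; in MinIO the equivalent behavior is configured through its lifecycle management tools. The tier labels and day thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TierRule:
    min_age_days: int  # objects at least this old belong in `tier`
    tier: str          # hypothetical tier label


# Hypothetical layout mirroring the example above: local NVMe (hot),
# HDD (warm), Google Cloud Storage (cold). Thresholds are placeholders.
RULES: List[TierRule] = [
    TierRule(0, "hot-nvme"),
    TierRule(30, "warm-hdd"),
    TierRule(180, "cold-gcs"),
]


def tier_for(age_days: int, rules: List[TierRule] = RULES) -> str:
    """Pick the coldest tier whose age threshold the object has reached."""
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    eligible = [r for r in rules if age_days >= r.min_age_days]
    if not eligible:
        raise ValueError("rules must include a tier with min_age_days == 0")
    return max(eligible, key=lambda r: r.min_age_days).tier


if __name__ == "__main__":
    for age in (1, 45, 365):
        print(age, tier_for(age))
```

Keeping the thresholds in data rather than code makes it easy to adjust tiering as business needs change, which is the flexibility the paragraph above describes.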

All Together Now

As noted in the introduction, we think highly of Google's cloud. It is why we are on the marketplace and why we support so many customers who roll their own or run hybrid infrastructure. Having said that, we think (and we are admittedly biased) that the optimal way to design your data architecture is to use GCP's services and infrastructure together with MinIO's object store to ensure compatibility, application portability, and simplicity within and across clouds. Doing so also gives you critical capabilities around S3 security, resilience, simplicity, and performance.

The best part is that you can assess our position yourself. Check out the marketplace app or roll your own.
