Handling HDD Failures in Computer Storage

By Weipeng Jih, data analyst, Ulink Technology

This is a Press Release edited by StorageNewsletter.com on May 30, 2022 at 2:02 pm.

Computer storage HDDs are getting bigger, and capacities of 1 to 8TB are now commonplace,1,2 with Seagate HDDs having averaged 6.1TB in late 2021.

However, this bigger capacity also means 2 things: a) You can lose more data than ever before when a HDD fails. This can mean losing engineering documents, design projects, or client files. b) If the HDD was part of a RAID array, you will need a lot of time to rebuild the array after the HDD fails.

A 1TB HDD can cost an organization an entire day to rebuild.3 This can mean loss of productivity while people are waiting for the data to become accessible again. Although modern HDDs are fairly robust, they still fail from time to time. The cloud storage provider Backblaze reported an annualized failure rate of 1.22% across its data centers for the first quarter of 2022, with figures ranging from 0 to 24% depending on the model.4

Pros and cons of various methods in addressing HDD failures:

There are 4 main ways to address HDD failures: a) having data redundancy, b) replacing HDDs on a regular basis, c) anticipating failures with threshold-based methods, and d) anticipating failures with ML algorithms.

Each method has its benefits and drawbacks.

Data redundancy

The basic idea with data redundancy is that you create multiple copies of data so that if one or more HDDs fail, you still have your data saved on 1 or more other HDDs. Data redundancy can take a few different forms.

You can make your data redundant by setting up a redundant array of independent disks (RAID). Not all RAID configurations offer data redundancy, but those that do provide it through either mirroring or parity. Mirroring produces data redundancy by writing entire copies of the data onto different HDDs. Parity produces data redundancy by storing extra bits so that the bits in each position across the HDDs in the array add up to an agreed even or odd number. This way, if a single HDD fails, its data can be reconstructed from the surviving HDDs, because the missing bits are whatever values restore that even or odd sum. In addition to data redundancy, using RAID has the added benefit of distributing wear across HDDs.
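The parity idea can be illustrated with a small sketch. It assumes a stripe of three data blocks plus one XOR (even) parity block; the block contents and the `xor_blocks` helper are hypothetical, and real RAID implementations differ in layout and detail.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three hypothetical data blocks, one per HDD (illustrative contents only).
data_blocks = [b"ENGR", b"DSGN", b"CLNT"]

# The parity block makes the bits in each position add up to an even number.
parity = xor_blocks(data_blocks)

# Simulate losing the second HDD, then rebuild its block from the survivors.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data_blocks[1])
```

Because XOR-ing all blocks including parity always gives zero, XOR-ing the survivors yields exactly the missing block. The same property means a second simultaneous failure cannot be recovered from a single parity block.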

You can also make your data redundant by regularly backing up your data. The backups can either be saved on your own hardware, such as external HDDs and NAS, or uploaded to a cloud service. However, a backup can only protect vs. data loss up to the last time the backup was made. The amount of data that is lost when relying on a backup strategy will depend on how often the backups occur. Many SMBs back up their data once per day.5

The main benefit of data redundancy is that it offers good protection vs. data loss. A simple example of this is if you have 2 HDDs configured as RAID-1 (which mirrors data), and each HDD has a 1.22% chance of failure in any given year, then the probability of you losing data due to HDD failure is only 0.015% per year.

What data redundancy does not offer is any kind of warning as to when HDD failures will occur. In the previous 2-HDD example, while the chance of losing data due to HDD failure is only 0.015%, the chance that at least 1 HDD will fail (about 2.4%) is actually greater than the chance of experiencing HDD failure if you only have a single HDD (1.22%). When a HDD does fail, chances are that you will want to replace it and rebuild it with the original information. And as mentioned earlier, rebuilds can take a substantial amount of time.
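The two RAID-1 figures can be checked with a few lines of arithmetic. This is a rough sketch that assumes the two HDDs fail independently of each other and that a failed HDD is not replaced during the year; the variable names are illustrative.

```python
# Annualized failure rate per HDD, from the Backblaze Q1 2022 figure in the text.
afr = 0.0122

# Assumption: the two mirrored HDDs fail independently of each other.
p_both_fail = afr ** 2                # data is lost only if both HDDs fail
p_at_least_one = 1 - (1 - afr) ** 2   # at least one HDD needs replacing

print(f"both HDDs fail:     {p_both_fail:.3%}")
print(f"at least one fails: {p_at_least_one:.1%}")
```

Under these assumptions the results round to about 0.015% and 2.4%. In practice, HDDs from the same batch or in the same enclosure may not fail independently, so the real risk of losing both can be higher.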

Regular scheduled replacement

Another way to deal with failing HDDs is to simply replace them periodically. This approach can be seen in data centers, which often replace their HDDs every 1 to 6 years.6 This approach can reduce the failures that come from older HDDs, which may have experienced more wear than younger HDDs.

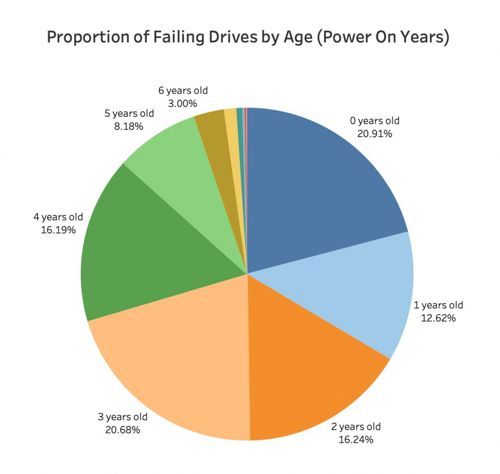

However, young HDDs can also fail. If we examine a recent distribution of HDD failures from 2021 in NASs out in the real world, we can see that many fail before they reach 1 year old.7 So, if you replace your HDD annually, you would still experience the HDD failures that occur in HDDs under 1 year old.

Anticipating HDD failures with threshold-based methods

HDD failures can also be anticipated to a certain extent by monitoring HDD health indicators and looking out for when they pass certain thresholds. HDD health indicators include SMART attributes as well as a host of other HDD health statistics reported by the computing system and the HDD itself.

Each SMART attribute comes with various pieces of information, including raw values, normalized values, and sometimes thresholds for “trip” values and/or pre-fail flags. Normalized SMART values range between 0 and 255 (though they are often presented in hexadecimal), with ideal values typically at or above 100. However, the meaning of individual SMART attributes and of their specific values has not been standardized across manufacturers. This means that a SMART value in one HDD may not mean the same thing as the same SMART value in another HDD. Sometimes SMART attributes will come with trip values. When the normalized SMART value drops below its trip value, it may indicate that the HDD is about to fail. The original intent behind SMART trips is to warn about failures in the next 24 hours.8,9 Sometimes, SMART attributes will also come with a pre-fail flag. This flag indicates that if the SMART attribute trips, it is considered by the manufacturer to be a severe condition for the HDD.

Several other HDD health indicators exist. These indicators are sometimes better standardized than SMART attributes, because accredited standards committees publish American National Standards, such as the ATA/ATAPI Command Set, that manufacturers can refer to in designing their HDDs. However, these indicators may not be as conveniently obtainable as SMART attributes.

HDD failures can be anticipated sometimes by thresholding SMART attributes and other HDD health indicators. For example, we can decide that a HDD is in danger of failing if its raw SMART 197 (current pending sector count) value rises above 0, or its normalized value drops below 100, or if it trips. Similarly, we can decide that a HDD is in danger of failing if its SATA device error count rises above 0.
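The rules in this example can be written down as a short sketch. The `SmartAttribute` class, its field names, and the sample readings are hypothetical; they do not reflect the output format of any particular tool, and the thresholds are simply the ones mentioned above.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class SmartAttribute:
    """One SMART attribute reading (field names are hypothetical)."""
    attr_id: int
    raw: int
    normalized: int
    trip_threshold: Optional[int] = None  # not every attribute has one

def pending_sector_alert(attr: SmartAttribute) -> List[str]:
    """Return the reasons, if any, that SMART 197 looks unhealthy."""
    reasons = []
    if attr.raw > 0:
        reasons.append("raw value above 0")
    if attr.normalized < 100:
        reasons.append("normalized value below 100")
    if attr.trip_threshold is not None and attr.normalized < attr.trip_threshold:
        reasons.append("SMART trip")
    return reasons

def at_risk(smart_197: SmartAttribute, sata_error_count: int) -> bool:
    """Flag the HDD if either rule from the text fires."""
    return bool(pending_sector_alert(smart_197)) or sata_error_count > 0

# Made-up readings for two HDDs.
healthy = SmartAttribute(attr_id=197, raw=0, normalized=100, trip_threshold=0)
degraded = SmartAttribute(attr_id=197, raw=8, normalized=100, trip_threshold=0)

print(at_risk(healthy, sata_error_count=0))
print(at_risk(degraded, sata_error_count=0))
print(pending_sector_alert(degraded))
```

Returning the list of reasons, rather than only a yes/no answer, keeps the alert explainable: the user can see which rule fired.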

Using threshold-based methods also has the potential advantage of explaining the reason behind a HDD’s deterioration. For example, if a HDD’s SMART attribute 9 (power-on hours) rose above some threshold, you might conclude that it is performing poorly due to old age. This interpretability is one reason why technically proficient users might choose to handle HDD failures with a threshold-based approach.

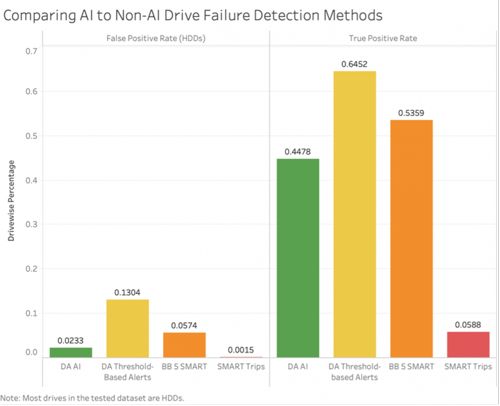

However, anticipating HDD failures using threshold-based methods is not a simple matter. Doing so requires choosing one or more appropriate HDD health indicators to focus on and defining thresholds that will maximize true positives while minimizing false positives. And the differences are not trivial. For example, if we relied on SMART trips to determine HDD failures, we would only be able to catch about 3-10% of HDD failures.7,8 Alternatively, if we relied on threshold-based alerts from DA Drive Analyzer, we would be able to catch 65% of HDD failures, though it comes with a much higher false positive rate.
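The trade-off between true positives and false positives can be made concrete with a small sketch. The ten HDDs and both alert rules below are made up purely for illustration and are not measurements from any product; `rates` is a hypothetical helper that assumes the data contains at least one failed and one healthy HDD.

```python
def rates(alerted, failed):
    """Return (true positive rate, false positive rate) for parallel lists of booleans."""
    tp = sum(a and f for a, f in zip(alerted, failed))
    fp = sum(a and not f for a, f in zip(alerted, failed))
    n_failed = sum(failed)
    n_healthy = len(failed) - n_failed
    return tp / n_failed, fp / n_healthy

# Made-up outcome for 10 HDDs: the first 2 failed, the other 8 stayed healthy.
failed = [True, True] + [False] * 8

# A strict rule alerts rarely; a loose rule alerts often.
strict_alerts = [True, False] + [False] * 8
loose_alerts = [True, True] + [True, True] + [False] * 6

print("strict:", rates(strict_alerts, failed))
print("loose: ", rates(loose_alerts, failed))
```

In this toy data the strict rule catches half of the failures with no false alarms, while the loose rule catches both failures but also flags a quarter of the healthy HDDs. Choosing a threshold means choosing a point on that trade-off.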

Furthermore, threshold-based approaches often only consider the health of the HDD using snapshots in time. For example, SMART 5 (reallocated sector count) is based on the latest value of reallocated sector count, but does not tell you anything about its change over time, which can be pertinent to HDD failures.
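A brief sketch shows what a snapshot can miss. The readings, the snapshot threshold of 50, and the `rising_fast` helper are all hypothetical; the point is only that two HDDs with the same latest value can have very different histories.

```python
def rising_fast(history, window, max_increase):
    """True if the value grew by more than max_increase over the last `window` readings."""
    if len(history) < window:
        return False
    recent = history[-window:]
    return recent[-1] - recent[0] > max_increase

# Hypothetical daily raw SMART 5 readings for two HDDs that end at the same value.
stable = [40, 40, 40, 40, 40, 40, 40]
growing = [0, 2, 5, 11, 18, 29, 40]

snapshot_threshold = 50  # hypothetical snapshot rule: alert when the latest value > 50

# The snapshot rule treats both HDDs the same way.
print([h[-1] > snapshot_threshold for h in (stable, growing)])

# A simple change-over-time rule separates them.
print([rising_fast(h, window=7, max_increase=10) for h in (stable, growing)])
```

Here neither HDD crosses the snapshot threshold, but only the second one shows reallocated sectors accumulating quickly over the week.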

Anticipating HDD failures with ML

The final way to deal with HDD failures is by predicting them with ML, for example using DA Drive Analyzer’s AI-based alerts. ML approaches can leverage multiple HDD health indicators simultaneously, including their interactions, as well as patterns of HDD health information over time. Furthermore, they come balanced between true positives and false positives out of the box.

An example of this out-of-the-box balance between true positives and false positives can be seen in a comparison of various HDD failure prediction approaches. Apart from DA Drive Analyzer’s ML approach (DA AI), the methods compared are threshold-based methods of HDD failure anticipation. In general, we wish to see true positives as high as possible, while keeping false positives as low as possible.

As shown above, an ML approach, while perhaps not catching as many failures as some threshold-based methods, is able to achieve a relatively high true positive rate while keeping false positives at an acceptably low level.

DA Drive Analyzer

DA Drive Analyzer is a software-as-a-service product developed by ULINK Technology, Inc. that helps users handle HDD failures by anticipating them through threshold-based methods (recommended for advanced users) or ML (recommended for casual users). It can be used in conjunction with data redundancy and/or scheduled HDD replacement to produce robust protection vs. HDD failures, while offering preparation time that data redundancy alone lacks, and protection vs. failures in young HDDs that scheduled replacement alone cannot prevent. Furthermore, it provides an automated method for collecting several HDD health indicators (some of which are better standardized than SMART). It also comes with an email-based push notification feature linked to its ML and threshold-based algorithms. And it gives users a centralized online dashboard for viewing the health of their HDDs across multiple systems.

Ultimately, the approach that a user takes to address computer storage failure will depend on their tolerance for failure, the importance of their data, and many other considerations. Each approach has its benefits and drawbacks. The important thing is to be aware that HDD failure is an issue all HDDs will eventually face, and to be ready for it when it happens.

References:

1. https://www.statista.com/statistics/795748/worldwide-seagate-average-hard-disk-drive-capacity/

2. https://en.wikipedia.org/wiki/History_of_hard_disk_drives

3. From communications with Qnap Systems

4. https://www.backblaze.com/blog/backblaze-drive-stats-for-q1-2022/

5. https://www.acronis.com/en-us/blog/posts/data-backup/

6. https://www.statista.com/statistics/1109492/frequency-of-data-center-system-refresh-replacement-worldwide/

7. Analysis of data from Qnap Systems by ULINK Technology

8. Zhang, T., Wang, E., & Zhang, D. (April 2019). Predicting failures in hard drivers based on isolation forest algorithm using sliding window. In Journal of Physics: Conference Series (Vol. 1187, No. 4, p. 042084). IOP Publishing.

9. Brumgard, C. D. (2016). Substituting Failure Avoidance for Redundancy in Storage Fault Tolerance.
