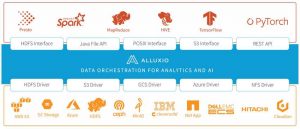

Alluxio Data Orchestration Platform V.2.7 With Enhanced Performance Insights and Support for Open Table Formats

Also includes features that improve I/O efficiency in the data loading and preprocessing stages of AI/ML training pipelines to reduce end-to-end training time and costs

This is a Press Release edited by StorageNewsletter.com on November 25, 2021 at 2:02 pm

Alluxio, Inc. announced version 2.7 of its Data Orchestration Platform.

This release delivers 5x improved I/O efficiency for ML training at lower cost by parallelizing the data loading, data preprocessing and training pipelines. It also provides enhanced performance insights and support for open table formats such as Apache Hudi and Iceberg, scaling access to data lakes for faster Presto- and Spark-based analytics.

“Alluxio 2.7 further strengthens Alluxio’s position as a key component for AI, ML, and deep learning in the cloud,” said Haoyuan Li, founder and CEO. “With the age of growing datasets and increased computing power from CPUs and GPUs, ML and deep learning have become popular techniques for AI. This rise of these techniques advances the state-of-the-art for AI, but also exposes some challenges for the access to data and storage systems.”

“We deployed Alluxio in a cluster of 1,000 nodes to accelerate the data preprocessing of model training on our game AI platform. Alluxio has proven to be stable, scalable and manageable,” said Peng Chen, engineer manager, big data team, Tencent Holdings Ltd. “As more and more big data and AI applications are containerized, Alluxio is becoming the top choice for large organizations as an intermediate layer to accelerate data analytics and model training.”

“Data teams with large-scale analytics and AI/ML computing frameworks are under increasing pressure to make a growing number of data sources more easily accessible, while also maintaining performance levels as data locality, network IO, and rising costs come into play,” said Mike Leone, analyst, ESG. “Organizations want to use more affordable and scalable storage options like cloud object stores, but they want peace of mind knowing they don’t have to make costly application changes or experience new performance issues. Alluxio is helping organizations address these challenges by abstracting away storage details while bringing data closer to compute, especially in hybrid cloud and multi-cloud environments.”


V.2.7 Community and Enterprise edition features include:


Alluxio and Nvidia’s DALI for ML

Nvidia’s Data Loading Library (DALI) is a commonly used Python library that supports CPU and GPU execution of data loading and preprocessing to accelerate deep learning. With release 2.7, the company’s platform has been optimized to work with DALI for Python-based ML applications that include a data loading and preprocessing step as a precursor to model training and inference. By accelerating the I/O-heavy stages and letting them run in parallel with the compute-intensive training that follows, end-to-end training on the firm’s data platform achieves performance gains over traditional solutions. The solution scales out, unlike other solutions that are suitable only for smaller data sets.
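The overlap described here follows a general producer/consumer pattern: a background worker loads and preprocesses upcoming batches while the current batch is being trained on. The sketch below is a minimal, generic illustration of that pattern in plain Python, assuming hypothetical `prepare` and `consume` callables; it does not use DALI’s or Alluxio’s APIs, and `pipelined` and `load_and_preprocess` are hypothetical names.

```python
import queue
import threading
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")

_SENTINEL = object()  # marks the end of the stream


def pipelined(
    items: Iterable[T],
    prepare: Callable[[T], R],
    consume: Callable[[R], None],
    prefetch: int = 4,
) -> None:
    """Run `prepare` (assumed I/O-heavy loading + preprocessing) in a background
    thread so it overlaps with `consume` (assumed compute-heavy training)."""
    buf: "queue.Queue[object]" = queue.Queue(maxsize=prefetch)
    errors: List[BaseException] = []

    def producer() -> None:
        try:
            for item in items:
                # Blocks once `prefetch` prepared batches are waiting.
                buf.put(prepare(item))
        except BaseException as exc:
            errors.append(exc)
        finally:
            buf.put(_SENTINEL)  # always tell the consumer to stop

    worker = threading.Thread(target=producer, daemon=True)
    worker.start()
    while True:
        batch = buf.get()
        if batch is _SENTINEL:
            break
        consume(batch)  # "training" runs while the next batches are prepared
    worker.join()
    if errors:
        raise errors[0]  # surface loader failures on the caller's thread


def load_and_preprocess(path: str) -> List[float]:
    # Hypothetical stand-in for reading and decoding one file.
    return [float(len(path))] * 3


seen: List[List[float]] = []
pipelined(["a", "bb", "ccc"], load_and_preprocess, seen.append)
```

A thread is used because the loading stage is assumed to be I/O-bound; CPU-heavy preprocessing in Python would more likely use separate processes or, as the paragraph describes, offloaded execution such as DALI’s GPU support. The bounded queue keeps memory in check by limiting how far loading can run ahead of training.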

Data loading at scale

At the heart of the company’s value proposition are data management capabilities that complement caching and the unification of disparate data sources. As the use of Alluxio has grown for compute and storage spanning multiple geographical locations, the software continues to evolve to keep scaling, using a new technique for batching data management jobs. Batching jobs, performed using an embedded execution engine for tasks such as data loading, reduces the resource requirements of the management controller, lowering the cost of provisioned infrastructure.
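Why batching lightens the controller’s load can be illustrated with a toy model in which the management controller keeps one tracking record per submitted job. This is a minimal sketch under that assumption only; `Controller`, `submit_job`, `load_files` and the `/data/part-*` paths are hypothetical and do not reflect Alluxio’s actual job service or its embedded execution engine.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Sequence


@dataclass
class Controller:
    """Hypothetical management controller: one tracking record per job."""
    jobs: List[List[str]] = field(default_factory=list)

    def submit_job(self, paths: Sequence[str]) -> int:
        self.jobs.append(list(paths))  # each job costs one record
        return len(self.jobs) - 1      # job id


def batches(paths: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Split `paths` into consecutive chunks of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(paths), batch_size):
        yield paths[start:start + batch_size]


def load_files(controller: Controller, paths: Sequence[str], batch_size: int) -> List[int]:
    """Submit one load job per batch rather than one per file."""
    return [controller.submit_job(chunk) for chunk in batches(paths, batch_size)]


paths = [f"/data/part-{i:05d}" for i in range(10_000)]

per_file = Controller()
load_files(per_file, paths, batch_size=1)

batched = Controller()
load_files(batched, paths, batch_size=500)

print(len(per_file.jobs), len(batched.jobs))  # 10000 20
```

The same 10,000 files are covered either way; only the number of records the controller must track changes, which is the kind of resource saving the paragraph attributes to batching.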

Ease of use on Kubernetes

The company’s solution now supports a native Container Storage Interface (CSI) driver for Kubernetes, as well as a Kubernetes operator for ML, making it easier than ever before to operate ML pipelines on the firm’s platform in containerized environments. The Alluxio volume type is natively available for Kubernetes environments. Agility and ease of use are a constant focus in this release.

Insight driven dynamic cache sizing for Presto

An intelligent new capability called Shadow Cache makes it easy to strike the balance between high performance and cost by dynamically delivering insights that measure the impact of cache size on response times. For multi-tenant Presto environments at scale, this new feature reduces management overhead through self-managing capabilities.
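One way to reason about what-if cache sizing is to replay a single access trace against several “shadow” caches that track only keys, never data, and compare their hit ratios. The sketch below assumes an LRU eviction policy and uses hit ratio as a proxy for response time; `ShadowLRU` and `what_if` are hypothetical names, and this is not Alluxio’s Shadow Cache implementation, which may rely on different (for example, probabilistic) data structures to keep its memory overhead low.

```python
from collections import OrderedDict
from typing import Dict, Hashable, Iterable, Sequence


class ShadowLRU:
    """Tracks which keys an LRU cache of `capacity` entries would hold,
    storing keys only, so it costs far less memory than a real cache."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be >= 1")
        self.capacity = capacity
        self._keys: "OrderedDict[Hashable, None]" = OrderedDict()
        self.hits = 0
        self.requests = 0

    def access(self, key: Hashable) -> bool:
        """Record one access; return True if it would have been a cache hit."""
        self.requests += 1
        if key in self._keys:
            self._keys.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return True
        self._keys[key] = None
        if len(self._keys) > self.capacity:
            self._keys.popitem(last=False)  # evict least recently used
        return False

    @property
    def hit_ratio(self) -> float:
        return self.hits / self.requests if self.requests else 0.0


def what_if(trace: Iterable[Hashable], sizes: Sequence[int]) -> Dict[int, float]:
    """Replay one access trace against shadow caches of several candidate sizes."""
    shadows = [ShadowLRU(s) for s in sizes]
    for key in trace:
        for shadow in shadows:
            shadow.access(key)
    return {s.capacity: s.hit_ratio for s in shadows}


trace = ["a", "b", "c", "a", "b", "c", "a", "b", "c"]
result = what_if(trace, [2, 3])
print(result)  # {2: 0.0, 3: 0.6666666666666666}
```

With a cyclic trace over three keys, a two-entry LRU cache never hits, while a three-entry cache serves every access after the first three. That jump is the kind of size-versus-benefit signal a sizing insight is meant to surface before any real cache capacity is provisioned.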

“Data platform teams utilize Alluxio to streamline data pre-processing and loading phases in a world where storage is separated from ML computation,” said Adit Madan, senior product manager, Alluxio. “This simplicity enables maximum utilization of GPUs with frameworks such as Spark ML, TensorFlow and PyTorch. The Alluxio solution is available on multiple cloud platforms such as AWS, GCP, and Azure Cloud, and now also on Kubernetes in private data centers or public clouds.”

Availability

Free downloads of the V.2.7 open source Community edition and of the Alluxio Enterprise edition are available.

Resources:

Blog: What’s New in Alluxio 2.7: Enhanced Scalability, Stability and Major Improvements in AI/ML Training Efficiency

Blog: Alluxio Use Cases Overview: Unify silos with Data Orchestration
