How Samsung's Scalable Memory Development Kit Simplifies Memory Expander Deployment

Provides opportunities for greater memory bandwidth and capacity in data-intensive applications like In-Memory Databases.

This is a press release edited by StorageNewsletter.com on November 3, 2021 at 2:02 pm. From Samsung Electronics Co., Ltd.

Samsung’s announcement of the Scalable Memory Development Kit (SMDK), an open-source software toolkit for easy, optimized deployment of heterogeneous memory under the Compute Express Link (CXL) interconnect standard, opens the way to far greater memory bandwidth and capacity in data-intensive applications such as In-Memory Databases (IMDBs), virtual machines (VMs), and AI/ML, across a range of computing environments including the edge cloud. The announcement also shows how the company is evolving into a solution provider that builds customer value by meeting demand for advanced memory hardware and facilitating its adoption with robust software environments.

An especially important aspect of the SMDK is its Compatible application programming interface (Compatible API), which allows end users to implement heterogeneous memory strategies, including memory virtualization, under the CXL standard without making any changes to application software. Alternatively, a separate Optimization API supports high-level optimization for special system needs by allowing the application software to be modified.

As a result, HPC system developers can now combine traditional DRAM with CXL-based memory, such as the Samsung CXL Memory Expander announced in May, to push past conventional memory channel and density limits. The SMDK makes adopting CXL-based memory a much easier and faster process, and it opens new possibilities for organizations seeking to manage the exploding quantities of data generated by advanced applications.

CXL: A significant option for compute architectures

We’re in an era of rapid data growth, much of it machine-generated, with increasing amounts of that data used for AI/ML and other data-intensive tasks that benefit from high-performance, low-latency memory. Existing memory architectures increasingly struggle to supply data fast enough to keep today’s CPUs and accelerators fully utilized, a critical consideration for emerging applications that are highly focused on data and data movement.

The CXL standard promises to help address this by enabling host processors (CPU, GPU, etc.) to access DRAM beyond standard DIMM slots on the motherboard, providing new levels of flexibility for different media characteristics (persistence, latency, bandwidth, endurance, etc.) and different media (DDR4, DDR5, LPDDR5, etc., as well as emerging alternative types).

In particular, CXL runs over the PCIe 5.0 physical layer and electrical interface, and its protocols (CXL.io, together with CXL.cache and CXL.mem) provide low-latency interconnect paths for memory access, for communication between host processors, and among devices that need to share memory resources. This opens a range of opportunities. The Samsung CXL Memory Expander is the first example; potential future avenues include NVDIMM as backup for main memory; asymmetric sharing of the host’s main memory with CXL-based memory, NVDIMM, and computational storage; and, ultimately, rack-level CXL-based disaggregation.

The Memory Expander, a CXL-based module introduced earlier this year, is the first hardware solution for heterogeneous memory. While CXL allows memory to be expanded into the server bay with higher capacity and larger bandwidth, this heterogeneous memory environment presents a fundamental challenge: coordinating and maximizing the performance of different types of memory with different characteristics and latencies, attached via different interfaces.

The inherent challenges of managing multiple memory types, together with a desire to make the Memory Expander as convenient and easy to implement as possible, prompted Samsung to develop the SMDK, which represents a significant step in Samsung’s commitment to delivering a total memory solution that encompasses both hardware and software.

How SMDK operates

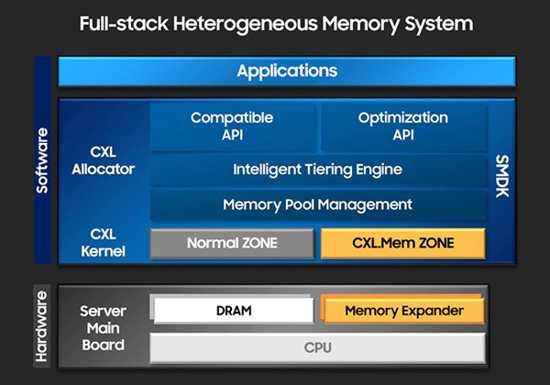

The SMDK is a collection of software tools and APIs that sit between applications and the hardware, as shown in the figure above. It supports a diverse range of mixed-use memory scenarios in which the main memory and the Memory Expander can be easily adjusted for priority, usage, bandwidth, and security, or simply used as is without modifying applications.

The toolkit thus reduces the burden of introducing new memory and lets users quickly reap the benefits of heterogeneous memory.

The SMDK accomplishes this with a four-level approach:

- A Memory Zone layer that distinguishes between onboard DIMM memory and Memory Expander (CXL) memory, which have different latencies, and optimizes how each pool of memory is used

- Memory Pool Management, which makes the two pools of memory appear as one to applications and manages the memory topology as the system scales

- An Intelligent Tiering Engine, which handles communication between the kernel and applications and assigns memory based on each application’s needs (latency, capacity, bandwidth, etc.)

- A pair of APIs: a Compatible API, which gives end users access to the Memory Expander without any changes to their applications, and an Optimization API that can be used to gain higher performance by optimizing the applications
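To make the division of labor among these layers more concrete, the following is a minimal Python sketch of a hypothetical two-zone pool. It is not SMDK code and does not reflect its actual API: the class names `MemoryZone`, `UnifiedPool`, and `Request`, the latency figures, and the placement rule (latency-sensitive requests try the lowest-latency zone first, capacity-bound requests try the zone with the most free space first) are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryZone:
    """One memory zone, e.g. onboard DIMM or a CXL expander (hypothetical model)."""
    name: str
    capacity: int      # capacity in arbitrary units (e.g. GiB)
    latency_ns: int    # illustrative nominal latency, not a measured value
    used: int = 0

    def free(self) -> int:
        return self.capacity - self.used


@dataclass
class Request:
    """An allocation request tagged with the application's primary need."""
    size: int
    latency_sensitive: bool


class UnifiedPool:
    """Presents several zones to the caller as a single pool (hypothetical)."""

    def __init__(self, zones: List[MemoryZone]) -> None:
        self.zones = zones

    def total_free(self) -> int:
        # The application sees one combined free capacity across all zones.
        return sum(z.free() for z in self.zones)

    def place(self, req: Request) -> str:
        # Toy tiering rule: latency-sensitive data tries the fastest zone first;
        # capacity-bound data tries the zone with the most free space first.
        if req.latency_sensitive:
            order = sorted(self.zones, key=lambda z: z.latency_ns)
        else:
            order = sorted(self.zones, key=lambda z: z.free(), reverse=True)
        for zone in order:
            if zone.free() >= req.size:
                zone.used += req.size
                return zone.name
        raise MemoryError("no zone has enough free capacity")


if __name__ == "__main__":
    pool = UnifiedPool([
        MemoryZone("DIMM", capacity=64, latency_ns=100),
        MemoryZone("CXL", capacity=256, latency_ns=250),
    ])
    print(pool.place(Request(size=32, latency_sensitive=True)))    # DIMM
    print(pool.place(Request(size=40, latency_sensitive=True)))    # CXL (DIMM has only 32 free)
    print(pool.place(Request(size=100, latency_sensitive=False)))  # CXL
    print(pool.total_free())                                       # 148
```

The caller never names a zone, which mirrors the idea of two pools appearing as one; a real tiering engine would also weigh bandwidth and access patterns and migrate data between tiers, whereas this sketch only models initial placement.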

It’s worth noting that transparent memory management through the Compatible API is accomplished by inheriting and extending the design of the Linux process/virtual memory manager (VMM), which carries over the strengths of the Linux kernel while keeping it compatible with CXL memory.

Progress to date and future vision

Among the early partner and customer full-stack demonstration projects for the Memory Expander and SMDK are IMDBs and VMs, two areas where immediate benefits can be obtained. Close behind are data-intensive AI/ML and edge cloud applications. Across the board, the combination of the Memory Expander and SMDK has the potential to improve the service quality of datacenter applications through expansion of both capacity and bandwidth.

Longer term, the objective of this project is to go beyond software development kits to provide a broader Scalable Memory Development Environment (SMDE) that makes it easy for anyone to take advantage of the new hybrid memory system in a next-generation architecture based on peer-to-peer communication.

With advanced computing applications of all types placing ever-greater demands on memory, Samsung has responded by creating a software development environment that makes memory usage more efficient in heterogeneous environments. The SMDK is one example of how, as data usage continues to explode, the company will evolve into a provider of solutions that build customer value by meeting the demand for advanced memory hardware and facilitating its adoption with robust software environments.
