With WekaFS Limitless Data Platform, WekaIO Supports Nvidia Turbocharged HGX AI Supercomputing Platform

Enabling customers to speed up AI/ML initiatives and shorten time-to-value and time-to-market

This is a Press Release edited by StorageNewsletter.com on July 8, 2021 at 2:31 pm

WekaIO announced that the WekaFS Limitless Data Platform can leverage the newly turbocharged Nvidia HGX AI supercomputing platform to deliver the performance and throughput that demanding enterprise workloads require.

HGX AI supercomputing platform

The upgraded Nvidia HGX platform adds 3 technologies: the Nvidia A100 80GB GPU; Nvidia NDR 400G InfiniBand networking; and Nvidia Magnum IO GPUDirect Storage software.

WekaIO’s launch-day support of Nvidia’s next-gen technologies is the result of a collaborative relationship between the 2 companies and of WekaIO’s continued commitment to providing customers with the latest features they need to store, protect, and manage their data.

WekaFS recently delivered some of the highest aggregate Nvidia Magnum IO GPUDirect Storage throughput numbers of all storage systems tested at Microsoft Research. The tests ran on a staging system with WekaFS deployed alongside multiple Nvidia DGX-2 servers, and engineers achieved the highest throughput of any storage solution tested there to date. (i) This performance was verified by running the Nvidia gdsio utility as a stress test, which showed sustained throughput for the full duration of the test.

“Advanced AI development requires powerful computing, which is why Nvidia works with innovative solution providers like WekaIO,” said Dion Harris, Nvidia. “Weka’s support for the Nvidia HGX reference architecture and Nvidia Magnum IO ensures customers can quickly deploy world-leading infrastructure for AI and HPC workloads across a growing number of use cases.”

Different stages of an AI data pipeline have distinct storage requirements, from massive ingest bandwidth to mixed read/write handling and ultra-low latency, which often results in a separate storage silo for each stage. This means business and IT leaders must reconsider how they architect their storage stacks and make purchasing decisions for these new workloads. With the upgrades to Nvidia’s platform, customers can take advantage of performance gains with far less overhead, process data faster while minimizing power consumption, and run more AI/ML workloads while improving accuracy.

Using WekaFS with the new additions to the HGX AI supercomputing platform gives channel partners the opportunity to deploy high-performance solutions in a growing number of industries that require fast access to data, whether on premises or in the cloud. Industries WekaIO serves that require an HPC storage system able to keep up with application demands include manufacturing, life sciences, scientific research, energy exploration and extraction, and financial services.

“Nvidia is not only a technology partner to Weka but also an investor,” said Liran Zvibel, co-founder and CEO, WekaIO. “As such, we work closely to ensure that our Limitless Data Platform supports and incorporates the latest improvements from Nvidia as they become available. We have overcome significant challenges to modern enterprise workloads through our collaborations with leading technology providers like Nvidia and are looking forward to continuing our fruitful relationship into the future.”

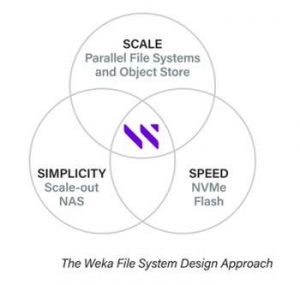

WekaFS is a fast, scalable, POSIX-compliant parallel file system designed to overcome the limitations of legacy file systems built on local storage, NFS, or block storage, making it well suited to data-intensive AI and HPC workloads. Its design integrates NVMe-based flash storage for the performance tier with GPU servers, object storage, and low-latency interconnect fabrics in an NVMe-oF architecture, creating a high-performance scale-out storage system. WekaFS performance scales linearly as servers are added to the storage cluster, allowing the infrastructure to grow with the increasing demands of the business.

(i) Performance numbers shown here were obtained with Nvidia GPUDirect Storage on DGX A100 slots 0-3 and 6-9, which is not the officially supported network configuration; they are for experimental use only. Sharing the same network adapters for both compute and storage may affect the performance of standard or other benchmarks previously published by Nvidia on DGX A100 systems.

Resources:

Nvidia GPUDirect Storage Plus WekaIO Provides More Than Just Performance

Microsoft Research Customer Use Case: WekaIO and Nvidia GPUDirect Storage Results with Nvidia DGX-2 Servers

Weka AI and Nvidia A100 Reference Architecture

Weka AI and Nvidia accelerate AI data pipelines
