WekaIO: Testing Marked Results With Nvidia Magnum IO GPUDirect Storage in Microsoft Research Lab

WekaFS helps chief data officers, data scientists, and data engineers derive benefit from their IT infrastructure through storage performance and Nvidia GPUs.

This is a Press Release edited by StorageNewsletter.com on April 2, 2021 at 2:31 pm

WekaIO, Inc. announced results of testing conducted with Microsoft Corp., which showed that the Weka File System (WekaFS) produced among the highest aggregate Nvidia Magnum IO GPUDirect Storage throughput numbers of all storage systems tested to date.

The company solves the storage challenges common with I/O-intensive workloads, such as AI. It delivers high bandwidth, low latency, and single-namespace visibility for the entire data pipeline, enabling chief data officers, data scientists, and data engineers to accelerate MLOps and time-to-value.

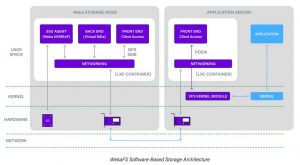

The tests were conducted at Microsoft Research using a single Nvidia DGX-2 server (*) connected to a WekaFS cluster over a Mellanox IB switch. The Microsoft Research engineers, in collaboration with WekaIO and Nvidia specialists, were able to achieve one of the highest levels of throughput among systems tested, delivered to the 16 Nvidia V100 Tensor Core GPUs using GDS. This performance was achieved and verified by running the Nvidia gdsio utility for more than 10 minutes and showing sustained performance over that duration.

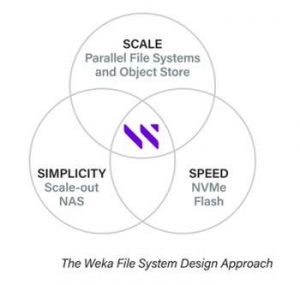

WekaFS is a fast and scalable POSIX-compliant parallel file system, designed to transcend the limitations of legacy file systems that leverage local storage, NFS, or block storage, making it suitable for data-intensive AI and HPC workloads. It is a clean-sheet design integrating NVMe-based flash storage for the performance tier with GPU servers, object storage, and low-latency interconnect fabrics into an NVMe-oF architecture, creating a high-performance scale-out storage system. Its performance scales linearly as more servers are added to the storage cluster, allowing the infrastructure to grow with the increasing demands of the business.

“The results from the Microsoft Research lab are outstanding, and we are pleased the team can utilize the benefits of their compute acceleration technology using Weka to achieve their business goals,” said Ken Grohe, president and chief revenue officer, WekaIO. “There are 3 critical components needed to achieve successful results like these from the Microsoft Research lab: compute acceleration technology such as GPUs, high speed networking, and a modern parallel file system like WekaFS. By combining WekaFS and Nvidia GPUDirect Storage (GDS), customers can accelerate AI/ML initiatives to dramatically accelerate their time-to-value and time-to-market. Our mission is to continue to fill the critical storage gap in IT infrastructure that facilitates agile, accelerated data centers.”

“Tests were run on a system that has WekaFS deployed in conjunction with multiple Nvidia DGX-2 servers in a staging environment and allowed us to achieve the highest throughput of any storage solution that has been tested to date,” said Jim Jernigan, principal R&D systems engineer, Microsoft Corp. “We were impressed with the performance metrics we were able to achieve from the GDS and WekaFS solution.”

(*) Nvidia DGX-2 server used a non-standard configuration with single-port NICs replaced by dual-port NICs.

Resources:

WekaFS

WekaFS: 10 Reasons to Deploy the WekaFS Parallel File System

IDC perspective: Weka Redefines what digitally transforming enterprises should expect from unstructured storage platforms (PDF)

Blogs:

Microsoft Research Customer Use Case: WekaIO and Nvidia GPUDirect Storage Results with Nvidia DGX-2 Servers

How GPUDirect Storage Accelerates Big Data Analytics

Weka AI and Nvidia Accelerate AI Data Pipelines

Nvidia GPUDirect Storage Webinar replay

Video: Visualizing 150TB of Data using GPUDirect Storage and the Weka File System
