Amazon S3 on Outposts Available

Shaking object storage landscape

This is a Press Release edited by StorageNewsletter.com on October 5, 2020 at 2:52 pm

AWS Outposts customers can now use Amazon Simple Storage Service (S3) APIs to store and retrieve data in the same way they would access or use data in a regular AWS Region.

This means that many tools, apps, scripts, or utilities that already use S3 APIs, either directly or through SDKs, can now be configured to store that data locally on your Outposts.

AWS Outposts is a managed service that provides a consistent hybrid experience, with AWS installing the Outpost in your data center or colo facility. Outposts are managed, monitored, and updated by AWS just like in the cloud. Customers use AWS Outposts to run services such as Amazon Elastic Compute Cloud (EC2), Amazon Elastic Block Store (EBS), and Amazon Relational Database Service (RDS) in their local environments, for workloads that require low-latency access to on-premises systems, local data processing, or local data storage.

Outposts are connected to an AWS Region and can already access Amazon S3 in AWS Regions. This new feature, however, allows you to use the S3 APIs to store data on the AWS Outposts hardware and process it locally. You can use S3 on Outposts to satisfy demanding performance needs by keeping data close to on-premises applications. It also helps if you want to reduce data transfers to AWS Regions, since you can perform filtering, compression, or other pre-processing on your data locally without having to send all of it to a Region.

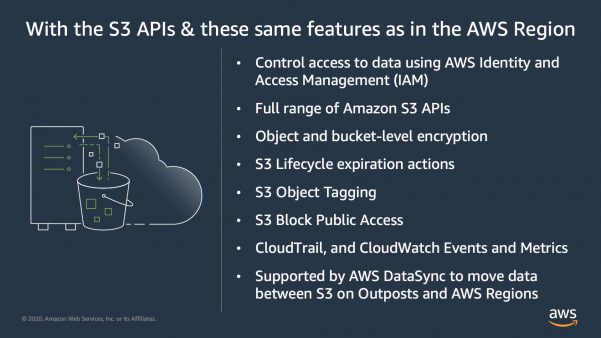

Speaking of keeping your data local, any objects and the associated metadata and tags are always stored on the Outpost and are never sent or stored elsewhere. However, it is essential to remember that if you have data residency requirements, you may need to put some guardrails in place to ensure no one has the permissions to copy objects manually from your Outposts to an AWS Region.

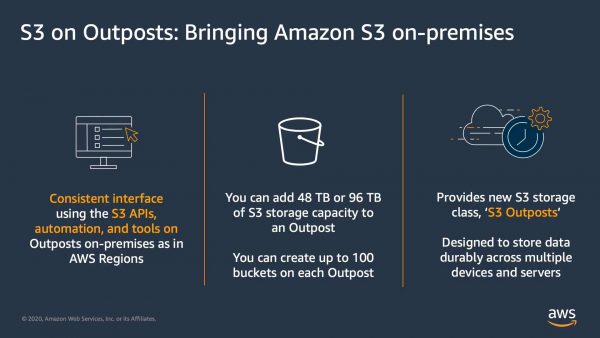

You can create S3 buckets on your Outpost and store and retrieve objects using the same Console, APIs, and SDKs that you would use in a regular AWS Region. Using the S3 APIs and features, S3 on Outposts makes it easy to store, secure, tag, retrieve, report on, and control access to the data on your Outpost.

S3 on Outposts provides a new Amazon S3 storage class, named S3 Outposts, which uses the S3 APIs, and is designed to durably and redundantly store data across multiple devices and servers on your Outposts. By default, all data stored is encrypted using server-side encryption with SSE-S3. You can optionally use server-side encryption with your own encryption keys (SSE-C) by specifying an encryption key as part of your object API requests.

When configuring your Outpost you can add 48TB or 96TB of S3 storage capacity, and you can create up to 100 buckets on each Outpost. If you have existing Outposts, you can add capacity via the AWS Outposts Console or speak to your AWS account team. If you are using no more than 11TB of EBS storage on an existing Outpost today, you can add up to 48TB with no hardware changes on the existing Outpost. Other configurations will require additional hardware on the Outpost (if the hardware footprint supports this) in order to add S3 storage.
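The upgrade rule in the paragraph above can be written down as a small check. This is only a sketch of the thresholds quoted in the text (11TB of existing storage in use, 48TB of added S3 capacity); the function name `can_add_s3_without_hardware` and the variable names are hypothetical, values are whole terabytes, and the real eligibility decision is made by AWS, not by this script.

```shell
# Thresholds quoted in the article, in whole TB.
MAX_IN_USE_TB=11        # existing storage in use on the Outpost
MAX_S3_TB_NO_HW=48      # S3 capacity that can be added with no hardware change

# Hypothetical helper: exits 0 when the article's rule says S3 capacity
# can be added without hardware changes, non-zero otherwise.
# $1 = existing storage in use (TB), $2 = S3 capacity requested (TB)
can_add_s3_without_hardware() (
  in_use_tb=$1
  s3_tb=$2
  [ "$in_use_tb" -le "$MAX_IN_USE_TB" ] && [ "$s3_tb" -le "$MAX_S3_TB_NO_HW" ]
)

if can_add_s3_without_hardware 8 48; then
  echo "8TB in use, 48TB requested: no hardware change expected"
fi
if ! can_add_s3_without_hardware 12 48; then
  echo "12TB in use, 48TB requested: additional hardware needed"
fi
```

Anything that fails the check falls into the "other configurations" case, where extra hardware is needed and the footprint has to support it.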

So let me show you how I can create an S3 bucket on my Outposts and then store and retrieve some data in that bucket.

Storing data using S3 on Outposts

To get started, I updated my AWS Command Line Interface (CLI) to the latest version. I can create a new bucket with the following command and specify which Outpost I would like the bucket created on by using the --outpost-id switch.

aws s3control create-bucket --bucket my-news-blog-bucket --outpost-id op-12345

In response to the command, I am given the ARN of the bucket. I take note of this as I will need it in the next command.

Next, I will create an access point. Access points are a relatively new way to manage access to an S3 bucket. Each access point enforces distinct permissions and network controls for any request made through it. S3 on Outposts requires an Amazon Virtual Private Cloud (VPC) configuration, so I need to provide the VPC details along with the create-access-point command.

aws s3control create-access-point --account-id 12345 --name prod --bucket "arn:aws:s3-outposts:us-west-2:12345:outpost/op-12345/bucket/my-news-blog-bucket" --vpc-configuration VpcId=vpc-12345
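The ARNs used in this walkthrough all follow one visible pattern: region, account ID, Outpost ID, a resource type, and a resource name. The sketch below rebuilds them from those parts. The helper name `outposts_s3_arn` is hypothetical, and the pattern is inferred only from the example ARNs in this article, so treat it as an illustration, not a specification.

```shell
# Builds an S3 on Outposts ARN following the pattern seen in the article.
# $1 = region, $2 = account ID, $3 = Outpost ID,
# $4 = resource type ("bucket" or "accesspoint"), $5 = resource name
outposts_s3_arn() (
  case "$4" in
    bucket|accesspoint) ;;
    *) echo "unknown resource type: $4" >&2; exit 1 ;;
  esac
  printf 'arn:aws:s3-outposts:%s:%s:outpost/%s/%s/%s\n' "$1" "$2" "$3" "$4" "$5"
)

# Placeholder identifiers taken from the article's examples.
bucket_arn=$(outposts_s3_arn us-west-2 12345 op-12345 bucket my-news-blog-bucket)
access_point_arn=$(outposts_s3_arn us-west-2 12345 op-12345 accesspoint prod)

echo "$bucket_arn"
echo "$access_point_arn"
```

The bucket ARN is what create-access-point takes as its bucket argument, while object requests go through the access point ARN.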

S3 on Outposts uses endpoints to connect to Outposts buckets so that you can perform actions within your virtual private cloud (VPC). To create an endpoint, I run the following command.

aws s3outposts create-endpoint --outpost-id op-12345 --subnet-id subnet-12345 --security-group-id sg-12345

Now that I have set things up, I can start storing data. I use the put-object command to store an object in my newly created Amazon S3 bucket.

aws s3api put-object --key my_news_blog_archives.zip --body my_news_blog_archives.zip --bucket arn:aws:s3-outposts:us-west-2:12345:outpost/op-12345/accesspoint/prod

Once the object is stored I can retrieve it by using the get-object command.

aws s3api get-object --key my_news_blog_archives.zip --bucket arn:aws:s3-outposts:us-west-2:12345:outpost/op-12345/accesspoint/prod my_news_blog_archives.zip

There we have it. I’ve managed to store an object and then retrieve it, on my Outposts, using S3 on Outposts.
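To check that such a round trip returned the same bytes, the two steps can be wrapped in a small script that compares the uploaded file with the downloaded copy. This is a minimal sketch: the function names `same_content` and `round_trip` are hypothetical, the access point ARN is the placeholder from the walkthrough, and `round_trip` only works with the AWS CLI installed, valid credentials, and network access to the Outpost, so it is defined here but not called.

```shell
# Placeholder access point ARN from the walkthrough; replace with your own.
ACCESS_POINT_ARN="arn:aws:s3-outposts:us-west-2:12345:outpost/op-12345/accesspoint/prod"

# Succeeds when two local files are byte-for-byte identical.
same_content() {
  cmp -s "$1" "$2"
}

# Uploads file $1 under key $2, downloads it again to path $3,
# then compares the original with the downloaded copy.
round_trip() {
  aws s3api put-object --bucket "$ACCESS_POINT_ARN" --key "$2" --body "$1" >/dev/null &&
    aws s3api get-object --bucket "$ACCESS_POINT_ARN" --key "$2" "$3" >/dev/null &&
    same_content "$1" "$3"
}

# Example call (not executed here):
#   round_trip my_news_blog_archives.zip my_news_blog_archives.zip restored.zip \
#     && echo "round trip OK"
```

Downloading to a different local path than the source file keeps the original intact, so the comparison is meaningful.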

Transferring Data from Outposts

Now that you can store and retrieve data on your Outposts, you might want to transfer results to S3 in an AWS Region, or transfer data from AWS Regions to your Outposts for frequent local access, processing, and storage. You can use AWS DataSync to do this with the newly launched support for S3 on Outposts.

With DataSync, you can choose which objects to transfer, when to transfer them, and how much network bandwidth to use. DataSync also encrypts your data in-transit, verifies data integrity in-transit and at-rest, and provides granular visibility into the transfer process through Amazon CloudWatch metrics, logs, and events.

Order today

If you want to start using S3 on Outposts, please visit the AWS Outposts Console. There you can add S3 storage to your existing Outposts or order an Outposts configuration that includes the desired amount of S3.

Pricing with AWS Outposts works a little bit differently from most AWS services, in that it is not a pay-as-you-go service. You purchase Outposts capacity for a 3-year term and you can choose from a number of different payment schedules. There are a variety of AWS Outposts configurations featuring a combination of EC2 instance types and storage options. You can also increase your EC2 and storage capacity over time by upgrading your configuration.

Comments

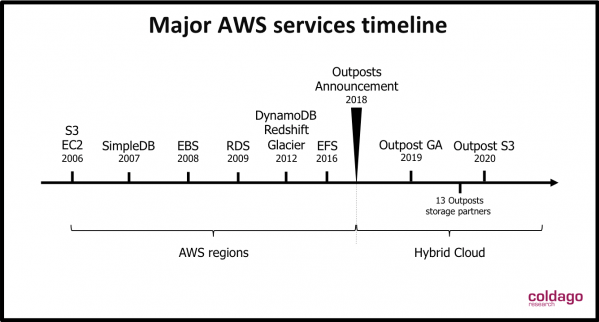

AWS Outposts was announced in 2018 at the AWS re:Invent conference, just 2 years ago, as a way to run AWS infrastructure and services on-premises and deliver a truly consistent hybrid experience. At the time, the company shook the IT industry.

It became generally available almost one year ago at the same annual event and came in 2 flavors: a native AWS variant simply named AWS Outposts, and VMware Cloud on AWS Outposts. The idea of AWS Outposts is to run compute, database and storage services on AWS hardware. It invites users to bridge their environments and strengthen their AWS experience, drastically reducing IT complexity and the mix of hardware and software, with a positive impact on the top line.

This fully managed approach offers EC2 instances, EBS volumes and RDS databases, and users have been expecting S3 since 2018. Elastic File System (EFS) should be announced soon.

This first S3 iteration is of course limited: 2 capacities are available, 48TB and 96TB, with a maximum of 100 buckets per Outpost. DataSync supports copies between Outposts and an AWS Region, and S3 can be used to archive data to Glacier or to leverage other AWS services offered in the Region. It is reminiscent of what Qumulo announced with Shift before the summer.

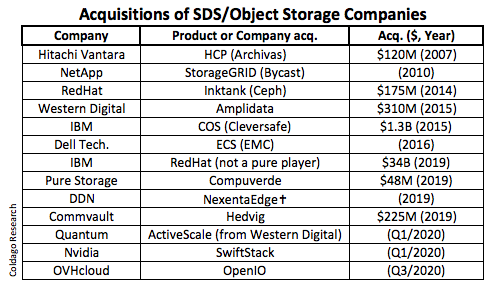

This is a huge announcement not only for AWS but also for the storage market. It shakes the storage industry for sure. In other words, what is left on the table for other players? AWS, and the cloud generally, eats everything. We already wrote an article titled Object storage is dead, S3 eats everything. This is just another example of that.

Second, users no longer speak about object storage but about S3 storage, as the vast majority of them require this access method as the de facto standard, just as NFS and SCSI are used to refer respectively to file and block storage.

The battle for S3 as a protocol has been over for quite a long time, but this arrival will now shake the category. Small and fragile in revenue, installed base and team size, pure players are under pressure again, and a new degree of it is now visible. We have seen several of them disappear and/or be acquired, as the table below illustrates.

The key question is therefore how to resist or even survive. It turns out that the only possible alternative relates to the cost of the service, as S3 is priced on a capacity-based model plus traffic. The alternative seems to be capacity-only pricing and a price cut. But even with that, AWS, Azure and Google understand that the difference is also made by the tons of services (processing and computing capabilities) they provide in their clouds beyond storage, which continue to attract a lot of enterprises.

We won't be surprised to see a new wave of M&As. It will finally help many of these players to survive, and this announcement dictates that.

The most recent ecosystem development is the AWS Outposts Ready program, with 13 storage solutions certified and validated from:

- Clumio

- Cohesity

- Commvault

- CTera Networks

- Nasuni

- NetApp

- N2WS

- Pure Storage

- Qumulo

- Rubrik

- Veritas Technologies

- WekaIO

- Zadara

As of today, 78 solutions are already listed, covering all sectors.
