Zettabyte Era Arrives

The traditional storage hierarchy paradigm will need to disrupt itself.

This is a press release edited by StorageNewsletter.com on August 13, 2020 at 2:25 pm. This report was written by analyst firm Horison Information Strategies.

The Zettabyte Era Arrives

Data creation is on an unprecedented trajectory and data has become the most valuable asset in most companies.

For example, in 2006, oil and energy companies dominated the list of the five most valuable firms in the world, but today the list is dominated by firms based on digital content, such as Alphabet (Google), Apple, Amazon, Facebook and Microsoft. IDC’s latest report estimates that the Global StorageSphere installed base of storage capacity (not data) will more than double over the 2019 to 2024 forecast period, growing at a CAGR of 17.8% from 5.8ZB in 2019 to 13.2ZB in 2024. The amount of data that is actually stored, or utilized storage, is expected to grow at a 20.4% CAGR, from 3.5ZB in 2019 to 8.9ZB in 2024.
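The IDC projections can be sanity-checked with the standard compound-growth formula, end = start × (1 + CAGR)^years. The sketch below is only an arithmetic check on the figures quoted above, not IDC’s methodology; the function names are our own.

```python
def project(start: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted above, in zettabytes; 2019 -> 2024 is five compounding years.
installed_2024 = project(5.8, 0.178, 5)  # installed storage capacity
utilized_2024 = project(3.5, 0.204, 5)   # capacity actually holding data

print(f"Installed base 2024: {installed_2024:.1f} ZB")   # 13.2 ZB
print(f"Utilized storage 2024: {utilized_2024:.1f} ZB")  # 8.9 ZB
print(f"Growth multiple: {installed_2024 / 5.8:.2f}x")   # "more than doubling"
```

Both quoted 2024 values fall out of the stated growth rates, and the installed base grows by roughly 2.3x, consistent with "more than double".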

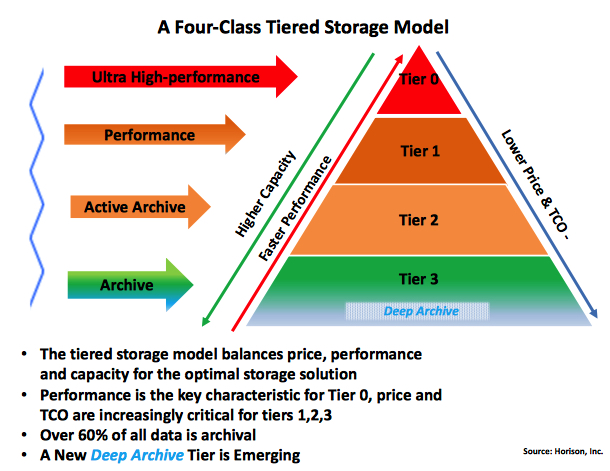

Storage infrastructure is a major component of most IT budgets. Storage tiering has become the key strategy for making optimum use of storage resources, reducing costs and matching each class of data to the best available storage solution. Balancing a storage architecture to optimize for different workloads while minimizing costs now presents the next great storage challenge. In addition, over 60% of all data is archival, and that share could reach 80% or more by 2024, making archival data by far the largest storage class. Given this trajectory, the traditional storage hierarchy paradigm will need to disrupt itself, and quickly.

Balancing Performance and Cost – Tiered Storage Architecture Provides Greatest Benefit

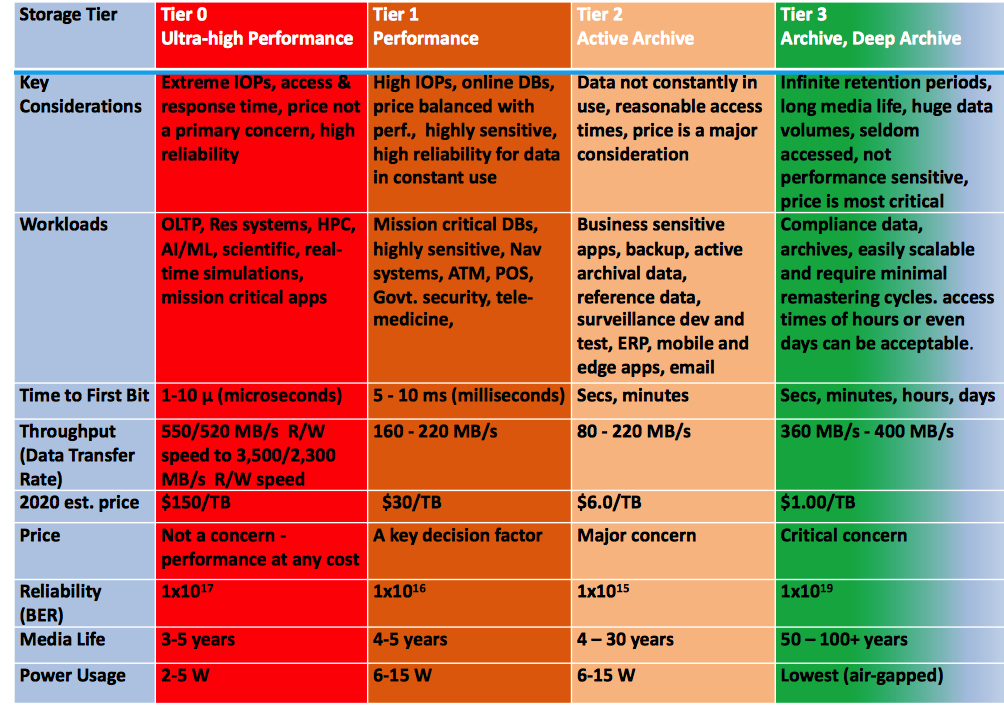

Today’s storage hierarchy is well established and consists of a four-class tiered model. Each tier has its own unique characteristics and value proposition, ranging from ultra-high-capacity, low-cost storage at the bottom of the hierarchy to very high levels of performance and functionality at the top. A multi-tiered storage architecture capitalizes on the significant cost differences between tiers by deploying advanced management tools that move and migrate data in response to the changing lifecycle needs of the data or application. Placing as much data as possible in the archive tiers yields the most cost-effective architecture. As storage pools grow in capacity, the business case for implementing tiered storage becomes more compelling, potentially saving millions of dollars in storage costs.
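The lifecycle-driven movement described above can be sketched as a policy that maps how recently each object was accessed to a tier and then lists the migrations needed. This is a hypothetical illustration: tier 0 stands for the highest-performance tier and tier 3 for the archive tier, while the day thresholds, object names and the `place` and `migration_plan` helpers are assumptions made for the example, not part of the report or of any product.

```python
from dataclasses import dataclass

# Hypothetical thresholds: (maximum days since last access, tier).
# Tier 0 is the highest-performance tier, tier 3 the lowest-cost archive tier.
TIER_THRESHOLDS_DAYS = [(7, 0), (30, 1), (180, 2)]
ARCHIVE_TIER = 3


@dataclass
class DataObject:
    name: str
    days_since_access: int


def place(obj: DataObject) -> int:
    """Return the tier an object belongs in, based only on access recency."""
    for max_age, tier in TIER_THRESHOLDS_DAYS:
        if obj.days_since_access <= max_age:
            return tier
    return ARCHIVE_TIER  # anything older falls through to the archive tier


def migration_plan(objects, current_tiers):
    """List (name, from_tier, to_tier) moves needed to match the policy."""
    moves = []
    for obj in objects:
        target = place(obj)
        if current_tiers[obj.name] != target:
            moves.append((obj.name, current_tiers[obj.name], target))
    return moves


objects = [
    DataObject("orders-db", 1),
    DataObject("q2-reports", 45),
    DataObject("2015-footage", 2000),
]
current = {"orders-db": 0, "q2-reports": 0, "2015-footage": 1}
print(migration_plan(objects, current))
# [('q2-reports', 0, 2), ('2015-footage', 1, 3)]
```

A production policy would likely weigh more than recency (access frequency, service levels, cost per tier); a single rule keeps the sketch short.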

Four Tiered Storage Model – Key Characteristics and Future Requirements

For Storage – It Always Comes Down to Price and TCO

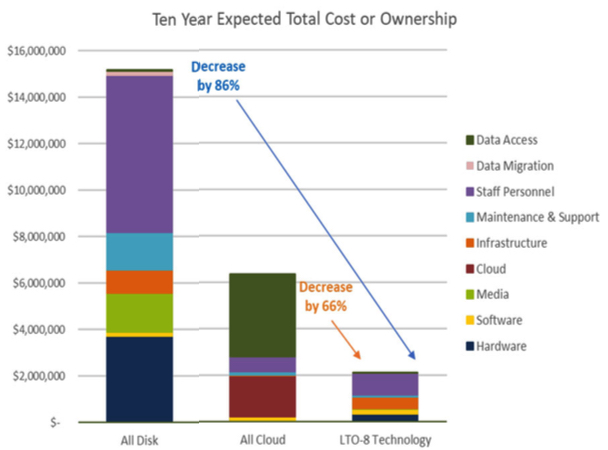

While price is, and will continue to be, the single greatest driving force behind storage investments for the foreseeable future, a tiered storage strategy offers more significant cost benefits than any other storage solution for nearly every workload. Long shelf life, data integrity and extremely low acquisition costs weigh heavily in the TCO because data retention periods can exceed 100 years, which encourages archival data to reside in the lowest-cost storage tier. The accompanying TCO study estimates total storage costs over the next decade, showing that disk systems can cost as much as 7x as much as a tape system, and a cloud system more than 3x as much as tape. Getting the right data in the right place has great benefits.
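Why acquisition price alone does not settle the comparison can be seen by summing acquisition, yearly operating costs and media migrations over the study period. The model and every input below are hypothetical placeholders, not the data behind the report’s TCO study; only the structure of the calculation is the point.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    name: str
    acquisition: float     # up-front purchase cost (placeholder units)
    annual_opex: float     # yearly power, cooling, floor space and support
    migration_cost: float  # cost of one migration to new media
    migration_every: int   # years between migrations


def tco(option: StorageOption, years: int) -> float:
    """Acquisition + operating costs + migrations falling inside the period."""
    # Migrations happen every `migration_every` years, but none at year 0
    # and none exactly when the study period ends.
    migrations = (years - 1) // option.migration_every
    return (option.acquisition
            + option.annual_opex * years
            + option.migration_cost * migrations)


# Hypothetical inputs, chosen only to exercise the formula.
disk = StorageOption("disk", acquisition=100.0, annual_opex=30.0,
                     migration_cost=50.0, migration_every=5)
tape = StorageOption("tape", acquisition=60.0, annual_opex=5.0,
                     migration_cost=20.0, migration_every=10)

ten_year = {o.name: tco(o, 10) for o in (disk, tape)}
print(ten_year)  # {'disk': 450.0, 'tape': 110.0}
print(f"disk/tape: {ten_year['disk'] / ten_year['tape']:.1f}x")
```

Over a decade, operating costs and migrations can outweigh the purchase price, which is why the comparison is made on TCO rather than acquisition cost.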

Data Center Cost Models Reveal Compelling Value of Tiered Storage

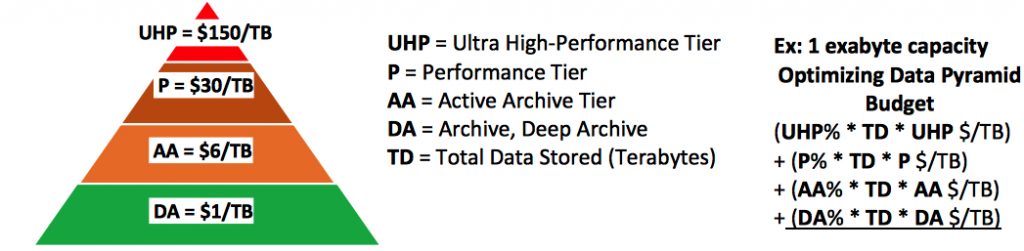

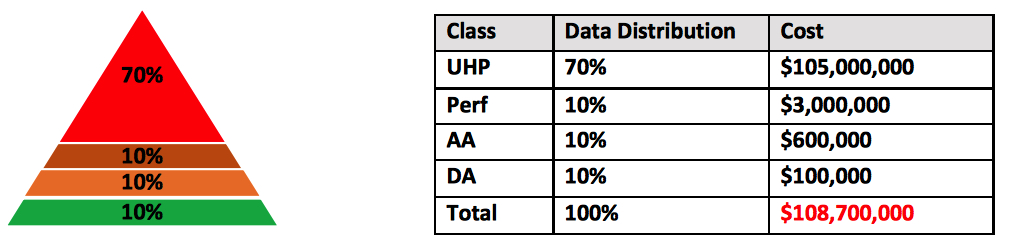

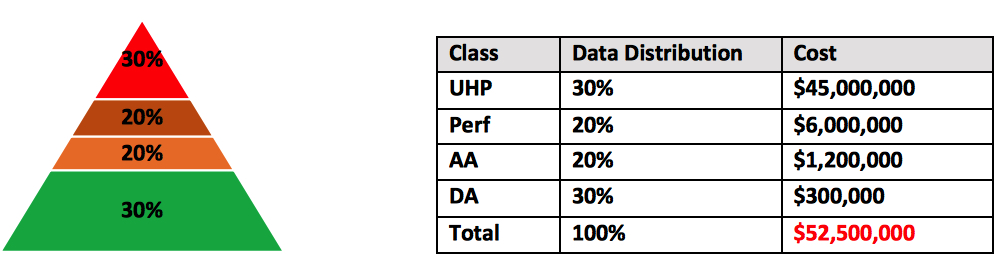

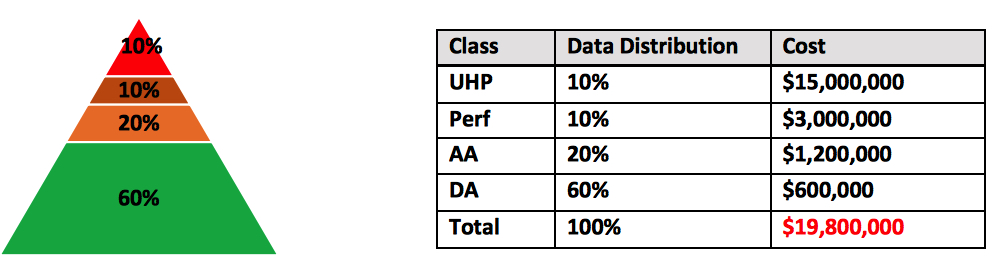

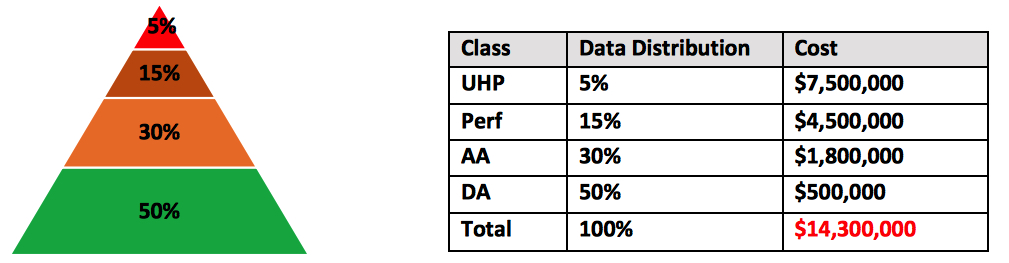

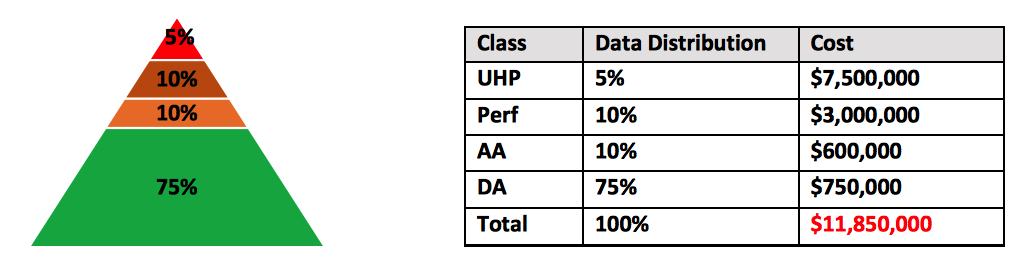

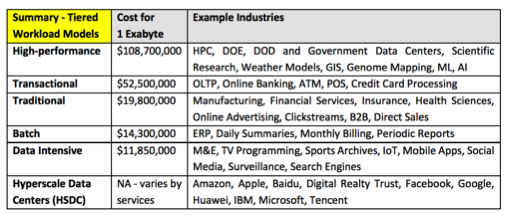

Industries optimize for different use cases because not all workloads are created equal. The accompanying diagram applies the costs of each storage tier to model five different data center workloads storing 1EB of data. Moving data to the optimal tier for each unique workload offers tremendous cost savings. Data centers have to balance unique, highly variable workflows with legacy and current applications while adopting new paradigms that exploit a variety of storage devices, systems and architectures. Some workloads are compute-intensive; others are data- and I/O-intensive. Many collect and store large volumes of potentially important data that may remain inactive for years waiting to be mined. In other cases, raw data is collected and immediately processed, then stored for years in case there is a need for further processing or deeper analysis. In any case, optimizing the storage tiers is the key.

Five common data center workload models, plus Hyperscale Data Centers (HSDCs), are shown using the accompanying pricing assumptions for allocating 1EB of storage capacity across the four tiers.

High-performance workload (Heavy Compute):

These workloads run on an HPC architecture, in which thousands of servers are networked together into a cluster. Software programs and algorithms run simultaneously on the servers in the cluster, with high-performance storage systems capturing the output. HPC supports compute-intensive applications, such as pattern recognition, simulating future consequences and predicting outcomes, that require fast random-access block storage. HPC organizations use extensive analytic services (OLAP) to understand the vast amounts of data across complex hybrid environments.

Transactional workload (Heavy reads, writes and updates):

These workloads are response-time-critical business processes, such as OLTP, real-time communications, reservation systems and order processing, that are optimized for heavy reads, writes and updates on small records and require the ability to modify data in place. The financial industry uses heavy transaction-based services for online banking transactions, ATM, POS, credit card charges, and audit and communication logs.

Traditional Data Center workload (Heavy Database):

This is the most common type of data distribution model. The de facto standard database workload must be tuned and managed to support the service that uses the data. Health care and life sciences databases holding electronic medical records, images (X-ray, MRI or CT), genome sequences, and records of pharmaceutical development and approval fit this profile.

Batch workload (Business Support Activities):

These workloads are common, are designed to operate in the background and tend to process larger, more sequential volumes of data. Generally, batch workloads are seldom time-sensitive and are executed on a regular schedule. Examples include daily reports from ERP, inventory, email and payroll systems, monthly or quarterly billing, and compiling the results of months of online reports. Insurers process many long-term accident records and images, health claims, disputes and payment histories in batch mode. These workloads may require considerable compute and storage resources while running.

Data Intensive workload (Heavy Archive):

Data-intensive applications devote most of their processing time to I/O and to moving and manipulating data, because search times and data transfer speeds, not processor speeds, limit their performance. For example, the MLB Network archives over 1.2 million hours of content, which is indexed and stored with infinite retention periods. The media and entertainment (M&E) industry relies heavily on digital archives because they hold raw production footage. In movie production, workflows commonly need access to archived digital assets at some point after the movie is made. Most M&E content is never deleted. Surveillance, medicine, finance, government, physical security and social media also fit this model.
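Why transfer speed rather than processor speed dominates here can be seen with back-of-the-envelope arithmetic: the time to stream a data set is its size divided by the sustained throughput. The sketch below assumes a hypothetical 1PB archive and a hypothetical 300 MB/s per stream; neither is a vendor specification.

```python
def transfer_hours(size_tb: float, throughput_mb_s: float, streams: int = 1) -> float:
    """Hours to read `size_tb` terabytes at a sustained per-stream rate.

    Uses decimal units (1 TB = 10^6 MB) and assumes the streams run in
    parallel with no contention.
    """
    size_mb = size_tb * 1_000_000
    seconds = size_mb / (throughput_mb_s * streams)
    return seconds / 3600


# Hypothetical example: scanning a 1 PB (1,000 TB) archive at 300 MB/s per stream.
for streams in (1, 10, 100):
    hours = transfer_hours(1000, 300, streams)
    print(f"{streams:>3} stream(s): {hours:,.0f} h")
```

Even with 100 parallel streams, a full pass over 1PB takes hours, so adding processor speed does little until data can be moved faster.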

Hyperscale Data Center workloads (compute, storage, backup, and archive cloud services)

With nearly 600 data centers worldwide, 40% of them in the US, the HSDC represents the fastest-growing data center segment today. Most are large CSPs (cloud service providers) offering highly distributed compute, storage, archive and backup services, and they can fit any of the five models above. They constantly re-engineer storage strategies to manage extreme data growth, enormous energy consumption, high carbon emissions and long-term data retention requirements. HSDCs are struggling with the relentless growth of disk farms, which are devouring IT budgets and overcrowding data centers, forcing data migration to lower-cost archival solutions. Obviously, few data centers can afford to sustain this degree of inefficiency. HSDCs’ challenges are extreme at scale and are accelerating the need for an archival breakthrough.
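Each of the workload models above comes down to one calculation: the cost of 1EB is the sum, over the four tiers, of the capacity allocated to each tier multiplied by that tier’s price per TB. The report’s actual pricing assumptions and allocations appear only in its figures, so the $/TB prices and allocation fractions below are hypothetical placeholders chosen to show the calculation.

```python
EB_IN_TB = 1_000_000  # 1 EB = 10^6 TB (decimal units)

# Hypothetical $/TB prices for tier 0 (fastest) through tier 3 (archive).
PRICE_PER_TB = [500.0, 150.0, 50.0, 10.0]

# Hypothetical share of the 1 EB placed in each tier for two workload profiles.
ALLOCATIONS = {
    "transactional": [0.20, 0.40, 0.30, 0.10],
    "data-intensive": [0.01, 0.04, 0.15, 0.80],
}


def blended_cost(fractions, prices=PRICE_PER_TB, capacity_tb=EB_IN_TB):
    """Total cost of `capacity_tb` split across tiers by `fractions`."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("tier fractions must sum to 1")
    return sum(f * capacity_tb * p for f, p in zip(fractions, prices))


for workload, fractions in ALLOCATIONS.items():
    print(f"{workload:>15}: ${blended_cost(fractions) / 1e6:,.1f}M")
```

With these placeholder prices, the archive-heavy profile costs about 15% as much as the transactional one; the lever is the share of capacity sitting in the lowest-cost tier, not the specific numbers.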

Future Looms Huge – How Do We Get from Today to Tomorrow?

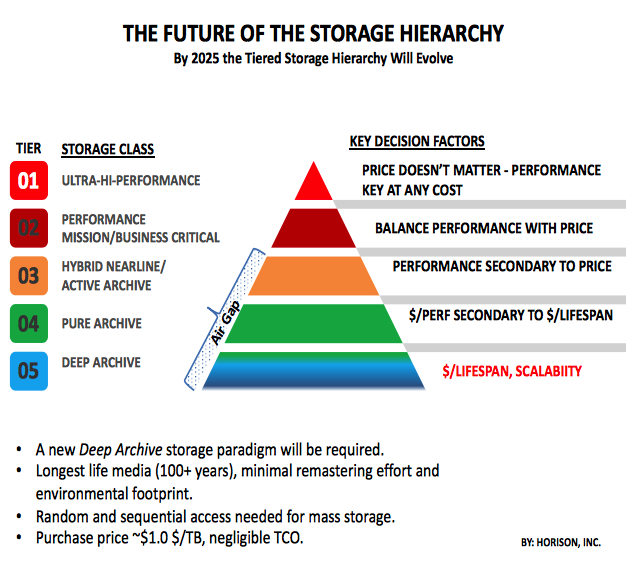

Today’s storage hierarchy will need to evolve, providing more cost-effective solutions to address the archival avalanche. The emerging deep archive (cold) storage tier will need a new breakthrough solution that provides highly secure, long-term storage and requires minimal remastering cycles. Remastering is a costly, labor-intensive process that typically migrates previously archived data to new media every ~5-10 years. Deep archives will offer “infinite” capacity scaling and media life of 100+ years. The optimal design will have a robust roadmap, long media life expectancy, a minimal environmental footprint, and pricing approaching $1/TB, below all competitors’ offerings. Fast access times are not critical for the Deep Archive tier.
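The burden of remastering scales with how often it must happen during a retention period. The sketch below counts migrations for the ~5-10 year cycles and 100-year media life mentioned above; treating each migration as one full copy of the archive, with no migration needed at the moment retention ends, is a simplifying assumption.

```python
def migrations_needed(retention_years: int, migration_interval_years: int) -> int:
    """Full copies to new media required during the retention period.

    Data written at year 0 with a 10-year interval is migrated at years
    10, 20, ..., 90 for a 100-year retention period (9 migrations).
    """
    if migration_interval_years <= 0:
        raise ValueError("interval must be positive")
    return (retention_years - 1) // migration_interval_years


for interval in (5, 10, 100):
    print(f"{interval:>3}-year media: {migrations_needed(100, interval)} migrations")
```

Under these assumptions, 5-year media require 19 full rewrites of a 100-year archive and 10-year media require 9, while 100-year media require none.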

What’s Ahead for Current Storage Technologies?

For solid-state (semiconductor) storage, the roadmap shows significant progress through 2029. Increasing the number of 3D NAND flash layers is driving huge capacity increases at the die level. Leading suppliers are preparing for 128-layer 3D NAND while outlining a roadmap to a staggering 800+ layers by 2030. Technology and cost challenges exist but are not expected to be insurmountable during the planning period. Solid-state storage has broad market appeal and is used heavily everywhere from personal devices to HSDCs.

For magnetic storage (disk and tape), the INSIC roadmap indicates that challenges exist in basic disk and tape technology but are not expected to be too difficult to overcome through 2029. SSDs will continue to take market share and high profit margins from disk at the high end of the storage market, while disk is being squeezed by tape for low-cost, low-TCO archival storage at the low end. Lab demonstrations indicate that HDD capacities approaching 40TB and native tape cartridge capacities reaching 400TB are in sight. Single-layer disk areal density growth is slowing, while performance gains are negligible. Tape is a sequential-access-only medium, so time to first bit can be a concern. For optical disc, the price, performance, low capacity, reliability levels and slow roadmap progress compared to magnetic technologies have kept the Blu-ray optical disc product family confined to the shrinking home and car personal entertainment market. Optical disc has been around for decades but has never been viable in the data center.
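The time-to-first-bit concern for tape follows from sequential access: the drive must load the cartridge and wind to the file’s position, so the wait grows with distance, while a random-access device pays a roughly constant seek. The sketch below uses hypothetical parameters (15 s load, 10 m/s locate speed, 1,000 m of tape, 10 ms disk seek) purely to show the shape of the calculation; they are not specifications of any drive.

```python
def tape_time_to_first_bit(position_m: float, load_s: float = 15.0,
                           locate_speed_m_s: float = 10.0) -> float:
    """Seconds to load a cartridge and wind to `position_m` metres of tape."""
    return load_s + position_m / locate_speed_m_s


def average_tape_time_to_first_bit(tape_length_m: float, **params) -> float:
    """Mean over uniformly placed files; the formula is linear in position,
    so the mean equals the value at half the tape length."""
    return tape_time_to_first_bit(tape_length_m / 2, **params)


DISK_SEEK_S = 0.01  # hypothetical random-access seek, roughly constant

avg_tape = average_tape_time_to_first_bit(1000.0)
print(f"tape ~{avg_tape:.0f} s vs disk ~{DISK_SEEK_S * 1000:.0f} ms")
# tape ~65 s vs disk ~10 ms
```

For a deep archive that is read rarely, a wait on the order of a minute is usually acceptable, which is why fast access is not listed as critical for that tier.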

Next Up for Storage Innovation?

Multi-layer photonic storage with 16+ layers for terabyte-scale capacities is under development and may offer the greatest promise over the next five years for addressing the archive avalanche. If it delivers random access, extremely long shelf life and 100-year remastering cycles with a small environmental footprint and low TCO, a truly scalable mass archival storage solution could finally emerge. The arrival of a multi-layer recording medium at a cost per terabyte below that of magnetic tape could disrupt the archival paradigm.

Future Speculation – What’s Happening in Labs?

Synthetic DNA-based storage systems have received significant media attention due to their promise of ultrahigh storage density, random access and long-term stability. DNA storage is claimed to offer a storage density of 2.2Pb/gram. However, all known developments still suffer from high cost, very long read-write latency and error rates that render them noncompetitive with modern storage devices.
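To put the quoted density in perspective, the mass of DNA needed for a given amount of data is the capacity divided by the density. The sketch below assumes “2.2Pb” means 2.2 petabits (2.2 × 10^15 bits); if the figure is meant as petabytes, the result shrinks eightfold. The 8.9ZB input is IDC’s projected utilized storage for 2024 quoted earlier.

```python
BITS_PER_BYTE = 8
DENSITY_BITS_PER_GRAM = 2.2e15  # assumption: "2.2Pb/gram" read as petabits
ZB = 1e21                       # bytes in a zettabyte (decimal)


def grams_for(capacity_bytes: float,
              density_bits_per_gram: float = DENSITY_BITS_PER_GRAM) -> float:
    """Grams of DNA needed to hold `capacity_bytes` at the given density."""
    return capacity_bytes * BITS_PER_BYTE / density_bits_per_gram


print(f"{grams_for(8.9 * ZB) / 1e6:.1f} tonnes for 8.9 ZB")  # 32.4 tonnes
```

Under the petabit reading, all data projected to be stored in 2024 would fit in roughly 32 tonnes of DNA; density is not the obstacle, while the cost, latency and error rates noted above are.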

Quartz glass media is potentially durable for many decades because of its resistance to heat and weather, and it is as hard as porcelain on the Mohs scale. A standard-sized glass disc can store around 360TB of data and claims an estimated lifespan of up to 13.8 billion years, even at temperatures of 190°C. That’s as old as the Universe, and more than three times the age of the Earth. Progress has been negligible.

Holographic storage has been attempted for years, and efforts include nanoparticle-based film that can store information as 3D holograms, improving data density, read and write speeds, and stability in harsh conditions. 5D crystal technology is a multi-state optical WORM technology, but external UV light can erase the data, which doesn’t bode well for its long-term stability.

Quantum storage research is investigating ways to use quantum physics to store a bit of data in the spin of an electron. In the future, we could see instant data syncing between two points anywhere, based on quantum entanglement. Quantum computing may ultimately be the truly transformational technology, but “when” remains completely unknown.

Note: These technologies have been under development for decades and often make headlines but have never made it out of the laboratory. Given the challenges they face, it’s highly unlikely that any of these will arrive in the foreseeable future. So far there has been more written about these technologies than has been written on them.

Summary

The storage industry is hungry for new, innovative hardware and software solutions that can change the rules of the game. Momentum is building for a new deep archive storage tier with tremendous economic benefits to emerge. The new tier will effectively address cost, scalability and security, the most compelling future storage considerations for IT managers. By 2024, archival data will represent between 60% and 80% of all digital data, making it the single largest storage class, and archival storage will approach $11 billion in annual revenues, fueling demand for a new storage paradigm that can drive storage costs closer to the $1/TB level. To meet the archive challenge, disruption may be the greatest differentiator. The key question now is which future technology will successfully reinvent the traditional archival storage tiers. Or will businesses be forced to continue using the less-than-optimal storage solutions of the past?
