Start-Up Profile: MemVerge

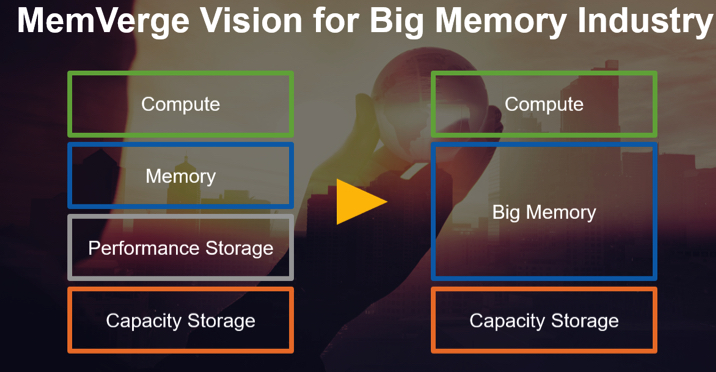

In software-defined memory technology that virtualizes different types of memory including DRAM and persistent memory

This is a Press Release edited by StorageNewsletter.com on July 23, 2020 at 2:18 pm

Company:

MemVerge, Inc.

HQ:

Milpitas, CA

Date founded:

2017

Financial funding:

Total of $43.5 million from storage investors Intel Capital, Cisco Investments, NetApp and SK hynix, as well as Gaorong Capital, Glory Ventures, Jerusalem Venture Partners, LDV Partners, Lightspeed Venture Partners, and Northern Light VC:

- seed round in 2017 but amount not revealed

- $24.5 million Series A in 2019

- $19 million Series B in 2020

Revenue:

Multiple PoC installations expected to convert to sales during 2H20 and 1H21.

Founders:

Shuki Bruck, chairman, is the Gordon and Betty Moore professor of Computation and Neural Systems and Electrical Engineering at the California Institute of Technology (Caltech). He was the founding director of the Caltech IST program, and co-founded and served as chairman of XtremIO and Rainfinity.

Charles Fan, CEO, was formerly CTO of Cheetah Mobile, leading its technology teams, and an SVP and GM at VMware, where he founded the storage business unit that developed the Virtual SAN product. He also worked at EMC and was the founder of the EMC China R&D Center. He joined EMC via the acquisition of Rainfinity, where he was a co-founder and CTO.

Yue Li, CTO, previously worked as a senior post-doctoral scholar in memory systems at Caltech. He has research experience on both theoretical and experimental aspects of algorithms for non-volatile memories. His research has been published in journals and conferences on storage.

Number of employees:

Approximately 50

Technology:

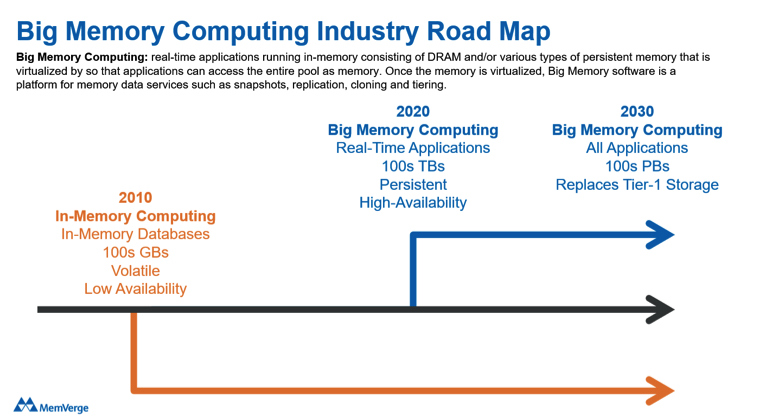

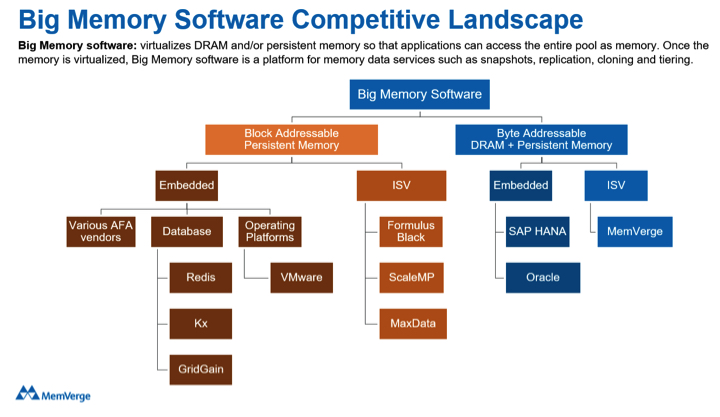

Big memory software is a new class of software-defined memory technology that virtualizes different types of memory, including DRAM and persistent memory. Once virtualized, the software-defined memory can be accessed by applications without modification, and can be replicated, tiered, and recovered at memory speeds.

Products description:

Memory can be accessed without code changes, scale out in clusters to provide the capacity needed by real-time analytics and AI/ML apps, and deliver enterprise data services for HA.

Memory Machine software steamrolls 4 major limitations of in-memory computing:

1. Although not quite as fast as DRAM, persistent memory (PMEM) promises the ability to keep data intact for fast in-memory startup and recovery.

2. Big memory software enables transparent access to PMEM – Without big memory software, applications must be modified to access PMEM, which is a major obstacle to simple and fast deployment of PMEM and all its benefits. Big memory software responds by virtualizing DRAM and PMEM so that its transparent memory service does the work needed for applications to access PMEM without code changes.

3. Taking memory capacity to the next level – PMEM offers higher-density memory modules. Big memory software allows DRAM and high-density PMEM to scale out in a cluster to form giant memory lakes with hundreds of terabytes in a single pool.

4. Big memory software delivers data services for HA – It is a platform for an array of memory data services starting with snapshot, cloning, replication and fast recovery. These are the same type of data services that help disk and flash storage achieve HA, only performing at memory speeds.
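To make the idea of a virtualized DRAM and PMEM pool concrete, here is a minimal toy sketch of two-tier placement. It is not MemVerge's implementation: the `TieredPool` class, its method names, and the least-recently-used demotion policy are all assumptions chosen for illustration, and plain Python dictionaries stand in for real memory tiers.

```python
from collections import OrderedDict


class TieredPool:
    """Toy two-tier pool (hypothetical): a small fast tier labeled "DRAM"
    in front of a larger tier labeled "PMEM". Callers use put/get and
    never choose a tier themselves."""

    def __init__(self, dram_slots: int):
        if dram_slots < 1:
            raise ValueError("dram_slots must be at least 1")
        self.dram_slots = dram_slots
        self.dram = OrderedDict()  # ordered from least to most recently used
        self.pmem = {}

    def put(self, key, value):
        """Store a value in the fast tier, demoting older entries if needed."""
        self.pmem.pop(key, None)  # avoid keeping a stale copy in the slow tier
        self.dram[key] = value
        self.dram.move_to_end(key)
        self._demote_overflow()

    def get(self, key):
        """Return a value from whichever tier holds it; promote on a slow-tier hit."""
        if key in self.dram:
            self.dram.move_to_end(key)
            return self.dram[key]
        value = self.pmem.pop(key)  # raises KeyError if the key is unknown
        self.dram[key] = value
        self._demote_overflow()
        return value

    def tier_of(self, key):
        """Report where a key currently lives, or None if it is absent."""
        if key in self.dram:
            return "DRAM"
        if key in self.pmem:
            return "PMEM"
        return None

    def _demote_overflow(self):
        # Move least recently used entries to the slow tier until DRAM fits.
        while len(self.dram) > self.dram_slots:
            old_key, old_value = self.dram.popitem(last=False)
            self.pmem[old_key] = old_value
```

The point of the sketch is only the shape of the interface: the caller reads and writes keys, and placement between tiers happens behind that interface, which is the sense in which access is transparent.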

In the years ahead, the data universe will continue to expand, and the new normal will be real-time analytics and AI/ML integrated into mainstream business apps, but only if in-memory computing infrastructure can keep pace. Big memory computing – where memory is abundant, persistent, and highly available – will succeed in unleashing big (real-time) data.

Release dates:

- Memory Machine early access release with special support – April 2020

- Memory Machine availability release – September 2020

Price:

$5/GB/year of memory used
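As a rough illustration of what this rate means in practice, the sketch below multiplies memory used by the quoted $5/GB/year. It assumes a flat rate with no volume discounts, minimums, or support fees, none of which are stated in this profile; the function name and the example capacities are hypothetical.

```python
PRICE_PER_GB_YEAR = 5.0  # USD, the rate quoted in this profile


def annual_cost(memory_gb: float, price_per_gb_year: float = PRICE_PER_GB_YEAR) -> float:
    """Return the yearly cost in USD for memory_gb of memory used.

    Assumes simple flat-rate pricing; real terms may differ.
    """
    if memory_gb < 0:
        raise ValueError("memory_gb must be non-negative")
    return memory_gb * price_per_gb_year


if __name__ == "__main__":
    # Hypothetical capacities: a 512 GB server and a 3 TB (3,072 GB) server.
    for gb in (512, 3072):
        print(f"{gb} GB -> ${annual_cost(gb):,.0f}/year")
```

Under these assumptions, 512 GB of memory used would cost $2,560 per year and 3,072 GB would cost $15,360 per year.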

Roadmap

Partners:

- DRAM vendors: SK hynix (investor)

- Persistent memory vendors: Intel (investor)

- Server vendors: Cisco (investor), Penguin (solutions including Memory Machine SW)

- Storage vendors: NetApp (investor)

- Database vendors: Kx, Redis (solutions qualified by MemVerge)

Distributor:

Colfax

OEMs:

Penguin, HPE (joint solutions)

Customers:

Names confidential, but are in the high-frequency trading and M&E markets

Applications:

- Latency-sensitive transactional workloads such as trading applications in capital markets

- Memory-intensive applications in M&E, healthcare, and oil and gas

- AI/ML inference like fraud detection, image classification and recommendation engines

Target market:

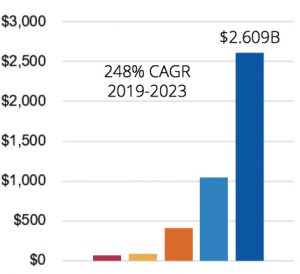

Market for real-time data and applications that run in-memory. According to IDC, real-time data will comprise almost 25% of all data by 2024.

Persistent Memory Revenue Forecast 2019-2023, IDC

Competition:

Read also:

Stealthy Start-Up MemVerge Discovered

In software that will empower seamless convergence of main computer memory and storage using single pool of non-volatile RAM

by Jean Jacques Maleval | November 22, 2018 | News
