Weka AI from WekaIO Accelerates Edge-to-Core-to-Cloud Data Pipelines

Expedites time-to-market and time-to-value while delivering agility and security at scale.

This is a Press Release edited by StorageNewsletter.com on April 28, 2020 at 2:33 pm.

WekaIO, Inc. introduced Weka AI, a transformative storage solution framework underpinned by the Weka File System (WekaFS) that enables accelerated edge-to-core-to-cloud data pipelines.

Weka AI is a framework of customizable reference architectures (RAs) and software development kits (SDKs) built with technology alliances such as Nvidia Corp., Mellanox Technologies, Ltd., and others in the Weka Innovation Network (WIN). The solution enables chief data officers, data scientists and data engineers to accelerate deep learning (DL) pipelines for genomics, medical imaging, the financial services industry (FSI) and advanced driver-assistance systems (ADAS). It is available through resellers and channel partners and scales from small and medium to large integrated solutions.


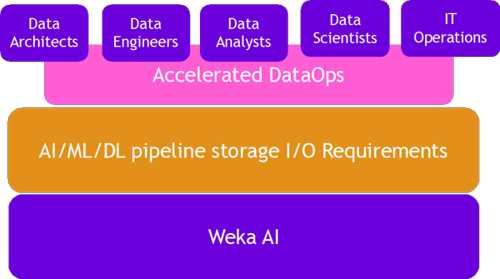

AI data pipelines are inherently different from traditional file-based IO applications. Each stage within an AI data pipeline has distinct storage IO requirements: massive bandwidth for ingest and training, mixed read/write handling for extract, transform, load (ETL), low latency for inference, and a single namespace for visibility across the entire pipeline. Additionally, AI at the edge is driving the need for edge-to-core-to-cloud data pipelines. A storage solution must therefore meet these varied requirements and deliver timely insights at scale. Traditional solutions lack these capabilities and often fall short of the performance, shareability across personas, and data mobility requirements. Industries need solutions that overcome these challenges: data management for the AI era that provides actionable intelligence by breaking down silos and brings operational agility and governance to these data pipelines.

Weka AI is a framework combining multiple technology partnerships, architected to accelerate DataOps by solving the storage challenges common to IO-intensive workloads such as AI, and to deliver production-ready solutions. It leverages WekaFS to accelerate the AI data pipeline, delivering more than 73GB/sec of bandwidth to a single GPU client. In addition, it delivers operational agility with versioning, explainability and reproducibility, and provides governance and compliance with in-line encryption and data protection. Engineered solutions with partners in the WIN program ensure that Weka AI will provide data collection, workspaces, deep neural network (DNN) training, simulation, inference and lifecycle management for the entire data pipeline.

Kevin Tubbs, senior director, technology and business development, advanced solutions group, Penguin Computing, said: “Weka AI provides a solution to meet the requirements of modern AI applications. We are very excited to be working with Weka to accelerate next gen AI data pipelines.”

Amrinderpal Singh Oberai, director, data and AI, Groupware Technology, Inc., said: “The Groupware Data and AI team has tested and validated the Weka AI reference architecture in-house. The Weka AI framework provides us the flexibility and technology innovation to build custom solutions along with other ISV technology partners to solve industry challenges through AI.”

Paresh Kharya, director, product management, accelerated computing, Nvidia, said: “End-to-end application performance for AI requires feeding high performance NVIDIA GPUs with a high throughput data pipeline. Weka AI leverages GPUDirect storage to provide a direct path between storage and GPUs, eliminating I/O bottlenecks for data intensive AI applications.”

Gilad Shainer, SVP, marketing, Mellanox, said: “IB has become the de-facto standard for high performance and scalable AI infrastructures, delivering high data throughput and In-Network Computing acceleration engines. Utilizing GPUDirect and GPUDirect storage over IB with the Weka AI framework provides our mutual customers with a world-leading platform for AI applications.”

Liran Zvibel, CEO and co-founder, WekaIO, said: “GPUDirect Storage eliminates IO bottlenecks and dramatically reduces latency, delivering full bandwidth to data hungry applications. By supporting GPUDirect Storage in its implementations, Weka AI continues to deliver on its promise of highest performance at any scale for the most data-intensive applications.”

Shailesh Manjrekar, head, AI and strategic alliances, WekaIO, said: “We are very excited to launch Weka AI to help businesses embark on their digital transformation journey. Line-of-business users as well as IT leaders can now implement AI 2.0 and cognitive computing workflows that scale, accelerate and derive actionable business insights, thus enabling Accelerated DataOps.”

Resources:

Weka AI with NVIDIA reference architecture (registration required)

Weka AI DS

Accelerated DataOps with Weka AI Blog – Part 1

Accelerated DataOps with Weka AI Blog – Part 2

Accelerated DataOps with Weka AI Blog – Part 3

