Sidekick, High Performance Load Balancer, by MinIO

Soft launch for second product of portfolio

By Philippe Nicolas | April 3, 2020 at 2:35 pm

Blog post published on March 31, 2020 on MinIO, Inc.’s web site, written by engineer Krishna Srinivas

Almost all modern cloud-native applications use HTTPS as their primary transport mechanism, even within the network. Every service is a collection of HTTPS endpoints provisioned dynamically at scale. Traditional load balancers built for serving web applications across the Internet are at a disadvantage here, since they rely on old-school DNS round-robin techniques for load balancing and failover. DNS-based solutions cannot be adapted to meet modern requirements.

While some of the software-defined load balancers like NGINX, HAProxy, and Envoy Proxy are full-featured and handle complex web application requirements, they are not designed for high-performance, data-intensive workloads.

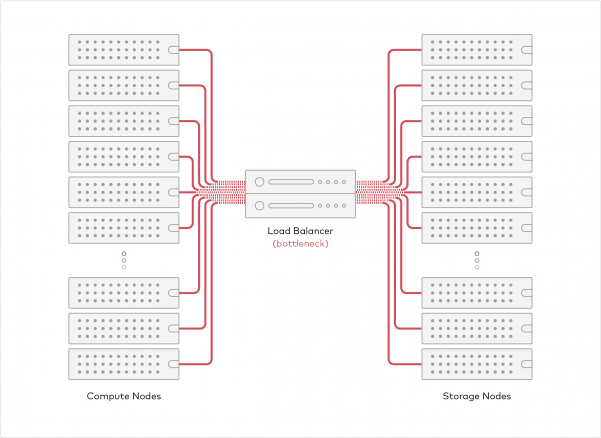

Modern data processing environments move terabytes of data between the compute and storage nodes on each run. One of the fundamental requirements in a load balancer is to distribute the traffic without compromising on performance. The introduction of a load balancer layer between the storage and compute nodes as a separate appliance often ends up impairing the performance. Traditional load balancer appliances have limited aggregate bandwidth and introduce an extra network hop. This architectural limitation is also true for software-defined load balancers running on commodity servers.

This becomes an issue in modern data processing environments, where it is common to have hundreds to thousands of nodes pounding on the storage servers concurrently.

Since there were no load balancers designed to meet these high-performance data processing needs, we built one ourselves. It is called Sidekick, and the name harkens back to the days when every superhero (MinIO) had a trusty sidekick.

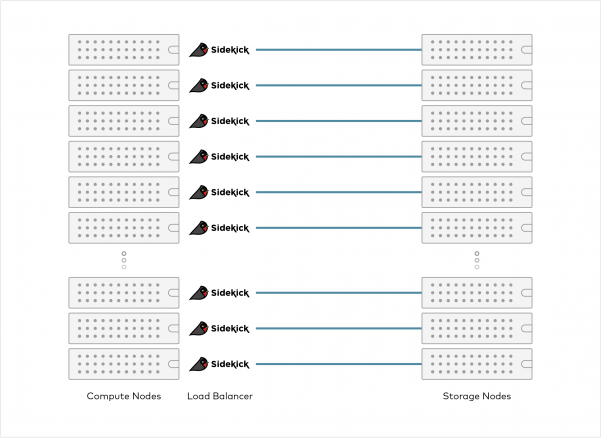

Sidekick solves the network bottleneck by taking a sidecar approach instead. It runs as a tiny sidecar process alongside each client application. This way, the applications can communicate directly with the servers without an extra physical hop. Since each client runs its own Sidekick in a shared-nothing model, you can scale the load balancer to any number of clients.

In a cloud-native environment like Kubernetes, Sidekick runs as a sidecar container. It is easy to add Sidekick to existing applications without any modification to your application binary or container image.

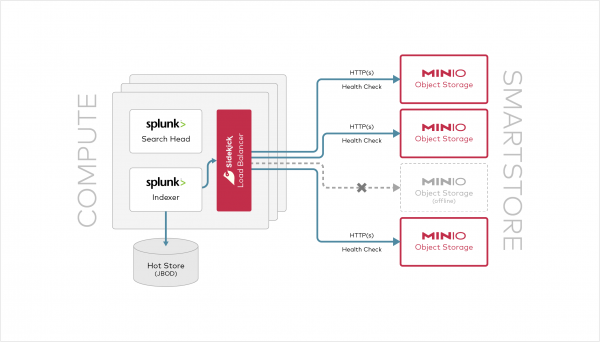

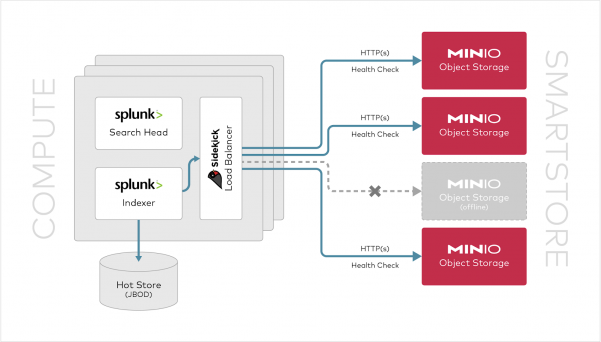

Example: Distributing Splunk Indexers' SmartStore Access Across a MinIO Cluster

Let’s look at a specific application example: Splunk.

In this case we will use MinIO as a high-performance, AWS S3-compatible object store that serves as the SmartStore endpoint for Splunk. Refer to the Leveraging MinIO for Splunk SmartStore S3 Storage whitepaper for an in-depth review.

Splunk runs multiple indexers on a distributed set of nodes to spread the workload. Sidekick sits between the indexers and the MinIO cluster to provide load balancing and failover. Because Sidekick is based on a shared-nothing architecture, each Sidekick is deployed independently alongside a Splunk indexer. As a result, Splunk talks to the local Sidekick process, and Sidekick becomes the interface to MinIO. The indexers talk to the object storage servers via the HTTP RESTful AWS S3 API.

Configuring Splunk SmartStore to use Sidekick:

[volume:s3]

storageType = remote

path = s3://smartstore/remote_volume

remote.s3.access_key = minio

remote.s3.secret_key = minio123

remote.s3.supports_versioning = false

remote.s3.endpoint = http://localhost:8080

Sidekick takes a list of MinIO server addresses (16 of them in this example) and the health-check path, and combines them into a single local endpoint. The load is evenly distributed across all the servers using a randomized round-robin scheduler.

$ sidekick --health-path=/minio/health/ready https://minio{1...16}:9000
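To make the command above more concrete, here is a minimal Python sketch of the two ideas it relies on: expanding the `{1...16}` range into 16 server addresses, and walking them in a randomized round-robin order. This is an illustration only, not Sidekick's actual implementation. The function names `expand_ellipsis` and `randomized_round_robin` are hypothetical, and "shuffle once per cycle, then visit every server once" is just one plausible reading of "randomized round-robin".

```python
import random
import re
from typing import Iterator, List


def expand_ellipsis(pattern: str) -> List[str]:
    """Expand a single '{a...b}' numeric range in a URL pattern.

    Hypothetical helper: mimics the range notation shown in the
    command line, not Sidekick's own parser.
    """
    match = re.search(r"\{(\d+)\.\.\.(\d+)\}", pattern)
    if match is None:
        return [pattern]
    start, end = int(match.group(1)), int(match.group(2))
    prefix, suffix = pattern[: match.start()], pattern[match.end():]
    return [f"{prefix}{i}{suffix}" for i in range(start, end + 1)]


def randomized_round_robin(backends: List[str], rng: random.Random) -> Iterator[str]:
    """Yield backends forever, one full shuffled cycle at a time.

    Every backend is used exactly once per cycle, so load stays even,
    while the order differs from cycle to cycle (assumed interpretation).
    """
    if not backends:
        raise ValueError("at least one backend is required")
    while True:
        order = list(backends)
        rng.shuffle(order)
        yield from order


servers = expand_ellipsis("https://minio{1...16}:9000")
picker = randomized_round_robin(servers, random.Random(0))
first_cycle = [next(picker) for _ in range(len(servers))]
```

Because each Sidekick instance would shuffle independently, many clients starting at the same moment would not all hit `minio1` first, which is the usual motivation for adding randomness to plain round-robin.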

Sidekick constantly monitors the MinIO servers for availability using the readiness service API. For legacy applications, it will fall back to port reachability for readiness checks. This readiness API is a standard requirement in the Kubernetes landscape. If any of the MinIO servers goes down, Sidekick will automatically reroute the S3 requests to other servers until the failed server comes back online. Applications get the benefit of the circuit breaker design pattern for free.
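The health-check and failover behavior described here can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Sidekick's code: `http_ready`, `port_reachable`, `is_ready` and `BackendPool` are hypothetical names, the readiness probe is assumed to be a plain HTTP GET that succeeds on status 200, and the demo at the end uses a fake check function instead of real servers.

```python
import http.client
import socket
import urllib.request
from typing import Callable, Iterable, Optional
from urllib.parse import urlsplit


def http_ready(base_url: str, health_path: str, timeout: float = 2.0) -> bool:
    """Readiness probe: True if GET base_url + health_path returns HTTP 200."""
    try:
        with urllib.request.urlopen(base_url + health_path, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError, http.client.HTTPException):
        return False


def port_reachable(base_url: str, timeout: float = 2.0) -> bool:
    """Fallback probe: True if a TCP connection to the server's port succeeds."""
    parts = urlsplit(base_url)
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        with socket.create_connection((parts.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


def is_ready(base_url: str, health_path: Optional[str]) -> bool:
    """Use the readiness path when one is configured, else port reachability."""
    if health_path:
        return http_ready(base_url, health_path)
    return port_reachable(base_url)


class BackendPool:
    """Round-robin pool that skips backends marked unhealthy."""

    def __init__(self, backends: Iterable[str], check: Callable[[str], bool]):
        self.backends = list(backends)
        self.check = check
        self.healthy = {b: True for b in self.backends}
        self._next = 0

    def refresh(self) -> None:
        """Re-probe every backend; a real sidecar would run this periodically."""
        for backend in self.backends:
            self.healthy[backend] = self.check(backend)

    def pick(self) -> str:
        """Return the next healthy backend, skipping failed ones."""
        for _ in range(len(self.backends)):
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
            if self.healthy[backend]:
                return backend
        raise RuntimeError("no healthy backend available")


# Demo with a fake check: minio2 is "down", then recovers.
down = {"http://minio2:9000"}
pool = BackendPool(
    ["http://minio1:9000", "http://minio2:9000", "http://minio3:9000"],
    check=lambda url: url not in down,
)
pool.refresh()
picks_while_down = [pool.pick() for _ in range(4)]
down.clear()
pool.refresh()
picks_after_recovery = [pool.pick() for _ in range(3)]
```

In a real deployment the `check` argument would be something like `lambda url: is_ready(url, "/minio/health/ready")`. Requests never reach a server that failed its last probe, which is what spares the application from waiting on its own timeouts.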

Advantages of Sidekick

What are the core advantages of using Sidekick over other load balancers?

Layer Reduction – Eliminates the intermediate layer and extra hardware requirements. This delivers superior performance and scalability.

Instant Failover – Detects failures early and directs application traffic to other servers, avoiding expensive application timeouts and complex error-handling requirements.

Performance – Sidekick is purpose-built for high-performance data transfers to handle large environments. It is tested to scale seamlessly with 100GbE networks and NVMe class storage.

Minimalism – Taking a minimalist approach is the key to its performance advantage. Other load balancers have evolved into bloatware where the complexity comes at the cost of manageability and performance.

Disadvantages of Sidekick

It is designed to only run as a sidecar with a minimal set of features. If you have complex requirements, there are better alternatives.

Just as importantly, we don’t anticipate changing this minimalist, performance-oriented approach. Sidekick is designed to do a few things and do them exceptionally well.

Roadmap

The Sidekick team is currently working on adding shared caching storage functionality. This feature will enable applications to transparently use MinIO on NVMe or Optane SSDs as a caching tier. It will be useful across a number of edge computing use cases.

How to Get Started

Sidekick is licensed under GNU AGPL v3 and is available on GitHub. We encourage you to take it for a spin. If you have already deployed MinIO, you will immediately grasp its minimalist similarity. If not, take the opportunity to fire up the entire object storage suite. We have documentation and the community Slack channel to help you on your way.

Comments

Once again MinIO shakes up established positions, as it did a few years ago with its open source object storage software, which is today among the most deployed and adopted on the planet. The numbers speak for themselves.

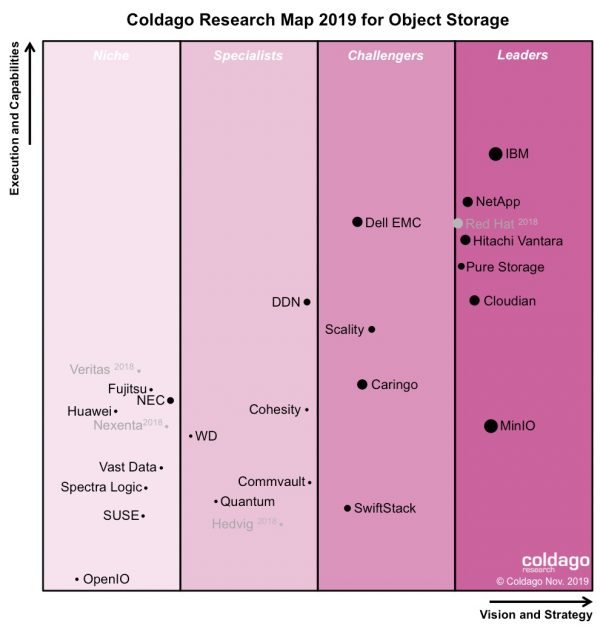

In its latest technology report, Coldago Research positioned MinIO as a leader in its Map 2019 for Object Storage, published in December 2019, illustrating the massive adoption by developers, partners and enterprises of all sizes. Partners include Cisco, Datera, Humio, iXsystems, Nutanix, Pivotal, Portworx, Qumulo, Robin.IO, Splunk, Ugloo and VMware. Configurations and reference architectures from various partners turn up in many places, confirming its unique status. The product has become a reference in object storage, sometimes copied by competitors and for others a source of inspiration.

In the classic Build, Buy or Partner model, the easiest path would have been to partner with someone, but that means adopting a solution not really tailored for MinIO. Buying is not in its DNA, so the company once again built something new, well aligned with its storage software, requirements, design and performance needs.
