DDN Works With Nvidia to Ease Deployment and Set Boundaries in Performance for Data-Intensive AI and HPC

Combine power of DGX SuperPOD systems with A3I data management system so customers can deploy HPC infrastructure with minimal complexity and reduced timelines.

This is a Press Release edited by StorageNewsletter.com on November 29, 2019 at 2:27 pm

DataDirect Networks, Inc. (DDN) worked with Nvidia Corp. to combine the power of DGX SuperPOD systems with the A3I data management system so customers can deploy HPC infrastructure with minimal complexity and reduced timelines.

Additionally, by leveraging the Nvidia Magnum IO software stack to optimize IO and the DDN parallel file system, customers can speed up data science workflows by up to 20 times.

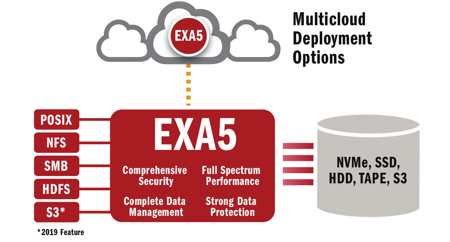

The Magnum IO software suite increases performance and reduces latency to move massive amounts of data from storage to GPUs in minutes, rather than hours. The company’s testing has confirmed that, using the software’s Nvidia GPUDirect Storage feature, intensive workflows will see improvements that directly benefit AI and HPC application output. The firm expects to support the Nvidia Magnum IO suite, including GPUDirect Storage, in an EXAScaler EXA5 release in the middle of 2020.

“We value the deep engineering interlock between Nvidia and DDN because of the direct benefits to our mutual customers,” said Sven Oehme, chief research officer, DDN. “Our companies share the desire to push the boundaries of I/O performance while simultaneously making deployment of these very large systems much easier.”

During testing with DGX SuperPOD, which is itself designed to deploy supercomputing-level compute quickly, the company demonstrated that its data management appliance, the AI400, could be deployed within hours, and that a single appliance could support the data-hungry DGX SuperPOD, scaling with the number of GPUs all the way to 80 nodes. Benchmarks across a variety of deep learning models with different I/O requirements, representative of deep learning workloads, showed that the company’s system could keep the DGX SuperPOD system fully saturated.

“DGX SuperPOD was built to deliver the world’s fastest performance on the most complex AI workloads,” said Charlie Boyle, VP and GM, DGX systems, Nvidia. “With DDN and Nvidia, customers now have a systemized solution that any organization can deploy in weeks.”

While the testing with DGX SuperPOD described above was performed with the AI400, the company has since announced the AI400X. The appliance has been updated to provide better IO/s and throughput and will ship with Mellanox HDR100 IB connections to support next-gen HDR fabrics. With these enhancements, the AI400X appliance could provide better performance for AI and HPC applications.

Resources:

NVIDIA Magnum IO

A3I appliances

DGX SuperPOD Solution Brief

SuperPOD Reference Architecture

Read also:

SC19: DDN Expands Data Management Capabilities and Launches Platforms for AI and HPC

Next gen of SFA platforms, data management features of EXAScaler EXA5 file solution

November 20, 2019 | Press Release
