Vast Data Emerges From Stealth Mode With $80 Million in Funding

Revealing file server or NAS with flash at HDD price

This is a Press Release edited by StorageNewsletter.com on March 1, 2019 at 2:20 pm.

VAST Data, Inc. announced its new storage architecture, intended to break decades of tradeoffs in order to eliminate infrastructure complexity and application bottlenecks. VAST’s exabyte-scale Universal Storage system is built entirely from high-performance flash media and features several innovations that result in a total cost of acquisition equivalent to that of HDD-based archive systems.

Enterprises can now consolidate applications onto a single tier of storage that meets the performance needs of the most demanding workloads, is scalable enough to manage all of a customer’s data and is affordable enough that it eliminates the need for storage tiering and archiving.

As part of the launch, the start-up announced it has raised $80 million of funding in two rounds, backed by Norwest Venture Partners, TPG Growth, Dell Technologies Capital, 83 North (formerly Greylock IL) and Goldman Sachs.

The announcement of this funding comes on the heels of VAST completing its first quarter of operation, in which it experienced historic customer adoption and product sales. Since making the product generally available in November 2018, the company’s bookings have outpaced those of the fastest-growing enterprise technology companies.

“Storage has always been complicated. Organizations for decades have been dealing with a complex pyramid of technologies that force some tradeoff between performance and capacity,” said Renen Hallak, founder and CEO. “VAST Data was founded to break this and many other long-standing tradeoffs. By applying new thinking to many of the toughest problems, we are working to simplify how customers store and access vast reserves of data in real time, leading to insights that were not possible before.”

Birth of Universal Storage

The young company invented a new type of storage architecture to exploit technologies that weren’t available until 2018, such as NVMe over Fabrics, Storage Class Memory (SCM) and low-cost QLC flash. The result is an exabyte-scale, all-NVMe flash, disaggregated shared-everything (DASE) architecture that breaks from the idea that scalable storage needs to be built as shared-nothing clusters. This architecture enables global algorithms that deliver game-changing levels of storage efficiency and system resilience.

Some of the significant breakthroughs of VAST’s Universal Storage platform include:

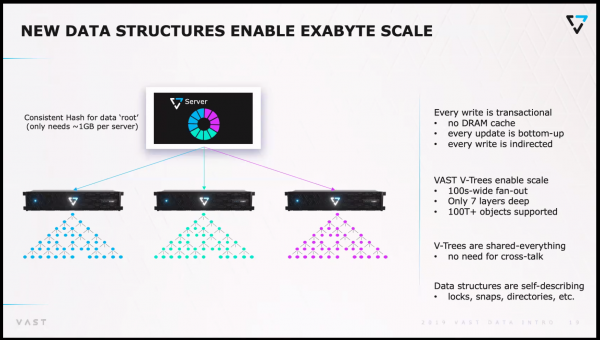

• Exabyte-Scale, 100% Persistent Global Namespace: Each server has access to all of the media in the cluster, eliminating the need for expensive DRAM-based acceleration or HDD tiering and ensuring that every read and write is serviced by fast NVMe media. Servers are loosely coupled in the VAST architecture and can scale to near-infinite numbers because they don’t need to coordinate I/O with each other. They are also not encumbered by the cluster cross-talk that often challenges shared-nothing architectures. The servers can be containerized and embedded into application servers to bring NVMe over Fabrics performance to every host.

• Global QLC Flash Translation: The VAST DASE architecture is optimized for the unique and challenging way that new low-cost, low-endurance QLC media must be written to. By employing new application-aware data placement methods in conjunction with a large SCM write buffer, Universal Storage can extract unnaturally high levels of longevity from low-endurance QLC flash and enable low-cost flash systems to be deployed for over 10 years.

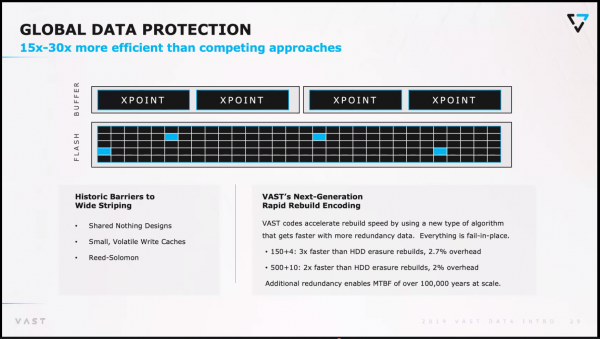

• Global Data Protection: New Global Erasure Codes have broken an age-old tradeoff between the cost of data protection and a system’s resilience. With the company’s work on data protection algorithms, storage gets more resilient as clusters grow, while data protection overhead is as low as 2% (compared to 33 to 66% for traditional systems).

• Similarity-Based Data Reduction: The vendor has invented a new form of data reduction that is both global and byte-granular. The system discovers and exploits patterns of data similarity across a global namespace at a level of granularity that is 4,000 to 128,000 times smaller than today’s deduplication approaches. The net result is a system that realizes efficiency advantages on unstructured data, structured data and backup data without compromising the access speeds that customers expect from all-NVMe flash technology.
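VAST does not describe how its similarity-based reduction works beyond the points above, so the following is only a generic sketch of similarity (delta) compression, not the vendor’s algorithm. It assumes a similar reference block has already been found (the similarity-detection step is out of scope here) and uses the preset dictionary (`zdict`) of Python’s standard zlib module, so that a near-duplicate block is stored as little more than its differing bytes, a case exact-match deduplication would miss. The helper names and block sizes are hypothetical.

```python
import os
import zlib

# Generic illustration of similarity (delta) compression; not VAST's proprietary method.

def compress_standalone(block: bytes) -> bytes:
    """Compress a block on its own, with no reference."""
    return zlib.compress(block, 9)

def compress_against_reference(block: bytes, reference: bytes) -> bytes:
    """Compress `block` using a similar `reference` block as a zlib preset dictionary.

    Byte runs shared with the reference become short back-references, so a
    near-duplicate costs little more than its differing bytes.
    """
    comp = zlib.compressobj(level=9, zdict=reference)
    return comp.compress(block) + comp.flush()

def decompress_against_reference(data: bytes, reference: bytes) -> bytes:
    """Invert compress_against_reference; the same reference must be available."""
    decomp = zlib.decompressobj(zdict=reference)
    return decomp.decompress(data) + decomp.flush()

# Hypothetical blocks: 16 KiB of random (incompressible) bytes and a copy with
# three flipped bytes. Exact-match deduplication would store both in full.
reference = os.urandom(16 * 1024)
similar = bytearray(reference)
for offset in (100, 5000, 12000):
    similar[offset] ^= 0xFF
similar = bytes(similar)

alone = compress_standalone(similar)
delta = compress_against_reference(similar, reference)
assert decompress_against_reference(delta, reference) == similar
print(f"standalone: {len(alone)} bytes, with reference: {len(delta)} bytes")
```

On random data, standalone compression saves essentially nothing, while the reference-based version shrinks the block to a small fraction of its size, because only the three changed bytes cannot be expressed as matches against the reference.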

Key Benefits

There are three ways that customers can deploy the Universal Storage platform: a turnkey server and storage cluster appliance, storage plus VAST container software that runs on customer machines, or software only.

Whatever the deployment model, customers enjoy benefits from VAST’s synthesis of storage innovations, including:

• Flash Performance at HDD Cost: All applications, from AI to backup, can be served by flash, increasing performance without increasing the cost of capacity.

• Massive Scalability: Customers no longer need to move and manage their data across a complicated collection of storage systems. Everything can be available from a single ‘source of truth’ in real-time. Universal Storage is easier to manage and administer, and becomes more reliable and efficient as it scales.

• New Insights: With this increased flexibility and scalability, there are new opportunities to analyze and achieve insights from vast reserves of data.

• Data Center in a Rack: Customers can now house dozens of petabytes in a single rack, providing reductions in the amount of floor space, power and cooling needed.

• 10-Year Investment Protection: With a 10-year endurance warranty, customers can now deploy QLC flash with peace of mind. The DASE architecture enables better investment amortization than legacy HDD architectures, which need to be replaced every three to five years, while also eliminating the need to perform complex data migrations.

Austin Che, founder, Ginkgo Bioworks, said: “Ginkgo Bioworks designs custom microbes for a variety of industries using our automated biological foundry. Our mission to make biology easier to engineer is enabled by VAST Data making storage easy. Our output is exponentially increasing along with decreasing unit costs so we are always looking for new technologies that enable us to increase output and reduce cost. VAST Data provides Ginkgo the potential to ride the declining cost curve of flash while also providing near-infinite scale.”

Yogesh Khanna, SVP and CTO, General Dynamics Information Technology (GDIT), said: “Our work with VAST Data provides an opportunity for General Dynamics customers to utilize the vision of an all-flash data center with deep analytics for large quantities of data. GDIT is already delivering multi-petabyte VAST Universal Storage systems to customers who are eager to move beyond the HDD era and accelerate access to their data.”

Eyal Toledano, CTO, Zebra Medical Vision Ltd., said: “Zebra is transforming patient care and radiology with the power of AI. To achieve our mission, our GPU infrastructure needs high-speed accelerated file access to shared storage that is faster than what traditional scale out file systems can deliver. That said – we’re also a fast-growing company and we don’t have the resources to become HPC storage technicians. VAST provides Zebra a solution to all of our A.I. storage challenges by delivering performance superior to what is possible with traditional NAS while also providing a simple, scalable appliance that requires no effort to deploy and manage.”

Comments

This announcement marks a real event in the storage industry: Vast Data, based in NYC, NY, with operations and a support center in San Jose, CA, and R&D in Israel, is shaking up the market, established positions and many strong beliefs held for decades.

The main idea behind the project, and its trigger, was to drive cost down with a genuinely new approach to storage, without distinguishing between primary and secondary. How can you beat HDDs, remove them completely, avoid complex data management such as tiering, and still deliver performance?

If you offer a flash farm at the price of an HDD one, you don’t need HDDs at all, tiering becomes useless and, guess what, you can put all data in a single tier, this new flash tier. Why would you consider HDDs any more? Vast Data makes this dream come true, proving it is possible and real at the data center level. According to Jeff Denworth, VP products, the price is around $0.30/GB in average cases and pennies per gigabyte for backup, when data are highly redundant.

We used to say, and it’s still true, that HDDs combined with data reduction (deduplication and compression) can beat tape for secondary storage, potentially pushing tape to deep archive. A similar approach is taken here, but for demanding environments where IO/s are critical to sustain real business applications. This result is made possible by storage class memory such as Intel 3D XPoint, QLC flash, end-to-end NVMe, unique algorithms for data reduction, protection and management, and a new internal file system. It was not possible before the first three of these elements existed, even with fantastic data-oriented algorithms.

We’re speaking here about universal both in terms of components and in terms of usage. We used to distinguish primary and secondary storage by their role in the enterprise. To be clear, primary storage supports the business: business-critical applications run on and use this storage, any downtime impacts the business, and it’s a must-have. Secondary storage protects and supports IT, not the business; this level is potentially optional if the primary holds everything, so secondary is a nice-to-have. If you lose it or don’t have it, the business running on the primary is not impacted. With Vast Data, this distinction disappears, as all data, production and copy data alike, can reside in a single all-flash tier at the HDD price.

A very important design choice is the total absence of state at the compute node level, since state makes it really difficult to deliver high performance for users’ IO/s, given the consistency challenges across many nodes. The development team chose an any-to-any architecture, named DASE for DisAggregated Shared Everything, meaning that any node can talk very fast to any flash device in every storage chassis thanks to NVMe over Fabrics. The cache/memory layer normally present in each server, and limited to that chassis, moves down into a shared pool accessible to every server, avoiding consistency challenges and some performance penalties. Vast Data demonstrates that caching and stateful models are the enemies of scalability.
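To illustrate why per-node state creates the consistency problem described above, here is a toy Python model, not Vast Data code. Two hypothetical server classes share the same media: one keeps a private read cache, the other keeps no state at all. Without a cache-invalidation protocol, the caching node serves stale data after another node writes, while the stateless node always reads the shared pool.

```python
class SharedMedia:
    """Stand-in for the SCM and QLC flash every server reaches over NVMe-oF (toy model)."""
    def __init__(self):
        self.blocks = {}

class CachingServer:
    """Hypothetical stateful node: keeps a private read cache."""
    def __init__(self, media):
        self.media = media
        self.cache = {}

    def write(self, key, value):
        self.media.blocks[key] = value
        self.cache[key] = value

    def read(self, key):
        # Served from the local cache if present; nothing tells this node about remote writes.
        if key not in self.cache:
            self.cache[key] = self.media.blocks.get(key)
        return self.cache[key]

class StatelessServer:
    """Hypothetical DASE-style node: holds no state, always reads the shared media."""
    def __init__(self, media):
        self.media = media

    def write(self, key, value):
        self.media.blocks[key] = value

    def read(self, key):
        return self.media.blocks.get(key)

def demo(server_cls):
    media = SharedMedia()
    a, b = server_cls(media), server_cls(media)
    a.write("x", 1)
    b.read("x")       # b sees version 1
    a.write("x", 2)   # a updates the value
    return b.read("x")

print(demo(CachingServer))    # 1: stale, b's cache was never invalidated
print(demo(StatelessServer))  # 2: every read goes to the shared media
```

A real stateful cluster fixes the stale read with invalidation or coherence traffic between nodes, which is exactly the cross-node coordination that grows with cluster size and that a stateless design avoids.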

The other key development relates to the QLC operating mode, as the engineering team wanted to fully control how the flash behaves in order to maximize cell endurance while meeting the initial cost target. A new data placement approach was invented, using the SCM layer to build and organize large write stripes for the QLC devices. The main idea was to eliminate garbage collection, read-modify-write operations and write amplification.
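The stripe-building principle can be sketched as a toy buffer: small writes are acknowledged from SCM and only forwarded to QLC as full, stripe-sized writes, so the flash never sees partial-stripe updates that would force a read-modify-write. The class name and the 1 MiB stripe and 4 KiB write sizes are hypothetical, and Vast’s application-aware placement logic is not public, so this shows only the general idea.

```python
class ScmWriteBuffer:
    """Toy SCM staging buffer in front of QLC flash (hypothetical model and sizes)."""

    def __init__(self, stripe_bytes):
        self.stripe_bytes = stripe_bytes
        self.pending = []        # writes acknowledged from SCM, not yet on QLC
        self.pending_bytes = 0
        self.qlc_stripes = []    # each entry is one full-stripe QLC write

    def write(self, data):
        # Acknowledge once the data sits in SCM; QLC is untouched until a stripe is full.
        self.pending.append(data)
        self.pending_bytes += len(data)
        while self.pending_bytes >= self.stripe_bytes:
            self._flush_stripe()

    def _flush_stripe(self):
        # Emit exactly one stripe-sized, sequential write and keep the remainder in SCM.
        joined = b"".join(self.pending)
        stripe, rest = joined[: self.stripe_bytes], joined[self.stripe_bytes :]
        self.qlc_stripes.append(stripe)
        self.pending = [rest] if rest else []
        self.pending_bytes = len(rest)


buf = ScmWriteBuffer(stripe_bytes=1024 * 1024)   # hypothetical 1 MiB stripe
for i in range(1000):
    buf.write(bytes([i % 256]) * 4096)           # 1,000 small 4 KiB writes
print(len(buf.qlc_stripes), "full-stripe QLC writes,", buf.pending_bytes, "bytes still in SCM")
```

The 1,000 small writes reach QLC as three 1 MiB stripe writes, with the remaining 950,272 bytes waiting in SCM for the next stripe; every QLC write is whole and sequential.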

The metadata store is also a key point, as Vast Data doesn’t rely on any commercial or open source database, not even those designed with a distributed philosophy in mind. Instead, the team built its own model shared across storage nodes since, again, nothing resides on compute nodes. They invented what they call a V-Tree, a very large structure with seven layers, to cover the data model.
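The article gives only two facts about the V-Tree: seven layers and a very large structure. Assuming a uniform fan-out and one node read per layer (both assumptions, not details from Vast), a little arithmetic shows why a shallow, wide tree suits a huge namespace: seven reads reach any entry, and a modest fan-out already covers a trillion entries. The function name and the entry count are hypothetical.

```python
def min_fanout(entries: int, levels: int = 7) -> int:
    """Smallest uniform fan-out f such that f**levels >= entries."""
    # Start from the floating-point estimate, then correct it with exact integer checks.
    f = max(1, round(entries ** (1.0 / levels)))
    while f ** levels < entries:
        f += 1
    while f > 1 and (f - 1) ** levels >= entries:
        f -= 1
    return f

entries = 10 ** 12  # hypothetical: one trillion metadata entries
print("fan-out needed with 7 layers:", min_fanout(entries))
# A binary tree needs ceil(log2(entries)) levels for the same coverage.
print("binary tree depth:", (entries - 1).bit_length())
```

For 10^12 entries this gives a fan-out of 52 with seven layers, against a depth of 40 for a binary tree, so widening each node is what keeps lookups short.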

Data protection and data reduction (the latter term chosen by Vast Data because it goes beyond deduplication) are applied once IO operations are acknowledged, in other words once data are written to the SCM layer, i.e. to the 3D XPoint devices. Compute nodes write the same data to three storage nodes, and then global erasure coding runs with very low overhead, around 2% with a layout such as 500+10. For reduction, the logic is global and works at the byte level. Both approaches are Vast Data IP.
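The 2% figure follows directly from the 500+10 layout. Below is a small Python helper (hypothetical name) that computes protection overhead for a k+m layout, both relative to user data and as a share of raw capacity. The 4+2 and 3-way replication rows are common reference points chosen for illustration, not schemes named in the announcement; replication is modeled as 1 data copy plus 2 extra copies.

```python
from fractions import Fraction

def protection_overhead(data_strips: int, parity_strips: int):
    """Return (overhead relative to user data, share of raw capacity) for a k+m layout."""
    k, m = data_strips, parity_strips
    return Fraction(m, k), Fraction(m, k + m)

layouts = {
    "500+10 (layout quoted above)": (500, 10),
    "4+2 (RAID-6 style)": (4, 2),
    "3-way replication as 1+2": (1, 2),
}
for name, (k, m) in layouts.items():
    vs_data, of_raw = protection_overhead(k, m)
    print(f"{name}: +{float(vs_data):.1%} over data, {float(of_raw):.1%} of raw")
```

This prints about 2.0% for 500+10, against 33.3% of raw capacity for 4+2 and 66.7% for triple replication, which matches the ends of the 33 to 66% range quoted for traditional systems if that range is read as a share of raw capacity. If the global code is MDS (Reed-Solomon style, an assumption), a 500+10 stripe would survive any 10 simultaneous strip failures.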

Finally, even if a lot of what happens inside the boxes is new, applications continue to consume storage through classic interfaces. The Vast Data farm is dedicated to unstructured content, so it exposes industry-standard file sharing protocols such as NFS, here v3 (we expect SMB in the near future), and a de facto object interface with an S3-compatible API.

We’ll see how the market reacts to this announcement, which represents a real breakthrough for the storage industry, backed by real engineering effort, development and innovation.

The $80 million in funding announced here was raised in three rounds:

- 2016: $15 million in Series A

- 2018: $25 million in Series A

- 2019: $40 million in Series B

Executives have deep backgrounds in AFA companies including XtremIO, Kaminario and Pure Storage.

Renen Hallak, CEO and co-founder: Prior to founding the start-up, led the architecture and development of an AFA at XtremIO (sold to EMC), from inception to over a billion dollars in revenue, while acting as VP R&D and leading a team of over 200 engineers; earlier developed a content distribution system at Intercast, from inception to initial deployment, and acted as chief architect; was also a member of the CTO team at Time to Know; published his thesis in the journal Computational Complexity and presented at the Theory of Cryptography Conference.

Shachar Fienblit, VP R&D and co-founder: came from Kaminario (7 years, ending as CTO) and IBM.

Jeff Denworth, VP products and co-founder, was at CTERA, DDN (for seven years), Cluster File Systems and Dataram.

Mike Wing, president: formerly spent 12 years at Dell EMC, ending as SVP, primary storage, and before that 3 years at EMC.

Avery Pham, VP operations: worked for more than 5 years at Pure Storage, DDN, EMC and Cisco.
