International Massive Storage Systems & Technology 2017 Recap

Probably the oldest storage conference

By Philippe Nicolas | May 24, 2017 at 2:55 pm

The 33rd edition of the International Massive Storage Systems & Technology conference, aka MSST 2017, took place last week at Santa Clara University.

A few hundred people attended. The five-day conference has a unique format: one day of tutorials, two days of invited papers and two days of peer-reviewed research papers, plus an opportunity for vendors to exhibit their solutions and interact with attendees. The first edition took place in May 1974 in Washington, DC as the IEEE Mass Storage Workshop.

This year’s topics centered on tiered storage, particularly campaign storage, along with erasure coding, open source storage, long-term and extreme-scale storage, and non-volatile memory.

Campaign storage was covered in four sessions: a Los Alamos National Laboratory (LANL) talk about MarFS, a talk on Campaign Storage by Peter Braam (also the name of his new company), a panel and a short talk. That much coverage shows how hot the topic is in HPC environments.

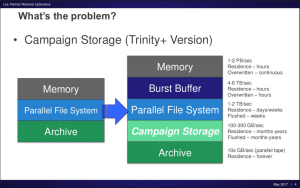

To define campaign storage, let’s first say that it is a new tier in the hierarchy of storage. LANL invented this concept in 2014 and uses a simple illustration to introduce the concept and the need for this new level.

LANL started the MarFS project a few years ago to implement a real campaign storage model, and tried two commercial products before finally selecting one.

The initial goal was ambitious in terms of capacity, and LANL built a first production design with 22PB, but only 2PB was in use after six months. David Bonnie, technical lead at LANL, said during his presentation that MarFS stopped using a commercial object store that was becoming too expensive. The team finally moved to a new open source design named Multi-Component Repositories, based on NFS/ZFS on the same systems, while still using GPFS for metadata storage. Peter Braam, CEO and founder of the company Campaign Storage, also promoted this approach in a more generic manner.

Erasure coding is now a common feature. Long-term storage of high volumes of data protected by replication rather than erasure coding carries a high TCO, because it requires more devices, more cabling, more racks and more energy. In other words, erasure coding is mandatory and is present in almost every large-capacity storage solution, such as object storage. It also means that differentiators between these solutions are less and less visible, and today we count almost 30 object storage vendors on the planet. A few years ago, only Amplidata and Cleversafe offered this protection method.
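To make the cost argument concrete, here is a minimal sketch comparing the raw capacity needed under replication and under erasure coding. The 3-copy and 10+4 layouts are illustrative assumptions, not figures from the conference, and the 22PB value is reused from the MarFS design above only as a convenient example.

```python
def replication_overhead(copies: int) -> float:
    """Raw bytes stored per byte of user data with `copies` full replicas."""
    return float(copies)


def erasure_overhead(k: int, m: int) -> float:
    """Raw bytes stored per byte of user data with k data + m parity fragments."""
    return (k + m) / k


def raw_capacity_pb(usable_pb: float, overhead: float) -> float:
    """Raw capacity (PB) needed to hold `usable_pb` of user data."""
    return usable_pb * overhead


if __name__ == "__main__":
    usable = 22.0  # PB of user data, illustrative
    rep = raw_capacity_pb(usable, replication_overhead(3))   # assumed 3 copies
    ec = raw_capacity_pb(usable, erasure_overhead(10, 4))    # assumed 10+4 layout
    print(f"3-way replication: {rep:.1f} PB raw, survives 2 lost copies")
    print(f"10+4 erasure code: {ec:.1f} PB raw, survives 4 lost fragments")
```

Under these assumptions, replication needs roughly twice the raw capacity of the erasure-coded layout while tolerating fewer simultaneous losses, which is where the extra devices, racks and energy come from.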

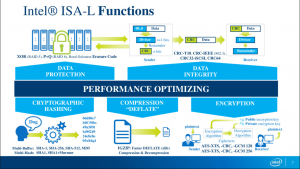

Two sessions covered erasure coding: Intel about ISA-L, and MemoScale. Intel ISA-L (Intelligent Storage Acceleration Library) is a library providing low-level functions for storage applications that require high throughput and low latency, such as hashing, compression, encryption, data integrity and erasure coding. With the Intel E5-2600v4 processor generation, ISA-L can deliver 5GB/s of erasure coding throughput. It is available for Linux, Windows and FreeBSD, and is open source, with code downloadable from GitHub under a BSD license.
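For readers new to the idea, the principle behind erasure coding can be sketched with the simplest possible code: a single XOR parity block. This is a toy illustration and not the ISA-L API; production libraries implement Reed-Solomon style codes that survive several simultaneous losses, whereas this sketch tolerates exactly one. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode(data_blocks):
    """Return the stripe: the data blocks followed by one XOR parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def recover(stripe, lost_index):
    """Rebuild the block at lost_index by XOR-ing all surviving blocks."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = encode([b"abcd", b"efgh", b"ijkl"])
    # Pretend block 1 was lost and rebuild it from the other three.
    print(recover(stripe, 1))
```

Any one block, data or parity, can be rebuilt from the others because XOR-ing all blocks of a stripe together yields zero. Codes that tolerate more failures replace the XOR with arithmetic over a finite field, which is the compute-heavy part that optimized libraries accelerate.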

Without being prompted by any audience question, the presenter, Greg Tucker of Intel, mentioned the Mojette Transform, developed by Rozo Systems for its scale-out NAS RozoFS, which shows that Intel is aware of this approach.

MemoScale, a storage software ISV from Norway, made a second notable appearance, following its December appearance at The IT Press Tour, where it started to get attention and press coverage. Its commercial product supports Ceph, OpenStack Swift and Hadoop HDFS, and is also available as a C library. The company claims to have the fastest SHA3 and Reed-Solomon implementations, and presented two benchmarks in which it far outperformed Jerasure on Intel, AMD and ARM processors.

To find all downloadable presentations and sessions’ details
